10 key features for CG artists from Unreal Engine 4.27

Epic Games has released Unreal Engine 4.27, the latest update to the game engine and real-time renderer.

Although it’s tempting to think of it as a stopgap release before the game-changing Unreal Engine 5 – now in early access, and due for a production release “targeting 2022” – it’s a massive update in its own right.

The online release notes alone run to over 40,000 words.

But if you don’t want to wade through all of that documentation, we’ve picked out 10 changes we think are particularly significant for artists, as opposed to programmers – from headline features like the new in-camera VFX tools, to hidden gems like multi-GPU lightmap baking and the new camera calibration plugin.

We’ve focused primarily on tools for game development and VFX work, but at the end of the article, you can find quick round-ups of the new tools for architectural visualization and live visuals.

Features marked Beta or Experimental are not recommended for use in production yet.

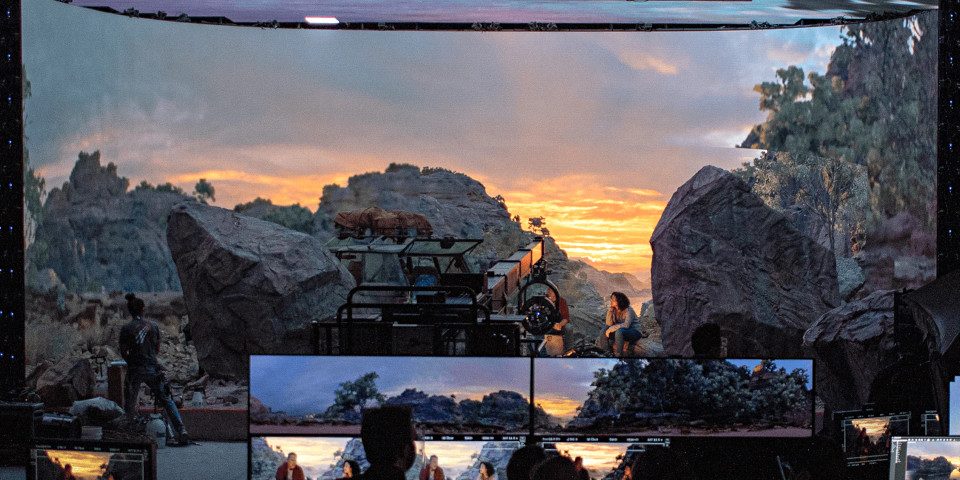

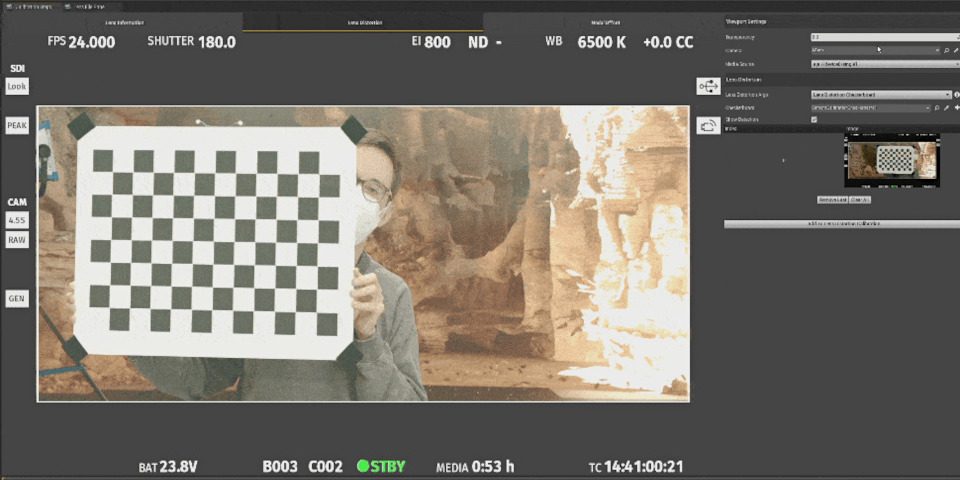

1. Support for OpenColorIO in LED volumes

Unreal Engine 4.27 includes a number of new features for in-camera visual effects work, many tested in production during the making of Epic Games’ new live-action demo.

They include a set of updates to the nDisplay system, used to project CG environments being rendered in real time in Unreal Engine onto an LED wall or dome, against which actors can be filmed.

Key changes include support for OpenColorIO (OCIO), the colour-management standard specified by the VFX Reference Platform and described as a ‘gateway’ to ACES colour-managed pipelines for movie work.

In addition, the process of creating new nDisplay set-ups has been streamlined, with a new 3D Config Editor, and all of the key settings consolidated into a single nDisplay Root Actor.

Features added in beta include the option to dedicate a GPU to the inner frustum of the display when running a multi-GPU set-up, enabling more complex content to be displayed there.

In addition, experimental support has been added for running nDisplay on Linux, although some key features – notably, hardware-accelerated ray tracing – are not yet supported.

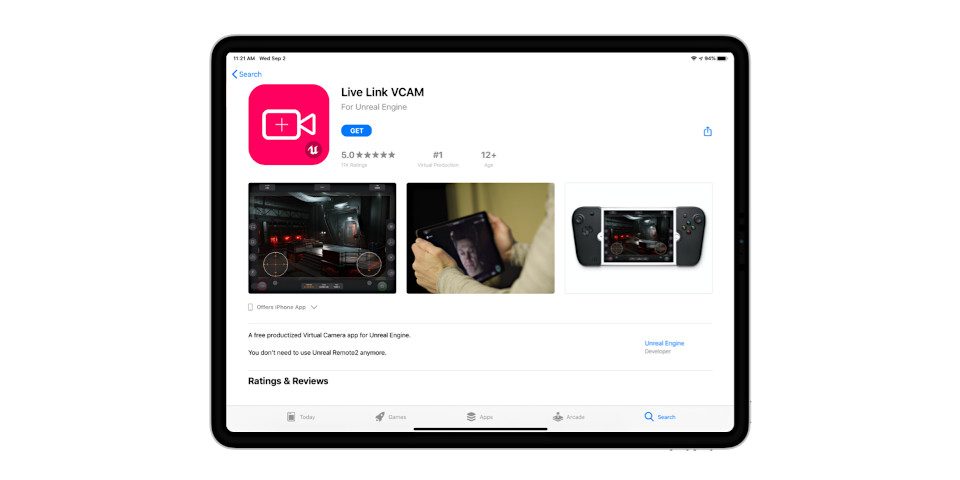

2. Control virtual cameras from iOS devices with the new Live Link VCAM app

Unreal Engine 4.27 also introduces a number of new features for controlling virtual cameras, intended for scouting locations on virtual sets and for generating camera moves.

They include Live Link VCAM, a new iOS app for controlling virtual cameras from an iPad, described as offering a “more tailored user experience” than the existing Unreal Remote app.

At the minute, we can’t find Live Link VCAM in the App Store, and the online documentation still links to Unreal Remote 2, but we’ve contacted Epic Games for the download link, and will update if we hear back.

Updated 9 September 2021: Live Link VCAM is now available to download.

Related changes include a new drag-and-drop system for building interfaces for controlling UE4 projects from a tablet or laptop, updates to the Remote Control Presets system, and a new Remote Control C++ API.

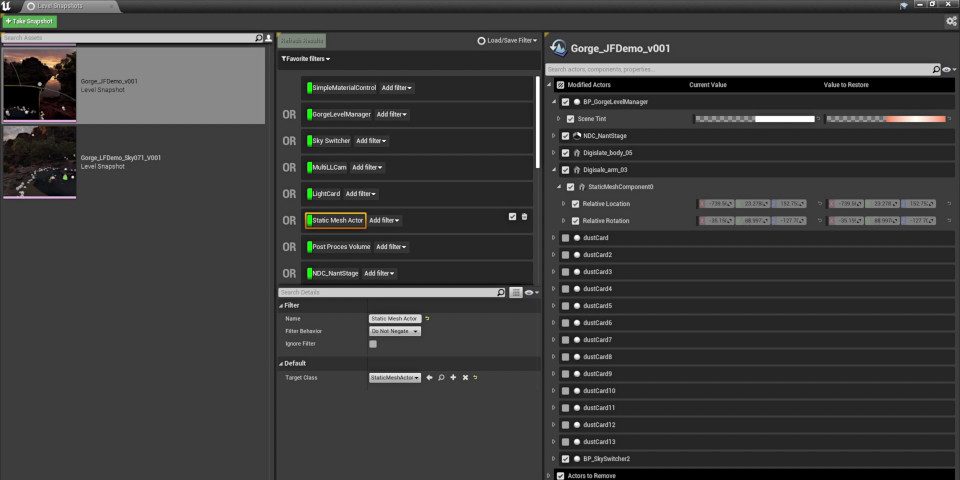

3. Level Snapshots for in-camera VFX work and design reviews (Beta)

The release also introduces a new Level Snapshot system, which makes it possible to save and restore configurations for a level without making permanent changes to the project or to source control.

For in-camera VFX work, the system makes it possible to adjust a CG environment being rendered in Unreal Engine on a per-shot or per-sequence basis.

However, it can also be used more generally, to create design variants of a project for creative reviews.

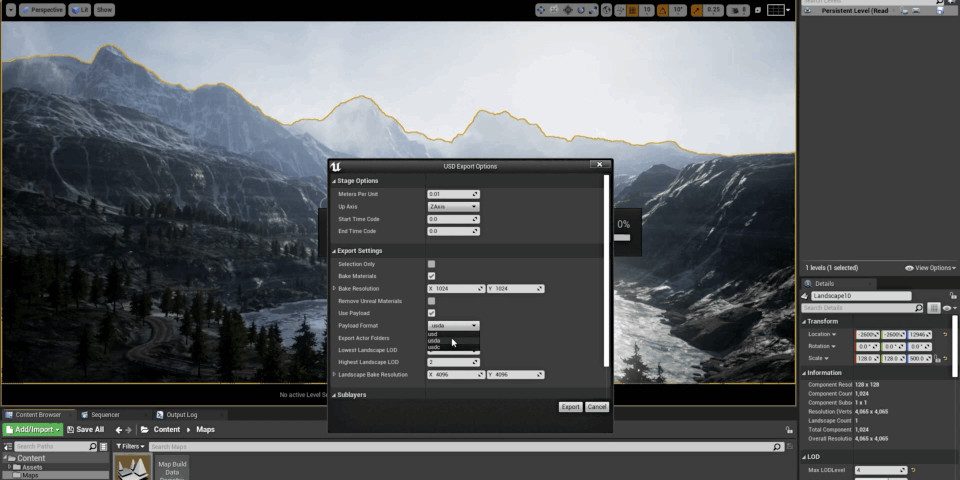

4. Export levels and animation sequences to USD

Support for Universal Scene Description, Pixar’s increasingly ubiquitous framework for exchanging production data between DCC applications, has also been extended.

Key changes include the option to export an entire Unreal Engine Level as a primary USD file, with any sublevels or assets automatically being exported as separate USD files referenced by that primary file.

Materials with textures can be baked down and exported with the level.
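The idea of a primary file that pulls in separate files can be illustrated with a few lines of USDA, USD’s plain-text format. This minimal Python sketch writes one using USD’s subLayers mechanism. The file names are hypothetical, and sublayering is an assumption made for illustration: Unreal Engine’s exporter may compose its files differently, for example via references.

```python
def build_primary_layer(sublevel_files):
    """Return .usda text for a primary layer that composes other USD files.

    Each entry in `sublevel_files` is a path relative to the primary file.
    Sublayering is an illustrative assumption, not Unreal Engine's exporter.
    """
    # One asset path per line, in USDA's @path@ syntax, comma-separated.
    entries = ",\n".join(f"        @./{path}@" for path in sublevel_files)
    return (
        "#usda 1.0\n"
        "(\n"
        "    subLayers = [\n"
        f"{entries}\n"
        "    ]\n"
        ")\n"
    )


if __name__ == "__main__":
    # Hypothetical file names for a level split into two sublevels.
    print(build_primary_layer(["Geometry.usda", "Lighting.usda"]))
```

Opening the primary file in a USD-aware application would then load the contents of both sublevel files.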

In addition, animation sequences can now be exported to “several USD file formats”. Export includes all bone and blendshape tracks, and both the animation preview mesh and the animation itself.

The implementation also now supports Nvidia’s MDL material schema, favoured by Nvidia over MaterialX in Omniverse, its USD-based – and UE4-compatible – online collaboration platform.

5. Attach hair grooms to Alembic caches

Artists working with hair or fur in Unreal Engine can now attach hair grooms to Alembic caches.

The change makes it possible to bind grooms directly to geometry caches imported from other DCC applications, rather than having to use the “awkward workflow” of binding a groom to a Skeletal Mesh.

It is also now possible to import grooms that have already been simulated and contain cached per-frame hair data, and to play back the simulation in the editor, Sequencer and Movie Render Queue.

6. New camera calibration tool for live compositing (Beta)

Users of Composure, Unreal Engine’s real-time compositing system, get a new Camera Calibration plugin, for matching the lens properties of the physical camera generating the video to the virtual camera in UE4.

The plugin can also be used to apply real-world lens distortion to an Unreal Engine CineCamera.
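For readers unfamiliar with how lens distortion is usually described numerically, below is a minimal sketch of the widely used Brown-Conrady model, with radial (k1, k2, k3) and tangential (p1, p2) coefficients named as in OpenCV. We’re assuming this model for illustration. The coefficient values are hypothetical, and this is not the plugin’s own code.

```python
def distort(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Apply Brown-Conrady radial and tangential distortion to a point.

    (x, y) are normalised image coordinates: offsets from the principal
    point divided by the focal length. Coefficient names follow OpenCV.
    """
    r2 = x * x + y * y
    # Radial term: pushes points towards or away from the optical centre.
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    # Tangential terms: model a lens that isn't perfectly parallel to the sensor.
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


if __name__ == "__main__":
    # Hypothetical coefficient for mild barrel distortion (negative k1).
    for point in [(0.0, 0.0), (0.5, 0.0), (0.5, 0.5)]:
        print(point, "->", distort(*point, k1=-0.1))
```

The centre of the image is unaffected, while points further from it are displaced more strongly, which is why distortion is most visible towards the edges of frame.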

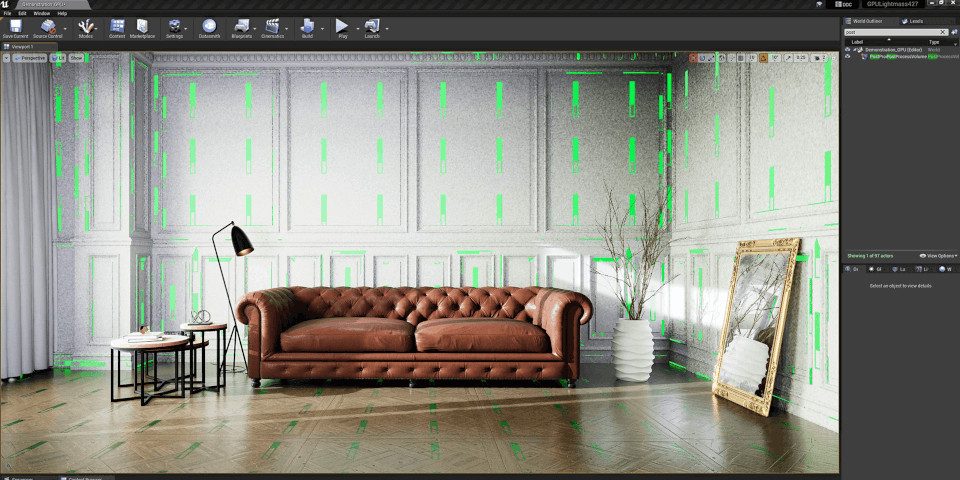

7. Multi-GPU lightmap baking with support for LODs (Beta)

GPU Lightmass, the new framework for baking lightmaps on the GPU introduced in Unreal Engine 4.26, has been updated, and now supports Level of Detail (LOD) meshes, coloured translucent shadows, and more lighting parameters, including attenuation and non-inverse-square falloff.
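Since “non-inverse-square falloff” may be an unfamiliar term, here’s a simplified comparison of the two kinds of curve. It assumes an artist-tunable curve of the form (1 − (d/r)²)ⁿ, which fades a light to exactly zero at its attenuation radius r; the default exponent of 8 is also an assumption, and the exact formula GPU Lightmass uses may differ.

```python
def inverse_square(distance, intensity=1.0):
    """Physically based falloff: brightness drops with the square of distance.

    `distance` must be greater than zero.
    """
    return intensity / (distance * distance)


def exponent_falloff(distance, radius, exponent=8.0, intensity=1.0):
    """Assumed artist-tunable falloff that reaches exactly zero at `radius`.

    Form: (1 - clamp((d / r)^2, 0, 1)) ^ exponent.
    """
    t = min(max((distance / radius) ** 2, 0.0), 1.0)
    return intensity * (1.0 - t) ** exponent


if __name__ == "__main__":
    # Compare the two curves for a light with a hypothetical 10-unit radius.
    for d in (1.0, 2.5, 5.0, 7.5, 10.0, 12.0):
        print(f"d={d:5.1f}  inverse-square={inverse_square(d):.4f}  "
              f"exponent={exponent_falloff(d, radius=10.0):.4f}")
```

The inverse-square curve never quite reaches zero, whereas the exponent-based curve cuts off cleanly at the radius, trading physical accuracy for artistic control over where a light’s influence ends.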

It is also now possible to use multiple GPUs for baking lighting, although multi-GPU support is currently limited to Windows 10 and Nvidia GPUs connected via SLI or NVLink bridges.

8. Offline-quality rendering in the Path Tracer (Beta)

Path Tracer, Unreal Engine’s physically accurate rendering mode, gets a sizeable update in version 4.27.

It now supports refraction; transmission of light through glass surfaces, including approximate caustics; most light parameters, including IES profiles; and orthographic cameras.

The Path Tracer can also now be used to render scenes with a “nearly unlimited” number of lights.

Epic Games pitches the changes as making Path Tracer a viable alternative to the faster hybrid Real-Time Ray Tracing mode for production rendering, particularly for architectural and product visualisation.

According to Epic, Path Tracer now creates “final-pixel imagery comparable to offline renders”.

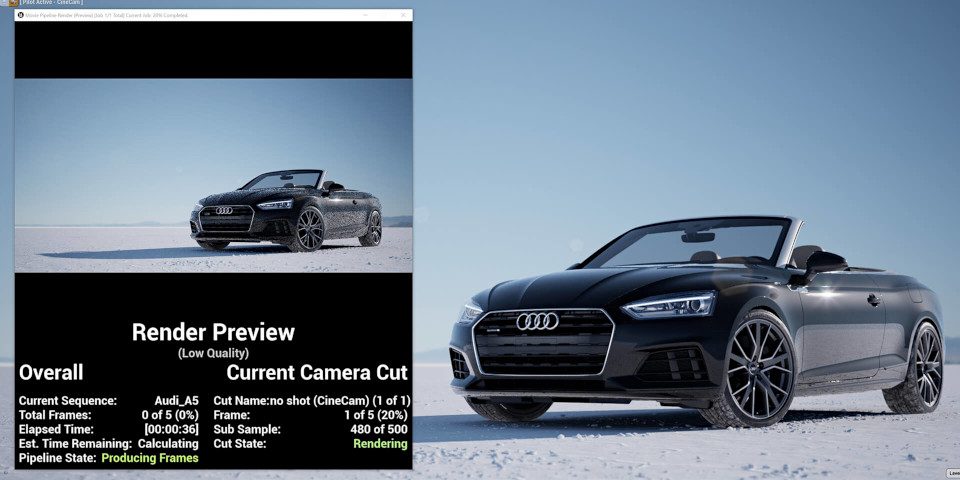

9. Batch render custom image sequences from Sequencer

Sequencer, Unreal Engine’s cinematics editor, also gets a number of new features, of which the most significant is probably the new Command Line Encoder for its Movie Render Queue.

The encoder makes it possible to batch render image sequences in custom formats using third-party software like FFmpeg, as well as in the preset BMP, EXR, JPEG and PNG formats.
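To show what handing rendered frames to a third-party encoder involves, here’s a minimal Python sketch that builds an FFmpeg command for turning a numbered PNG sequence into an H.264 movie. The file names, frame rate and codec are assumptions, and this isn’t the Command Line Encoder’s own command template.

```python
import shlex


def build_encode_command(frame_pattern, fps, output_path, video_codec="libx264"):
    """Build an FFmpeg argument list that encodes an image sequence into a movie."""
    return [
        "ffmpeg",
        "-y",                      # overwrite the output file if it exists
        "-framerate", str(fps),    # frame rate of the input image sequence
        "-i", frame_pattern,       # printf-style pattern, e.g. shot_%04d.png
        "-c:v", video_codec,       # video encoder to use
        "-pix_fmt", "yuv420p",     # widely compatible pixel format for H.264
        output_path,
    ]


if __name__ == "__main__":
    cmd = build_encode_command("shot_%04d.png", 24, "shot.mp4")
    # Print a shell-safe version of the command. To actually encode, pass
    # `cmd` to subprocess.run(cmd, check=True), assuming FFmpeg is on PATH.
    print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell-quoting problems with file paths that contain spaces.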

Other changes include a new Gameplay Cue track, for triggering gameplay events directly from Sequencer.

In-game movie playback via Media Framework is now frame-accurately synced with the Sequencer timeline.

10. New debugging tools for Niagara particle effects

Niagara, Unreal Engine’s in-game VFX framework, gets new tools for troubleshooting particle systems, including a dedicated Debugger panel and HUD display.

A new Debug Drawing mode can be used to trace the paths of individual particles within a system.

In addition, Niagara’s Curve Editor has been updated to match the one in Sequencer, providing “more advanced editing tools to adjust keys and retiming” for particle systems.

And there’s more…

But that only scratches the surface of the new features in Unreal Engine 4.27.

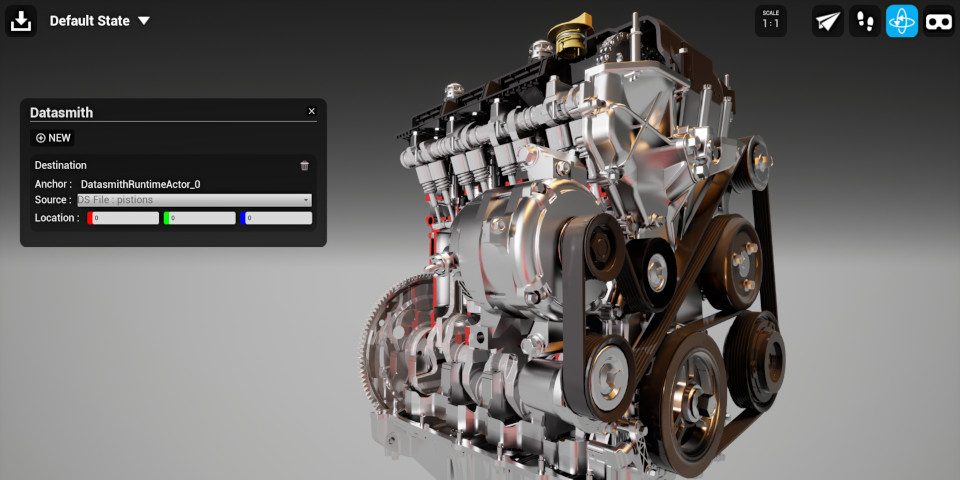

For architectural and product visualization, Unreal Engine 4.27 introduces new Datasmith plugins for ArchiCAD and SolidWorks, making it possible to live link either app to Unreal Engine or Twinmotion.

The existing Rhino and SketchUp plugins have also been updated to support live linking.

In addition, several Datasmith operations are now available at runtime, making it possible to create custom applications that can import Datasmith files and manipulate them via Blueprints.

The LIDAR Point Cloud plugin gets new Polygonal, Lasso and Paint selection methods for selecting data points, and performance has been improved when loading, processing and saving point clouds.

For design reviews, the Pixel Streaming system is now officially production-ready.

The system, which makes it possible to run a packaged UE4 project on a server, and stream the rendered frames to users’ web browsers, now supports Linux server instances and instances with AMD GPUs.

For live visuals, the DMX Plugin now integrates with Unreal Engine’s nDisplay and Remote Control systems, and the Pixel Mapping UI has been redesigned.

For virtual and augmented reality, the OpenXR plugin is now officially production-ready, and can be used to create projects for viewing on SteamVR, Oculus, Windows Mixed Reality or HoloLens hardware.

For facial motion capture, Live Link Face – Epic’s free iOS app for streaming facial animation data from iPhone footage to a 3D character inside Unreal Engine – has been updated.

A new calibration system makes it possible to set a neutral pose for each actor, improving the quality of the data captured; and the app now officially supports iPads with TrueDepth cameras, as well as iPhones.

There are also some significant new features for game developers, as opposed to game artists. They include the integration of Oodle data compression and the Bink Video codec into Unreal Engine, following Epic Games’ acquisition of RAD Game Tools; the option to deploy Unreal Engine projects as Containers; the option to build the UE4 runtime as a library; and a new Georeferencing plugin.

You can find a full list of changes, which also include new audio features and updates to the deployment platforms supported, via the link at the foot of this story.

Pricing and system requirements

Unreal Engine 4.27 is available for 64-bit Windows, macOS and Linux.

Use of the editor is free, as is rendering non-interactive content. For game developers, Epic takes 5% of gross lifetime revenues for a game beyond the first $1 million.
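To make the royalty terms concrete, here’s a simplified calculation in Python. It models only the headline rule described above and ignores any other terms in Epic’s licence, so treat it as a back-of-the-envelope estimate rather than an accounting tool.

```python
def royalty_due(gross_lifetime_revenue, exempt=1_000_000, rate_percent=5):
    """Simplified royalty: 5% of a game's gross lifetime revenue above $1 million."""
    royalty_bearing = max(0, gross_lifetime_revenue - exempt)
    return royalty_bearing * rate_percent / 100


if __name__ == "__main__":
    for gross in (800_000, 1_000_000, 3_000_000):
        print(f"${gross:,} gross -> ${royalty_due(gross):,.2f} royalty")
```

For example, a game that has grossed $3 million over its lifetime would owe 5% of the $2 million above the threshold, or $100,000.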

Read a full list of new features in Unreal Engine 4.27 in the online changelog