Group test: NVIDIA GeForce RTX 40 Series and Super Series GPUs

Which GeForce RTX 40 Series GPU offers the best bang for your buck for CG work? To find out, Jason Lewis puts NVIDIA’s current consumer GPUs – including the new Super Series cards – through an exhaustive set of real-world tests.

Our latest group test is a kind of ‘part two’ to last year’s roundup of GeForce RTX 40 Series GPUs, in which I pitted NVIDIA’s current Ada Generation consumer cards against older cards from the Ampere and Turing generations in a series of real-world CG tests.

The original review featured three of the first cards from the GeForce RTX 40 Series to be released: the GeForce RTX 4090, GeForce RTX 4080 and GeForce RTX 4070 Ti.

Since then, NVIDIA has released its mid-cycle refresh, the ‘Super Series’ cards: the GeForce RTX 4080 Super, GeForce RTX 4070 Ti Super and GeForce RTX 4070 Super.

In this review, we will be putting the new Super cards through the same tests as the originals, using recent versions of the CG applications used for benchmarking.

Jump to another part of this review

Technology focus: GPU architectures and APIs

Specifications

Testing procedure

Benchmark results

Other considerations

Verdict

Which GPUs are included in the group test?

In this review, we will be focusing on the high end of NVIDIA’s GeForce RTX 40 Series: the original GeForce RTX 4090, GeForce RTX 4080 and GeForce RTX 4070 Ti, and two of the new Super Series cards, the GeForce RTX 4080 Super and GeForce RTX 4070 Super.

Sadly, I wasn’t able to obtain the third Super Series card, the GeForce RTX 4070 Ti Super, and as before, we won’t be looking at the mid-range and entry-level cards: the GeForce RTX 4070, GeForce RTX 4060 Ti and GeForce RTX 4060.

This is NVIDIA’s current generation of GPUs, based on its Ada Lovelace architecture, and intended to replace the previous GeForce RTX 30 Series, based on the Ampere architecture, and GeForce RTX 20 Series, based on the Turing architecture.

For comparison, we will also be testing two cards from the GeForce RTX 30 Series, the GeForce RTX 3090 and GeForce RTX 3070, and one from the GeForce RTX 20 Series, the GeForce RTX 2080 Ti.

We will also be testing three of NVIDIA’s workstation cards: the Ampere-generation RTX A6000, and the Turing-generation Titan RTX and Quadro RTX 8000.

Technology focus: GPU architectures and APIs

Before I get to the review itself, here is a quick recap of some of the technical terms that you will encounter in it. If you’re already familiar with them, you may want to skip ahead.

Like NVIDIA’s previous-generation Ampere and Turing GPUs, the current Ada Lovelace GPU architecture features three types of processor cores: CUDA cores, designed for rasterization and general GPU computing; Tensor cores, designed for machine learning operations; and RT cores, intended to accelerate ray tracing.

In order to take advantage of the RT cores, software has to access them through a graphics API: in the case of the applications featured in this review, either DXR (DirectX Raytracing), used in Unreal Engine, or NVIDIA’s OptiX, used in most offline renderers.

In many renderers, the OptiX rendering backend is provided as an alternative to an older backend based on NVIDIA’s CUDA API. The CUDA backends work with a wider range of NVIDIA GPUs and software applications, but OptiX enables hardware-accelerated ray tracing, and usually improves performance.

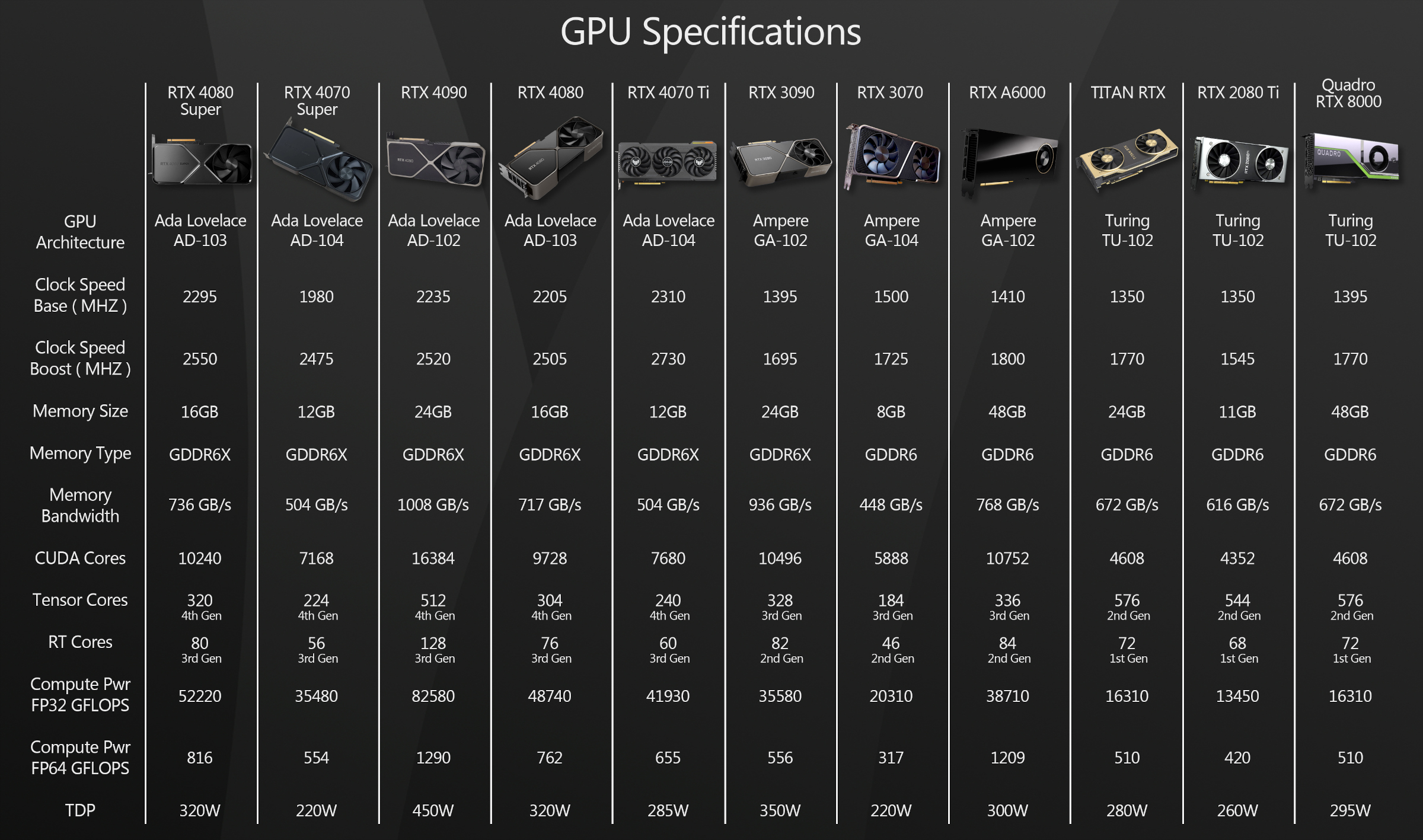

Specifications

First, let’s run through the specs of the new Super Series GPUs on test. You can find specs for the GeForce RTX 4090, GeForce RTX 4080 and GeForce RTX 4070 Ti in the original review.

The GeForce RTX 4080 Super is basically just a regular 4080, but with a fully unlocked AD103 processor, bumping the CUDA core count to 10,240, up from the 9,728 cores of the original 4080. The Tensor and RT core counts are bumped to 320 and 80, up from 304 and 76. Memory bandwidth is also slightly increased to 736.3 GB/s, up from 716.8 GB/s.
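The core counts above follow directly from how many streaming multiprocessors (SMs) are enabled on the AD103 die. The sketch below assumes the usual Ada Lovelace SM layout of 128 CUDA cores, four Tensor cores and one RT core per SM; the SM counts (76 and 80) are inferred from the RT core figures rather than stated in this review.

```python
from dataclasses import dataclass

# Assumed per-SM resources for NVIDIA's Ada Lovelace architecture
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1


@dataclass(frozen=True)
class CoreCounts:
    cuda: int
    tensor: int
    rt: int


def cores_from_sms(sm_count: int) -> CoreCounts:
    """Scale the per-SM resources by the number of enabled SMs."""
    return CoreCounts(
        cuda=sm_count * CUDA_CORES_PER_SM,
        tensor=sm_count * TENSOR_CORES_PER_SM,
        rt=sm_count * RT_CORES_PER_SM,
    )


# SM counts inferred from the RT core figures (one RT core per SM)
rtx_4080 = cores_from_sms(76)        # partially enabled AD103
rtx_4080_super = cores_from_sms(80)  # fully unlocked AD103

print(rtx_4080)        # CoreCounts(cuda=9728, tensor=304, rt=76)
print(rtx_4080_super)  # CoreCounts(cuda=10240, tensor=320, rt=80)
```

Under this assumption, enabling four more SMs accounts for the whole upgrade: every core type grows by the same 80/76 ratio, or about 5%.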

In the Founders Edition, the GeForce RTX 4080 Super uses the same three-slot cooler design as the original 4080 and the same 16-pin 12VHPWR power connector, and has the same TDP of 320 W.

The GeForce RTX 4070 Super is an upgraded GeForce RTX 4070, using the same AD104 GPU, but with a much more significant bump in computing cores. The CUDA core count rises to 7,168, up from 5,888, while the Tensor and RT core counts rise to 224 and 56, up from 184 and 46. Memory bandwidth is unchanged, at 504.2 GB/s.
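To put the two refreshes side by side, the spec changes can be expressed as percentage uplifts. This is a minimal sketch using NVIDIA’s published figures (including 5,888 CUDA cores for the original GeForce RTX 4070); the dictionary layout is purely illustrative.

```python
def uplift_pct(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1.0) * 100.0


# (Super card, card it replaces) for each spec
comparisons = {
    "RTX 4080 Super vs RTX 4080": {
        "CUDA cores": (10_240, 9_728),
        "Memory bandwidth (GB/s)": (736.3, 716.8),
    },
    "RTX 4070 Super vs RTX 4070": {
        "CUDA cores": (7_168, 5_888),
        "Memory bandwidth (GB/s)": (504.2, 504.2),
    },
}

for pair, specs in comparisons.items():
    for spec, (new, old) in specs.items():
        print(f"{pair}, {spec}: +{uplift_pct(new, old):.1f}%")
```

This works out to roughly +5.3% CUDA cores and +2.7% bandwidth for the 4080 Super, against roughly +21.7% CUDA cores and no bandwidth change for the 4070 Super. Tensor and RT cores scale by the same ratios, since they are also allocated per SM.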

In the Founders Edition, the GeForce RTX 4070 Super uses a two-slot cooler design that is almost identical to the vanilla GeForce RTX 4070, just slightly larger in size, and uses the same 16-pin 12VHPWR connector. However, its TDP is higher: 220 W, up from 200 W for the original.

One final thing to note about the Super Series GPUs is the weight of the GeForce RTX 4080 Super. At 4.68 lbs, it’s as heavy as the original RTX 4080, and almost as heavy as the RTX 4090. I would recommend using a GPU brace to help support that weight and prevent the motherboard from bending and cracking over time. GPU braces and supports can be found online for a few dollars: in my opinion, a small investment in the structural integrity of your GPU.


Testing procedure

For the test machine, I am still using the dependable Xidax AMD Threadripper 3990X system that I reviewed in 2020. Although it is now four years old, it is still an extremely capable system and doesn’t appear to be a bottleneck for any of the GPUs tested.

The current version of the test system has the following specs:

CPU: AMD Threadripper 3990X

Motherboard: MSI Creator TRX40

RAM: 64 GB of 3,600 MHz Corsair Dominator DDR4

Storage: 2 TB Samsung 970 EVO Plus NVMe SSD / 1 TB WD Black NVMe SSD / 4 TB HGST 7,200 rpm HDD

PSU: 1,300 W Seasonic Platinum

OS: Windows 11 Pro for Workstations

The only GPU not tested on the Threadripper system was the GeForce RTX 3070. I no longer have access to a desktop RTX 3070, so testing was done using the mobile RTX 3070 in the Asus ProArt Studiobook 16 laptop from this recent review.

In that review, I determined that across a range of tests, the mobile RTX 3070 was around 10% slower than its desktop counterpart, so here, I added 10% to the scores to approximate the performance of a desktop card. It isn’t an ideal methodology, but it gets us to the right ballpark.
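The correction amounts to a flat scaling factor. A minimal sketch, assuming the 10% figure above: throughput scores (frame rates, benchmark points) are scaled up, while time-based results, where lower is better, would be scaled down by the same factor so the implied speed rises equally. How the review handled time-based results is not stated, so the division branch is an assumption, and the input values are hypothetical.

```python
def approximate_desktop(value: float, higher_is_better: bool,
                        correction: float = 0.10) -> float:
    """Approximate a desktop RTX 3070 result from a mobile RTX 3070 result.

    Applies a flat speed-up of `correction`. Throughput metrics (fps,
    benchmark points) are scaled up; time metrics (render seconds) are
    scaled down by the same factor, so the implied speed rises equally.
    """
    factor = 1.0 + correction
    return value * factor if higher_is_better else value / factor


# Hypothetical mobile RTX 3070 results
print(f"{approximate_desktop(50.0, higher_is_better=True):.1f} fps")   # 55.0 fps
print(f"{approximate_desktop(110.0, higher_is_better=False):.1f} s")  # 100.0 s
```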

For testing, I used the following applications:

Viewport performance

3ds Max 2024, Blender 3.6, Chaos Vantage 2.1.1, D5 Render 2.3.4, Fusion 360, Maya 2024, Modo 16.0v2, Omniverse Create 2022.3.1, SolidWorks 2022, Substance 3D Painter 9.0.0, Unigine Community 2.16.0.1, Unity 2022.1, Unreal Engine 5.3.1 and 4.27.2

Rendering

Arnold for Maya 5.1.0, Blender 3.6 (Cycles renderer), KeyShot 11.2.0, LuxCoreRender 2.6, Maverick Studio 2022.5, OctaneRender 2022.1 Standalone, Redshift 3.5.24 for 3ds Max, SolidWorks Visualize 2022, V-Ray GPU 6 for 3ds Max Hotfix 3

Other benchmarks

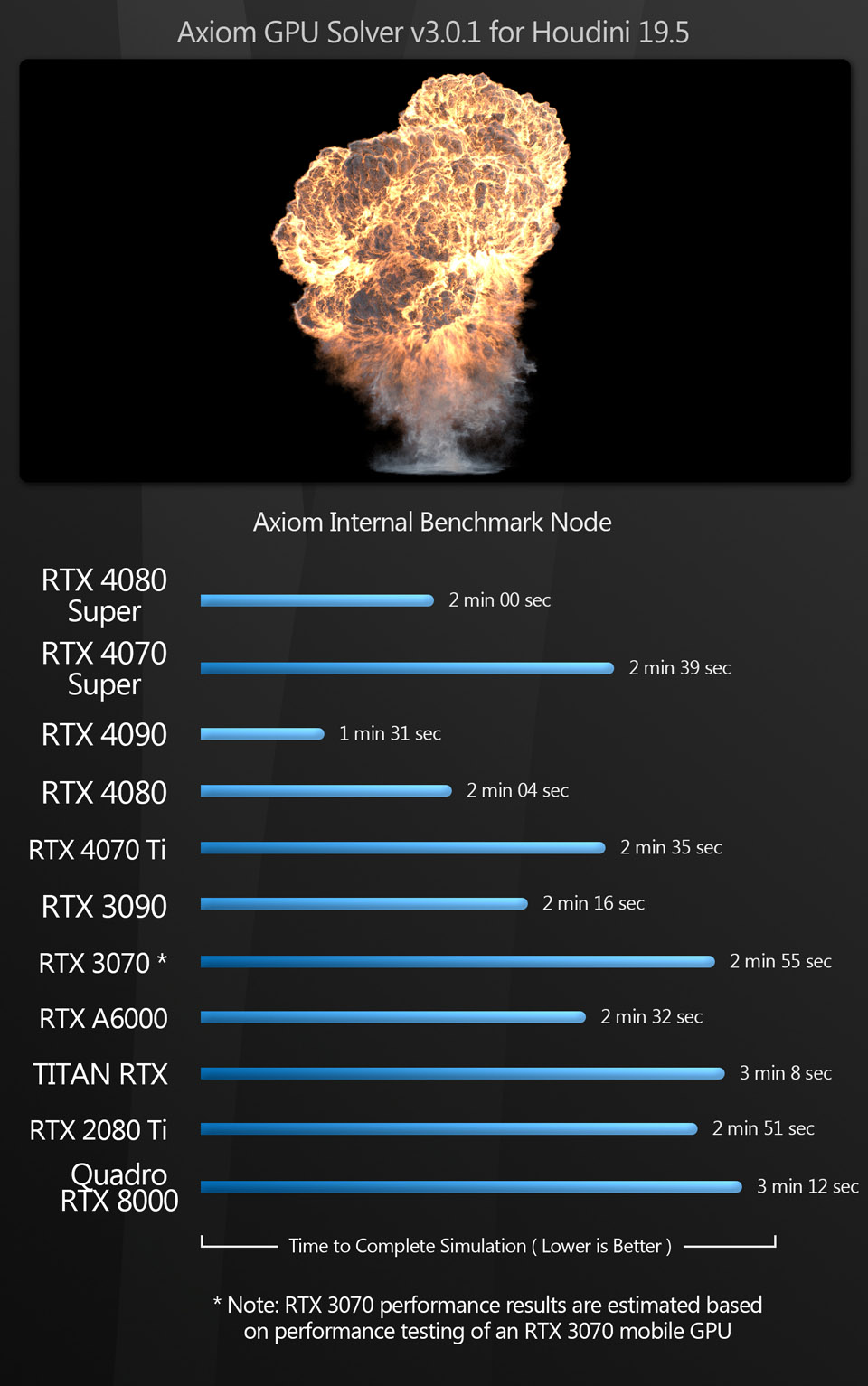

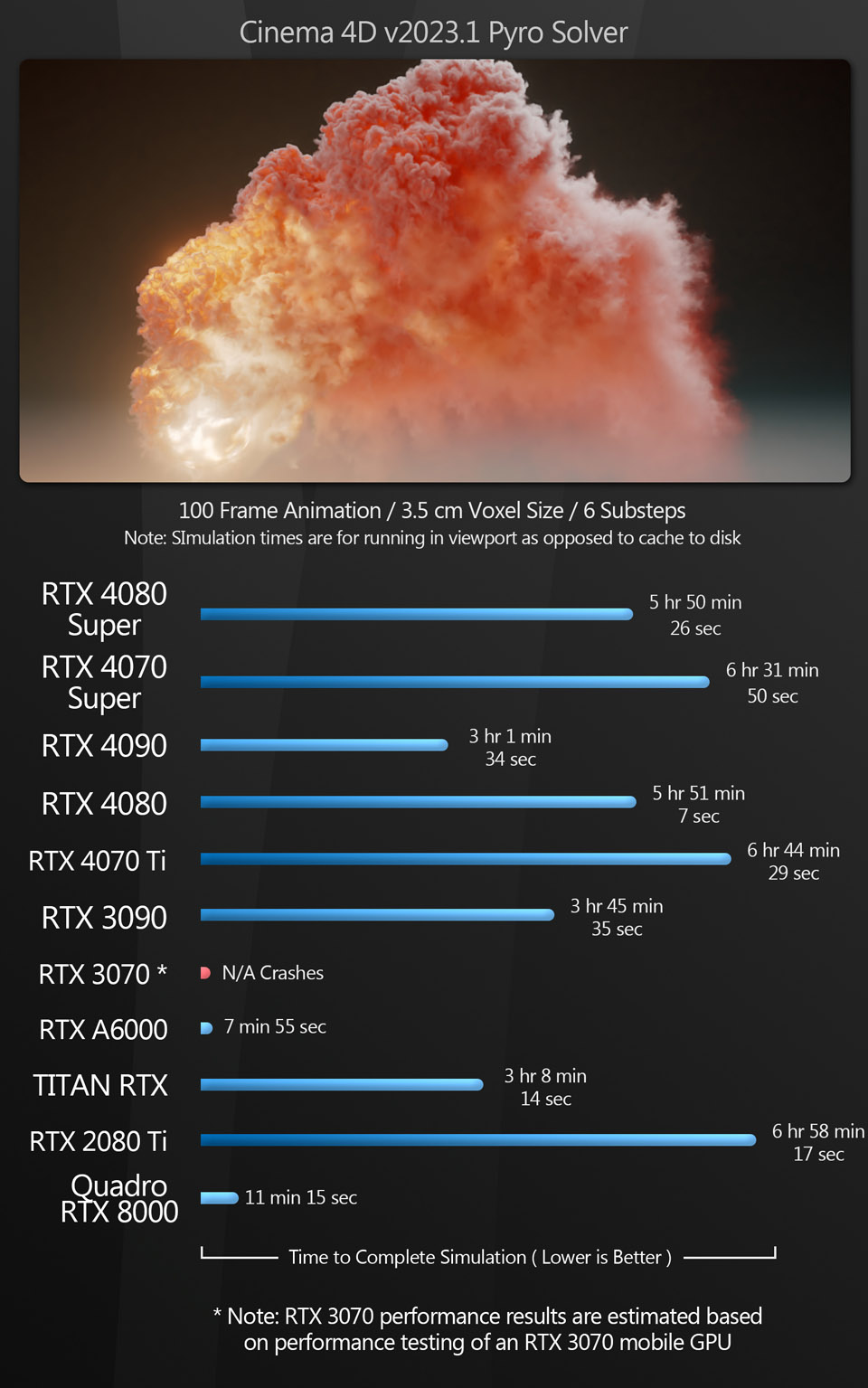

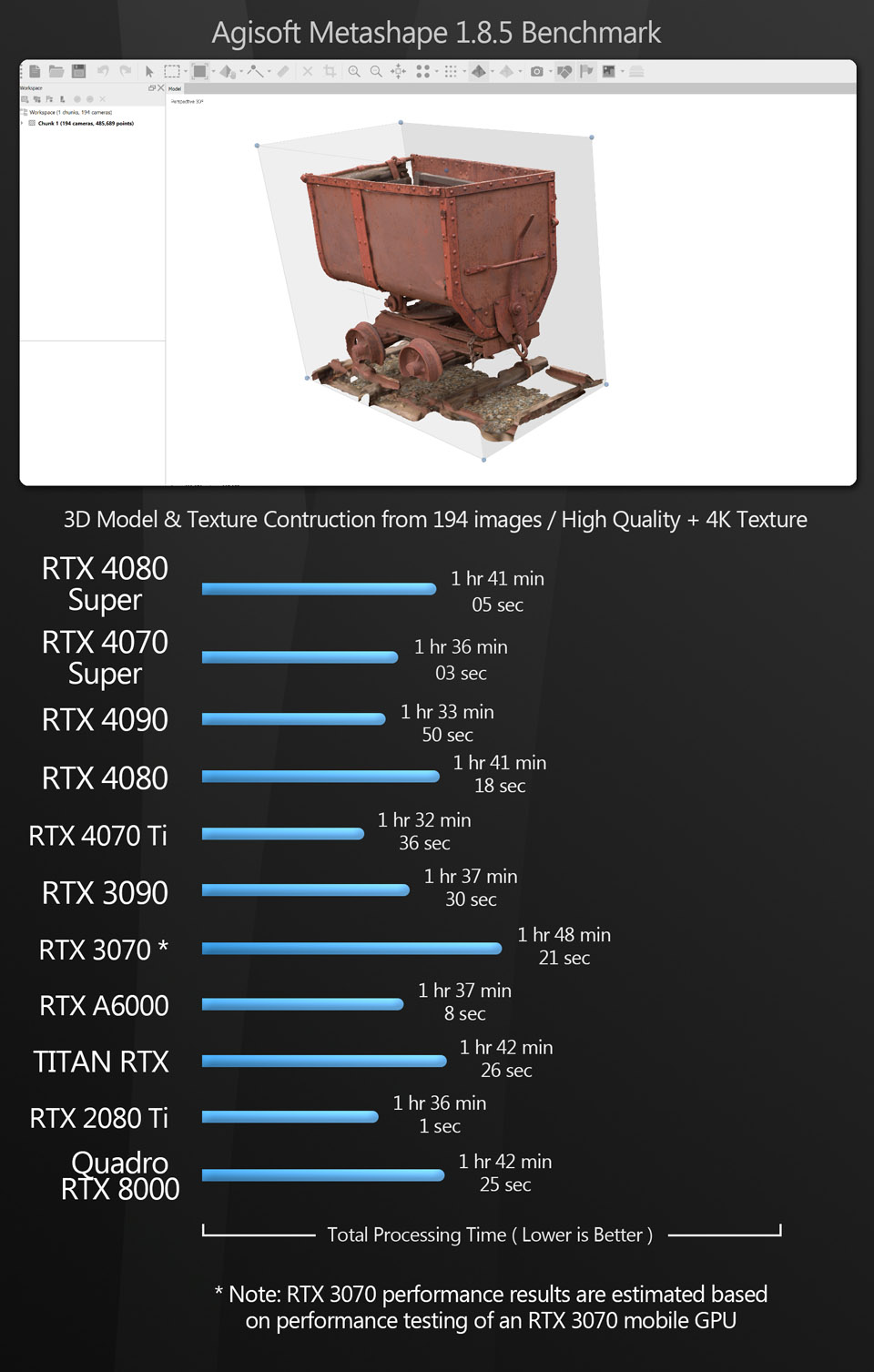

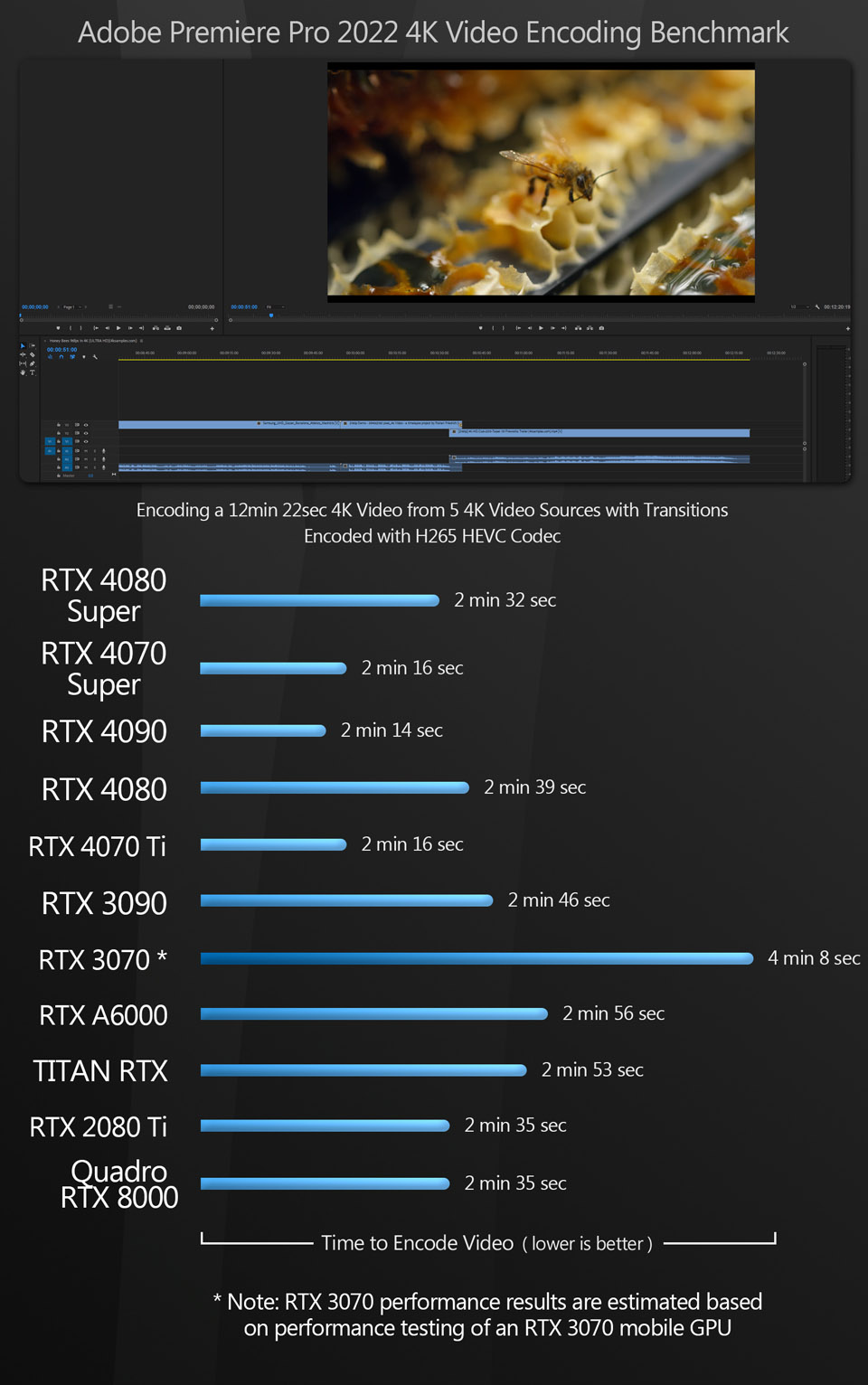

Axiom 3.0.1 for Houdini 19.5, Cinema 4D v2023.1 (Pyro solver), Metashape 1.8.5, Premiere Pro 2022

Synthetic benchmarks

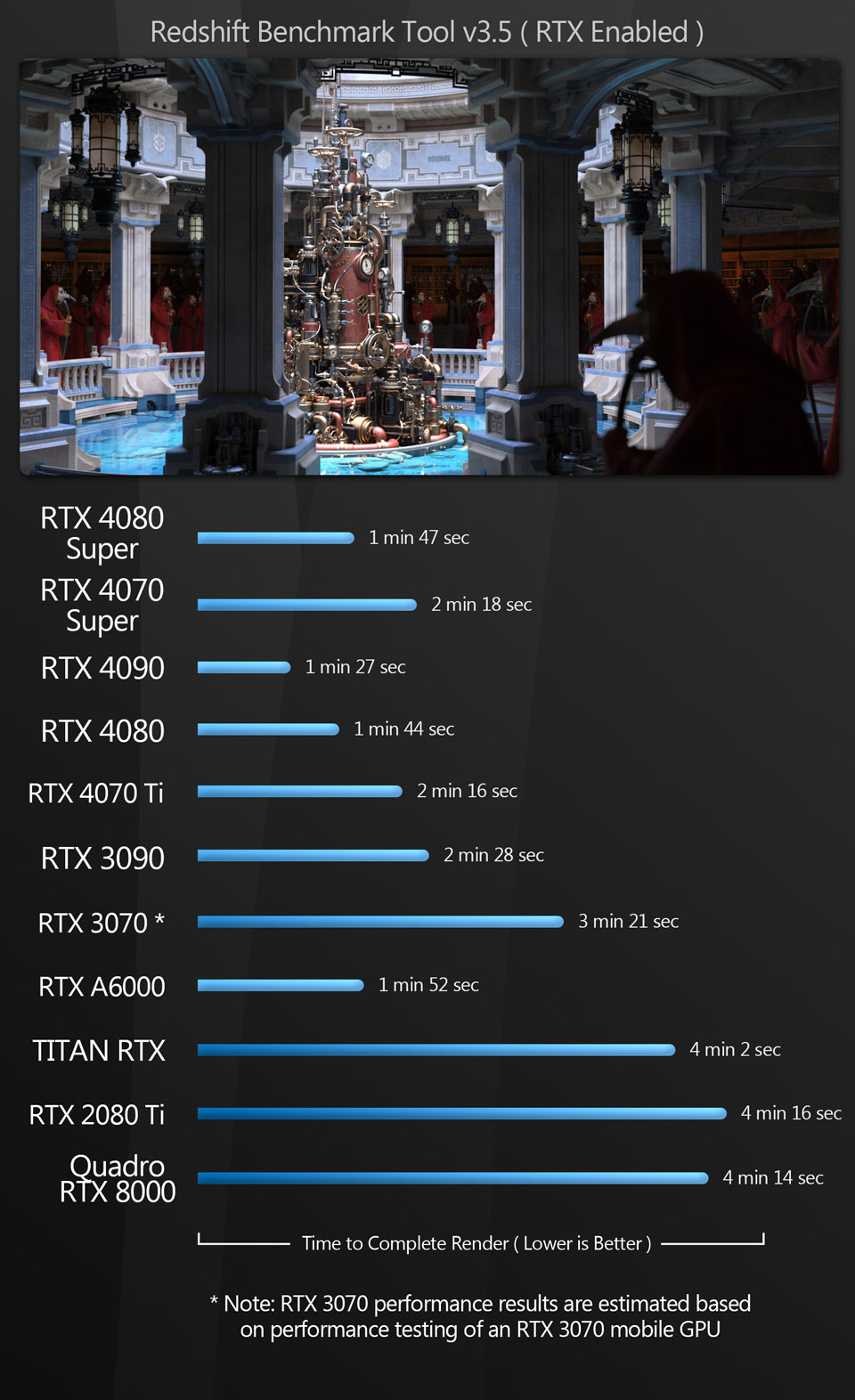

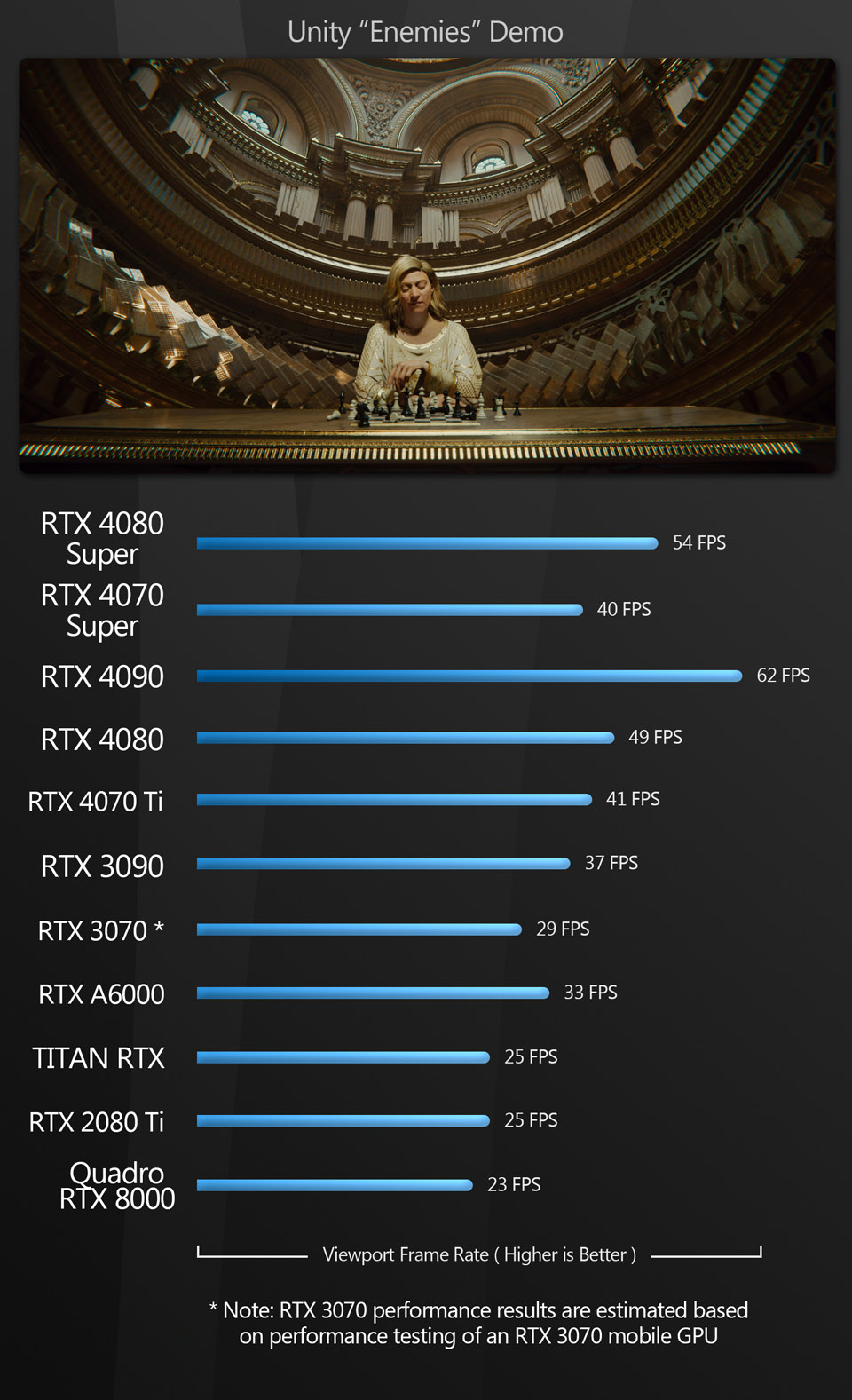

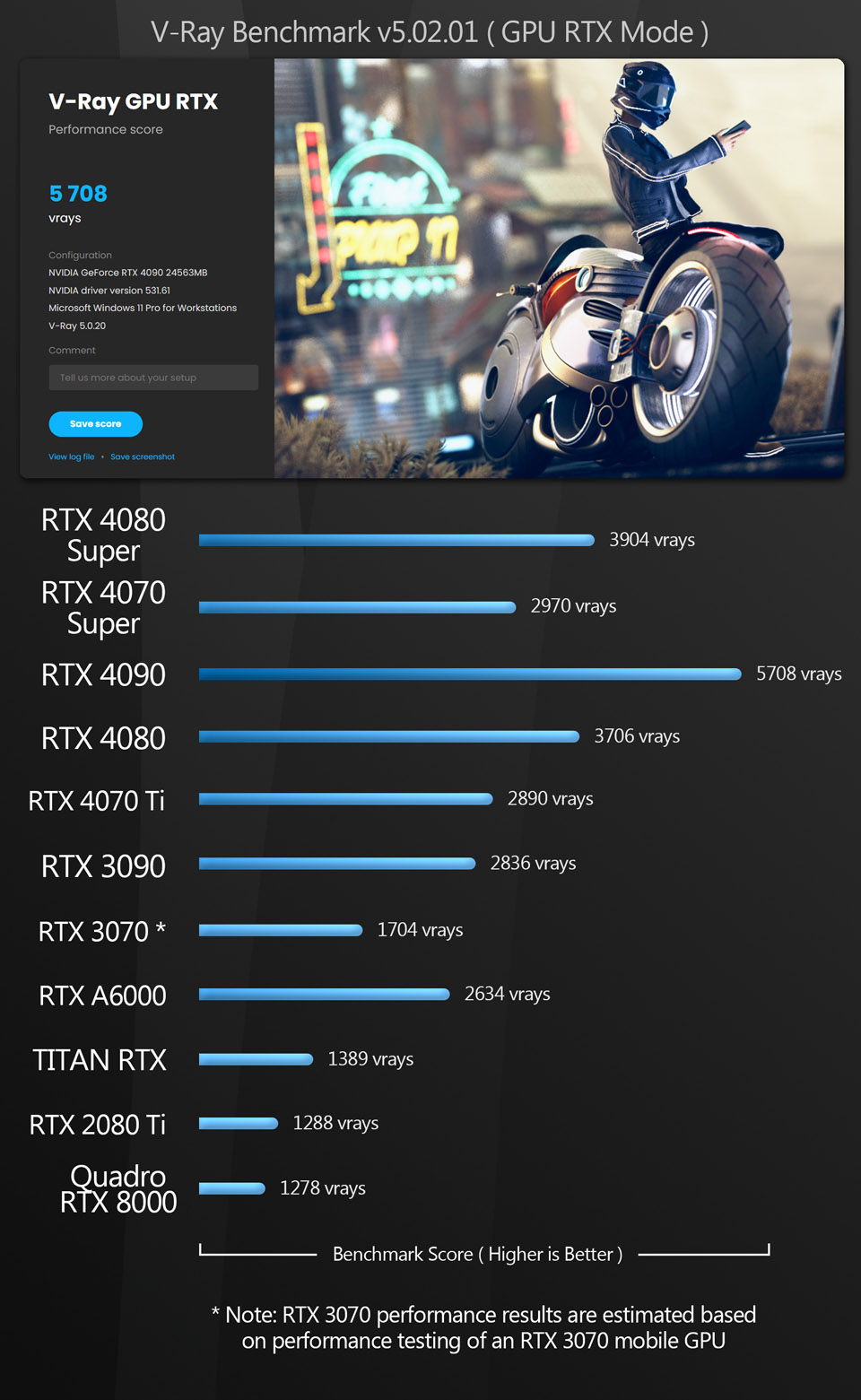

3DMark Speed Way 1.0 and Port Royal 1.2, Cinebench 2024.0.1, CryEngine Neon Noir Ray Tracing Benchmark, OctaneBench 2020.1.5, Redshift Benchmark v3.5, Unity Enemies Demo, V-Ray Benchmark v5.02.01

All benchmarking was done with NVIDIA Studio Drivers installed for the GeForce RTX GPUs and workstation drivers installed for the RTX A6000 and Quadro RTX 8000. You can find a more detailed discussion of the drivers used later in the article.

In the viewport and editing benchmarks, the frame rate scores represent the figures attained when manipulating the 3D assets shown, averaged over five testing sessions to minimize inconsistencies. In all of the rendering benchmarks, the CPU was disabled so only the GPU was used for computing.
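Here is a minimal sketch of the averaging step, assuming each session yields one average frame rate. Reporting the spread alongside the mean is an addition for illustration, not something the review publishes, and the session values are hypothetical.

```python
from statistics import mean, stdev


def summarize_sessions(fps_by_session: list[float]) -> tuple[float, float]:
    """Return the mean frame rate and its sample standard deviation."""
    if len(fps_by_session) < 2:
        raise ValueError("need at least two sessions to estimate spread")
    return mean(fps_by_session), stdev(fps_by_session)


# Hypothetical frame rates from five sessions manipulating the same scene
sessions = [61.8, 63.2, 62.5, 60.9, 62.1]
avg, spread = summarize_sessions(sessions)
print(f"{avg:.1f} fps average (±{spread:.1f} fps across sessions)")
```

A spread that is large relative to the gap between two cards is a sign that the difference between them is within run-to-run noise.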

Testing was done on a proper productivity monitor setup, consisting of a pair of 27″ 4K monitors running at 3,840 x 2,160px and a 34″ widescreen display running at 3,440 x 1,440px. All three displays had a refresh rate of 144Hz. When testing viewport performance, the software viewport was constrained to the primary display (one of the 27″ monitors): no spanning across multiple displays was permitted.

Benchmark results

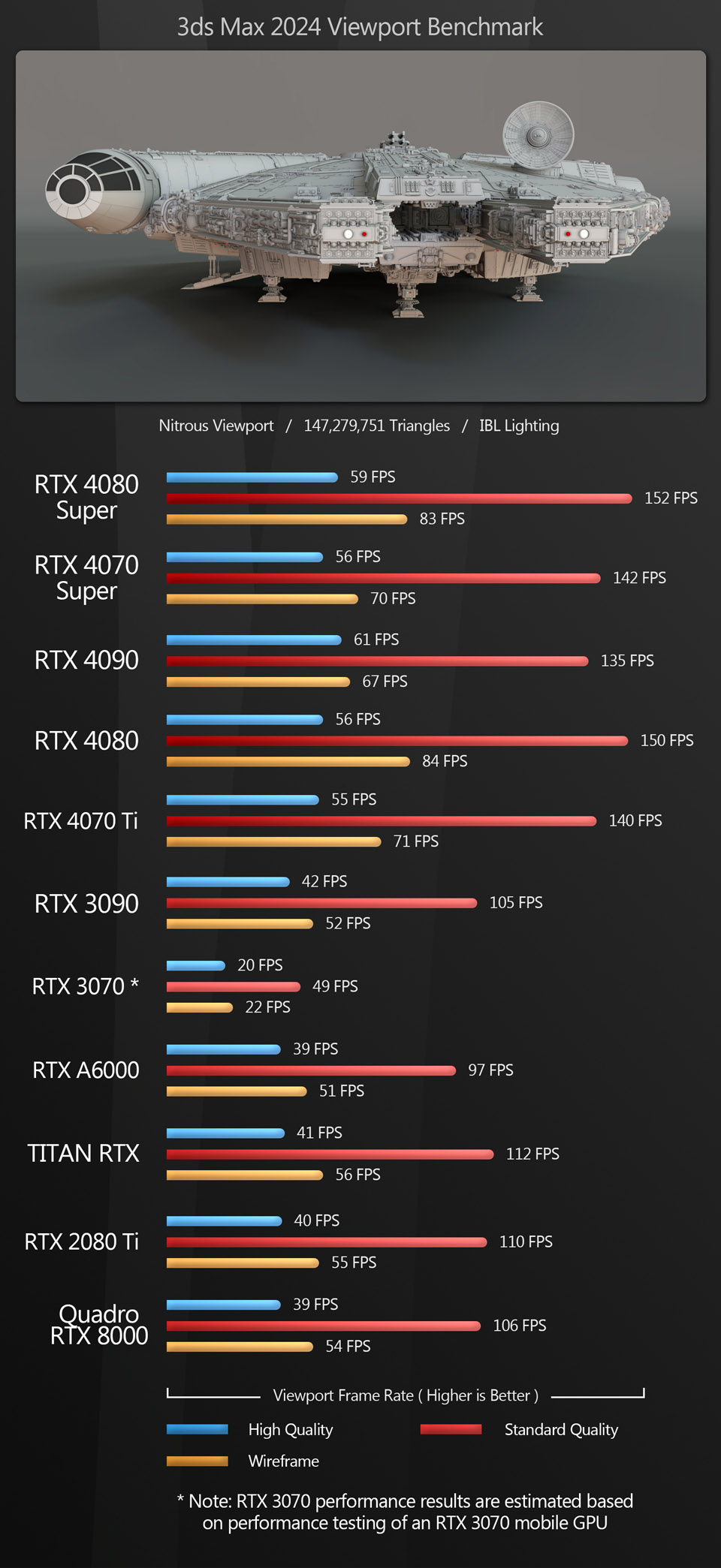

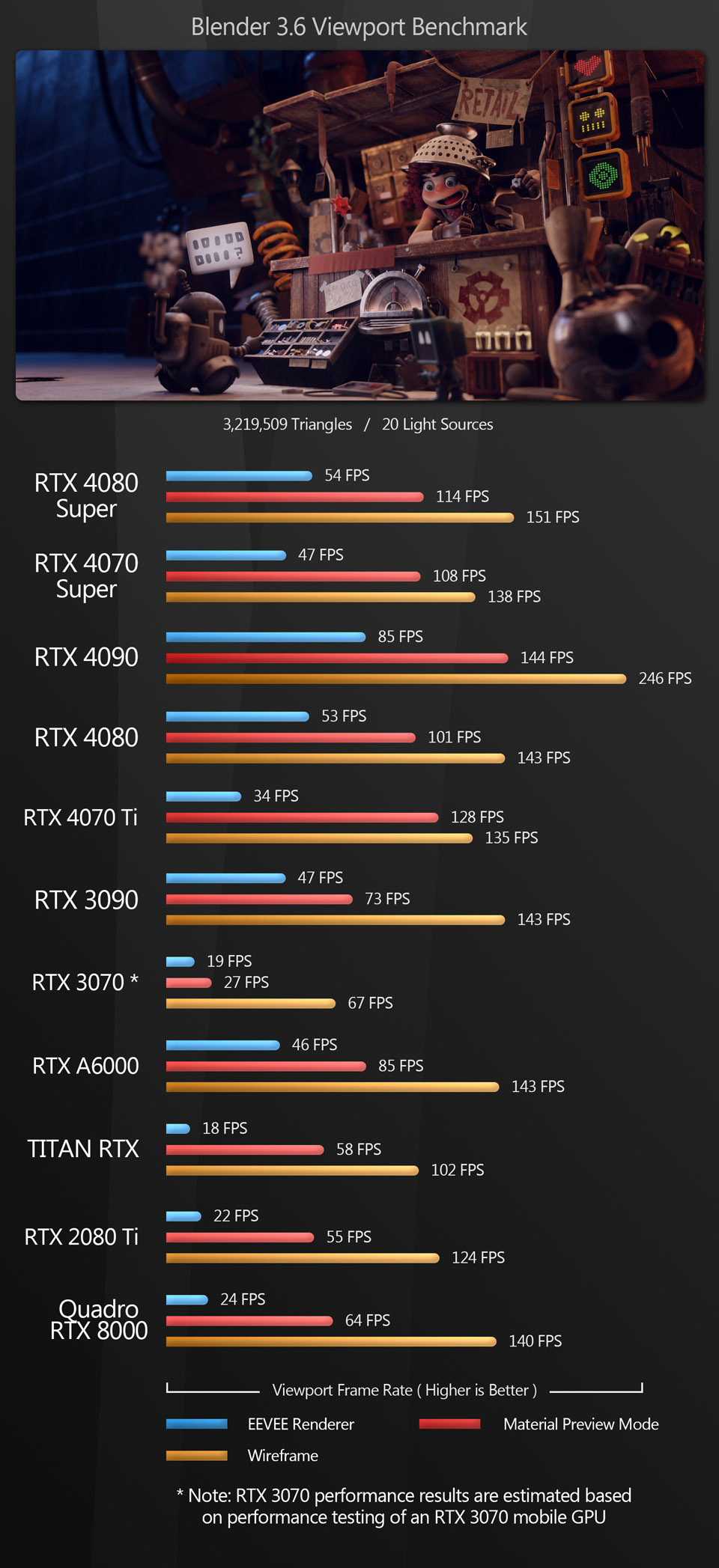

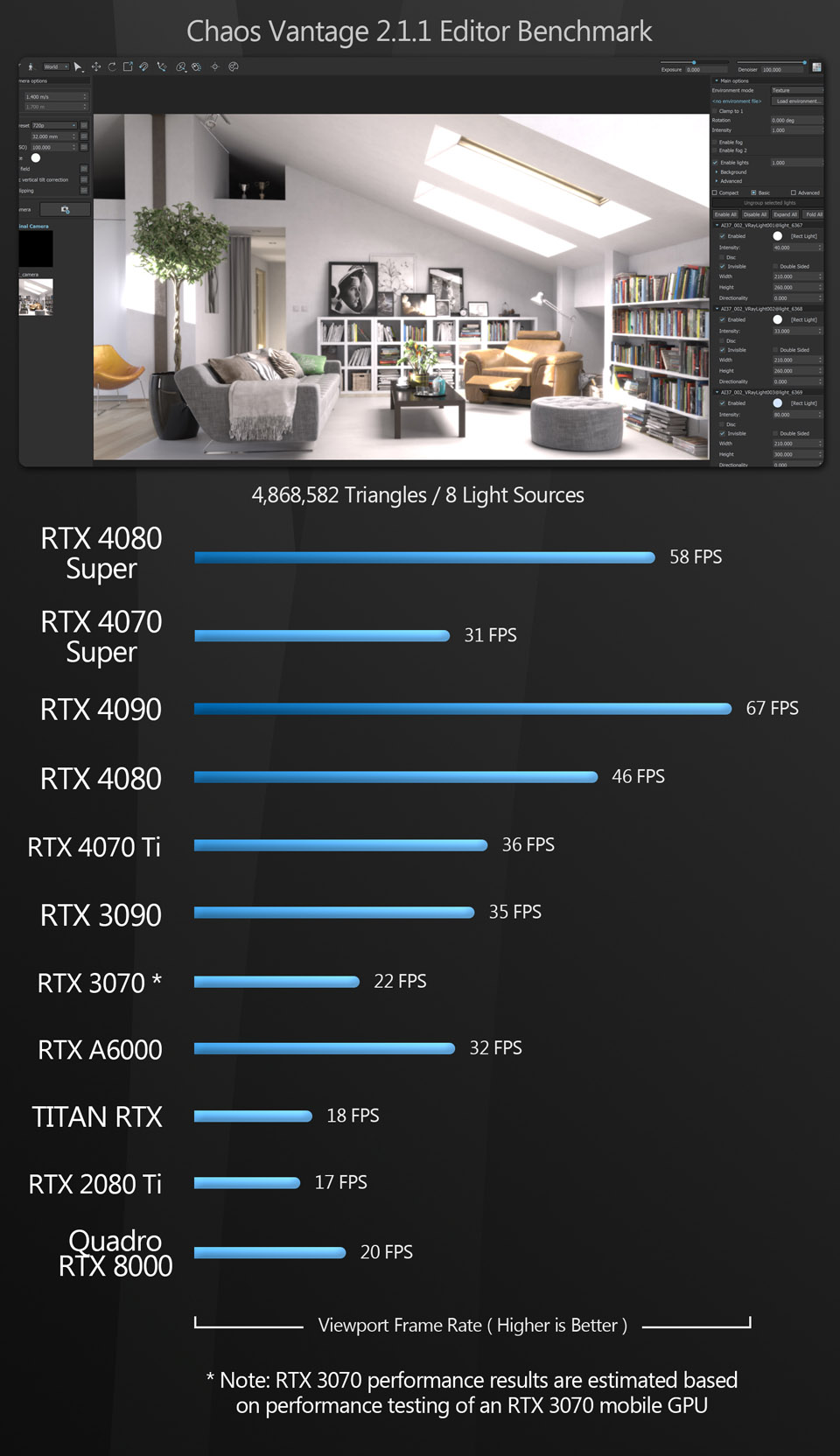

Viewport performance

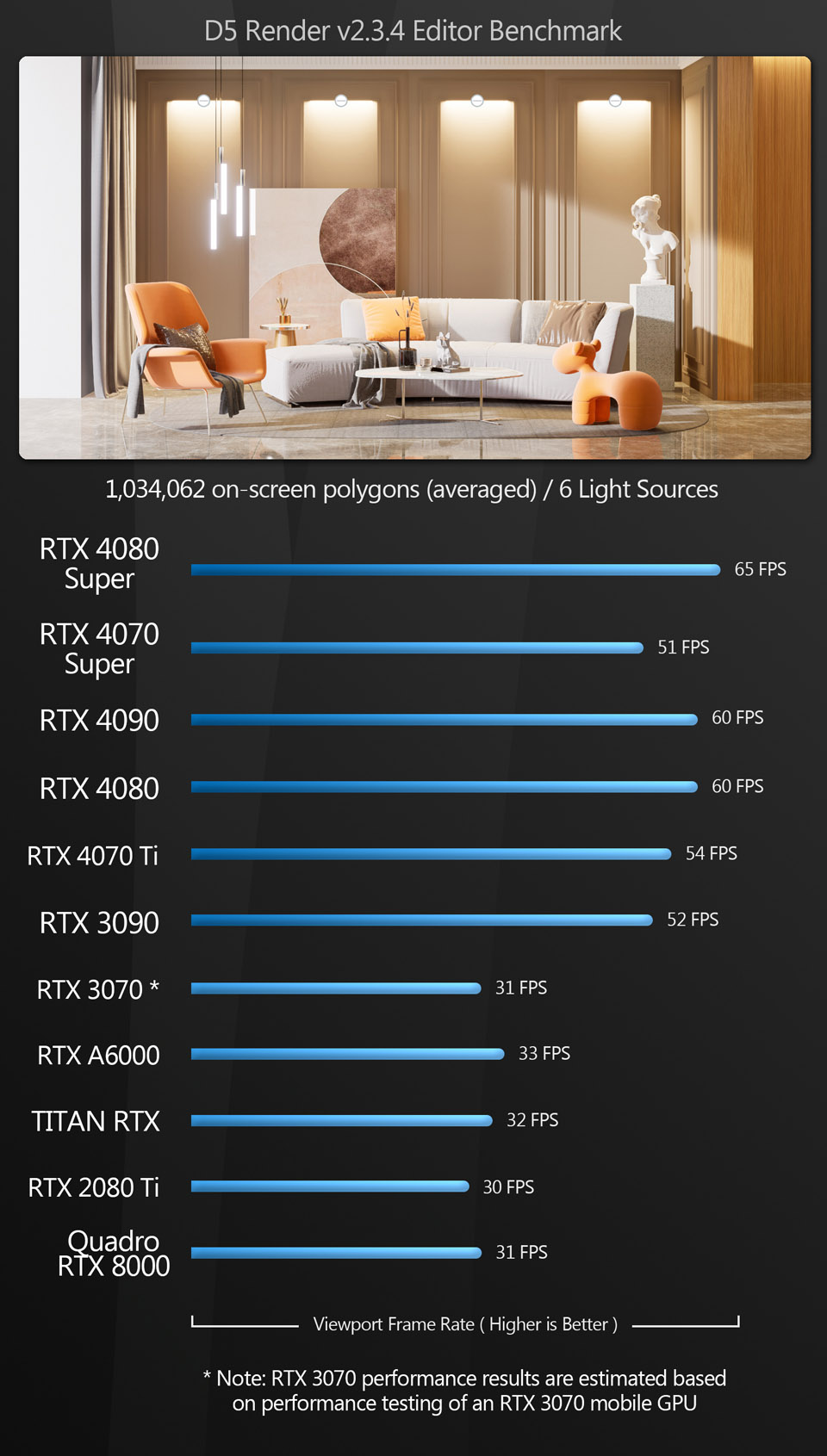

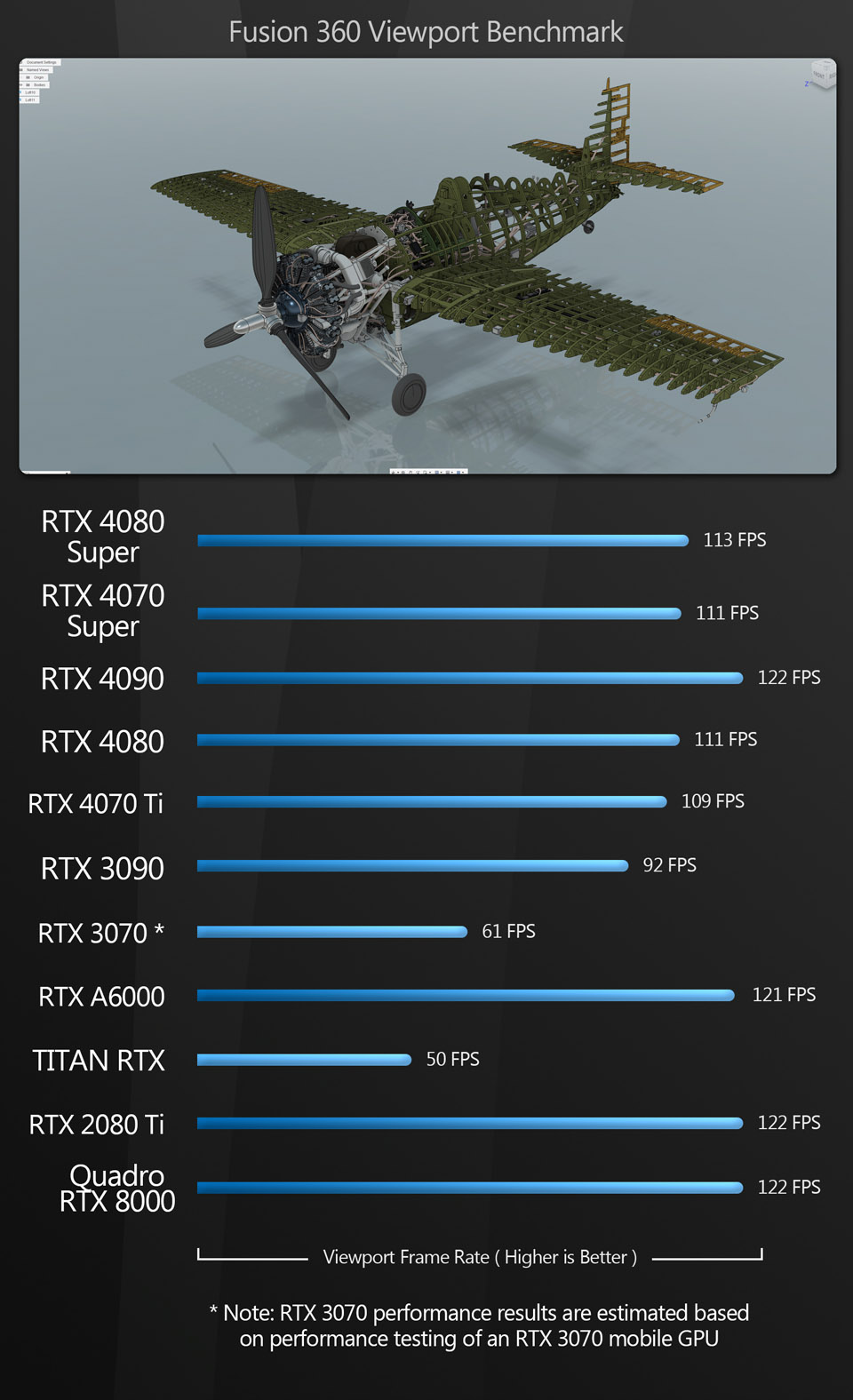

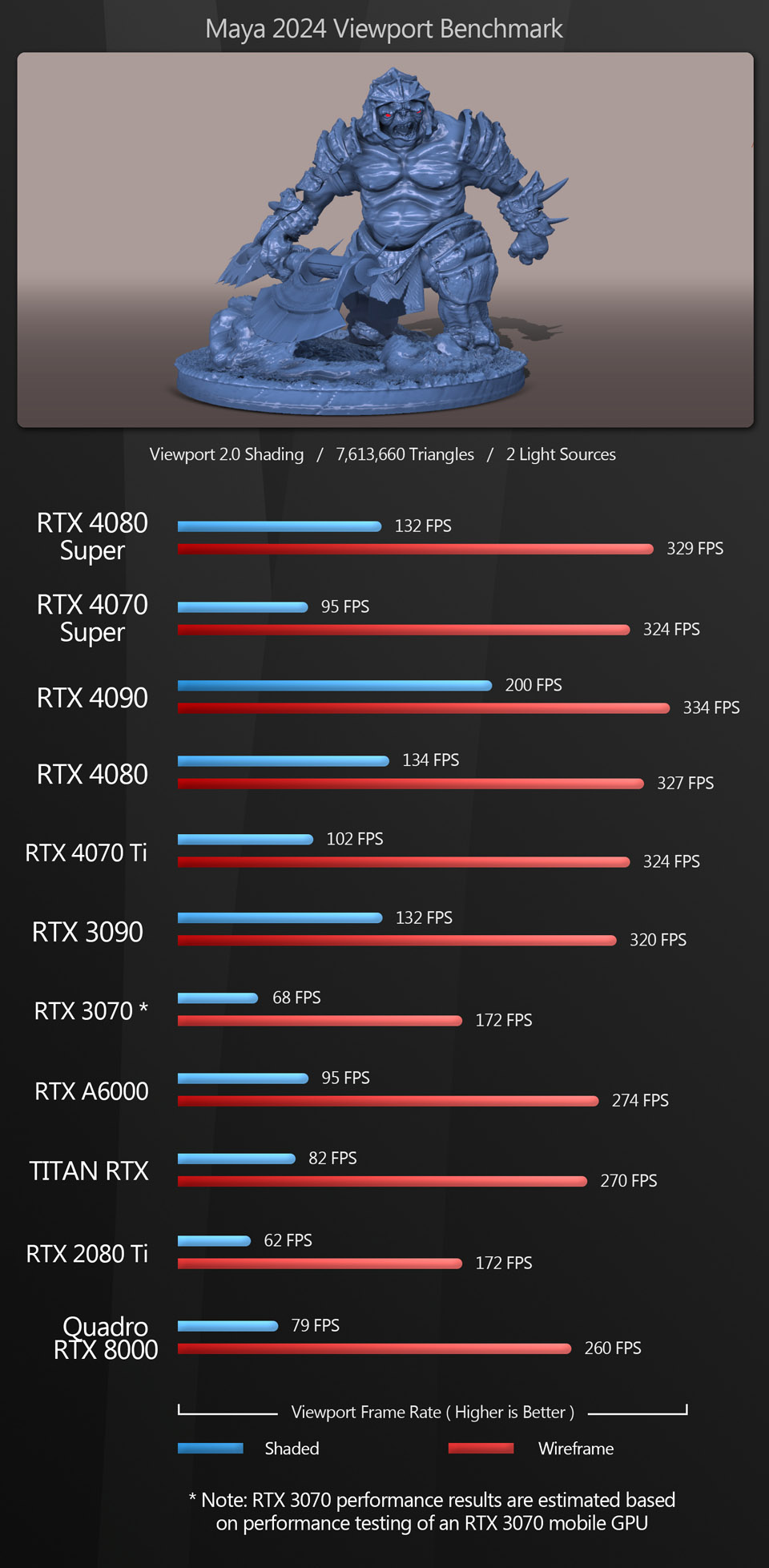

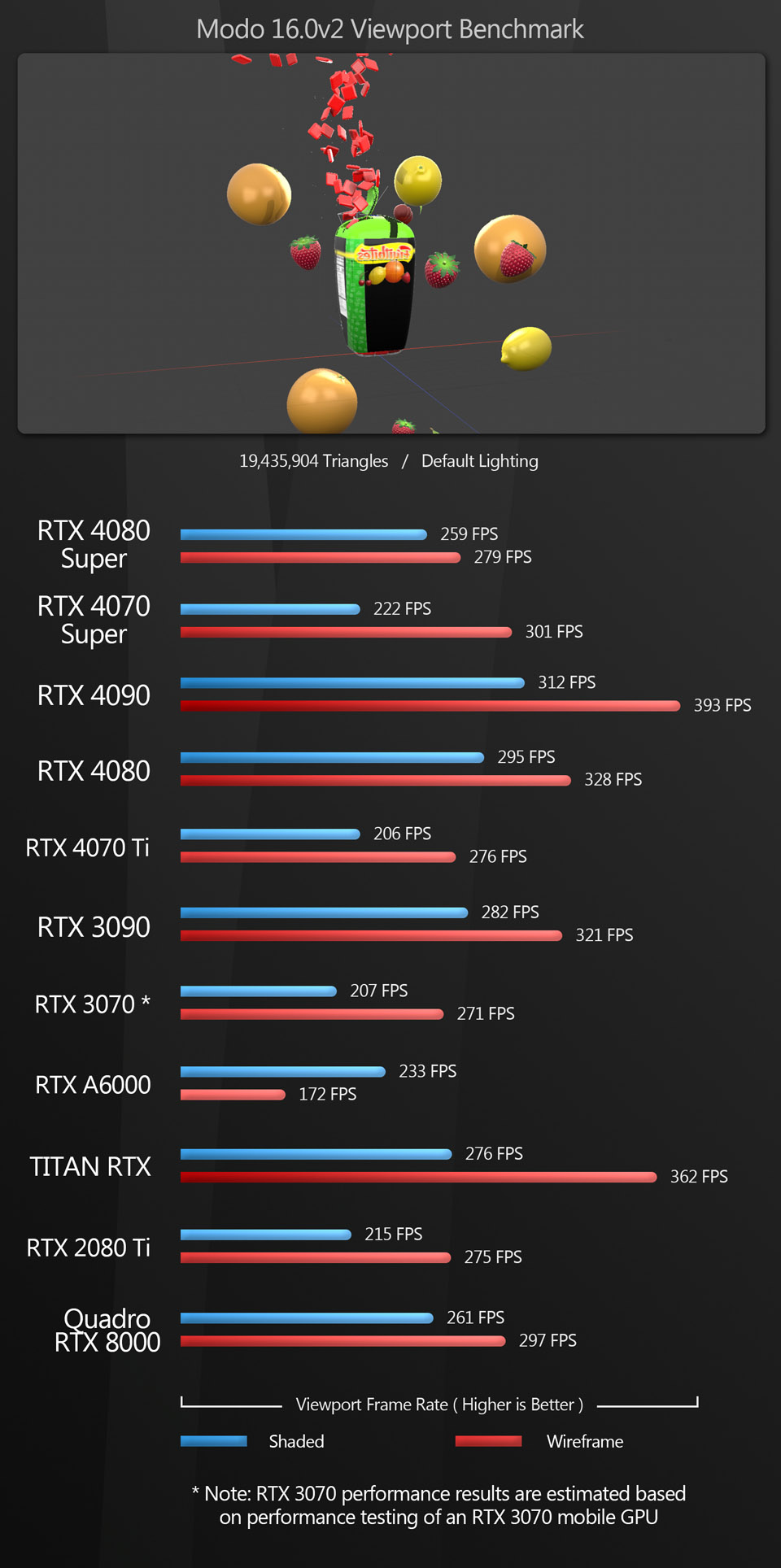

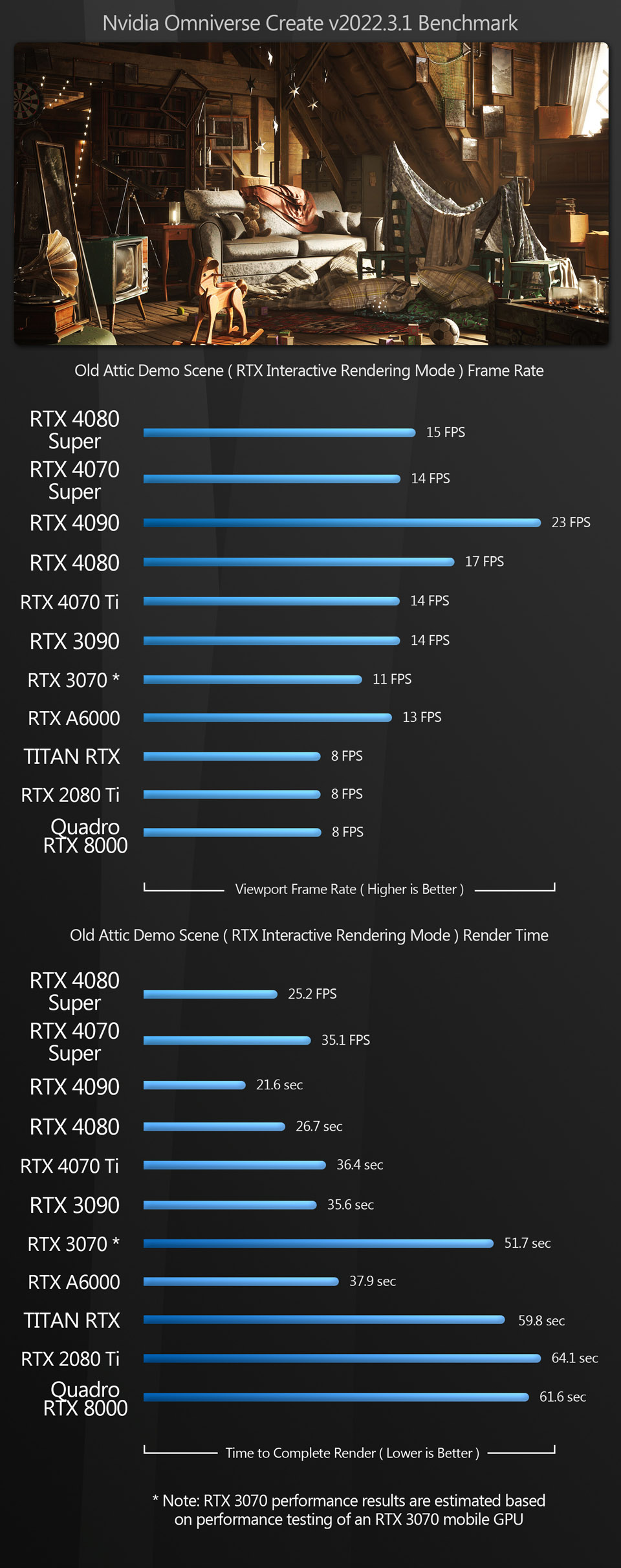

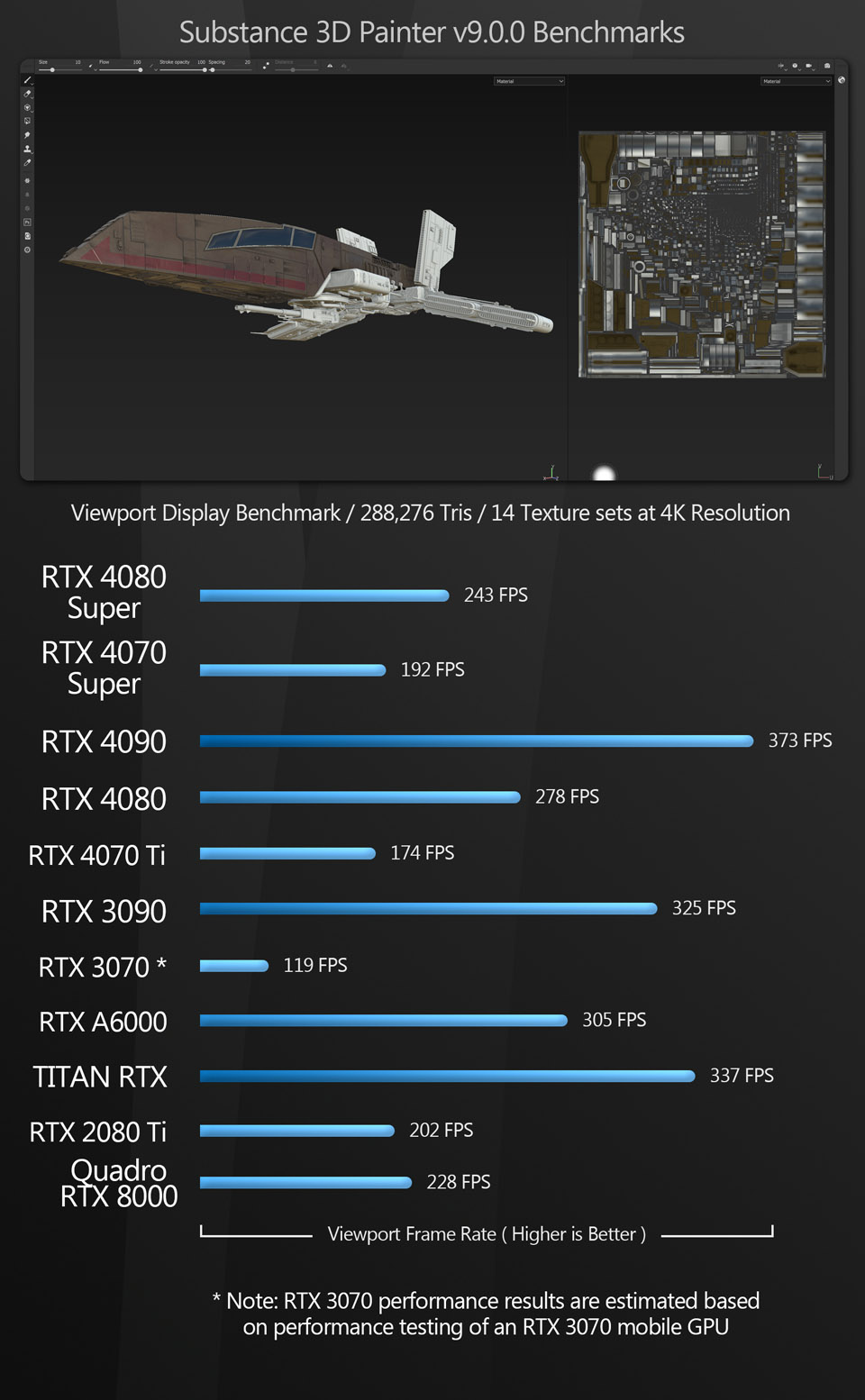

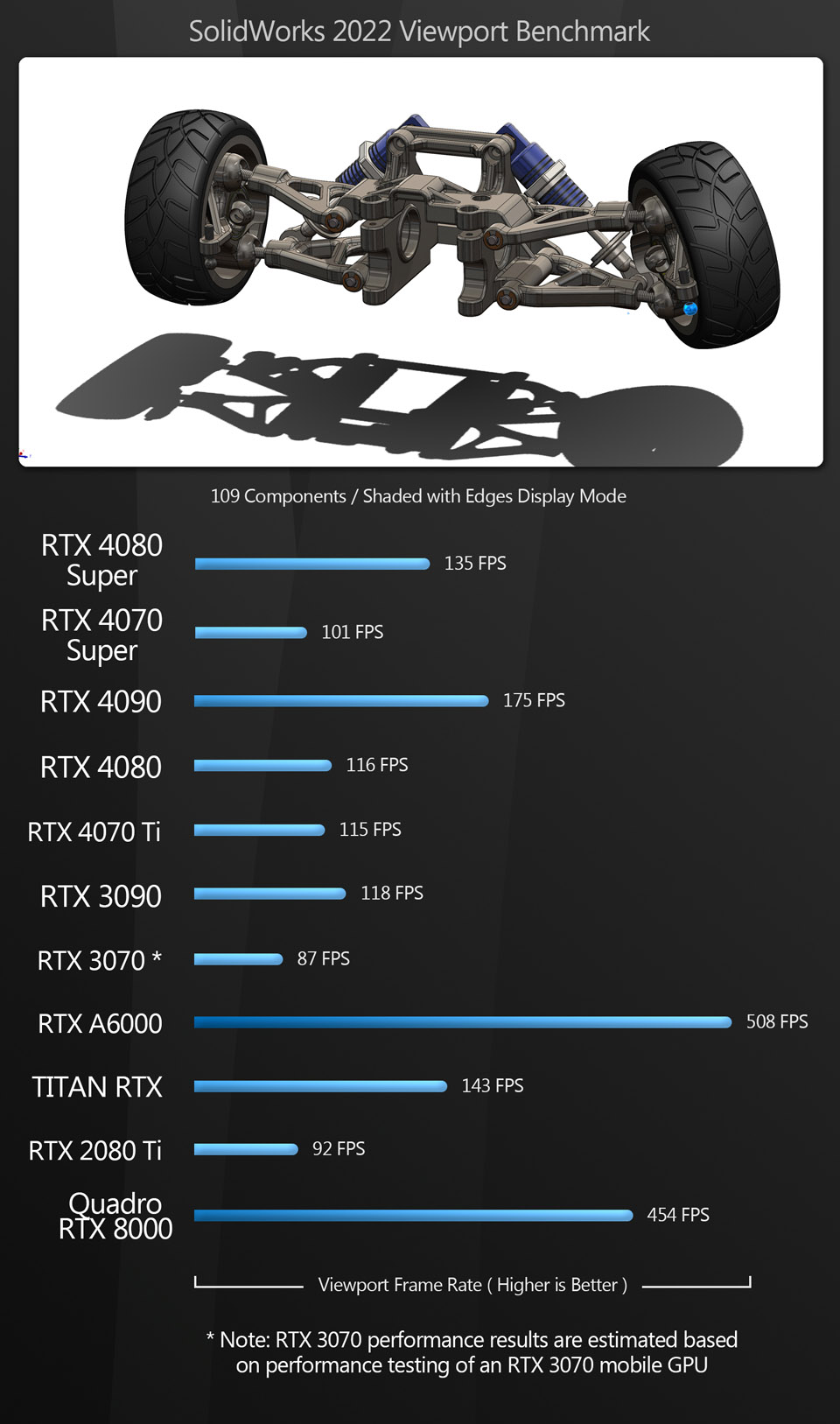

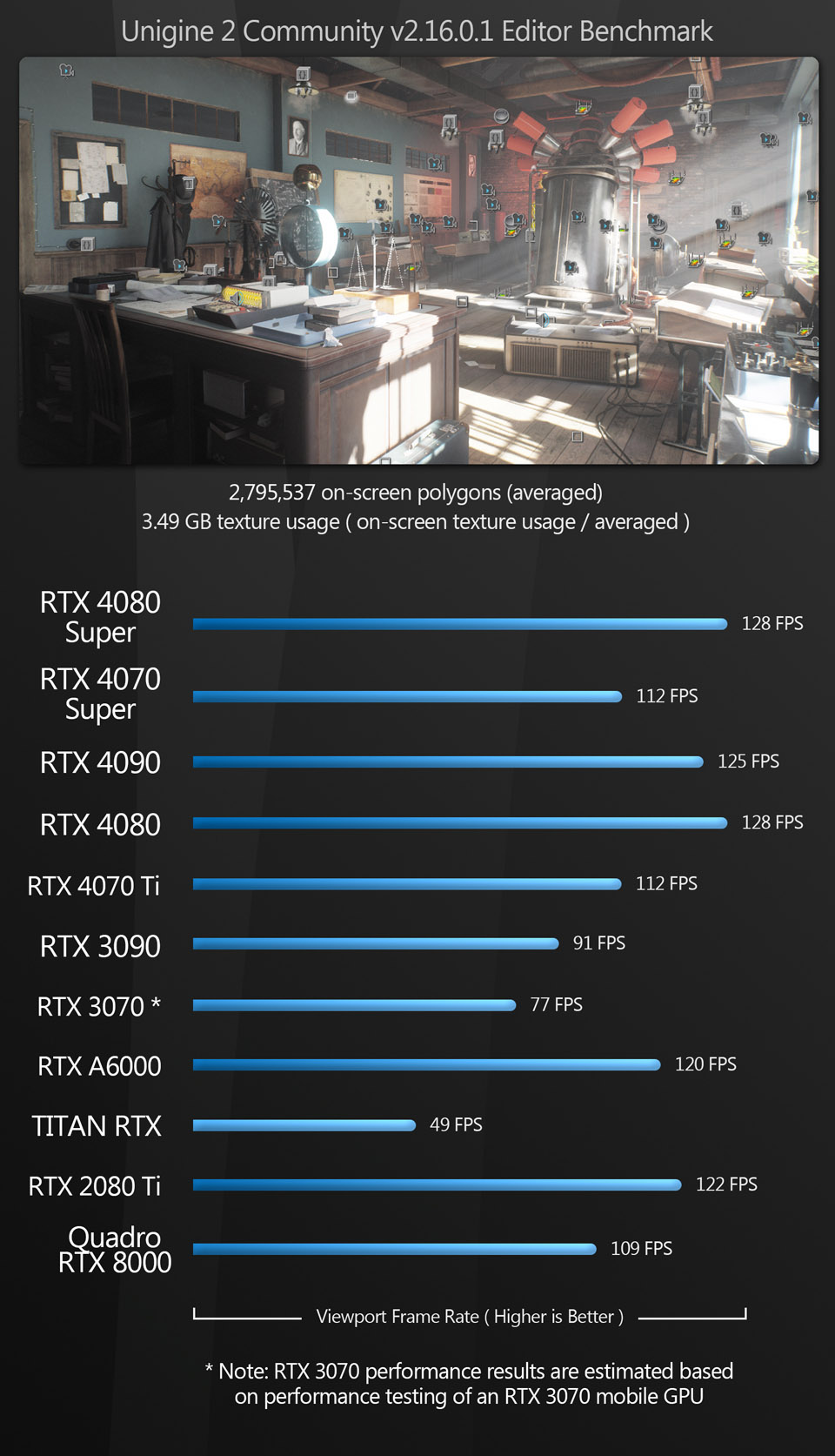

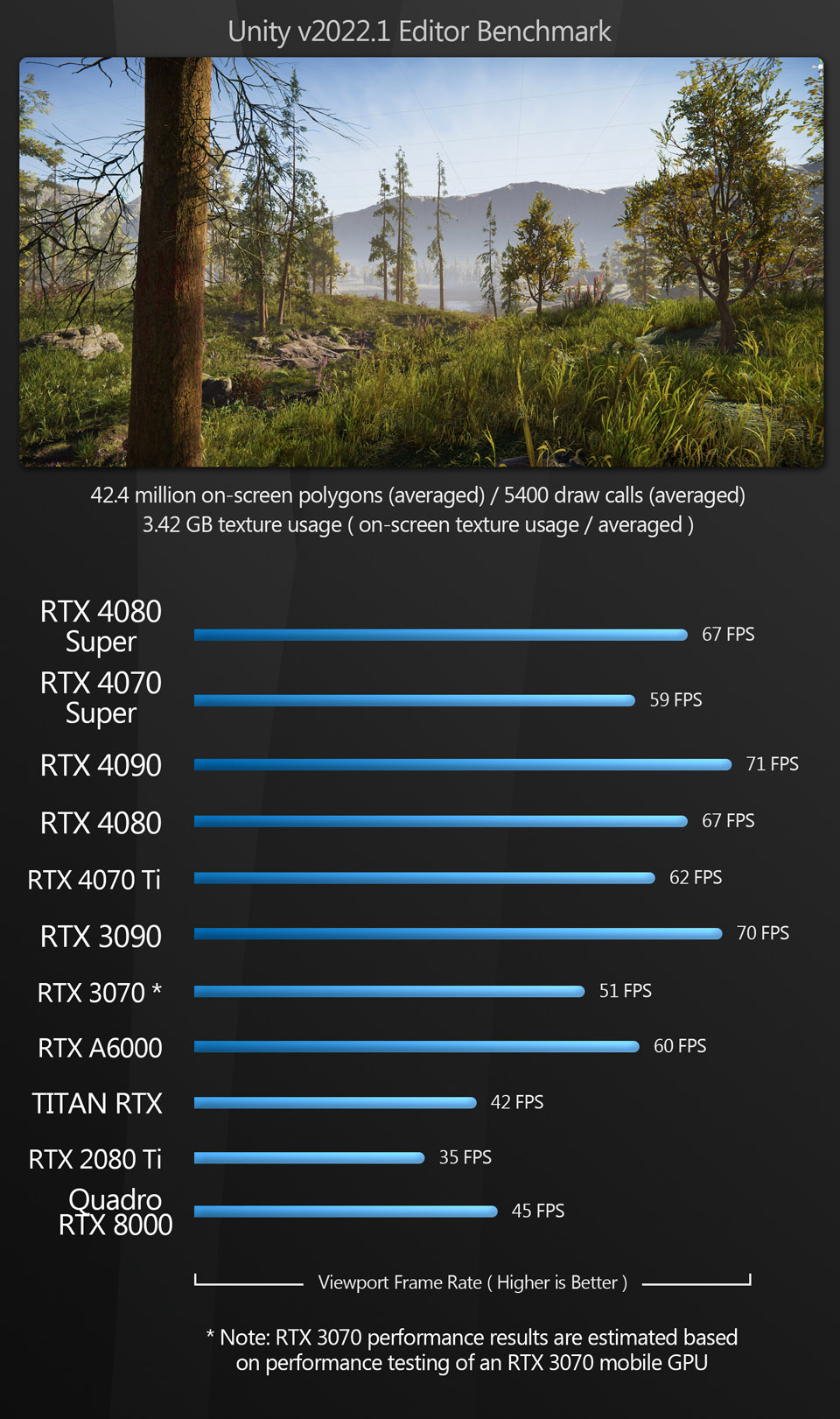

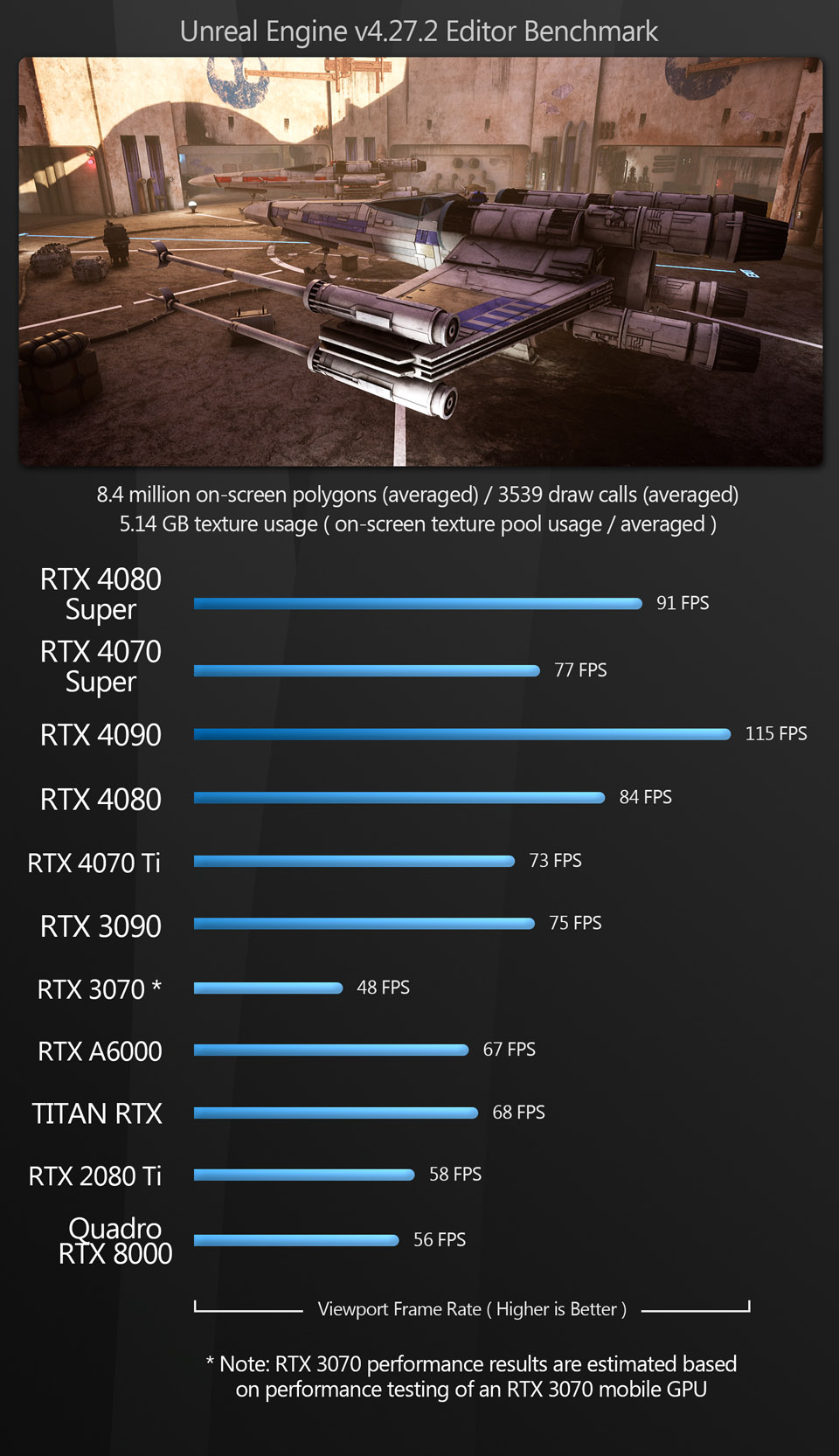

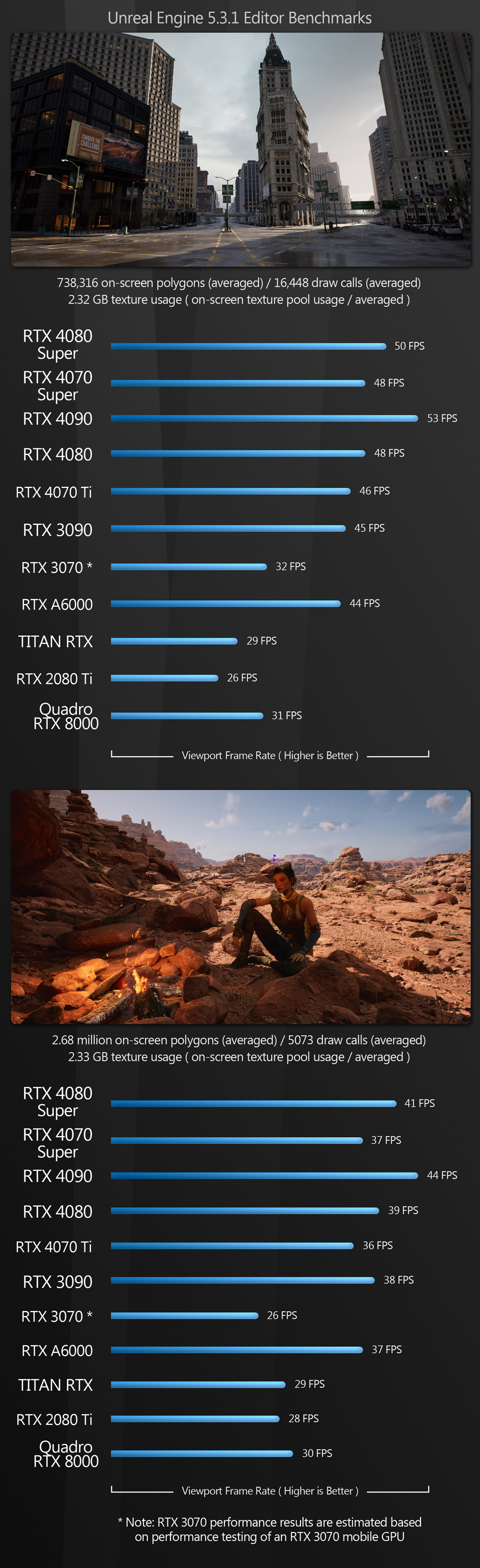

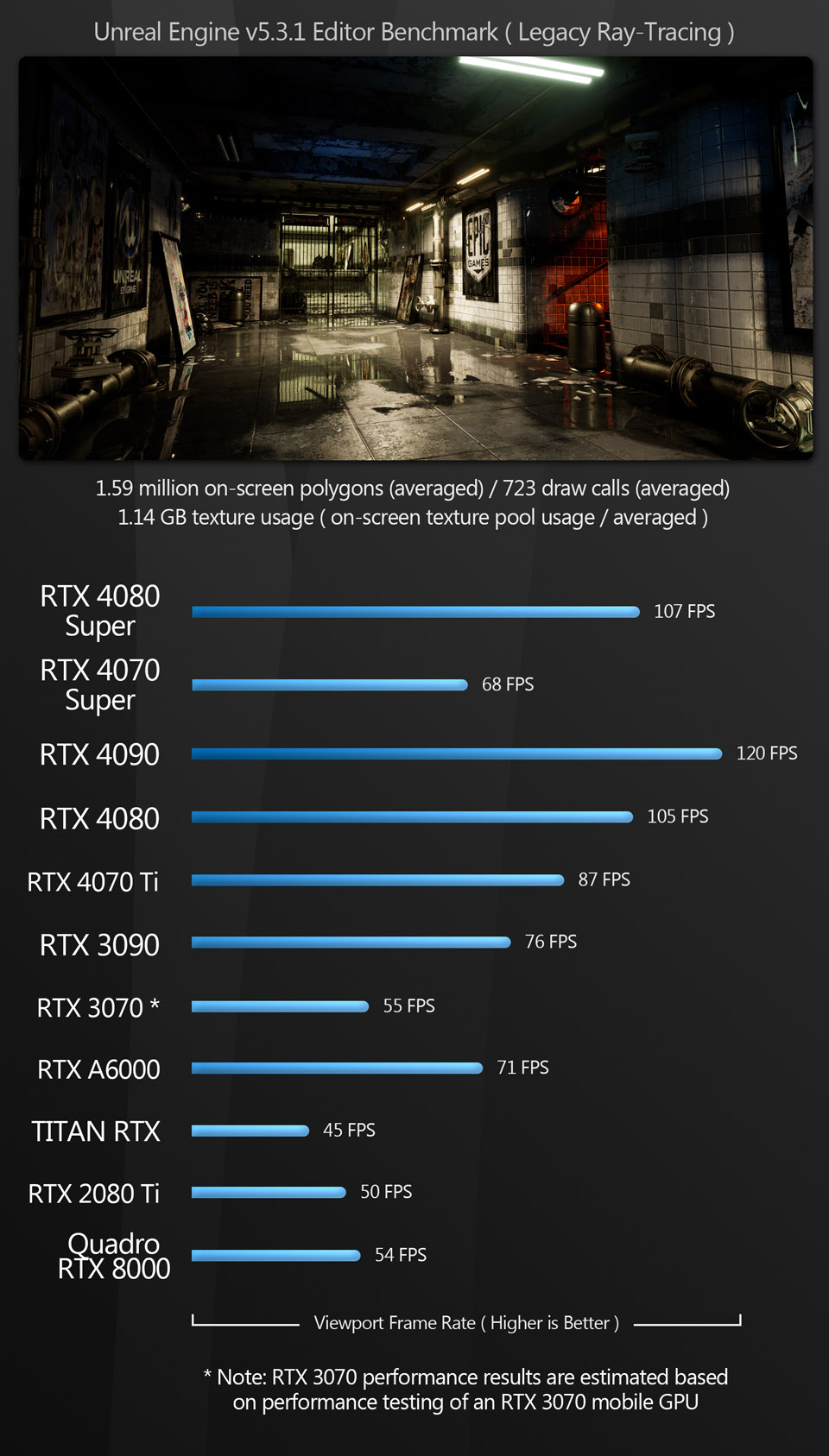

The viewport benchmarks include a number of key DCC applications – general-purpose 3D software like 3ds Max, Blender and Maya, more specialist tools like Substance 3D Painter, CAD packages like SolidWorks and Fusion 360, and real-time 3D applications like D5 Render, Unity and Unreal Engine.

In the viewport benchmarks, the performance of the GeForce RTX 4080 Super isn’t much different from that of its predecessor, the GeForce RTX 4080: it is only slightly ahead in most tests, and even falls behind in a few. If you average all of the figures, it’s 3-5% faster than the vanilla 4080.
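The review does not specify how the figures were averaged. One common approach, sketched here as an assumption, is to take the ratio of the two cards’ scores in each test and compute the geometric mean of those ratios, so that a single high-frame-rate test cannot dominate the result. The per-test scores below are hypothetical placeholders, not results from this review.

```python
from statistics import geometric_mean


def average_speedup(new_scores: list[float], old_scores: list[float]) -> float:
    """Geometric mean of per-test speed ratios for higher-is-better scores (fps)."""
    if not new_scores or len(new_scores) != len(old_scores):
        raise ValueError("score lists must be non-empty and the same length")
    return geometric_mean([new / old for new, old in zip(new_scores, old_scores)])


# Hypothetical fps in three viewport tests: a clear win, a near-tie and a loss
rtx_4080_super = [104.0, 58.5, 31.0]
rtx_4080 = [96.0, 58.0, 31.5]

speedup = average_speedup(rtx_4080_super, rtx_4080)
print(f"{(speedup - 1) * 100:.1f}% faster on average")
```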

Although I never had the chance to test a vanilla GeForce RTX 4070, the GeForce RTX 4070 Super seems to offer a much more significant performance boost, since in these tests it is essentially a new GeForce RTX 4070 Ti. It pulls slightly ahead of the actual 4070 Ti in some tests, and falls slightly behind in others.

Another thing to note is the Unreal Engine scores. In the original group test, I noted that there was a strange performance anomaly with the City and Valley of the Ancients scenes. This seems to have been a software issue with Unreal Engine 5.1 itself, since moving to Unreal Engine 5.3 has fixed the issue, with the GeForce RTX 40 Series GPUs providing a much larger uplift in performance over the older cards.

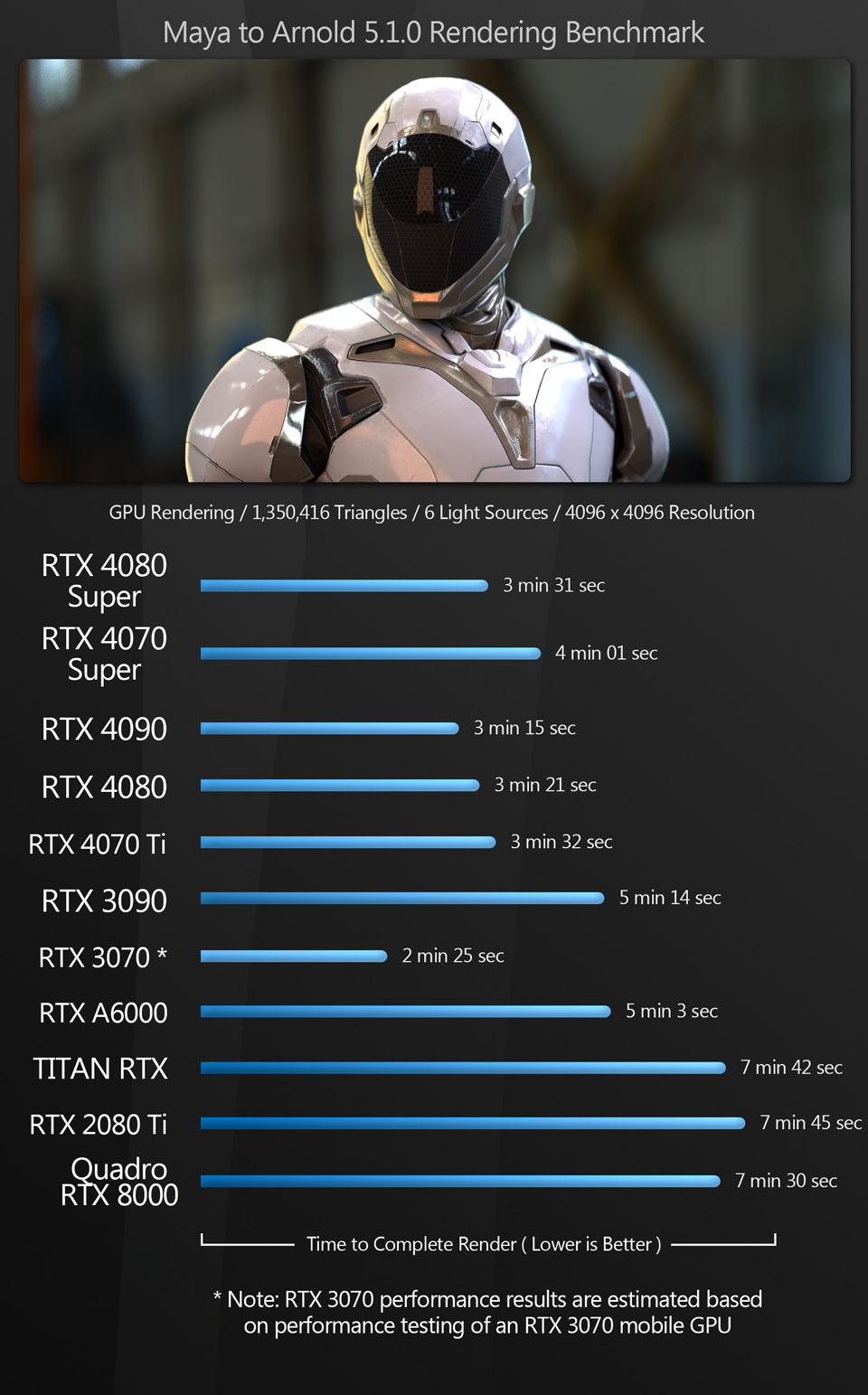

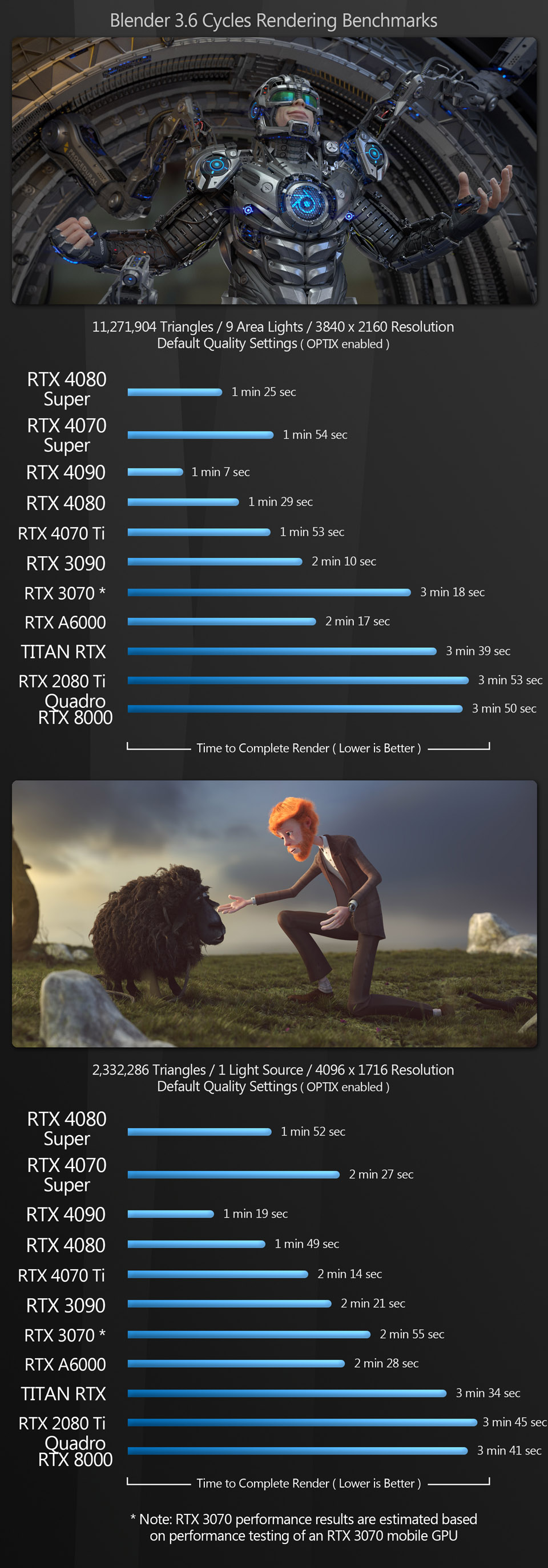

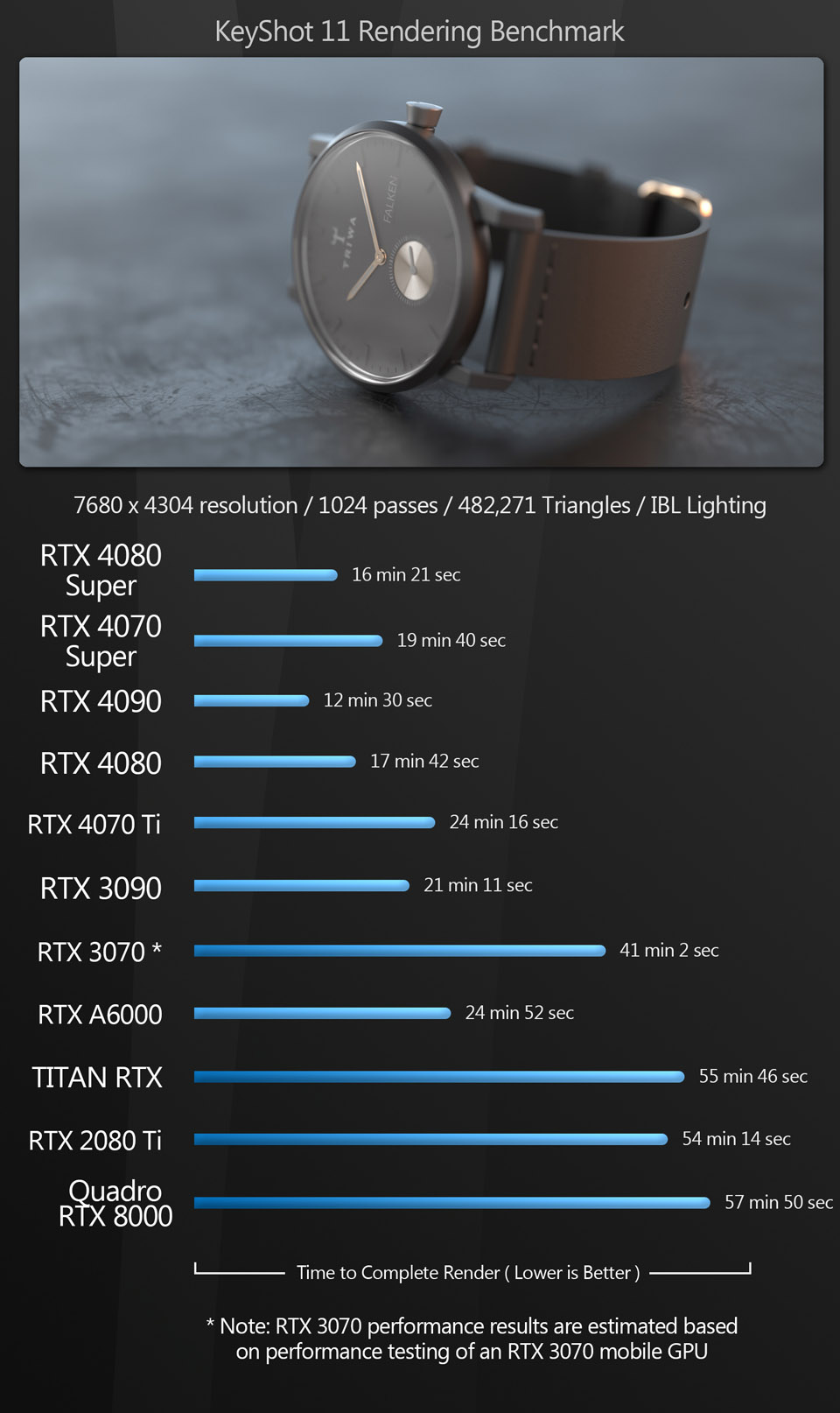

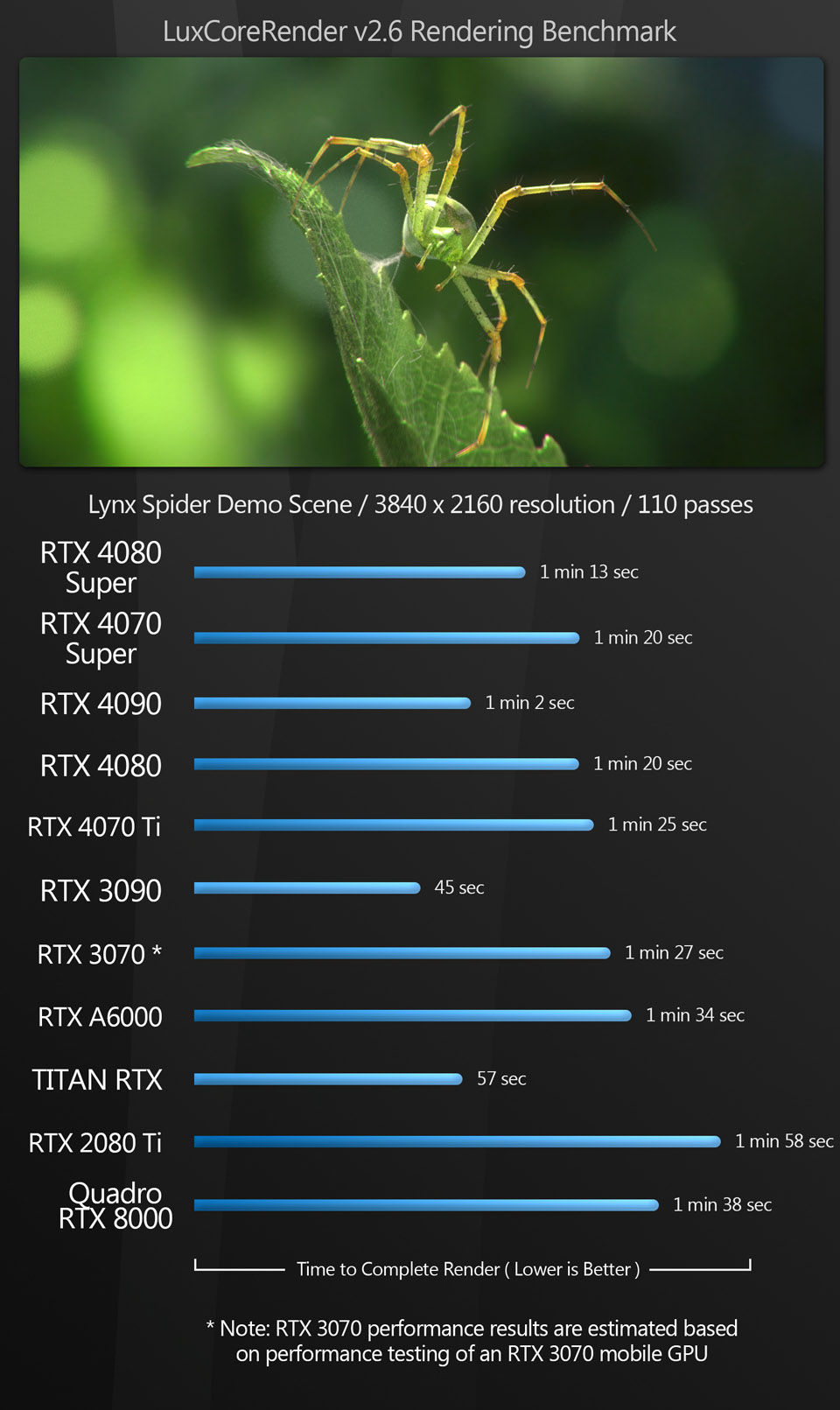

Rendering

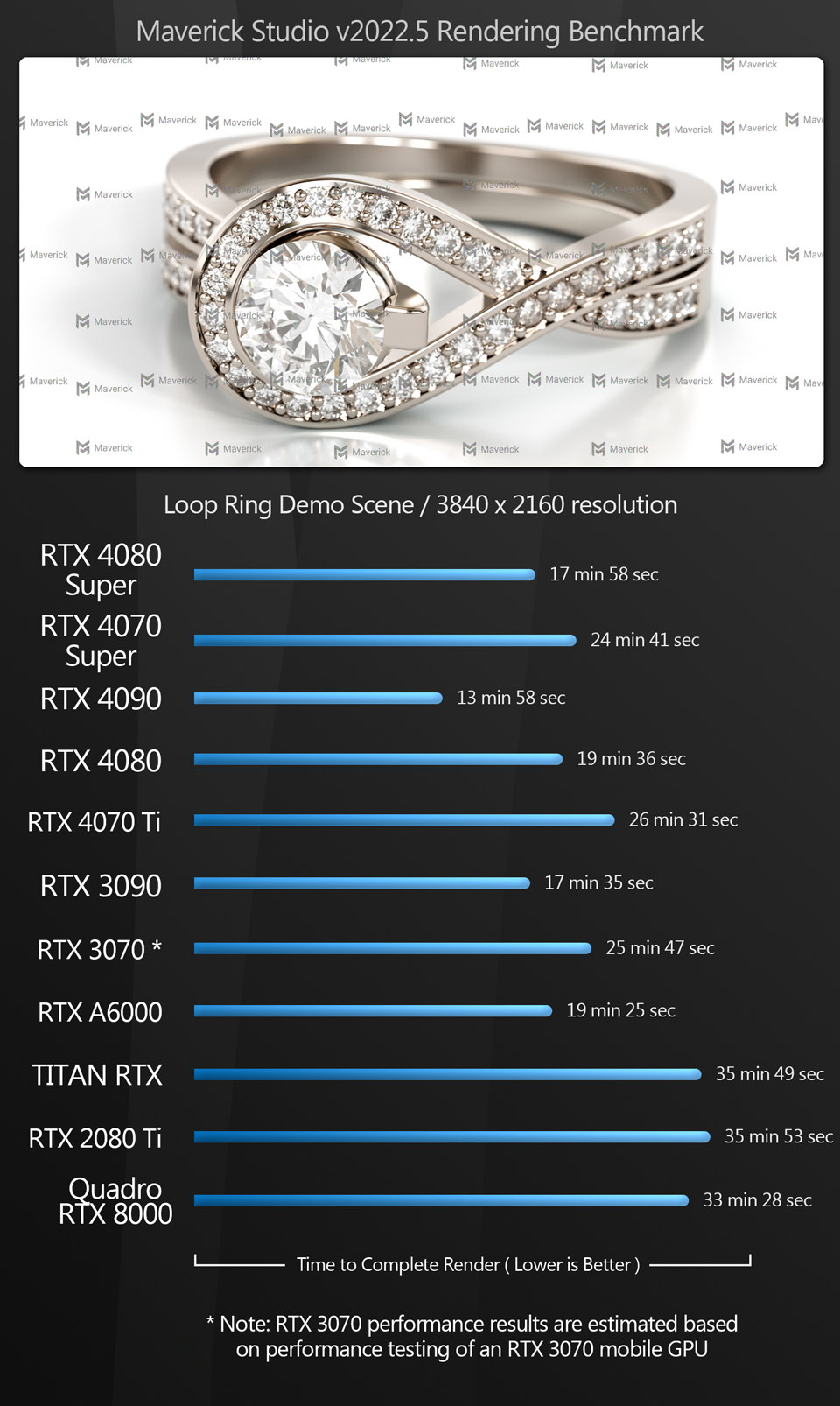

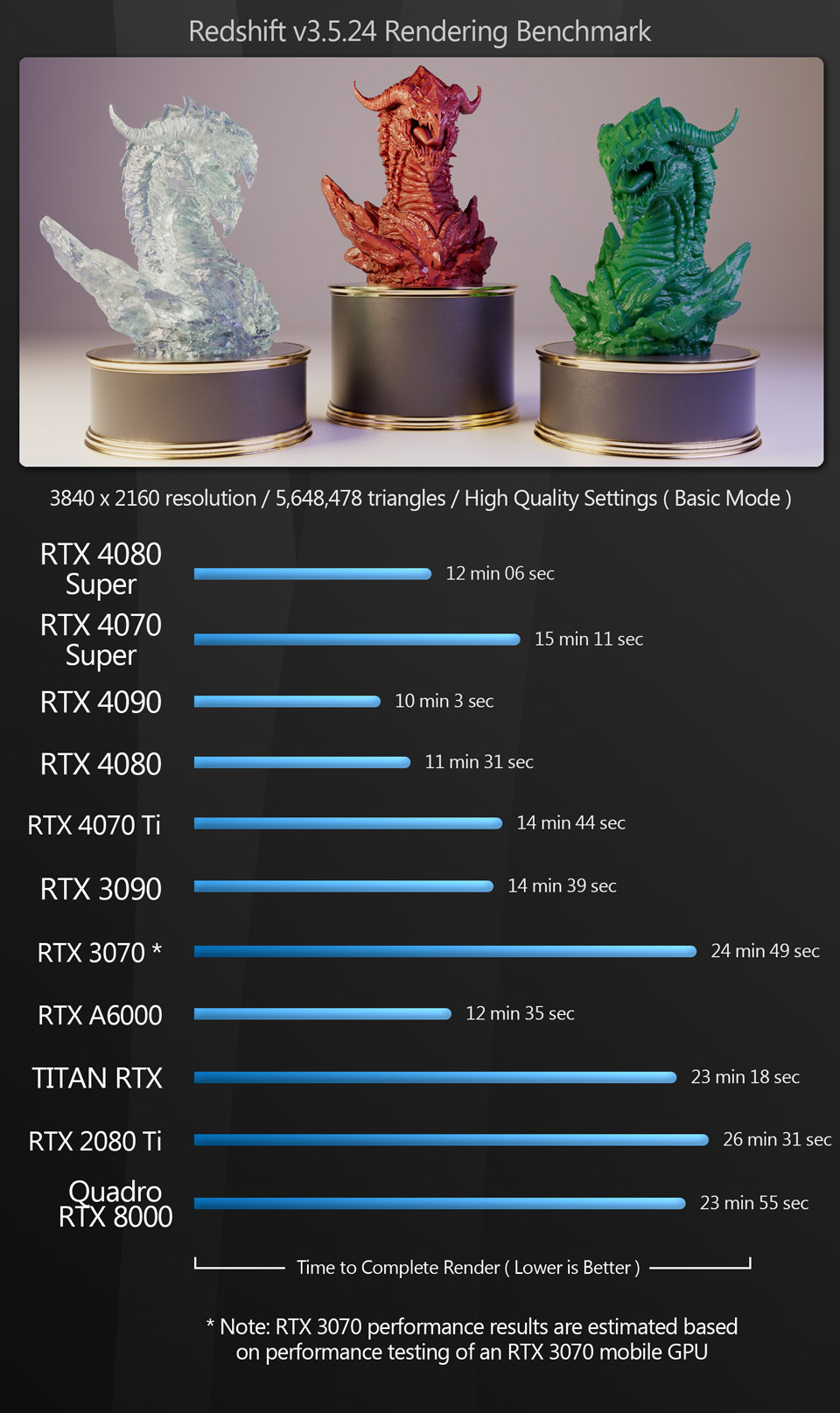

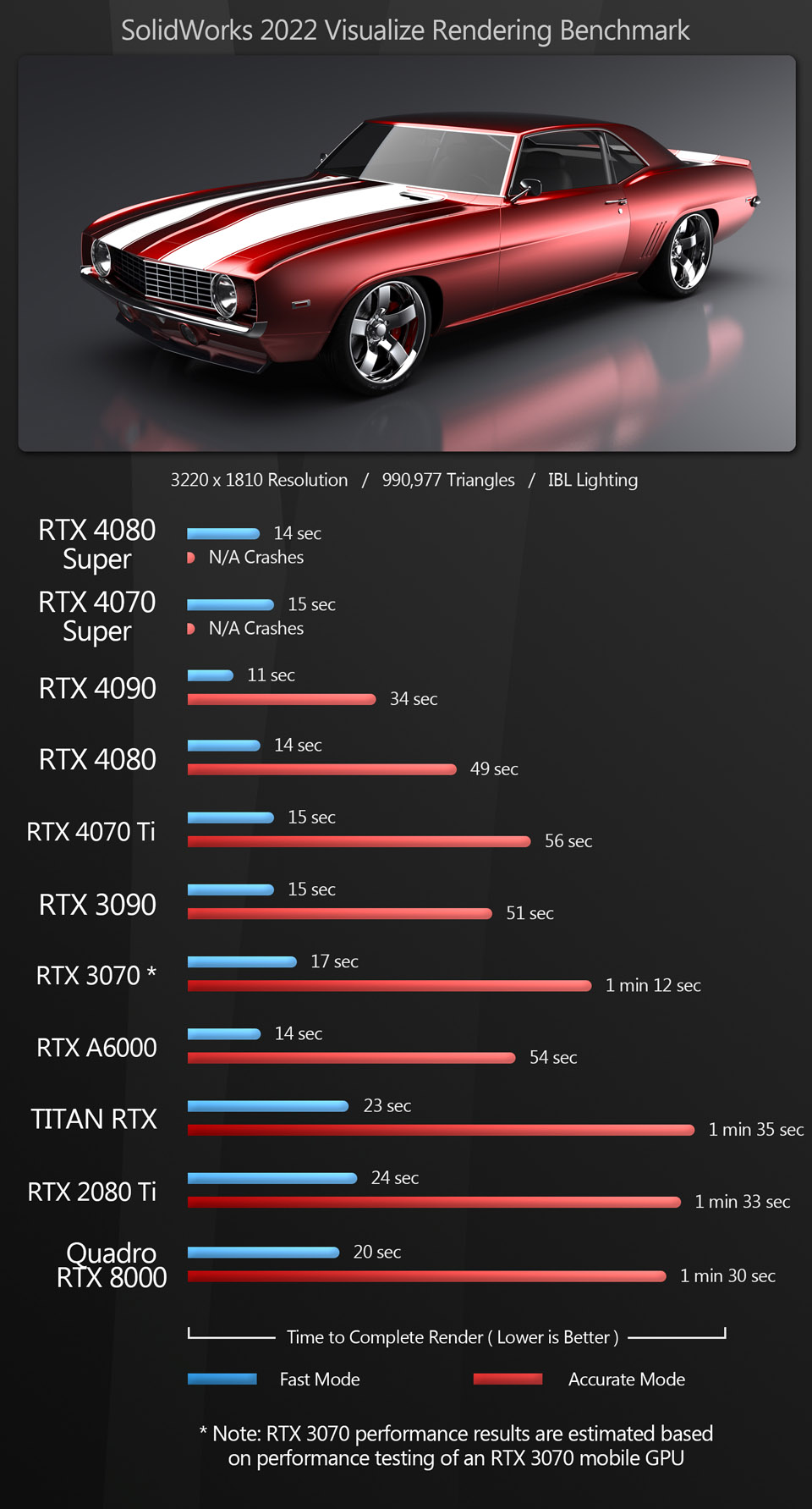

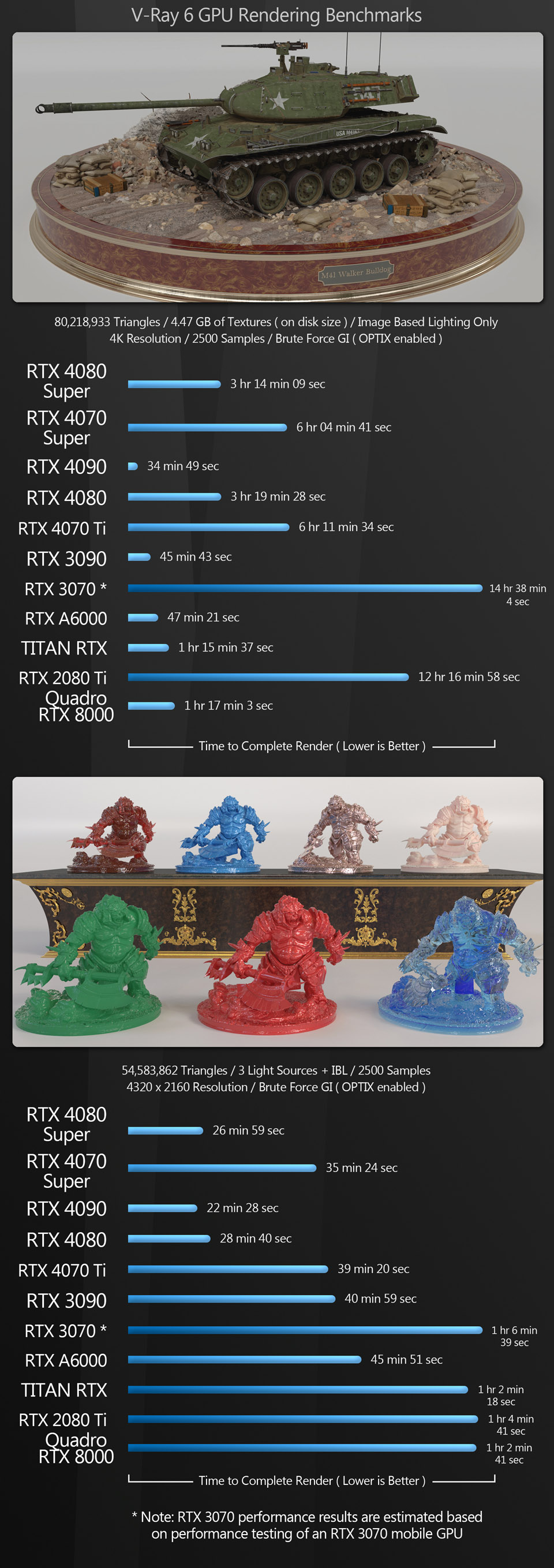

Next, we have a set of GPU rendering benchmarks, performed with an assortment of the more popular GPU renderers, rendering single frames at 4K or higher resolutions.

The rendering tests follow a similar pattern to the viewport benchmarks. The performance of the GeForce RTX 4080 Super is nearly the same as the GeForce RTX 4080, pulling slightly ahead in most tests, but falling behind in some – not really a surprise, considering that its CUDA, Tensor and RT core counts are only slightly higher, and that it has the same 16 GB of VRAM.

The GeForce RTX 4070 Super is essentially a new GeForce RTX 4070 Ti, coming out slightly ahead of the original card in some tests, but falling slightly behind in others.

However, it’s worth noting that for rendering, the GeForce RTX 4090, with its 24 GB frame buffer, still reigns supreme by a big margin.

Other benchmarks

The next benchmarks test the use of the GPU for more specialist tasks. Premiere Pro uses the GPU for video encoding; photogrammetry application Metashape uses the GPU for image processing and 3D model generation; and Houdini plugin Axiom and Cinema 4D’s Pyro solver both use the GPU for fluid simulation.

The miscellaneous tests follow a similar pattern to the viewport and rendering tests: the GeForce RTX 4080 Super is just a smidge faster than the GeForce RTX 4080, while the GeForce RTX 4070 Super is essentially the new GeForce RTX 4070 Ti. The differences in performance are even smaller than in the previous two categories.

Neither of the new GPUs fares well with the Cinema 4D Pyro simulation, again due to memory constraints. The only GPUs that really perform well are the 48 GB workstation cards.

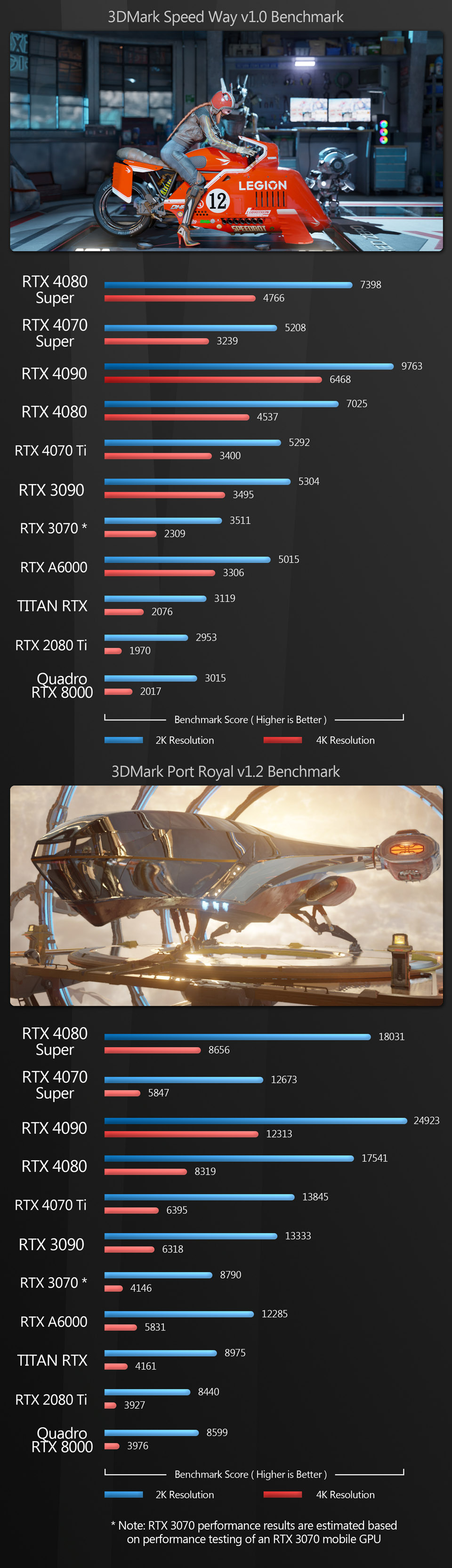

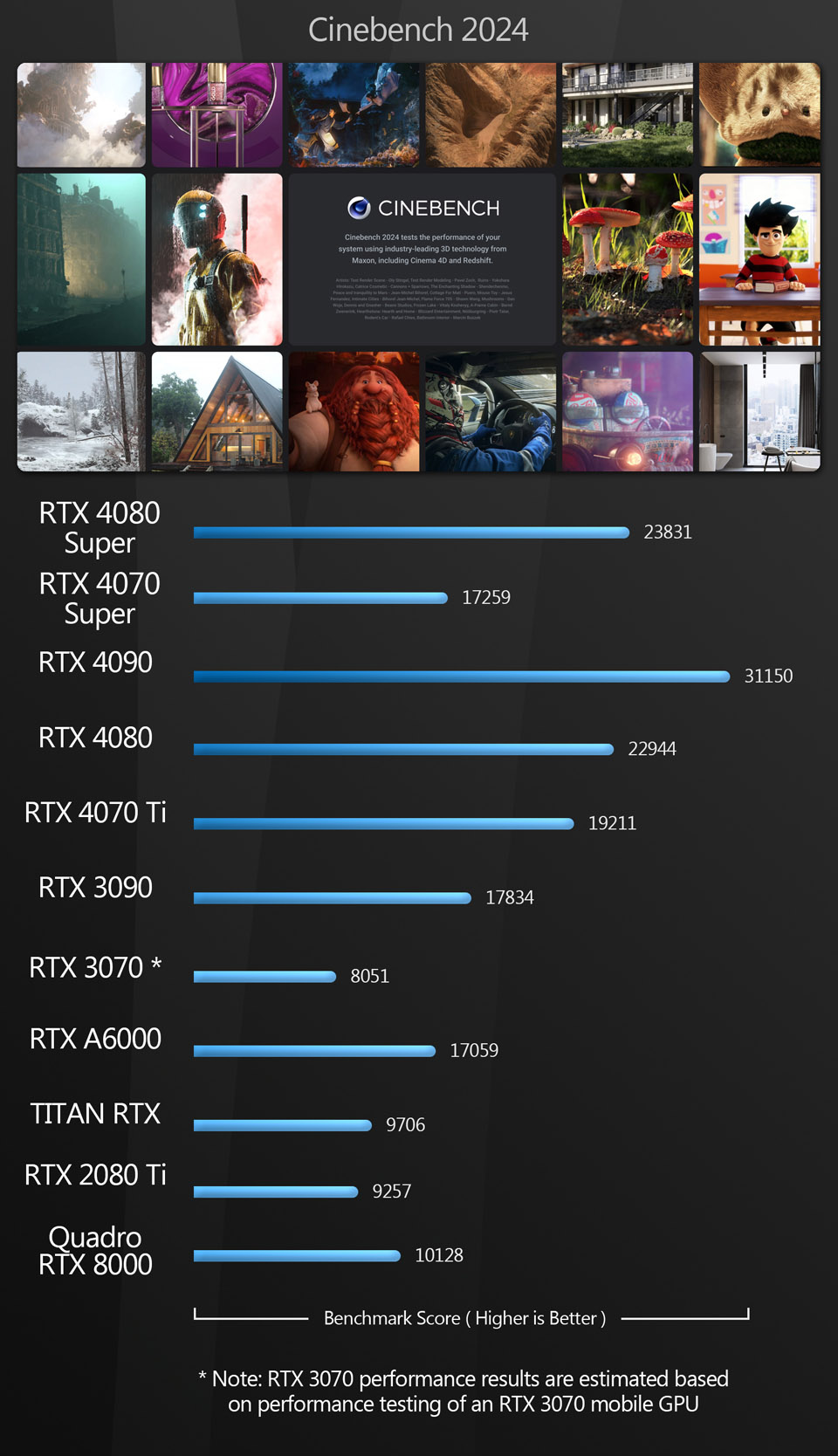

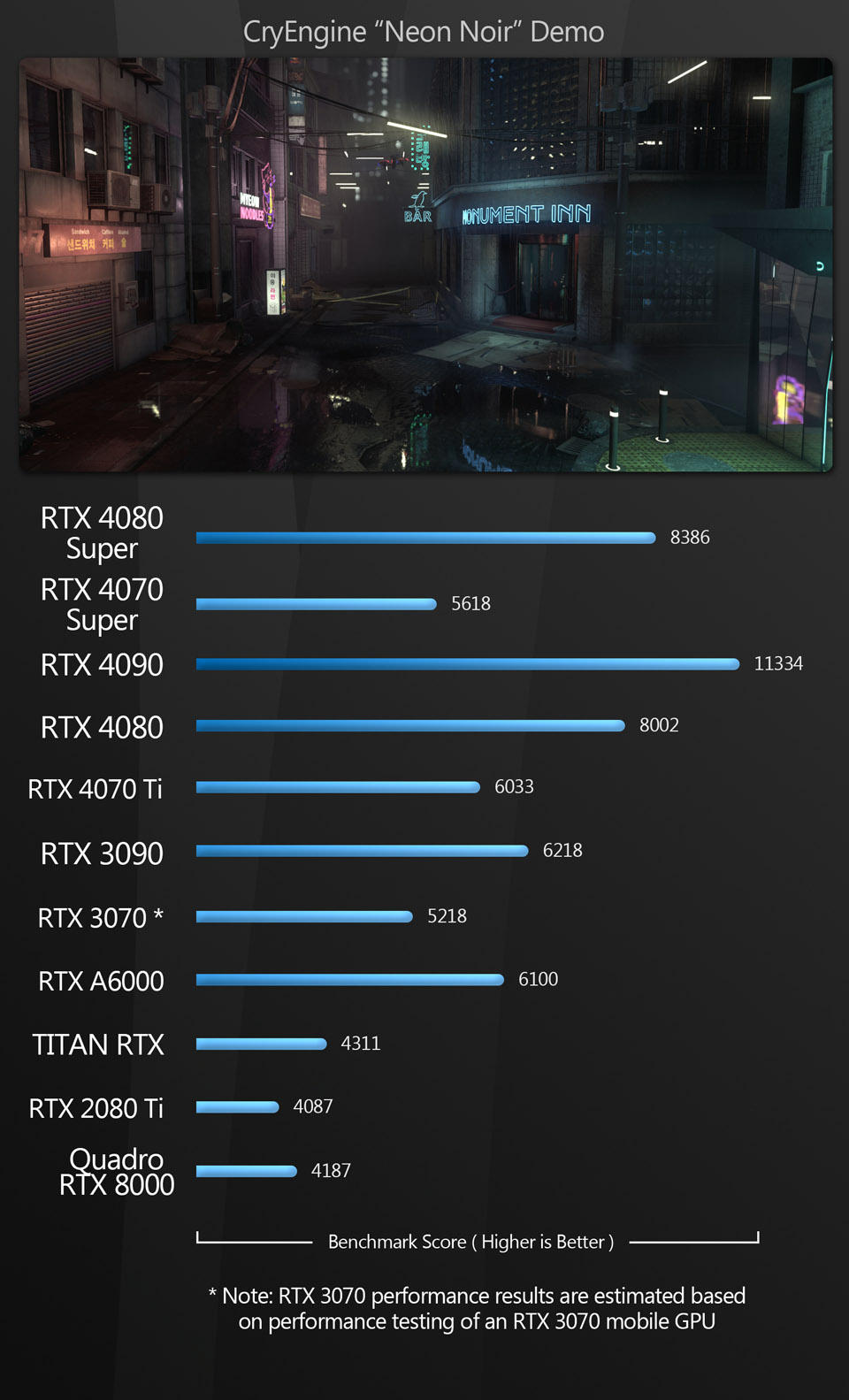

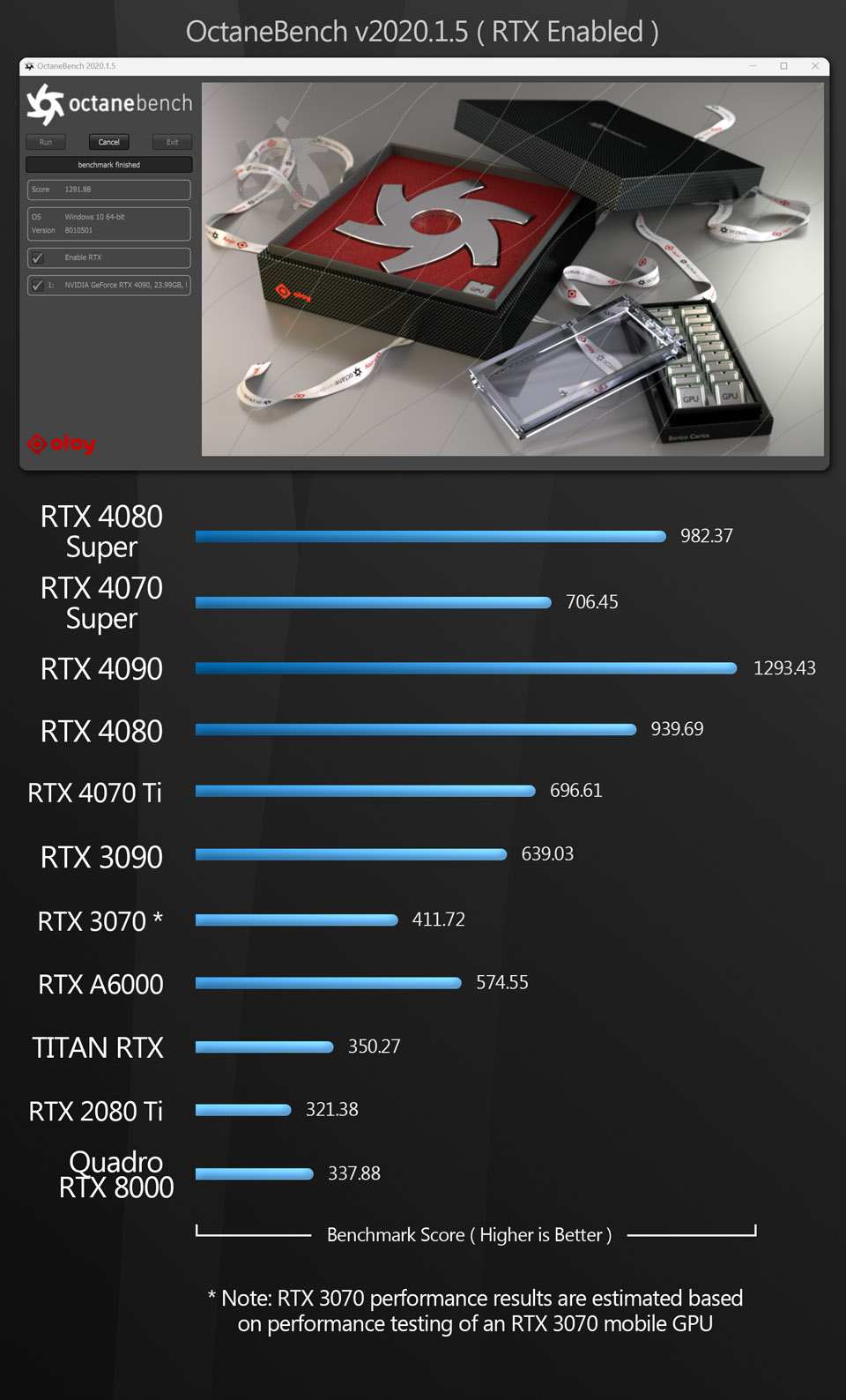

Synthetic benchmarks

Finally, we have an assortment of synthetic benchmarks. They don’t accurately predict how a GPU will perform in production, but they’re a decent measure of its performance relative to other GPUs, and the scores can be compared to those available online for other cards.

The synthetic benchmarks confirm what we already know about the GeForce RTX 4080 Super, ranking it slightly higher than the standard GeForce RTX 4080.

The curiosity is the GeForce RTX 4070 Super: unlike in the previous tests, some of the synthetic benchmarks have it coming in significantly behind the GeForce RTX 4070 Ti. This is why I am not a huge fan of synthetics, as they are often tuned in a way that doesn’t reflect real-world usage.

Other considerations

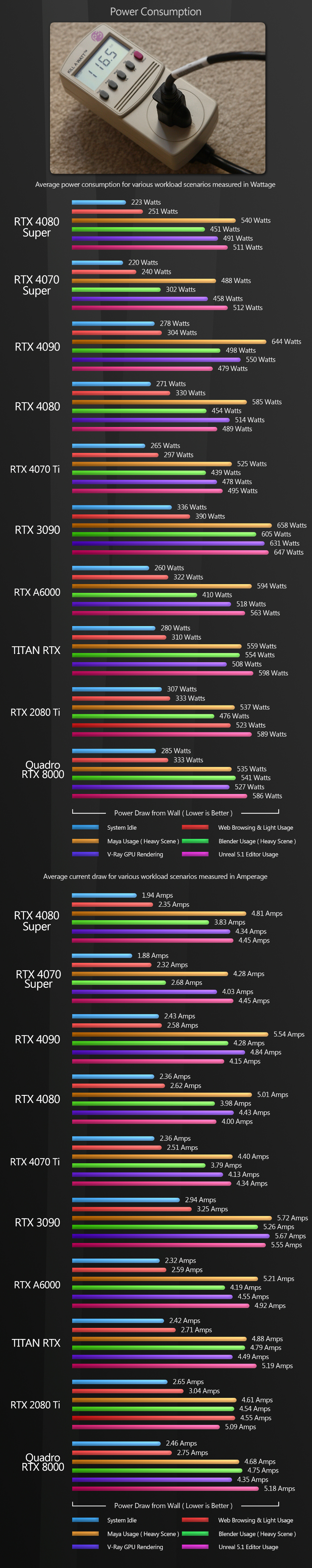

Power consumption

To test the power usage of the GeForce RTX 40 Series, I measured the power consumption of the entire test system at the wall outlet, using a P3 Kill A Watt meter. Since the test machine is a power-hungry Threadripper system, my figures will be higher than those for most DCC workstations.

For this group test, I measured both power and current drawn. Current (amperage) is often overlooked by reviewers, but it can be a critical factor in determining how many machines you can run on a single circuit.

Most US houses run 15A circuits from the main panel, and standard circuit breakers should only be loaded to 80% of their rating for continuous use, so a 15A circuit should not exceed 12A of continuous draw. In my tests, the current drawn by the test system approached 6A when the more power-hungry GPUs were installed. If the wall outlets in your home office are on a single circuit, this could determine whether you can run two workstations simultaneously, particularly once you factor in monitors and lights.
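The 80% rule turns into a quick capacity check. A minimal sketch, assuming a 15A breaker, about 6A per workstation under load (the figure measured above) and a hypothetical 1.5A for monitors and lights. The wattage helper additionally assumes nominal 120 V US mains and a power factor close to 1, as is typical of PSUs with active power factor correction.

```python
import math


def continuous_budget_amps(breaker_amps: float, fraction: float = 0.8) -> float:
    """Continuous load allowed on a circuit under the 80% rule."""
    return breaker_amps * fraction


def max_workstations(breaker_amps: float, amps_per_system: float,
                     other_load_amps: float = 0.0) -> int:
    """Number of workstations that fit once other loads are subtracted."""
    remaining = continuous_budget_amps(breaker_amps) - other_load_amps
    return max(0, math.floor(remaining / amps_per_system))


def approx_watts(amps: float, volts: float = 120.0, power_factor: float = 1.0) -> float:
    """Real power from current, assuming 120 V mains and near-unity power factor."""
    return amps * volts * power_factor


print(max_workstations(15, 6.0))                       # 2: workstations alone
print(max_workstations(15, 6.0, other_load_amps=1.5))  # 1: with monitors and lights
print(approx_watts(6.0))                                # 720.0
```

Two workstations fit exactly on the 12A continuous budget, but any additional load on the same circuit tips it over, which is why monitors and lighting matter in the calculation.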

The original GeForce RTX 40 Series GPUs were a pretty good step up in power efficiency over the GeForce RTX 30 Series, and the new Super Series cards improve efficiency even further.

The GeForce RTX 4080 Super shows a marked decrease in power usage from the original GeForce RTX 4080 in every test apart from Unreal Engine 5.

The GeForce RTX 4070 Super also has lower or equal power usage to the original GeForce RTX 4070 Ti in almost every test: again, the exception being Unreal Engine 5.

Drivers

Finally, a note on the Studio Drivers with which I benchmarked the GeForce RTX GPUs. NVIDIA now offers a choice of Studio or Game Ready Drivers for GeForce cards, recommending Studio Drivers for DCC work and Game Ready Drivers for gaming. In my tests, I found no discernible difference between them in terms of performance or display quality. My understanding is that the Studio Drivers are designed for stability in DCC applications, and while I haven’t had any real issues when running DCC software on Game Ready Drivers, if you are using your system primarily for content creation, there is no reason not to use the Studio Drivers.


Verdict

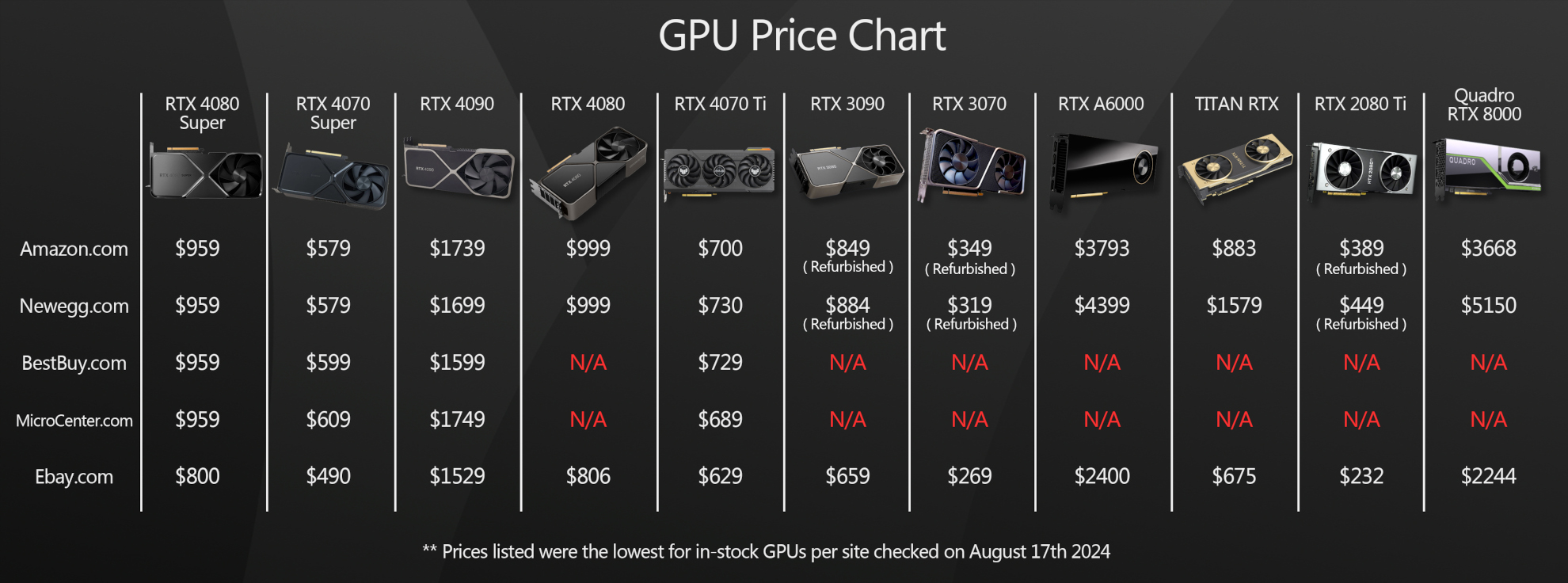

Both the GeForce RTX 4080 Super and GeForce RTX 4070 Super are marked improvements over their predecessors.

The GeForce RTX 4080 Super is only slightly faster than the original GeForce RTX 4080, but it also uses a little less power, and its biggest draw is its price: at $999, its MSRP is $200 lower.

The GeForce RTX 4070 Super surprised me by how well it performed: in my tests, it matches or slightly exceeds the original GeForce RTX 4070 Ti, and while I didn’t test a vanilla GeForce RTX 4070, I can only assume that the differences would be even more significant. The 4070 Super is smaller than the 4070 Ti and uses less power, and at $599, its launch price is $200 lower.
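Since the question is which card offers the best bang for your buck, the price cuts can be folded into a simple performance-per-dollar ratio. This sketch assumes a 4% average uplift for the 4080 Super (the midpoint of the 3-5% viewport figure) and treats the 4070 Super as equal to the 4070 Ti. These are simplifications of the results above, not additional benchmarks. The 4080 and 4070 Ti prices of $1,199 and $799 follow from the $200 differences quoted.

```python
def value_gain(rel_perf: float, new_price: float, old_price: float) -> float:
    """Performance-per-dollar of a new card relative to the card it replaces.

    rel_perf is the new card's performance relative to the old card (1.0 = equal).
    """
    return rel_perf * old_price / new_price


# RTX 4080 Super vs RTX 4080: ~4% faster (assumed midpoint), $999 vs $1,199
print(f"4080 Super: {value_gain(1.04, 999, 1199):.2f}x perf per dollar")  # 1.25x
# RTX 4070 Super vs RTX 4070 Ti: roughly equal performance, $599 vs $799
print(f"4070 Super: {value_gain(1.00, 599, 799):.2f}x perf per dollar")   # 1.33x
```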

My only complaint is GPU memory. Both of the Super cards have the same memory capacity as their predecessors, and – as these group tests have shown many times over – memory capacity is very important for DCC work. I would have liked to see the GeForce RTX 4080 Super getting a bump up from 16 GB to 20 GB, even if it meant keeping the original MSRP of $1,199. I realize that gaming is still the priority market for these GPUs, but choice always benefits the consumer.

Overall conclusion

Both the GeForce RTX 4080 Super and GeForce RTX 4070 Super are great GPUs for content creation. They perform extremely well in most of my tests, only struggling in those that require a lot of VRAM.

But despite this, if you have the budget for it, my recommendation for heavy DCC work would still be the original GeForce RTX 4090. Its super-fast AD102 GPU – and, more importantly, its 24 GB of GPU memory – will tackle just about anything you can throw at it.

Lastly, I want to thank you for taking the time to stop by. I hope this review has been helpful, and if you have any questions or suggestions, let me know at the email address below.

Links

Read more about the GeForce RTX 40 Series GPUs on NVIDIA’s website

About the reviewer

Jason Lewis is a Senior Hard Surface Artist at Lightspeed LA, a Tencent America development group, and CG Channel’s regular hardware reviewer. You can see more of his work in his ArtStation gallery.

Contact Jason at jason [at] cgchannel [dot] com.

Acknowledgements

Stephenie Ngo of NVIDIA

Chloe Larby of Grithaus Agency

Stephen G Wells

Adam Hernandez
