Group test: Nvidia GeForce RTX 40 Series GPUs

Which GeForce RTX 40 Series GPU offers the best bang for your buck for CG work? To find out, Jason Lewis puts Nvidia’s new consumer GPUs – and several older cards – through an exhaustive set of real-world tests.

Welcome to another group test. In by far the biggest GPU review I have done for CG Channel, we will be taking a look at three cards from the GeForce RTX 40 Series, Nvidia’s current range of consumer GPUs.

Nvidia claims significant performance improvements for the RTX 40 Series GPUs over their predecessors, and many tests have shown that this is the case for gaming. But what about digital content creation?

That is what this article aims to find out. We will be pitting the GeForce RTX 40 Series cards against older Nvidia GPUs, including those from its RTX 30 Series and RTX 20 Series, in a series of real-world DCC tests.


Which GPUs are included in the group test?

Since the end of 2022, Nvidia has been pushing out its GeForce RTX 40 Series of consumer GPUs, based on its Ada Lovelace architecture.

This is its current generation of GPUs, meant to replace the previous GeForce RTX 30 Series, based on the Ampere architecture, and GeForce RTX 20 Series, based on the Turing architecture.

In this review, we will be looking at three cards from the GeForce RTX 40 Series: the high-end GeForce RTX 4090, 4080 and 4070 Ti. (At the time the benchmark testing was done, the mid-range GeForce RTX 4070 and GeForce RTX 4060 Family had not been released.)

We will be testing them against two cards from the GeForce RTX 30 Series, the GeForce RTX 3090 and GeForce RTX 3070, and one from the GeForce RTX 20 Series, the GeForce RTX 2080 Ti.

We will also be testing three of Nvidia’s workstation cards: the Ampere-generation RTX A6000, and the Turing-generation Titan RTX and Quadro RTX 8000.

That makes the full line-up of GPUs on test as follows:

Nvidia RTX GPUs on test

| Card | Architecture | Released | Type |
|---|---|---|---|
| GeForce RTX 4090 | Ada Lovelace | 2022 | Consumer |
| GeForce RTX 4080 | Ada Lovelace | 2022 | Consumer |
| GeForce RTX 4070 Ti | Ada Lovelace | 2023 | Consumer |
| GeForce RTX 3090 | Ampere | 2020 | Consumer |
| GeForce RTX 3070 | Ampere | 2020 | Consumer |
| RTX A6000 | Ampere | 2020 | Workstation |
| Titan RTX | Turing | 2018 | Workstation |
| GeForce RTX 2080 Ti | Turing | 2018 | Consumer |
| Quadro RTX 8000 | Turing | 2018 | Workstation |

Technology focus: GPU architectures and APIs

Before I get to the review itself, here is a quick recap of some of the technical terms that you will encounter in it. If you’re already familiar with them, you may want to skip ahead.

Like Nvidia’s earlier Ampere and Turing GPUs, the current Ada Lovelace GPU architecture features three types of processor cores: CUDA cores, designed for rasterization and general GPU computing; Tensor cores, designed for machine learning operations; and RT cores, designed to accelerate ray tracing.

In order to take advantage of the RT cores, software has to access them through a graphics API: in the case of the applications featured in this review, either DXR (DirectX Raytracing), or Nvidia’s own OptiX.

In many renderers, the OptiX rendering backend is provided as an alternative to an older backend based on Nvidia’s CUDA API. The CUDA backends work with a wider range of Nvidia GPUs and software applications, but enabling OptiX usually improves performance.

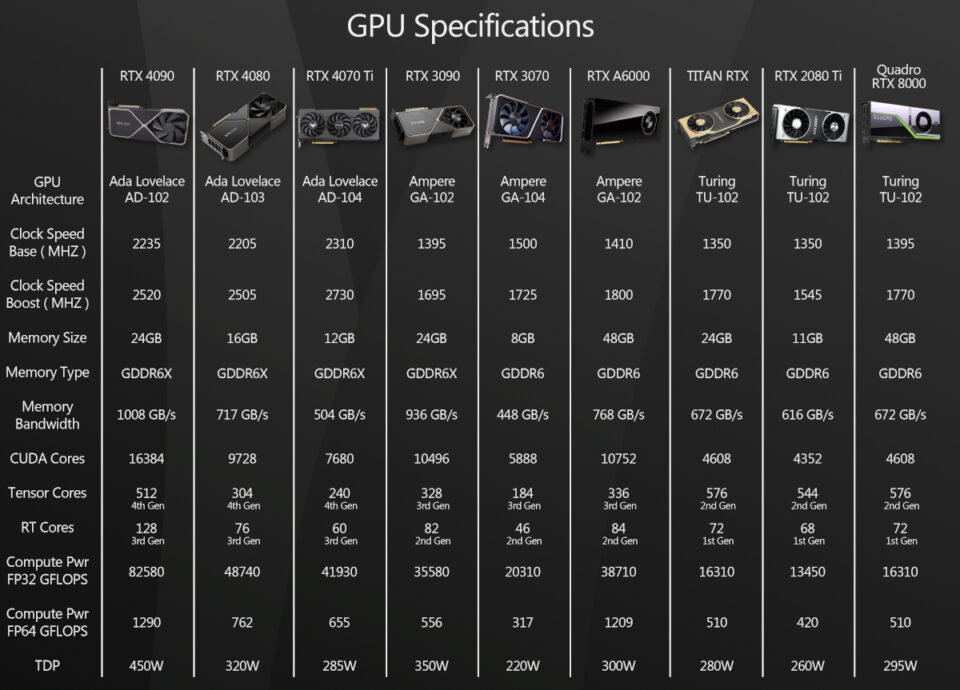

Specifications

You can find articles on other tech websites about the Ada Lovelace architecture itself, so I won’t delve into it here. Instead, here is an overview of the three GeForce RTX 40 Series GPUs on test.

First, the GeForce RTX 4090. Meant as a direct replacement for the Ampere generation’s RTX 3090 and 3090 Ti, the RTX 4090 is currently Nvidia’s fastest consumer GPU. It uses Nvidia’s top-tier AD102 GPU, with 16,384 CUDA cores, 512 fourth-generation Tensor cores and 128 third-generation RT cores, running at a base clock speed of 2.23 GHz and a boost clock speed of 2.52 GHz. Combine that with 24 GB of 21 Gbps GDDR6X memory on a 384-bit bus, and you get 82.6 TFlops of single precision (FP32) compute power, 1.29 TFlops of double precision (FP64) compute power, and a memory bandwidth of 1,008 GB/s.
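The headline figures above can be reproduced from the core count, clock speed and memory specs. The sketch below is a minimal illustration that assumes the usual spec-sheet conventions: each CUDA core completes one fused multiply-add (counted as two floating-point operations) per clock at the boost clock, FP64 runs at 1/64 of the FP32 rate on GeForce Ada Lovelace cards, and memory bandwidth is the per-pin data rate (the "21" figure, in Gbit/s) multiplied by the bus width and divided by eight bits per byte. The function name `peak_specs` is hypothetical.

```python
def peak_specs(cuda_cores: int, boost_ghz: float, data_rate_gbps: float, bus_bits: int) -> dict:
    """Theoretical peak figures, assuming standard spec-sheet conventions."""
    # One FMA per core per clock = 2 FLOPs; cores * GHz gives GFLOPs, /1000 gives TFLOPs.
    fp32_tflops = cuda_cores * 2 * boost_ghz / 1000
    # Assumed 1:64 FP64:FP32 ratio for GeForce Ada Lovelace GPUs.
    fp64_tflops = fp32_tflops / 64
    # Per-pin data rate (Gbit/s) * bus width (bits) / 8 bits per byte -> GB/s.
    bandwidth_gbs = data_rate_gbps * bus_bits / 8
    return {
        "fp32_tflops": round(fp32_tflops, 1),
        "fp64_tflops": round(fp64_tflops, 2),
        "bandwidth_gbs": round(bandwidth_gbs),
    }

# GeForce RTX 4090, using the figures quoted above.
print(peak_specs(16_384, 2.52, 21.0, 384))
# → {'fp32_tflops': 82.6, 'fp64_tflops': 1.29, 'bandwidth_gbs': 1008}
```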

The RTX 4090 I tested is a Founders Edition card, and its form factor is nearly identical to the RTX 3090 Founders Edition. Like its predecessor, it’s a massive card, taking up three entire slots, and weighing in at 77.2 oz (2.2 kg): heavy enough that I bought a GPU brace to hold up the back end of the card.

At standard clock speeds, the RTX 4090 has a TDP of 450W (Nvidia says that it can consume 600W if pushed beyond factory defaults), and needs at least an 850W PSU. It uses the new 16-pin 12VHPWR power connector, so for anyone whose PSU lacks one, Nvidia supplies a 16-pin to 4 x 8-pin PCIe adapter.

On the RTX 4090’s release, some users reported issues with cables melting, but later research from tech websites suggested that the most likely cause was simply user error. Having double- and triple-checked that my own test card’s connectors were properly seated, I am happy to report that I have not had any problems.

The second new card is the GeForce RTX 4080. Although it’s still an enthusiast-class GPU, it’s meant to be a more mainstream card than the RTX 4090, and takes the place of the previous-generation RTX 3080 and 3080 Ti. The RTX 4080 comes equipped with an AD103 GPU with 9,728 CUDA cores, 304 fourth-generation Tensor cores, and 76 third-generation RT cores. Like the 4090, it uses GDDR6X memory, but its frame buffer gets cut down from 24 GB to 16 GB, and it gets a smaller 256-bit bus.

The RTX 4080 also runs slightly slower, with a base clock speed of 2.21 GHz, and a boost clock speed of 2.51 GHz. Put all those numbers together and you get 48.7 TFlops of single precision compute power, 0.76 TFlops of double precision compute power, and a memory bandwidth of 717 GB/s.
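The RTX 4080’s memory data rate isn’t listed above, but it can be backed out from the quoted bandwidth and bus width. This is a minimal sketch assuming the standard GDDR bandwidth formula (per-pin data rate times bus width, divided by 8); `implied_data_rate_gbps` is a hypothetical helper name.

```python
def implied_data_rate_gbps(bandwidth_gbs: float, bus_bits: int) -> float:
    """Per-pin memory data rate (Gbit/s) implied by a bandwidth and bus width."""
    # bandwidth (GB/s) = data rate (Gbit/s per pin) * bus width (bits) / 8, rearranged.
    return bandwidth_gbs * 8 / bus_bits

print(round(implied_data_rate_gbps(717, 256), 1))  # → 22.4
# Share of the RTX 4090's 1,008 GB/s that the RTX 4080 retains.
print(round(717 / 1008, 2))  # → 0.71
```

The result, about 22.4 Gbps per pin, suggests the RTX 4080’s memory runs slightly faster than the RTX 4090’s 21 Gbps, which partly offsets its narrower bus: it keeps roughly 71% of the RTX 4090’s bandwidth with two-thirds of the bus width.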

The RTX 4080 on test was also a Founders Edition card. It’s nearly identical in size to the RTX 4090 Founders Edition but, at 75.1 oz (2.1 kg), very slightly lighter. At factory speeds, it has a TDP of 320W and requires at least a 750W PSU. The 4080 uses the same 16-pin 12VHPWR connector as the RTX 4090, the difference being that the included adapter is a 16-pin to 3 x 8-pin PCIe model.

The final new card is the GeForce RTX 4070 Ti. It’s an interesting product, as it was originally due to be a second RTX 4080 SKU, but following complaints from users, Nvidia rebranded it and reduced the price slightly.

The RTX 4070 Ti has an AD104 GPU with 7,680 CUDA cores, 240 fourth-generation Tensor cores, and 60 third-generation RT cores. It also uses GDDR6X memory, and further shrinks the frame buffer to 12 GB on a 192-bit bus. Its clock speeds are actually higher than those of either the RTX 4080 or the RTX 4090: the Asus card on test has a base clock speed of 2.31 GHz and a boost clock speed of 2.73 GHz, higher than Nvidia’s reference boost clock speed of 2.61 GHz. At Nvidia’s reference clock speeds, it provides 40.1 TFlops of single precision compute power and 0.63 TFlops of double precision compute power, and it has a memory bandwidth of 504 GB/s.
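The 40.1 TFlops figure matches Nvidia’s reference boost clock rather than the Asus card’s higher factory boost. A minimal sketch, assuming the usual two-operations-per-core-per-clock convention used on spec sheets, shows the theoretical gap; real clocks under load vary, so treat these as peak numbers only. The helper name is hypothetical.

```python
def fp32_tflops(cuda_cores: int, boost_ghz: float) -> float:
    # 2 FLOPs (one fused multiply-add) per CUDA core per clock, at the boost clock.
    return cuda_cores * 2 * boost_ghz / 1000

reference = fp32_tflops(7_680, 2.61)  # Nvidia reference boost clock
asus_tuf = fp32_tflops(7_680, 2.73)   # factory boost clock of the Asus card on test
print(round(reference, 1), round(asus_tuf, 1))  # → 40.1 41.9
print(f"{asus_tuf / reference - 1:.1%}")        # → 4.6%
```

At the Asus card’s 2.73 GHz boost clock, the theoretical FP32 peak rises to roughly 41.9 TFlops, about 4.6% above the reference figure.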

Another interesting aspect of the 4070 Ti is that there is no Founders Edition model. As a result, I tested an Asus TUF Gaming edition card. Like the RTX 4090 and RTX 4080, the Asus RTX 4070 Ti is a three-slot design, although the cooling arrangement is very different from the two Founders Edition cards, with three axial fans pulling air from the same direction, rather than a two-fan design.

Despite the differences in board layout, the Asus RTX 4070 Ti is roughly the same size as the RTX 4090 and RTX 4080, but significantly lighter, at 47.8 oz (1.4 kg). At factory speeds, it has a TDP of 285W, with Asus recommending at least a 750W PSU.


Testing procedure

For the test machine, I am still using the dependable Xidax AMD Threadripper 3990X system that I reviewed in 2020. Despite the fact that it is now three years old, it is still an extremely capable system and doesn’t appear to be a bottleneck for any of the GPUs tested.

The current version of the test system has the following specs:

CPU: AMD Threadripper 3990X

Motherboard: MSI Creator TRX40

RAM: 64 GB of 3,600 MHz Corsair Dominator DDR4

Storage: 2 TB Samsung 970 EVO Plus NVMe SSD / 1 TB WD Black NVMe SSD / 4 TB HGST 7,200 rpm HDD

PSU: 1300W Seasonic Platinum

OS: Windows 11 Pro for Workstations

The only GPU not tested on the Threadripper system was the GeForce RTX 3070. I no longer have access to a desktop RTX 3070, so testing was done using the mobile RTX 3070 in the Asus ProArt Studiobook 16 laptop from a recent review. In that review, I determined that across a range of tests, the mobile RTX 3070 was around 10% slower than its desktop counterpart, so here, I added 10% to the scores to approximate the performance of a desktop RTX 3070 card. It’s not an ideal methodology, but it gets us into the right ballpark.
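When applying a fixed correction like this, the direction matters: for frame rates and benchmark points, a faster card scores higher, while for render and simulation times, it scores lower. The sketch below assumes that distinction; the review doesn’t say exactly how time-based results were adjusted, and `desktop_estimate` is a hypothetical helper.

```python
def desktop_estimate(mobile_score: float, higher_is_better: bool, uplift: float = 0.10) -> float:
    """Approximate a desktop RTX 3070 result from a mobile RTX 3070 result.

    uplift is the assumed desktop advantage (about 10% in the review).
    """
    if higher_is_better:
        # Frame rates, benchmark points: the desktop card should score higher.
        return mobile_score * (1 + uplift)
    # Render or simulation times: the desktop card should take less time.
    return mobile_score / (1 + uplift)

print(desktop_estimate(50.0, higher_is_better=True))    # hypothetical 50 fps viewport score
print(desktop_estimate(110.0, higher_is_better=False))  # hypothetical 110 s render time
```

Depending on how "10% slower" is defined, the exact factor could be 1.10 or 1/0.9 (about 1.11); the difference is small next to the uncertainty of the approximation itself.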

For testing, I used the following applications:

Viewport performance

3ds Max 2023, Blender 3.3.1, Chaos Vantage 1.8.2, D5 Render 2.3.4, Fusion 360, Maya 2023, Modo 16.0v2, Omniverse Create 2022.3.1, SolidWorks 2022, Substance 3D Painter 8.2.0, Unigine Community 2.16.0.1, Unity 2022.1, Unreal Engine 5.1.0, 5.0.3 and 4.27.2

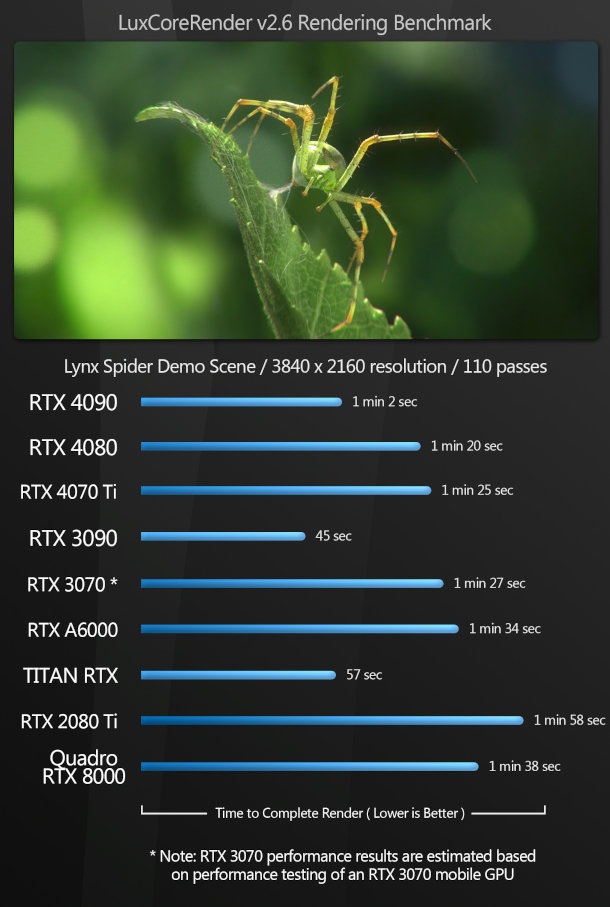

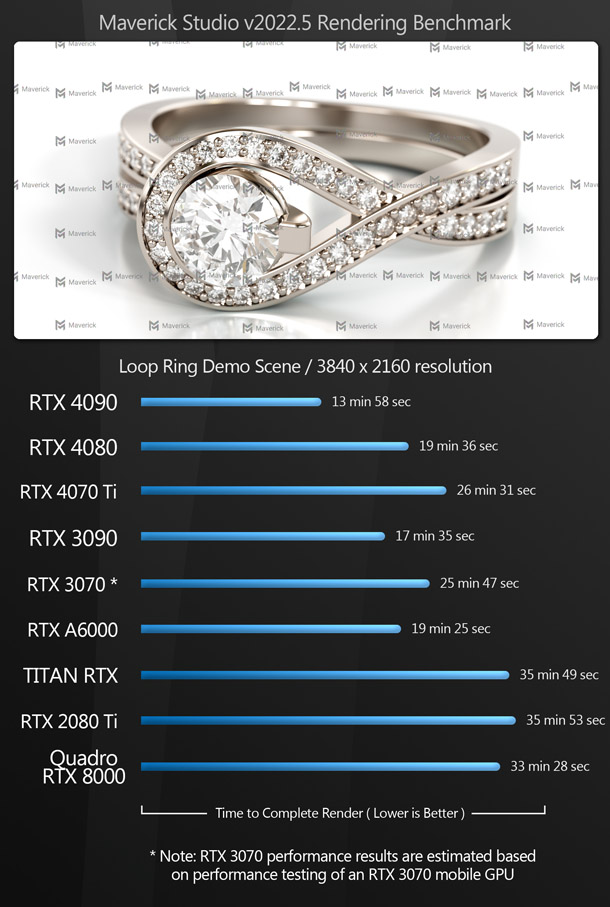

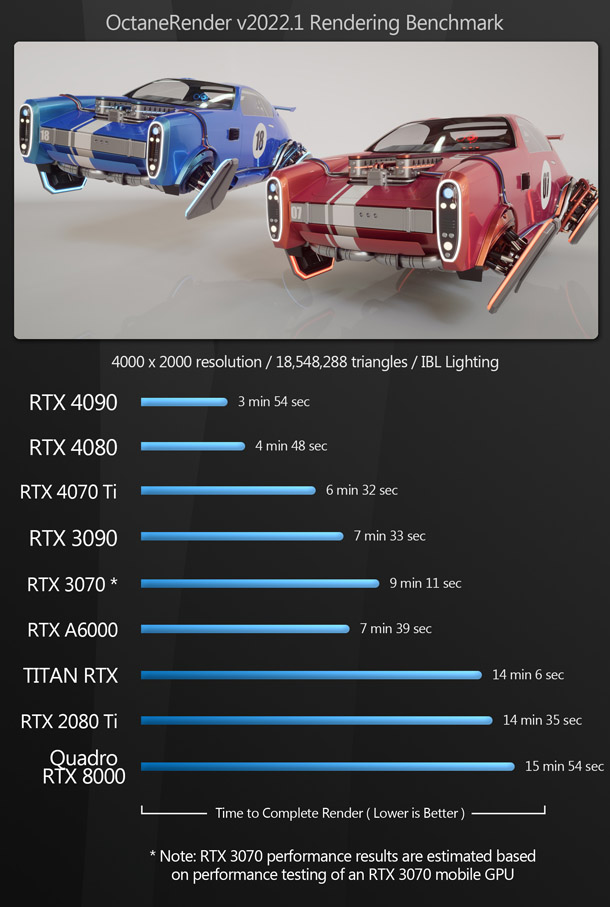

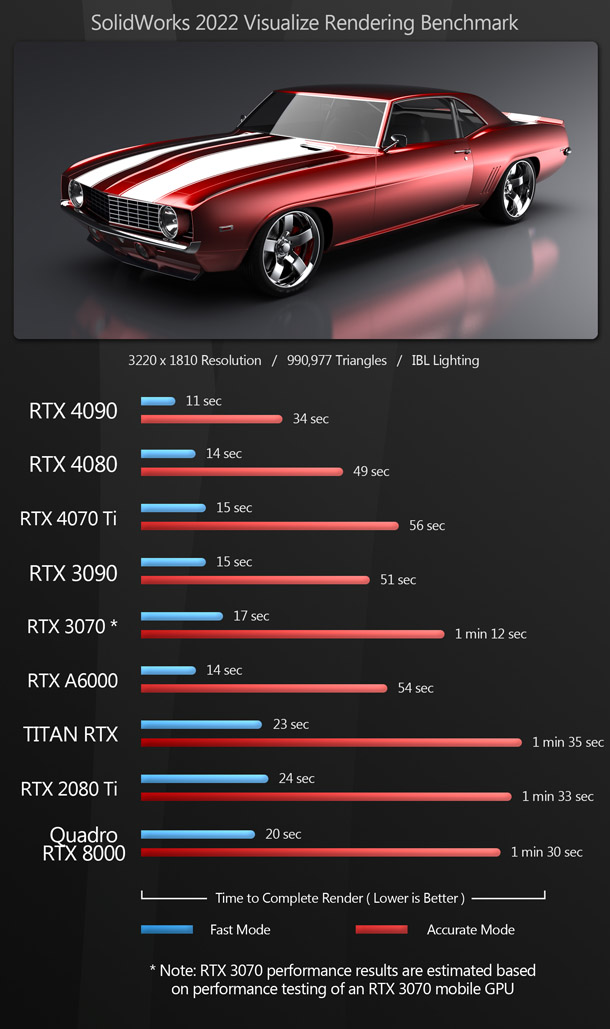

Rendering

Arnold for Maya 5.1.0, Blender 3.3 (Cycles renderer), KeyShot 11.2.0, LuxCoreRender 2.6, Maverick Studio 2022.5, OctaneRender 2022.1 Standalone, Redshift 3.5.12 for 3ds Max, SolidWorks Visualize 2022, V-Ray GPU 6 for 3ds Max Hotfix 3

Other benchmarks

Axiom 3.0.1 for Houdini 19.5, Cinema 4D v2023.1 (Pyro solver), Metashape 1.7.4, Premiere Pro 2022

Synthetic benchmarks

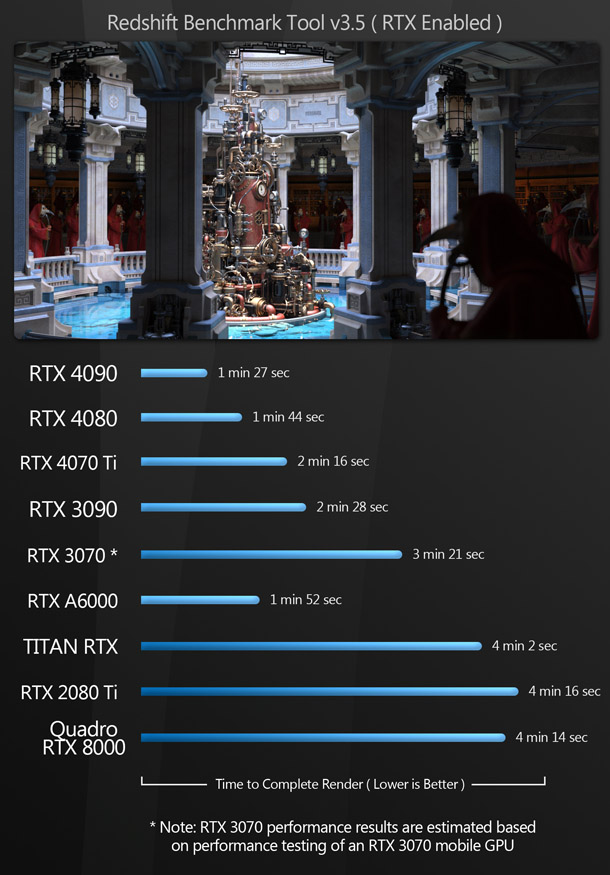

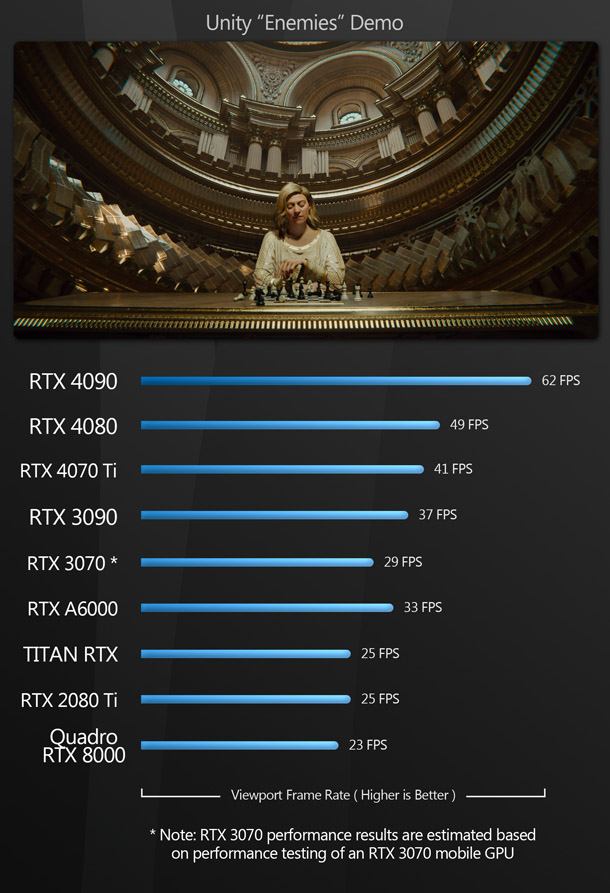

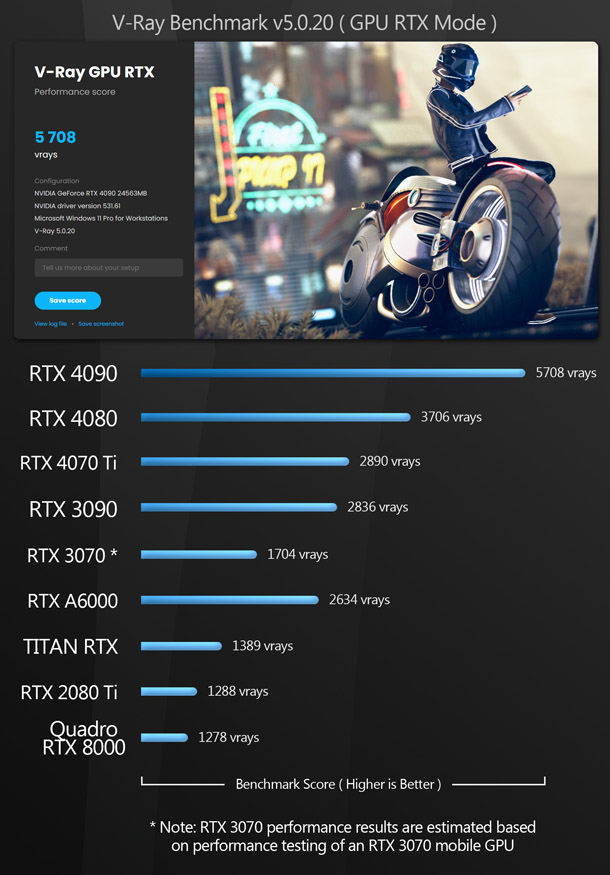

3DMark Speed Way 1.0 and Port Royal 1.2, CryEngine Neon Noir Ray Tracing Benchmark, OctaneBench 2020.1.5, Redshift Benchmark v3.5, Unity Enemies Demo, V-Ray Benchmark v5.02.01

All benchmarking was done with Nvidia Studio Drivers installed for the GeForce RTX GPUs and Enterprise Drivers installed for the RTX A6000 and Quadro RTX 8000. You can find a more detailed discussion of the drivers used later in the article.

In the viewport and editing benchmarks, the frame rate scores represent the figures attained when manipulating the 3D assets shown, averaged over five testing sessions to smooth out run-to-run variation. In all of the rendering benchmarks, the CPU was disabled as a render device, so only the GPU was used for computing.
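Averaging several sessions is straightforward; it can also be worth recording how much the sessions differ from one another, since a lead smaller than that spread may not be meaningful. This is a minimal sketch using Python’s standard `statistics` module; reporting the spread is a suggested addition, not something the review says it did, and the frame rates below are hypothetical.

```python
from statistics import mean, stdev

def summarize_runs(fps_runs: list[float]) -> tuple[float, float]:
    """Return the mean frame rate and the run-to-run spread as a percentage of the mean."""
    avg = mean(fps_runs)
    # Sample standard deviation needs at least two runs.
    spread_pct = 100 * stdev(fps_runs) / avg if len(fps_runs) > 1 else 0.0
    return avg, spread_pct

# Five hypothetical viewport sessions for one card.
avg, spread = summarize_runs([60.0, 62.0, 58.0, 61.0, 59.0])
print(f"{avg:.1f} fps, +/- {spread:.1f}%")  # → 60.0 fps, +/- 2.6%
```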

This time, testing was done on a proper productivity monitor setup, consisting of a pair of 27″ 4K monitors running at 3,840 x 2,160px and a 34″ widescreen display running at 3,440 x 1,440px. All three displays had a refresh rate of 144Hz. When testing viewport performance, the software viewport was constrained to the primary display (one of the 27″ monitors): no spanning across multiple displays was permitted.

Benchmark results

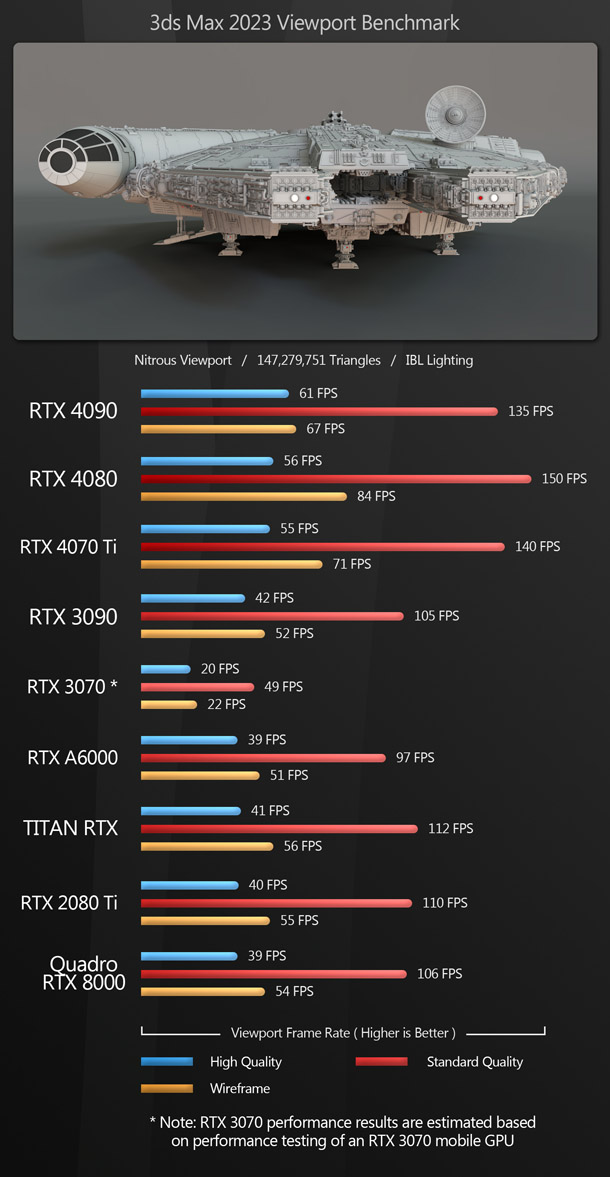

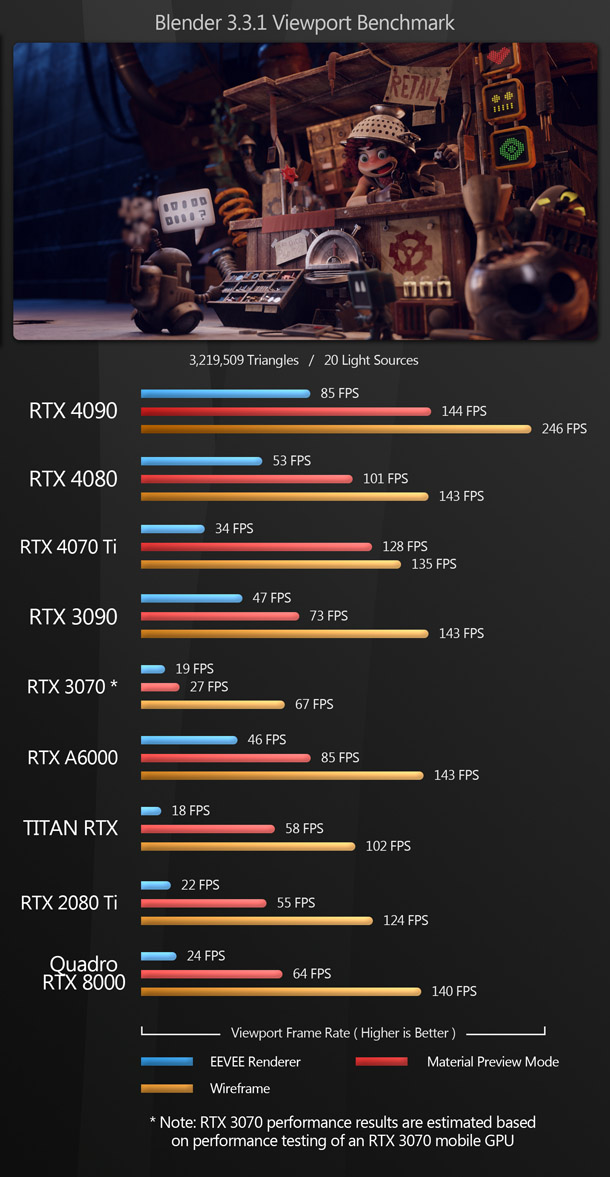

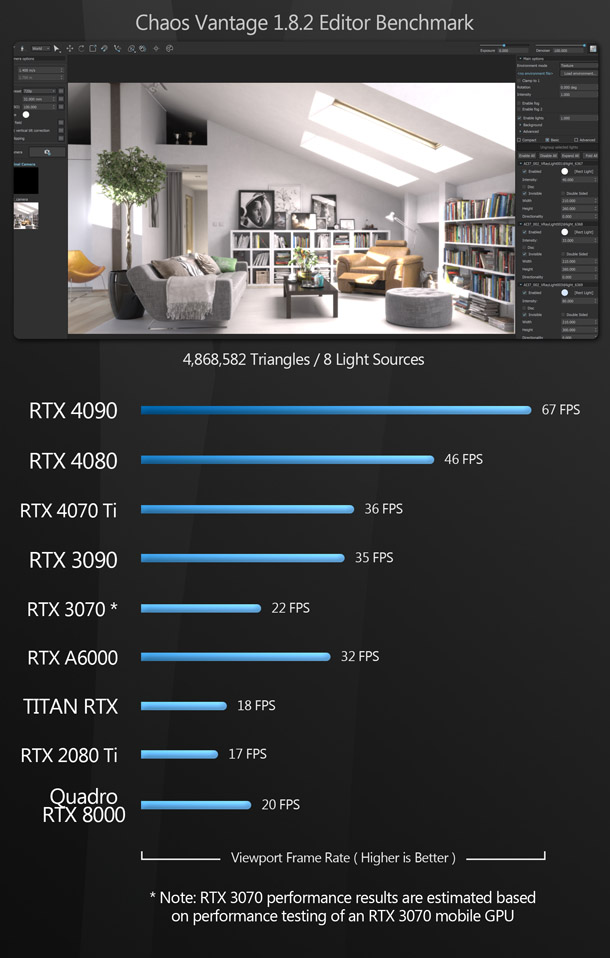

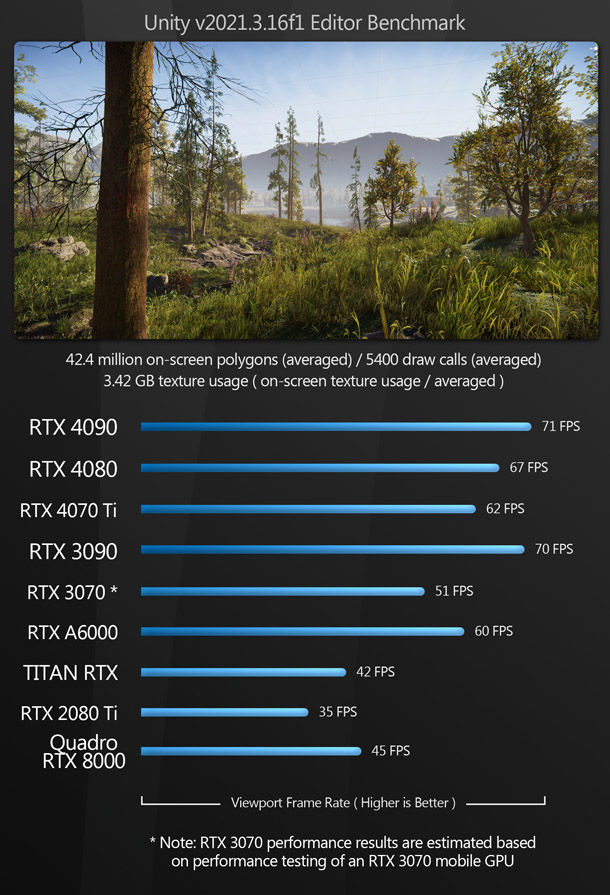

Viewport performance

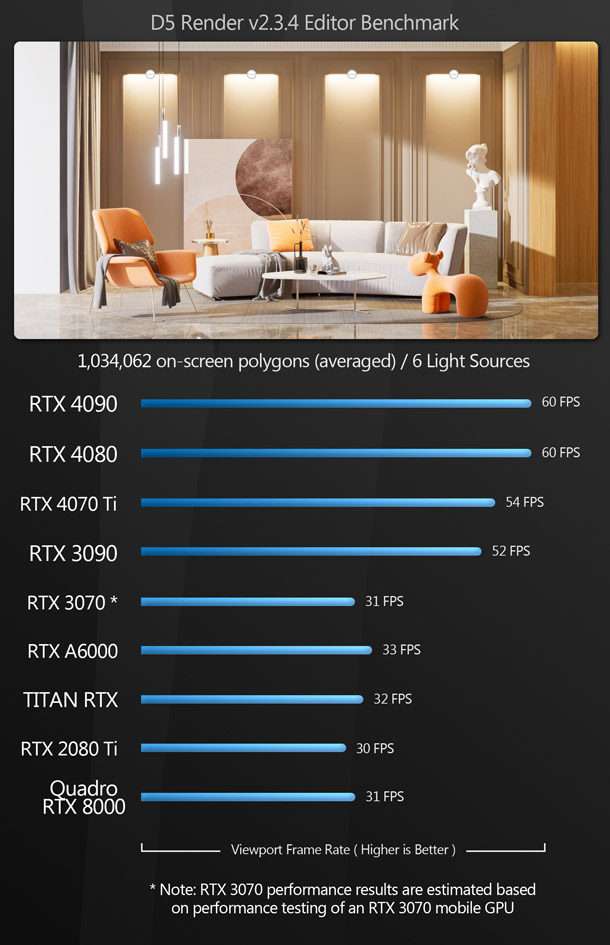

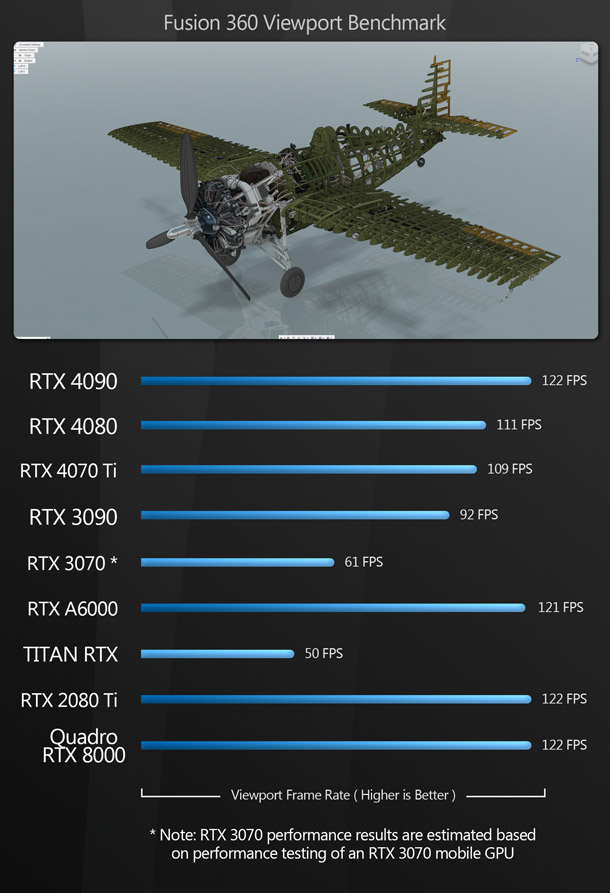

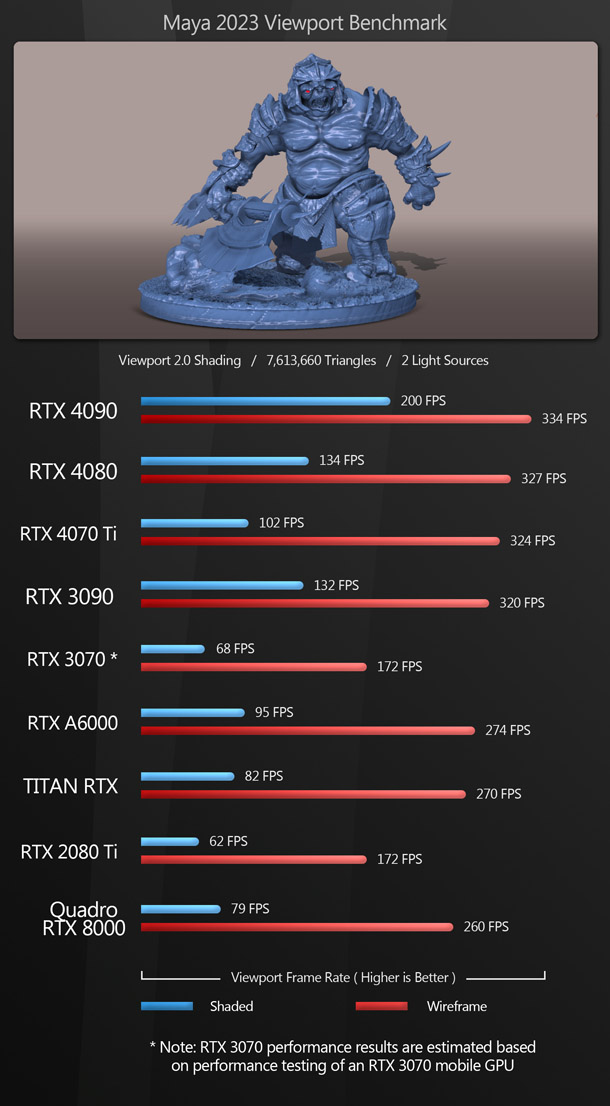

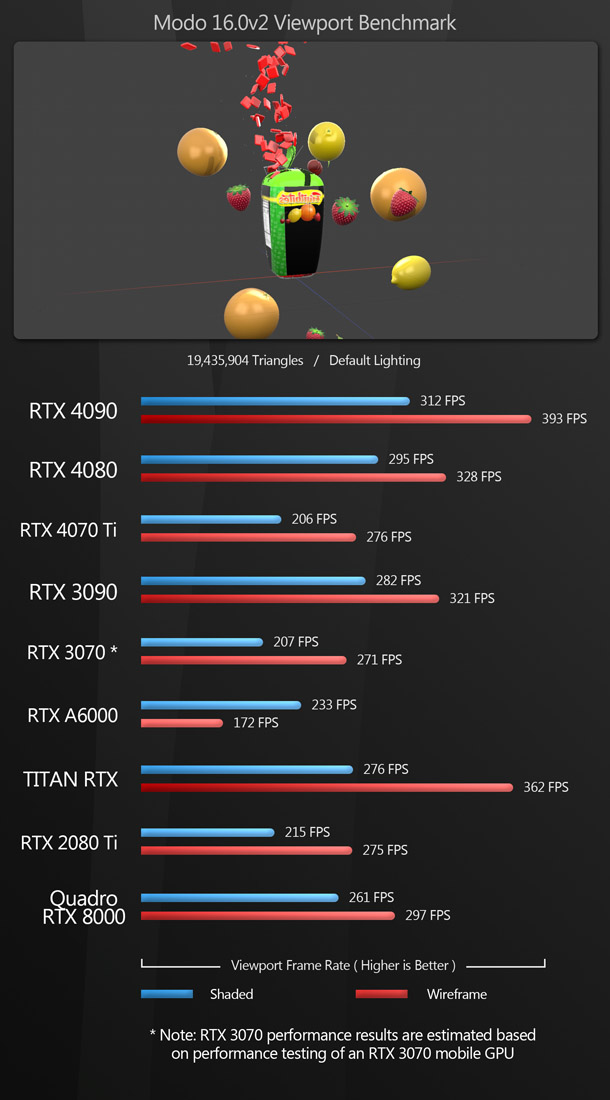

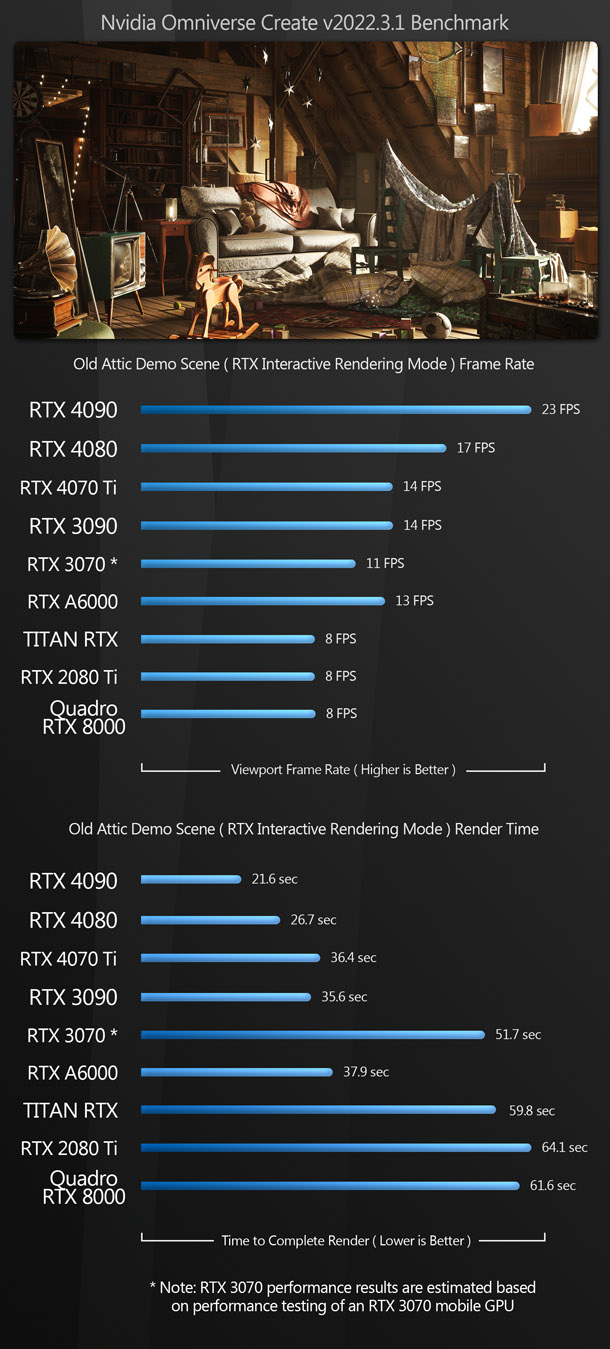

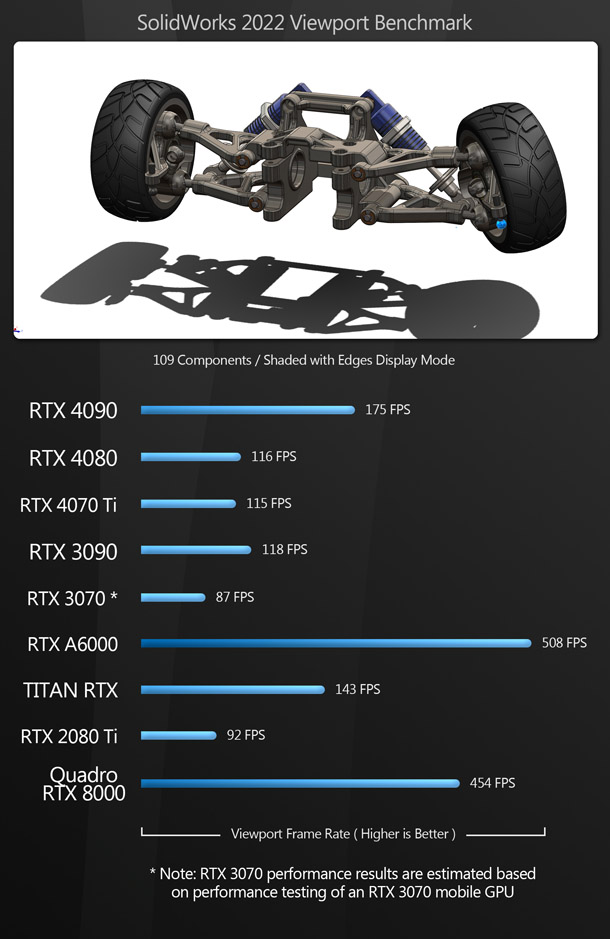

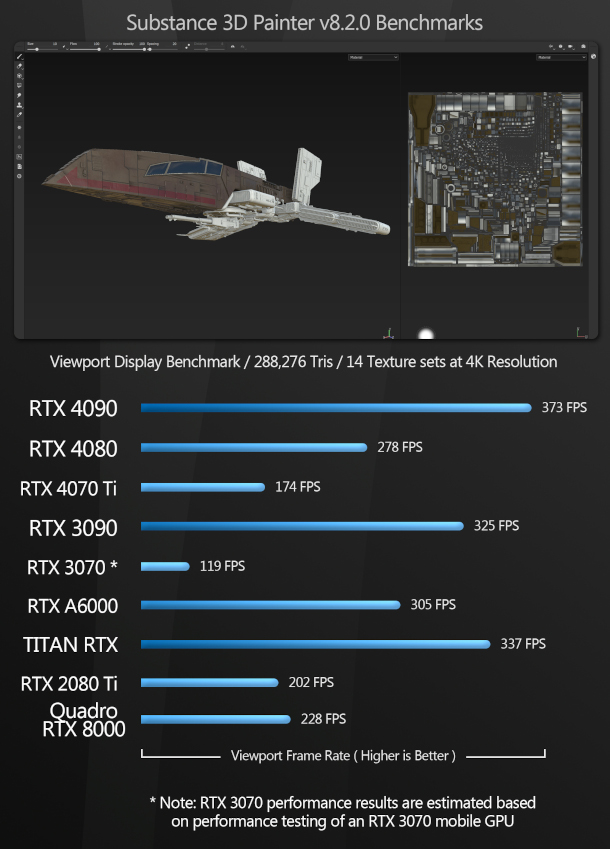

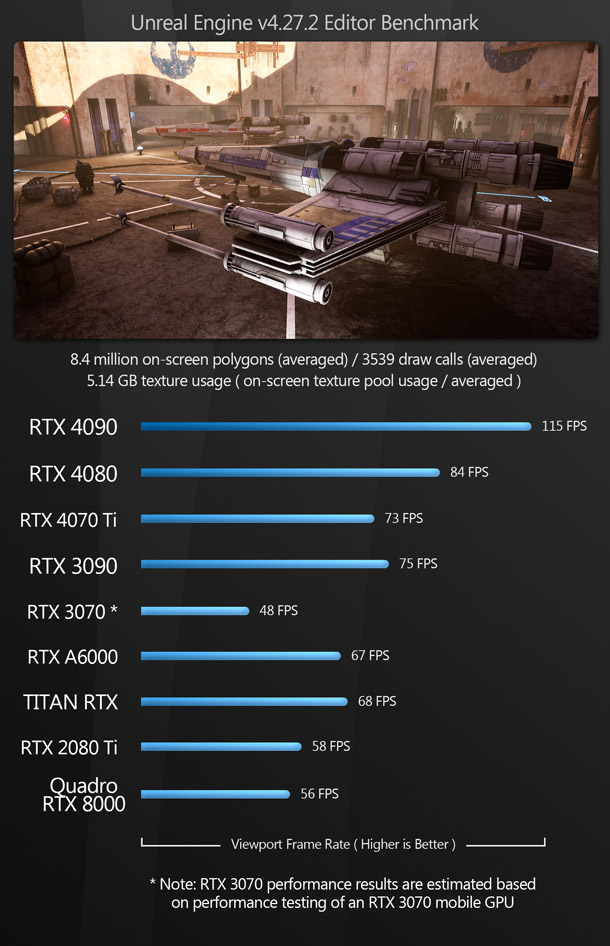

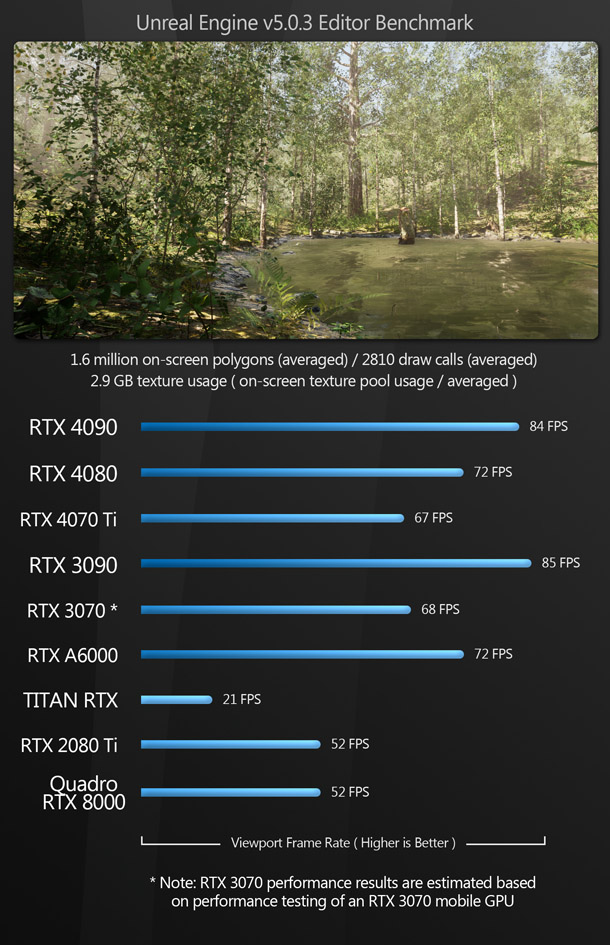

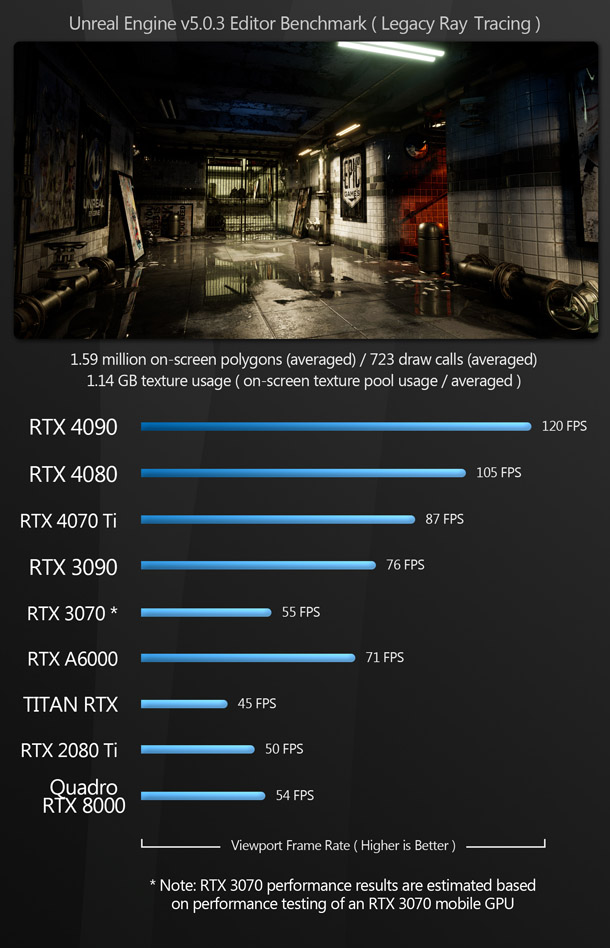

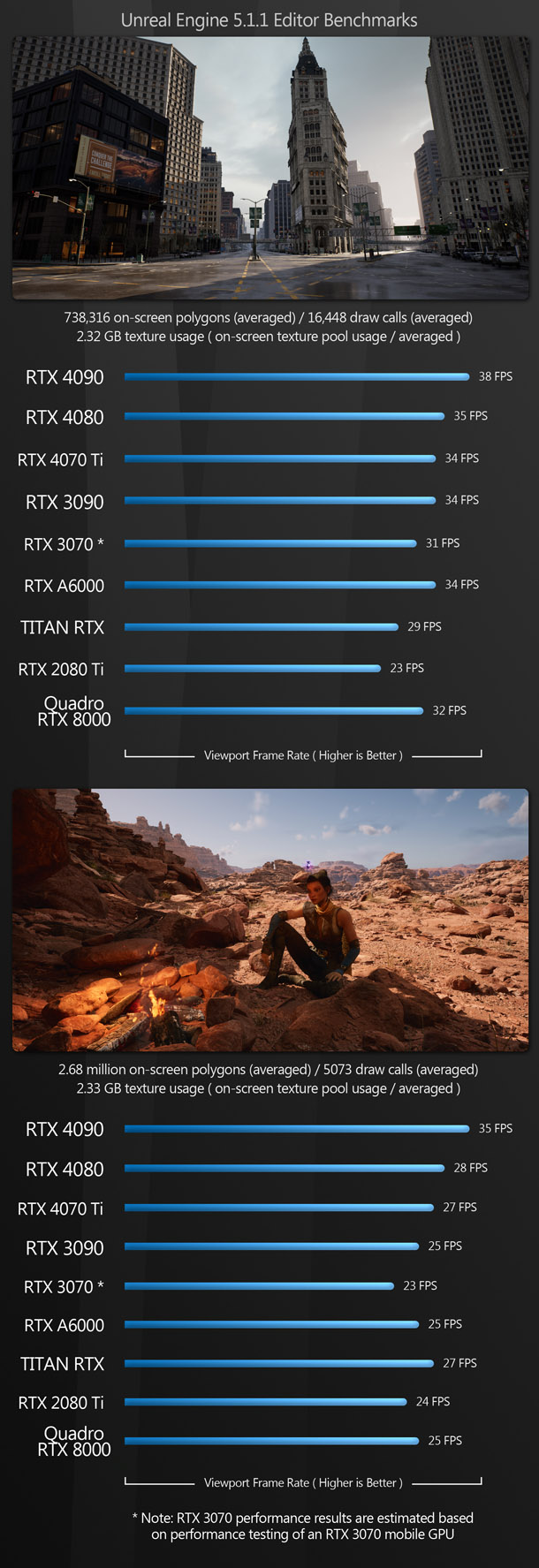

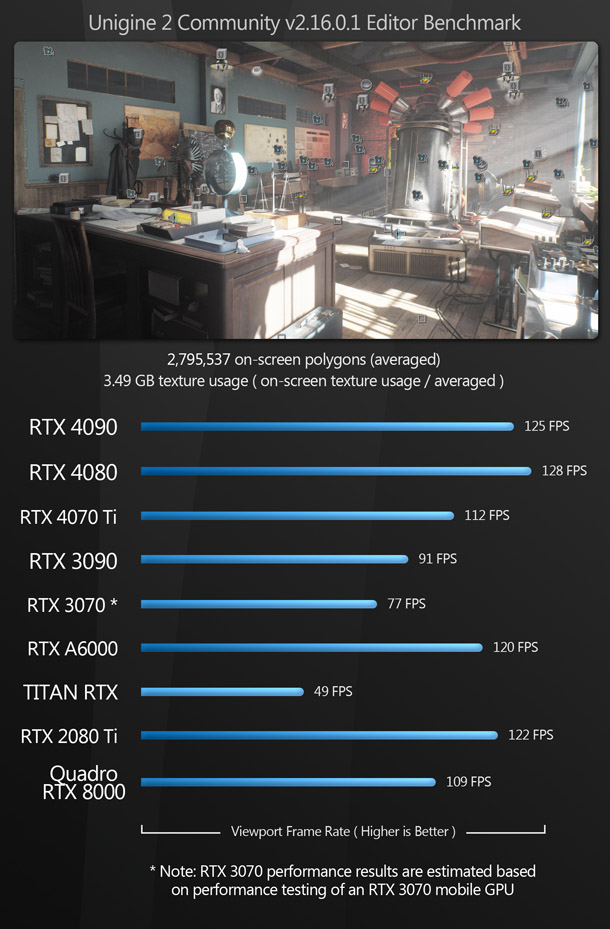

The viewport benchmarks include a number of key DCC applications – general-purpose 3D software like 3ds Max, Blender and Maya, more specialist tools like Substance 3D Painter, CAD packages like SolidWorks and Fusion 360, and real-time 3D applications like D5 Render, Unity and Unreal Engine.

As you might expect, the GeForce RTX 4090 takes the lead in almost all of the viewport tests, with the other GeForce RTX 40 Series GPUs close behind, but there are a few anomalies worth mentioning.

First, the CAD packages, SolidWorks and Fusion 360. In the SolidWorks benchmark, both the previous-generation RTX A6000 and the two-generations-old Quadro RTX 8000 beat all of the GeForce GPUs on test, suggesting that SolidWorks prefers Nvidia’s professional drivers. Even the Turing-generation Titan RTX comes pretty close to the performance of the GeForce RTX 4090.

With Fusion 360, the pattern is similar, with the RTX A6000 and Quadro RTX 8000 matching the GeForce RTX 4090 and beating all of the other GPUs, although here, the Titan RTX falls a long way behind.

The other anomaly is Unreal Engine 5.1. I used several Unreal scenes for benchmarking. On the two most demanding – the city scene and the Valley of the Ancients project – the GeForce RTX 40 Series GPUs had the highest frame rates, but only by tiny margins. On less taxing scenes, the margins were much larger.

Digging deeper, I discovered that when running the two most complex scenes, GPU usage in the Unreal Editor was very low: around 30-40% for the GeForce RTX 40 Series GPUs, and slightly higher for the 30 Series and 20 Series. CPU usage, memory usage and disk I/O were nowhere close to their maximums, so there was no obvious bottleneck elsewhere in the system. My suspicion is that this low GPU usage may be the result of new features in Unreal Engine 5 – particularly Nanite and World Partition – reducing the load on the GPU.
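The review doesn’t say which tool was used to read GPU usage (Windows Task Manager would show it, for example). One way to log it is `nvidia-smi`, which ships with Nvidia’s drivers: querying `--query-gpu=utilization.gpu,memory.used` with `--format=csv,noheader,nounits` prints one comma-separated line per GPU, and adding `-l 1` repeats the query every second. The sketch below parses that output; the helper names are hypothetical.

```python
import subprocess

# nvidia-smi query: one "utilization, memory used" line per GPU, no header or units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]

def parse_samples(text: str) -> list[tuple[int, int]]:
    """Turn lines like '35, 10240' into (utilization %, memory used MiB) tuples."""
    samples = []
    for line in text.strip().splitlines():
        util, mem = (int(field.strip()) for field in line.split(","))
        samples.append((util, mem))
    return samples

def average_utilization(samples: list[tuple[int, int]]) -> float:
    """Mean GPU utilization (%) across all samples."""
    return sum(util for util, _ in samples) / len(samples)

def sample_once() -> list[tuple[int, int]]:
    """Run one query; requires Nvidia drivers with nvidia-smi on the PATH."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_samples(result.stdout)

# Parsing previously captured output (hypothetical values):
print(average_utilization(parse_samples("35, 10240\n40, 10300\n")))  # → 37.5
```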

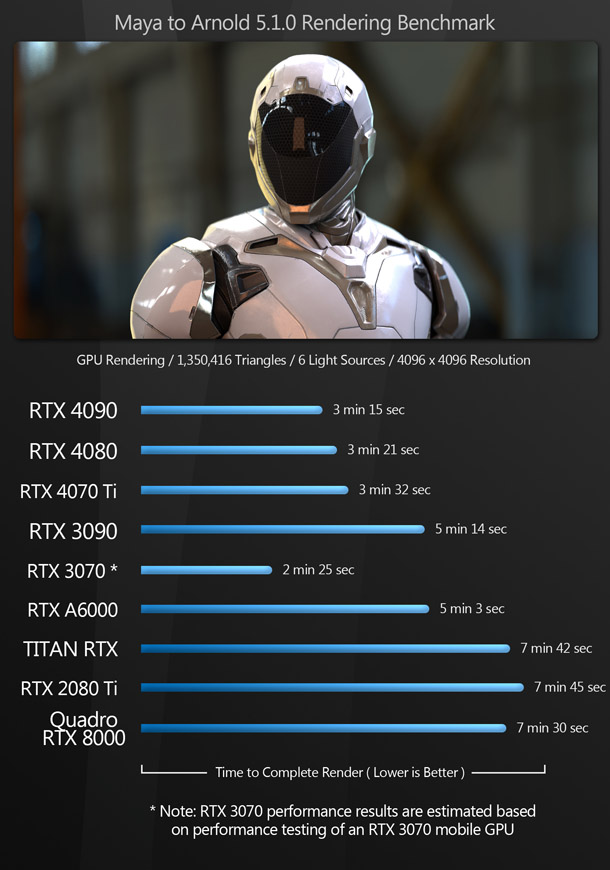

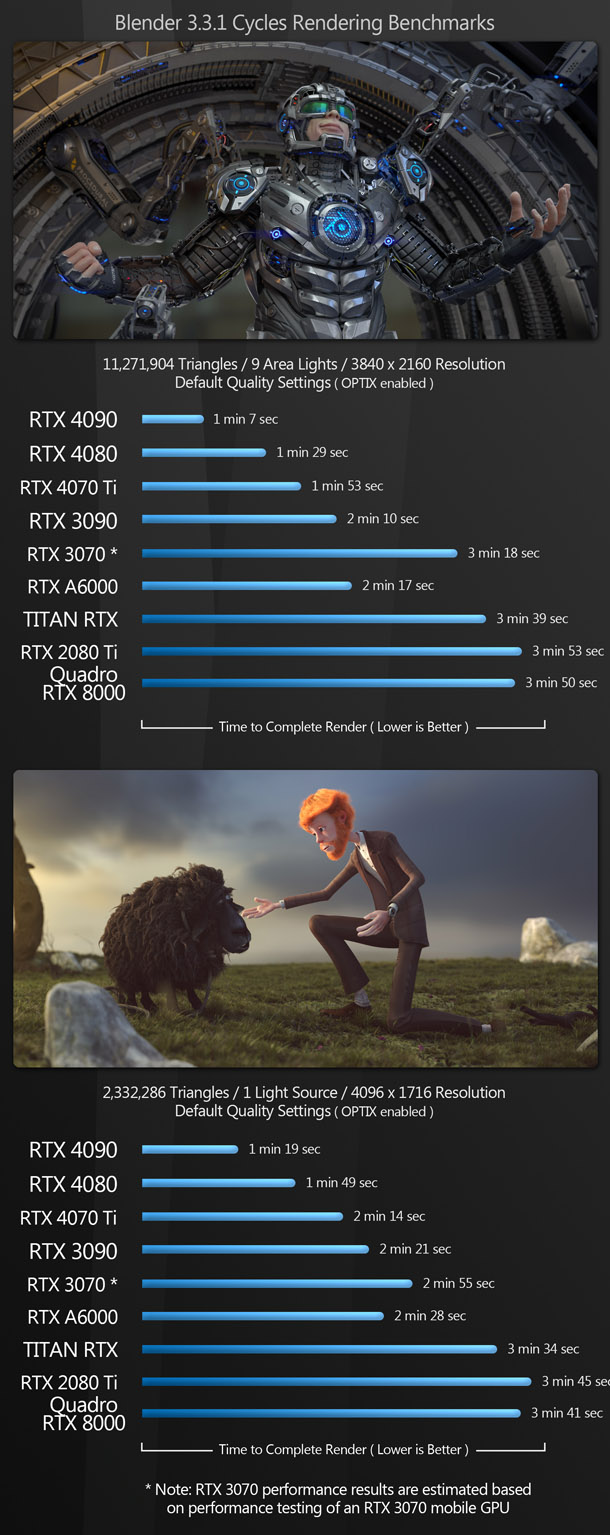

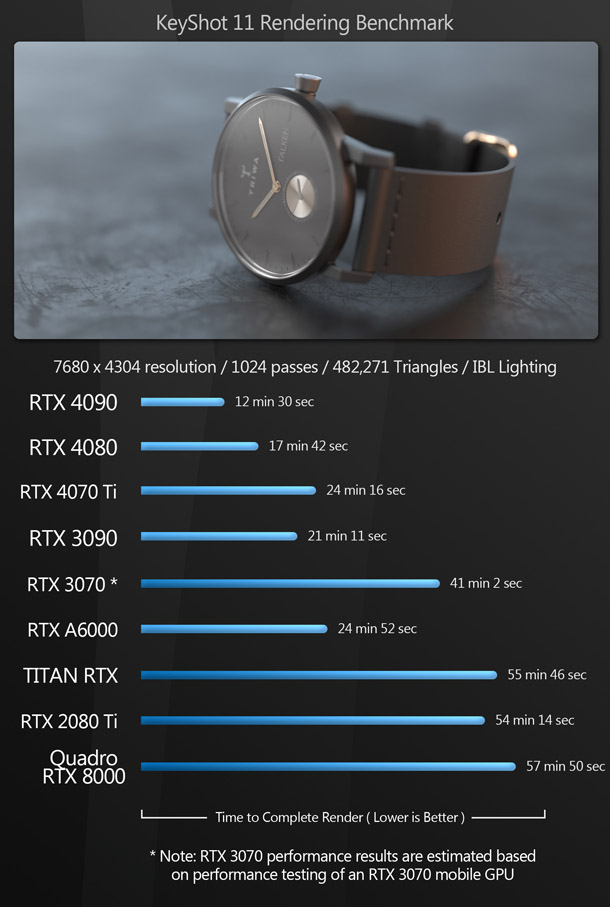

Rendering

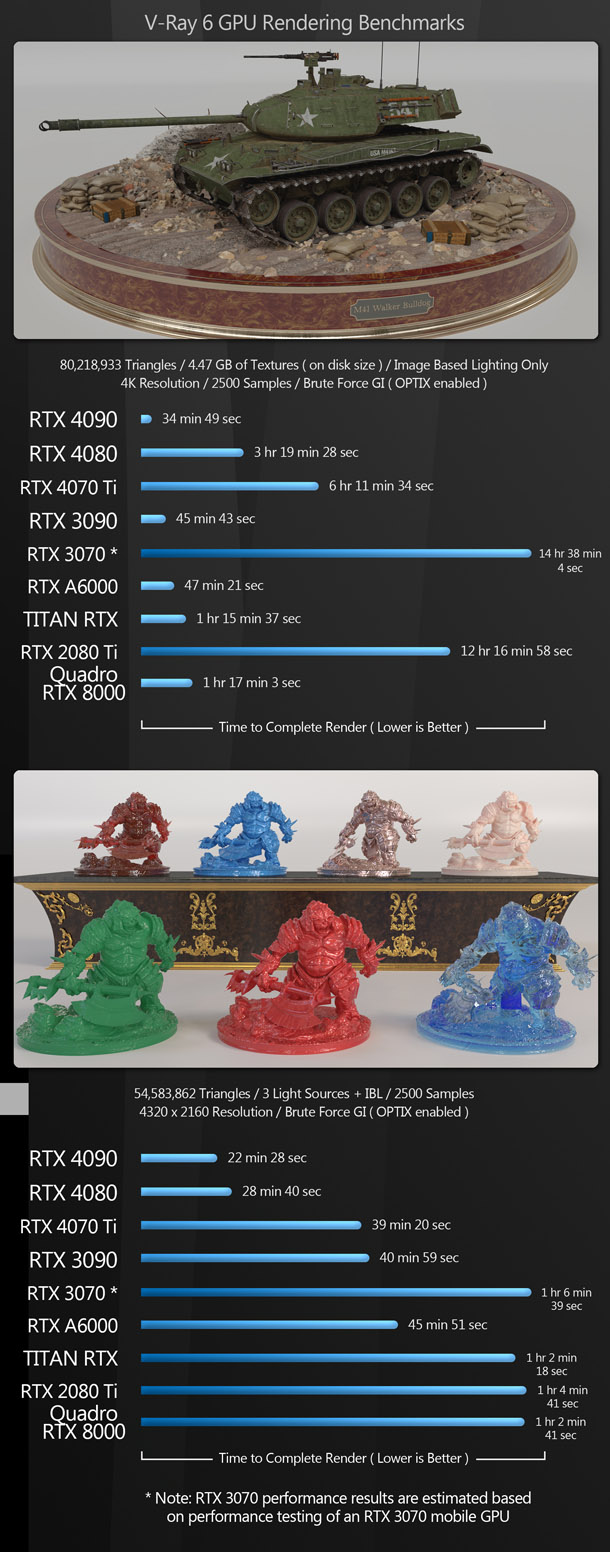

Next, we have a set of GPU rendering benchmarks, performed with an assortment of the more popular GPU renderers, rendering single frames at 4K or higher resolutions.

In the rendering benchmarks, the GeForce RTX 40 Series GPUs deliver some pretty significant performance increases over the previous-generation cards, with a few notable exceptions.

The first is with the open-source LuxCoreRender, where the GeForce RTX 3090 and the Titan RTX beat the GeForce RTX 40 Series GPUs. This is most likely because LuxCoreRender is updated less frequently than the commercial renderers, and has not yet been optimised for the new GPU hardware.

The second, and more important, exception is the V-Ray GPU tank scene. This is designed to stress GPU memory, causing cards with less than 24 GB of VRAM to go out-of-core: once GPU memory fills up, data must be transferred between it and system RAM, causing significant slow-downs.

Here, the 24 GB GeForce RTX 4090 comes out on top, but most of the older cards beat the GeForce RTX 4080 and RTX 4070 Ti, simply because they have larger frame buffers.

This is one of those instances where a case can be made for the professional GPUs, despite their much higher cost: their larger frame buffers make it possible to render much more complex scenes without issues.
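Whether a scene goes out-of-core comes down to a simple comparison between its memory footprint and the card’s frame buffer. The sketch below uses the frame buffer sizes of the cards on test; the 1 GB reserve for the operating system and displays, the function name and the 20 GB example scene are all hypothetical, and real renderers add their own overheads, so it only indicates which cards are at risk.

```python
# Frame buffer sizes (GB) of the cards on test.
VRAM_GB = {
    "GeForce RTX 4090": 24,
    "GeForce RTX 4080": 16,
    "GeForce RTX 4070 Ti": 12,
    "GeForce RTX 3090": 24,
    "GeForce RTX 3070": 8,
    "RTX A6000": 48,
    "Titan RTX": 24,
    "GeForce RTX 2080 Ti": 11,
    "Quadro RTX 8000": 48,
}

def out_of_core_cards(scene_gb: float, reserve_gb: float = 1.0) -> list[str]:
    """Cards whose usable VRAM (total minus a hypothetical reserve) can't hold the scene."""
    return sorted(card for card, vram in VRAM_GB.items() if scene_gb > vram - reserve_gb)

# A hypothetical 20 GB scene: only the 24 GB and 48 GB cards keep it entirely in VRAM.
print(out_of_core_cards(20.0))
```

With a footprint in that range, the 16 GB RTX 4080 and 12 GB RTX 4070 Ti have to spill into system RAM, while the older 24 GB and 48 GB cards do not.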

Finally, we have the Arnold scene. Although the GeForce RTX 40 Series GPUs outperform most of the older cards, the mobile RTX 3070 in the Asus ProArt laptop beats all of them by a considerable margin. Considering that it has the smallest frame buffer of all the GPUs on test, this is very odd. My suspicion is that it is due to the newer Zen 3 architecture of the laptop’s CPU, compared to the Zen 2 architecture of the desktop system, but that would be an unusually large impact for a CPU to have on a GPU rendering test.

Other benchmarks

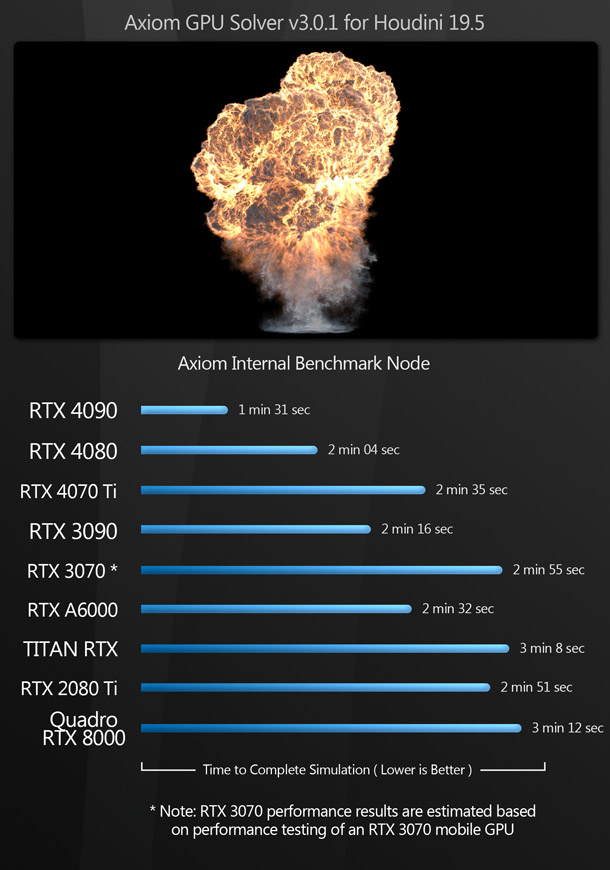

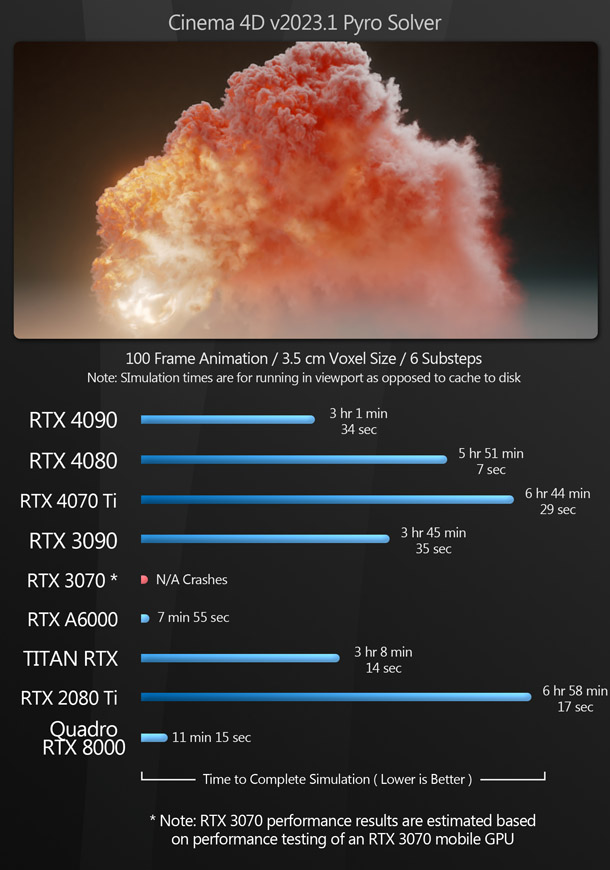

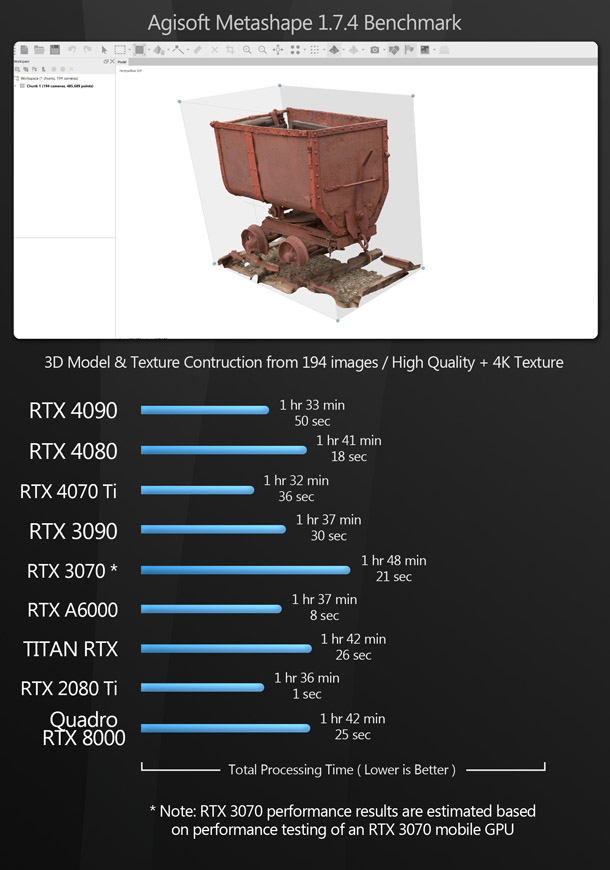

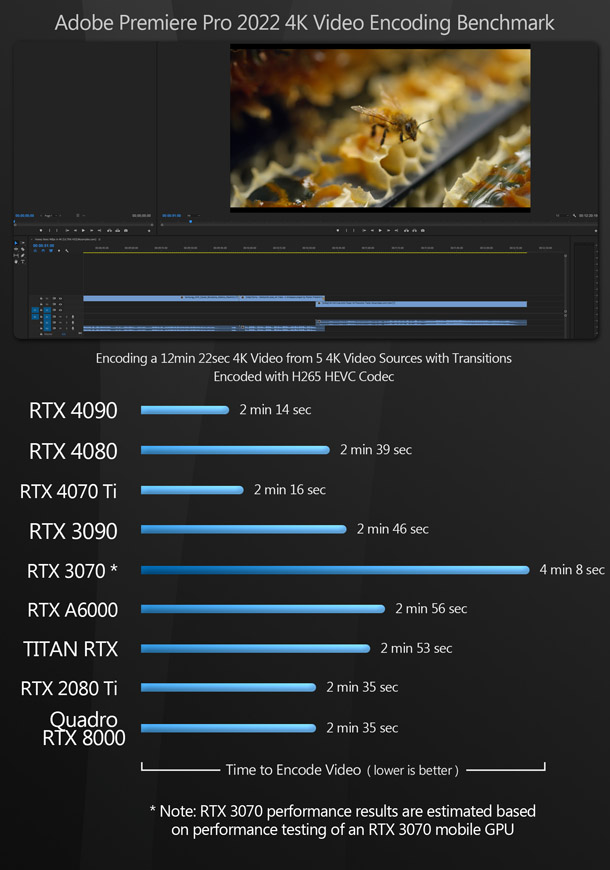

The next benchmarks test the use of the GPU for more specialist tasks. Premiere Pro uses the GPU for video encoding; photogrammetry application Metashape uses the GPU for image processing and 3D model generation; and Houdini plugin Axiom and Cinema 4D’s Pyro solver both use the GPU for fluid simulation.

Perhaps because of the range of tasks they test, the scores in these additional benchmarks vary widely.

In Premiere Pro, all of the new GPUs do very well, with the RTX 4090 taking the top spot. The more interesting result is that the RTX 4070 Ti beats the RTX 4080, as do the older RTX 2080 Ti and Quadro RTX 8000 – not by much, but a win is still a win.

The Metashape results follow a similar pattern, in that the RTX 4090 and RTX 4070 Ti take the top spots, but the relative differences between cards are much smaller as Metashape doesn’t tax the GPU particularly hard: average GPU usage is around 20%.

Next, we have Cinema 4D’s new Pyro solver. Like GPU rendering, fluid simulations are graphics-memory-intensive, and here, performance is almost entirely dictated by the amount of GPU memory available. During testing, I noticed that performance of all of the GPUs was good until I hit their memory limits, after which things would grind to a near-halt. With 48 GB of on-board memory, the RTX A6000 and Quadro RTX 8000 beat all of the newer GeForce RTX 40 Series cards by a large margin.

In Axiom’s well-optimised internal benchmark, the results are more predictable, with the RTX 4090 and RTX 4080 taking the top spots, followed by the previous-generation RTX 3090.

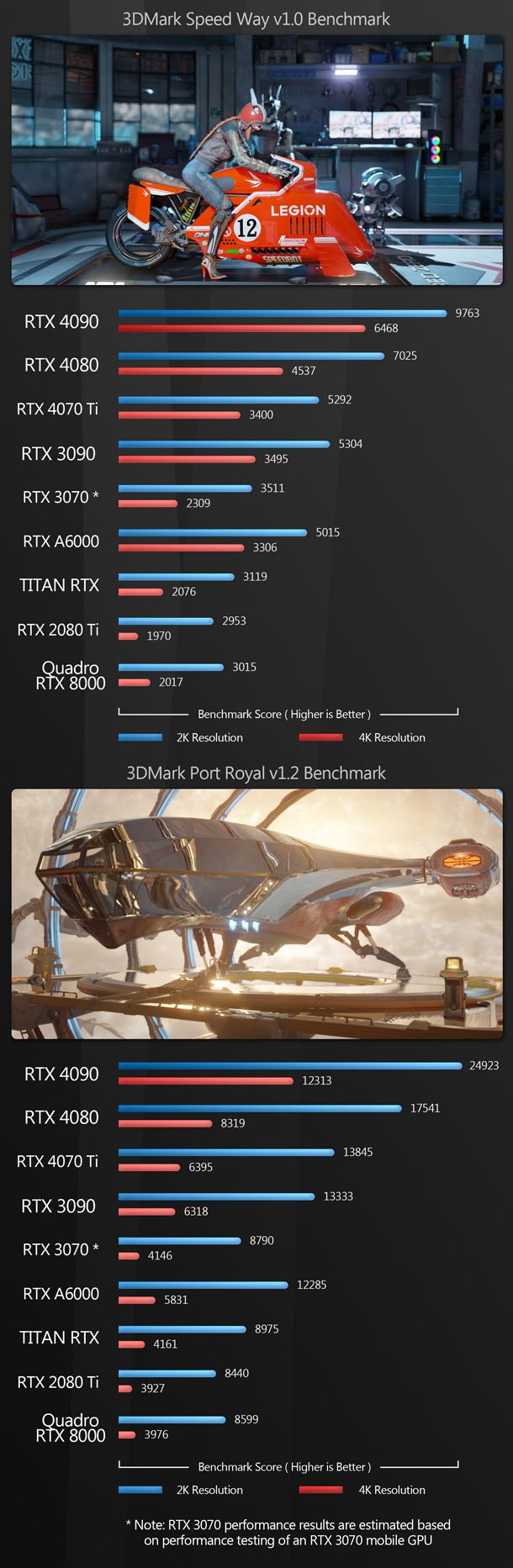

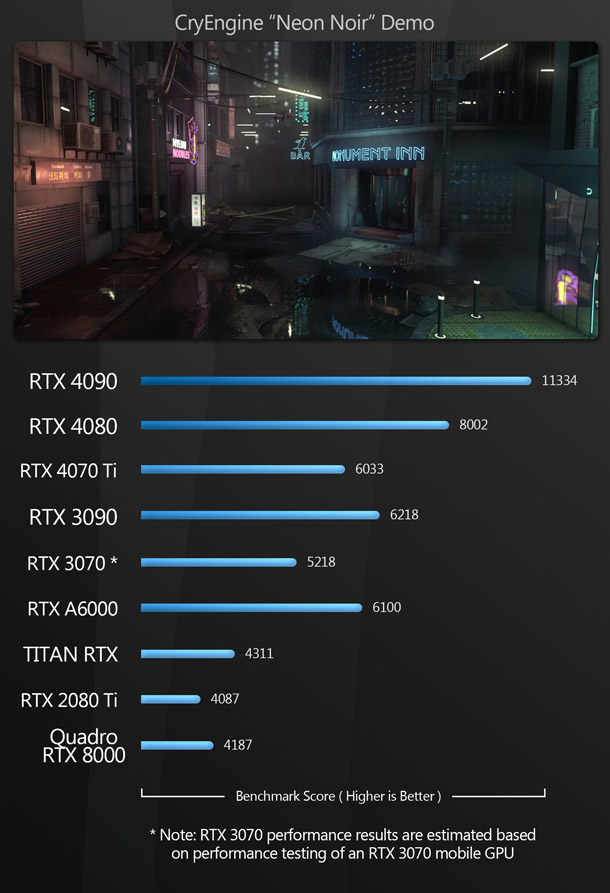

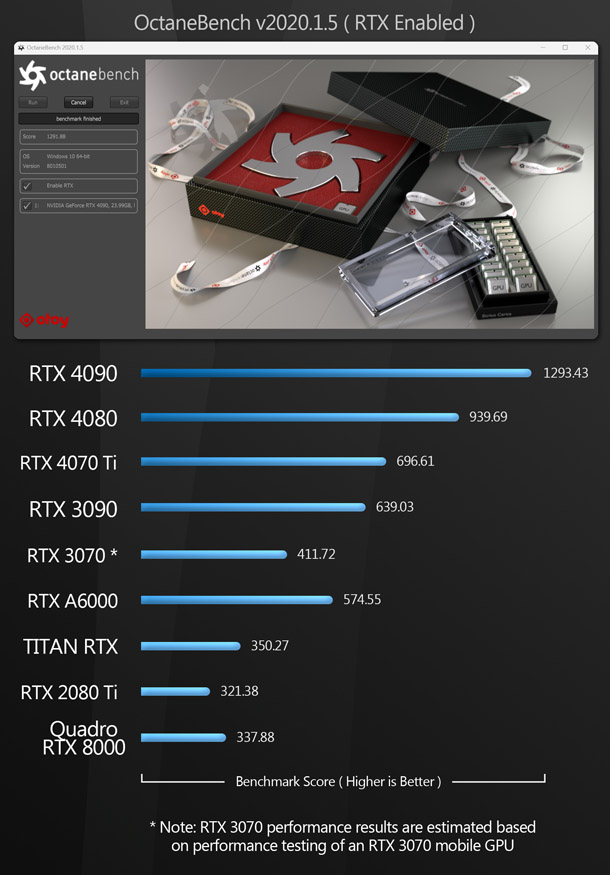

Synthetic benchmarks

Finally, we have an assortment of synthetic benchmarks. They don’t accurately predict how a GPU will perform in production, but they’re a measure of its performance relative to other GPUs, and the scores can be compared to those available online for other cards.

As expected, the RTX 40 Series GPUs take the top spots here, by moderate to significant margins. The even scaling in scores is impressive, but again, the results aren’t really representative of real-world performance.

Other considerations

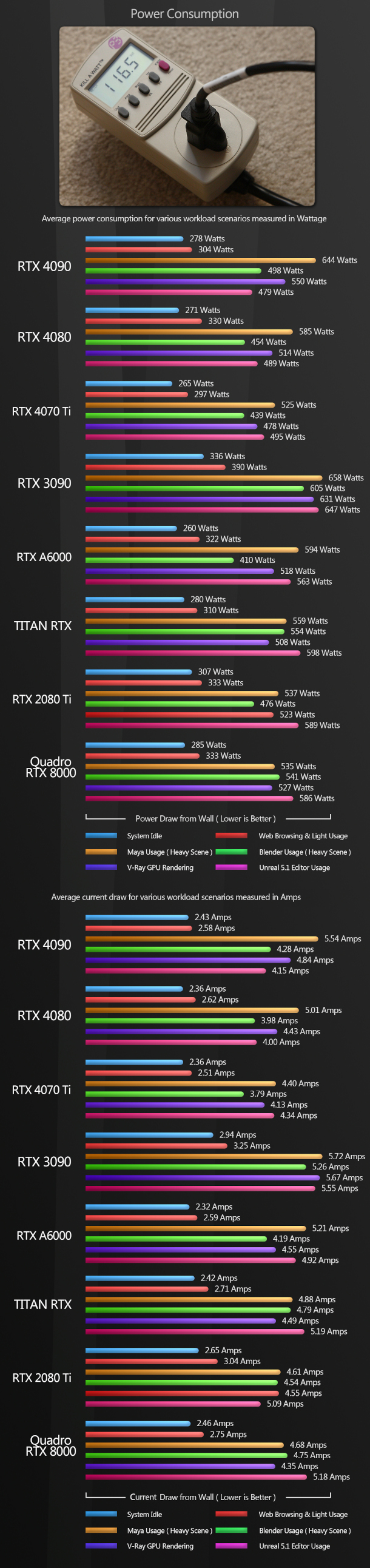

Power consumption

To test the power usage of the GeForce RTX 40 Series, I measured the power consumption of the entire test system at the wall outlet, using a P3 Kill A Watt meter. Since the test machine is a power-hungry Threadripper system, my figures will be higher than those for most standard DCC workstations.

For this group test, I measured both power and current drawn. Current (amperage) is often overlooked by reviewers, but it can be a critical factor in determining how many machines you can run on a single circuit.

Most US houses run 15A circuits from the main panel, and standard circuit breakers are generally only rated to carry 80% of their maximum load continuously, so a 15A circuit with a standard breaker should not exceed 12A of continuous load. In my tests, the current drawn by the test system approached 6A when the more power-hungry GPUs were installed. If the wall outlets in your home office are all on a single circuit, this could determine whether you can run two workstations simultaneously, particularly once you factor in monitors and lights.
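The arithmetic behind this is simple enough to sketch. This minimal example assumes the 80% continuous-load rule described above and a nominal US supply of 120V; converting amps to watts this way assumes a power factor close to 1, which is an approximation. The function names are hypothetical.

```python
def continuous_limit_amps(breaker_amps: float, continuous_factor: float = 0.8) -> float:
    # A 15 A breaker supports about 12 A of continuous load under the 80% rule.
    return breaker_amps * continuous_factor

def headroom_amps(breaker_amps: float, loads_amps: list[float]) -> float:
    """Continuous capacity left on a circuit after the listed loads."""
    return continuous_limit_amps(breaker_amps) - sum(loads_amps)

def approx_watts(amps: float, volts: float = 120.0) -> float:
    # Assumes a power factor near 1; real wall readings may differ slightly.
    return amps * volts

# Two workstations drawing roughly 6 A each use up a 15 A circuit's continuous budget.
print(headroom_amps(15, [6.0, 6.0]))  # → 0.0
print(approx_watts(6.0))              # → 720.0
```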

While the GeForce RTX 40 Series GPUs draw a fair amount of power, they are actually more efficient than the older Ampere and Turing cards in many of the tests. In my book, increased performance for little increase in power usage – in some cases, reduced power usage – is a winning combination.
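Efficiency can be made concrete as energy per task: the system’s power draw multiplied by how long the job takes. A faster card can draw more power and still use less energy overall. The figures in the usage example below are hypothetical, not measurements from this review, and since they stand in for wall readings, they include the whole system, not just the GPU.

```python
def energy_per_task_wh(system_watts: float, task_seconds: float) -> float:
    """Whole-system energy (watt-hours) used to complete one task."""
    return system_watts * task_seconds / 3600

# Hypothetical: a faster card drawing more power vs. a slower, lower-power one.
fast = energy_per_task_wh(600, 100)  # 600 W for 100 s
slow = energy_per_task_wh(500, 180)  # 500 W for 180 s
print(f"{fast:.1f} Wh vs {slow:.1f} Wh")  # → 16.7 Wh vs 25.0 Wh
```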

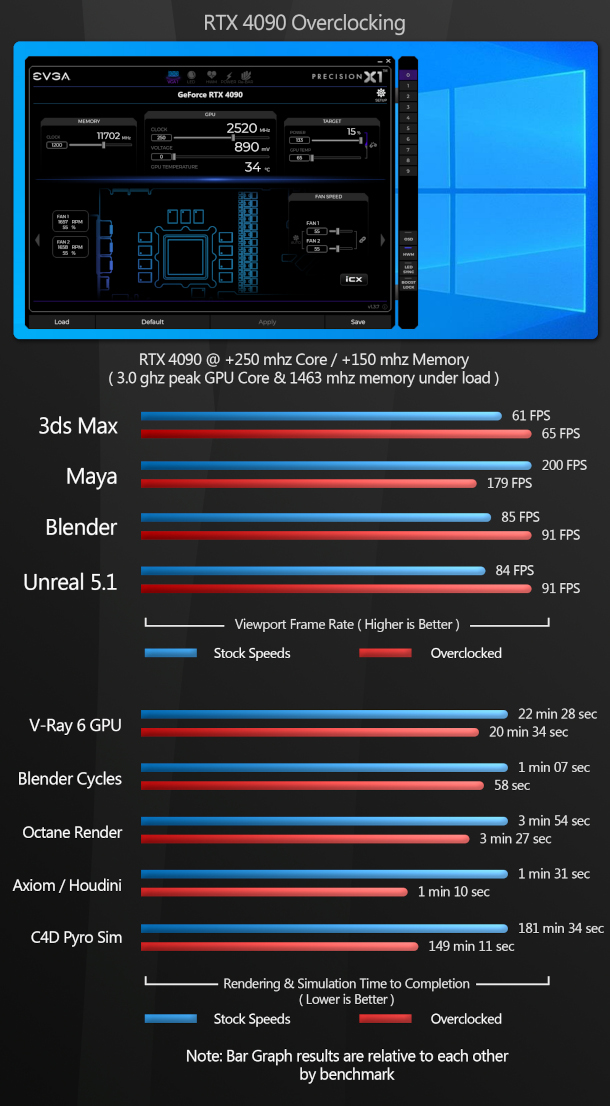

Overclocking

The GeForce RTX 4090 has a base clock speed of 2.23 GHz and a boost clock speed of 2.52 GHz (in practice, my test card seemed to run at a constant 2.52 GHz under load), at which its TDP is rated at 450W. However, the RTX 4090 will run at stock speeds with only three of the four 8-pin PCIe connectors on Nvidia’s 16-pin to 4 x 8-pin adapter cable plugged in. Plug in the fourth, and you can push the GPU to a TDP of 600W.
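The 450W and 600W figures line up with the 150W rating of a standard 8-pin PCIe power connector. This sketch is a simplification: in practice, the adapter is reported to tell the card how much power is available through the 16-pin connector’s sense pins, rather than the card counting cables itself. The constant and function names are hypothetical.

```python
PCIE_8PIN_RATED_WATTS = 150  # rated capacity of one 8-pin PCIe power connector

def adapter_power_budget(connected_8pin: int) -> int:
    """Power available through the 16-pin adapter for a given number of 8-pin plugs."""
    if not 0 <= connected_8pin <= 4:
        raise ValueError("the adapter has four 8-pin inputs")
    return connected_8pin * PCIE_8PIN_RATED_WATTS

print(adapter_power_budget(3))  # → 450, the stock TDP
print(adapter_power_budget(4))  # → 600, the overclocking ceiling
```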

To test how much this improves performance, I repeated several of the benchmarks with the RTX 4090 overclocked to a base GPU clock speed of 3.00 GHz and a base memory clock speed of 1,463 MHz.

Overclocking the RTX 4090 improves performance in most of the tests, with GPU rendering, simulation and the synthetic benchmarks showing improvements of 10-20%. There was less effect on viewport frame rates, and in one case, viewport performance actually went down.

Although running temperatures barely increased, whether the performance gains are worth the extra power used is for you to decide.

Drivers

Finally, a note on the Studio Drivers with which I benchmarked the GeForce RTX GPUs. Nvidia now offers a choice of Studio or Game Ready Drivers for GeForce cards, recommending Studio Drivers for DCC work and Game Ready Drivers for gaming. In my tests, I found no discernible difference between them in terms of performance or display quality. My understanding is that the Studio Drivers are designed for stability in DCC applications, and while I haven’t had any real issues when running DCC software on Game Ready Drivers, if you are using your system primarily for content creation, there is no reason not to use the Studio Drivers.

Verdict

From these test results, it is clear that the GeForce RTX 40 Series GPUs provide a significant performance improvement over their predecessors, so long as the tasks you want to perform on them fit into graphics memory. If you do a lot of memory-intensive work, you may be better off buying a previous-generation card with more on-board memory: a GeForce RTX 3090 rather than a GeForce RTX 4080, for example.

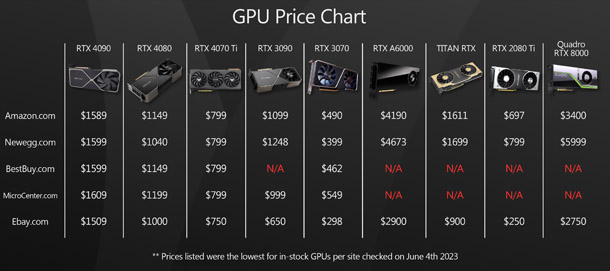

Let’s look at the three new cards on test one by one, beginning with the GeForce RTX 4090. Aside from the workstation-class RTX 6000 Ada Generation – not tested here – it’s the fastest GPU you can buy right now, either for gaming or content creation. In addition to the massive performance gains over the previous-generation RTX 3090, it uses a little less power, runs a little bit cooler, and has room for overclocking.

Its MSRP of $1,599 is a tough pill to swallow, but consider it in context. Two generations ago, the cheapest 24 GB Nvidia GPU was the much slower Titan RTX, which had a launch price of $2,499, and before that, the only Nvidia cards officially certified for use with DCC software were the even more expensive Quadro GPUs. Although the RTX 4090 costs $100 more than its immediate predecessor, the RTX 3090, it offers nearly double the performance in some situations.

Next, we have the GeForce RTX 4080. This is an interesting product. It’s a powerful GPU, and beats all of the previous-generation GeForce RTX 30 Series GPUs quite comfortably, except in tests that require more than 16 GB of graphics memory.

But with an MSRP of $1,199, the GeForce RTX 4080 is just too close in price to the RTX 4090, given that it’s a good deal slower and comes with substantially less on-board memory. At $1,000, it would be an easier sell. If $1,199 is the most you can spend, the RTX 4080 will serve you well, but if you can afford it, I would recommend paying the extra $400 for the RTX 4090.

Finally, we have the GeForce RTX 4070 Ti. When I started testing, I didn’t expect it to do well, so I was surprised by how strongly it performed. In many of the benchmarks, it was nipping at the heels of the RTX 4080, and in several cases, it also beat the previous-generation RTX 3090.

I think the sweet spot for a card like this would be around $600-700, but with an MSRP of $799, it’s considerably cheaper than either the RTX 4080 or RTX 4090, making it easy to recommend to artists on a budget. Its main limitation is its 12 GB of graphics memory, so if you do memory-intensive work, and can find a secondhand GeForce RTX 3090 for $600-800, that may be a better option.
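One rough lens on the pricing arguments above is cost per gigabyte of graphics memory, using only the MSRPs and frame buffer sizes quoted in this review. It ignores speed entirely, so it is one factor to weigh, not a value metric; the names below are hypothetical.

```python
MSRP_USD = {"RTX 4090": 1599, "RTX 4080": 1199, "RTX 4070 Ti": 799}
VRAM_GB = {"RTX 4090": 24, "RTX 4080": 16, "RTX 4070 Ti": 12}

def price_per_gb(card: str) -> float:
    """MSRP divided by frame buffer size."""
    return MSRP_USD[card] / VRAM_GB[card]

for card in MSRP_USD:
    print(f"{card}: ${price_per_gb(card):.0f} per GB")

# Marginal cost of the RTX 4090's extra 8 GB over the RTX 4080.
extra_per_gb = (MSRP_USD["RTX 4090"] - MSRP_USD["RTX 4080"]) / (
    VRAM_GB["RTX 4090"] - VRAM_GB["RTX 4080"]
)
print(f"${extra_per_gb:.0f} per additional GB")  # → $50 per additional GB
```

By this measure, the RTX 4080 is the most expensive of the three per gigabyte (about $75, against roughly $67 for the other two), which is consistent with the view that it is the hardest of the three to justify.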

Overall conclusions

While all three GeForce RTX 40 Series cards on test are monsters, my own pick would be the RTX 4090. It’s a fantastic GPU for content creation, especially modelling and rendering, and its 24 GB frame buffer is large enough for all but the most demanding DCC tasks. Just make sure you have a large enough PSU to keep it fed: while 850W is the minimum, I’d recommend 1000W to leave room for overclocking and a faster CPU.

My runner-up pick is the RTX 4070 Ti. Although it’s quite a bit less powerful than the RTX 4090, it’s better value for money than the RTX 4080. Its 12 GB of GPU memory will be a limitation in some workflows, but as software developers get better at optimising out-of-core performance, that may become less of an issue.

Lastly, I want to say thanks for taking the time to stop by. I hope this review has been helpful and informative, and if you have any questions or suggestions, please let me know in the comments.

Links

Read more about the GeForce RTX 40 Series GPUs on Nvidia’s website

About the reviewer

CG Channel hardware reviewer Jason Lewis is Lead Environment Artist at Team Kaiju, part of Tencent’s TiMi Studio Group. See more of his work in his ArtStation gallery. Contact him at jason [at] cgchannel [dot] com

Acknowledgements

I would like to give special thanks to the following people for their assistance in bringing you this review:

Stephenie Ngo of Nvidia

Sean Kilbride of Nvidia

Jordan Dodge of Nvidia

Chloe Larby of Grithaus Agency

Lino Grandi of Otoy

Stephen G Wells

Adam Hernandez