Videos: the best of Siggraph 2016’s technical papers

Last week, ACM Siggraph posted its annual preview reel (above) showing the highlights of the technical papers due to be presented at Siggraph 2016, which takes place in Anaheim from 24-28 July.

As usual, it provides a very watchable overview of the conference’s key themes, but for the detail, you need to turn to the full videos accompanying the papers – which are steadily appearing online as the show approaches.

Below, we’ve rounded up our own pick of the year’s technical advances in computer graphics: from cutting-edge research in fluid simulation to new sketch-driven artists’ tools – and even new ways of 3D printing the Moomins.

Fluid simulation

Surface-Only Liquids

Fang Da, David Hahn, Christopher Batty, Chris Wojtan, Eitan Grinspun

One problem with standard methods of fluid simulation is that they don’t reproduce surface tension accurately.

Surface-Only Liquids proposes a new simulation method that “operates on the surface [of a body of liquid] only without ever discretising the volume, based on Helmholtz decomposition and the Boundary Element Method”.

The result is detailed recreations of a range of liquid phenomena – check out the beautiful shots of crown splashes, water bells and water jets colliding in the video above – with realistic surface tension and gravity.

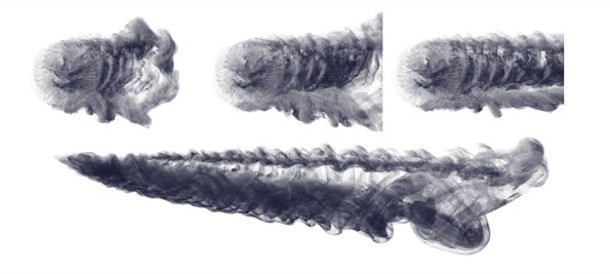

Resolving Fluid Boundary Layers with Particle Strength Exchange and Weak Adaptivity

Xinxin Zhang, Minchen Li, Robert Bridson

Whereas fluid simulation techniques were traditionally either grid-based or particle-based, the hybrid FLIP (FLuid Implicit Particle) solvers used in many modern DCC packages combine the benefits of both methods.
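To make the hybrid idea concrete, here is a minimal one-dimensional sketch of the particle-to-grid and grid-to-particle velocity transfer at the heart of PIC/FLIP solvers. It is our own illustration, not code from Bifrost or from the paper: the function name, the linear “tent” weights and the `grid_update` callback (a hypothetical stand-in for the forces and pressure projection a real solver computes on the grid) are all assumptions.

```python
def flip_velocity_update(xs, vs, n_cells, dx, dt, grid_update, flip_ratio=0.95):
    """One PIC/FLIP velocity update on a 1D grid (illustrative sketch).

    xs: particle positions in [0, n_cells * dx]; vs: particle velocities.
    grid_update(u, dt) is a hypothetical stand-in for the grid solve
    (forces, pressure projection) that a real solver performs.
    """
    def weights(x):
        # Linear "tent" weights to the two grid nodes bracketing x.
        i = min(int(x / dx), n_cells - 1)
        t = x / dx - i
        return ((i, 1.0 - t), (i + 1, t))

    # Particle-to-grid: weighted average of nearby particle velocities.
    num = [0.0] * (n_cells + 1)
    den = [0.0] * (n_cells + 1)
    for x, v in zip(xs, vs):
        for node, w in weights(x):
            num[node] += w * v
            den[node] += w
    u_old = [n / d if d > 0.0 else 0.0 for n, d in zip(num, den)]

    # Grid solve, supplied by the caller.
    u_new = grid_update(u_old, dt)

    # Grid-to-particle: PIC takes the new grid velocity directly,
    # FLIP adds only the grid's *change* to the particle's own velocity.
    out = []
    for x, v in zip(xs, vs):
        pic = sum(w * u_new[node] for node, w in weights(x))
        flip = v + sum(w * (u_new[node] - u_old[node]) for node, w in weights(x))
        out.append(flip_ratio * flip + (1.0 - flip_ratio) * pic)
    return out
```

Blending a little PIC into FLIP (`flip_ratio` below 1) is a common way to damp noise. With `flip_ratio=0.0`, two particles in one cell moving at 1.0 and 3.0 come back at roughly 1.64 and 2.36: that smoothing is the numerical dissipation FLIP was designed to avoid.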

Much of the pioneering work was done by Robert Bridson – among other things, co-creator of Maya’s Bifrost toolset – whose students at the University of British Columbia have now extended the approach further.

The paper above combines the FLIP method with viscous particle strength exchange to better transfer momentum where liquids encounter solid boundaries – a technique the authors christen ‘VFLIP’.
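Particle strength exchange replaces the Laplacian in a diffusion (viscosity) term with a kernel-weighted exchange of “strength” between nearby particles. The one-dimensional sketch below shows the generic textbook form with a Gaussian kernel, not the paper’s VFLIP implementation; the kernel normalisation, the function names and the explicit Euler time step are our assumptions.

```python
import math

def pse_laplacian(xs, us, volume, eps):
    """Approximate u''(x_i) at each particle by particle strength exchange.

    Kernel: eta(z) = 4/sqrt(pi) * exp(-z^2), whose second moment is 2, so
    (1/eps^2) * sum_j V * (u_j - u_i) * eta_eps(x_i - x_j) -> u''(x_i),
    with eta_eps(z) = eta(z / eps) / eps and V the particle "volume" (spacing in 1D).
    """
    norm = 4.0 / (math.sqrt(math.pi) * eps)
    lap = []
    for xi, ui in zip(xs, us):
        acc = 0.0
        for xj, uj in zip(xs, us):
            z = (xi - xj) / eps
            acc += volume * (uj - ui) * norm * math.exp(-z * z)
        lap.append(acc / eps**2)
    return lap

def pse_diffuse(xs, us, volume, eps, nu, dt):
    """One explicit Euler step of du/dt = nu * u''."""
    return [u + dt * nu * lap for u, lap in zip(us, pse_laplacian(xs, us, volume, eps))]
```

Because each exchange between a pair of particles is symmetric (what one gains, the other loses), a diffusion step conserves the total quantity up to rounding.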

In addition, it introduces Weakly Higher Resolution Regional Projection (WHIRP): a “cheap and simple way” to increase the resolution of the simulation grid by overlaying high-res grids on a coarser global grid.

As well as creating two lovely new acronyms for TDs to play with, the result is more detailed, realistic sims – although sadly, we can’t find a video at the minute, only the image above of smoke passing through a rotating fan.

Schrödinger’s Smoke

Albert Chern, Felix Knöppel, Ulrich Pinkall, Peter Schröder, Steffen Weißmann

Smoke is also the subject of this eye-catching demo from a team at Caltech, TU Berlin and Google.

A new approach for purely Eulerian (that is, grid-based) simulation of fluids, the technique takes ideas from quantum mechanics – notably the Schrödinger wave equation that gives the paper its title.

The authors describe the technique as accurately recreating fine fluid structures like wakes and vortex filaments without the need for traditional workarounds like using Lagrangian (particle-based) techniques for advection.

It’s also very nice eye candy, with brightly coloured puffs of smoke meeting and mingling – including, in one memorable simulation, smoke clouds shaped like the Utah teapot and the Stanford bunny.

Towards Animating Water With Complex Acoustic Bubbles

Timothy R. Langlois, Changxi Zheng, Doug L. James

But liquid simulation isn’t just about how the liquid looks, as this paper from a team at Cornell, Columbia and Stanford universities reminds us: it can also be about simulating how a liquid sounds.

Since the sounds of drips and splashes are mainly created by the noises air bubbles make, the work uses a two-phase simulation, tracking bubbles as they form, merge, split and pop near the liquid surface.
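The physics behind a single bubble’s “plink” is classical: a spherical air bubble in water rings at its Minnaert resonance, roughly 3.3 kHz for a 1 mm radius, with frequency inversely proportional to radius. The sketch below uses only that textbook single-bubble model plus a damped sine wave; it is not the paper’s method, which deals with complex bubbles that merge and split, and the `damping` constant is a made-up placeholder rather than a physical value.

```python
import math

def minnaert_frequency(radius_m, pressure_pa=101_325.0, density=998.0, gamma=1.4):
    """Resonant frequency (Hz) of a spherical air bubble in water (Minnaert, 1933)."""
    return math.sqrt(3.0 * gamma * pressure_pa / density) / (2.0 * math.pi * radius_m)

def bubble_ping(radius_m, duration_s=0.05, sample_rate=44_100, damping=60.0):
    """Damped sinusoid at the Minnaert frequency.

    `damping` (1/s) is a placeholder; real bubble damping depends on radius
    (viscous, thermal and radiation losses).
    """
    f = minnaert_frequency(radius_m)
    n = int(duration_s * sample_rate)
    return [math.exp(-damping * k / sample_rate) * math.sin(2.0 * math.pi * f * k / sample_rate)
            for k in range(n)]
```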

In practice, we doubt it will put Foley artists out of work just yet, but it does – as Siggraph’s teaser video puts it – answer the age-old question: “What does a liquid armadillo sound like when it lands in a glass of water?”

Rigging and skinning

Pose-Space Subspace Dynamics

Hongyi Xu, Jernej Barbič

Simulating the secondary motion of soft tissues increases the realism of character animation – but also greatly increases computation times: a problem this paper from a team at USC seeks to resolve.

The resulting demo combines an IK rig with FEM (Finite Element Method) dynamics for soft tissues, precomputed for a representative set of poses then combined at runtime into a single, fast dynamic system.
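The article doesn’t say exactly how the precomputed poses are combined at runtime. As a generic illustration of pose-space interpolation, the sketch below blends data stored at a few example poses using normalised Gaussian weights on joint-angle distance. The function, the weighting scheme and `sigma` are assumptions for illustration, not the authors’ method.

```python
import math

def pose_space_blend(pose, example_poses, example_data, sigma=0.5):
    """Blend data precomputed at example poses, weighted by closeness to `pose`.

    pose / example_poses: joint-angle vectors (radians).
    example_data: one vector per example pose (e.g. hypothetical reduced
    soft-tissue coordinates), all of the same length.
    """
    d2 = [sum((a - b) ** 2 for a, b in zip(pose, p)) for p in example_poses]
    weights = [math.exp(-d / (2.0 * sigma**2)) for d in d2]
    total = sum(weights)
    if total == 0.0:
        # Far from every example (all weights underflowed): use the nearest pose.
        nearest = min(range(len(d2)), key=d2.__getitem__)
        return list(example_data[nearest])
    n = len(example_data[0])
    return [sum(w * data[k] for w, data in zip(weights, example_data)) / total
            for k in range(n)]
```

The expensive simulation happens offline at each example pose; at runtime only this cheap weighted sum is evaluated, which is the general reason pose-space approaches can run at interactive rates.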

According to the authors, the technique is fast enough for VR work, fits into standard character pipelines and, as it doesn’t require skin data capture, works equally well for humans, animals and fictional creatures.

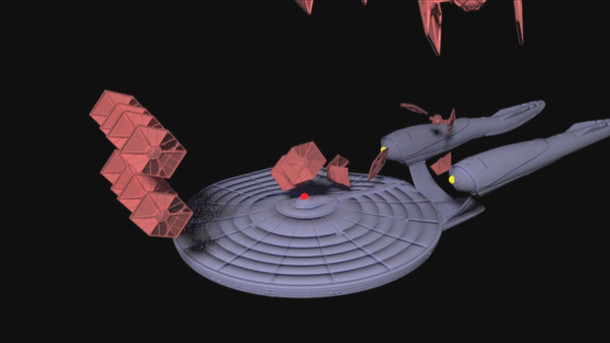

Example-based Plastic Deformation of Rigid Bodies

B. Jones, N. Thuerey, T. Shinar, A. W. Bargteil

Sadly, not every demo movie from this year’s Siggraph technical papers is embeddable – which is a particular source of regret for a paper whose demo shows plastic TIE fighters colliding with the USS Enterprise.

In theory, it demonstrates a more efficient technique for mimicking plastic deformations and fracturing when near-rigid man-made materials collide. But secretly, we’re sure it’s been done to mess with sci-fi nerds’ heads.

Capture technologies

Live Intrinsic Video

Abhimitra Meka, Michael Zollhoefer, Christian Richardt, Christian Theobalt

Breaking down photographic images into their reflectance and shading components is nothing new, as you will know if you remember Tandent Vision Science’s neat, but extremely expensive, Lightbrush software.

This paper from a team at the Max Planck Institute for Informatics takes things a step further, extracting the component layers efficiently enough to work with video streams, not just still images.

The technique separates the surface colour of each object from its surface shading, generating a perfect, shadow-free diffuse texture, opening the way for real-time recolouring or retexturing of live footage.
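Intrinsic decomposition usually models each pixel as reflectance times shading, I = R × S. The hard part, estimating S from live video, is what the paper tackles; in the sketch below S is simply given (an assumption), along with white light and a single grey shading value per pixel. Once S is known, recovering reflectance and recolouring are just a division and a multiplication.

```python
def split_reflectance(pixels, shading, eps=1e-6):
    """Recover per-pixel reflectance R = I / S from image I and a given shading S.

    pixels: list of (r, g, b) intensities; shading: one grey value per pixel.
    eps guards against division by zero in fully black (unlit) pixels.
    """
    return [tuple(c / max(s, eps) for c in px) for px, s in zip(pixels, shading)]

def recolour(shading, new_albedo):
    """Re-render with a new uniform albedo but the original shading: I' = R' * S."""
    return [tuple(a * s for a in new_albedo) for s in shading]
```

In the test data, the second pixel is the first one seen in half shadow: both yield the same reflectance, which is the property that makes a shadow-free texture possible.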

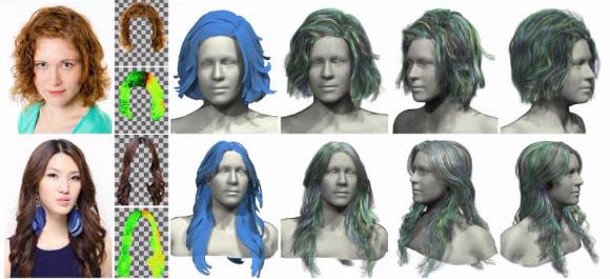

AutoHair: Fully Automatic Hair Modeling From A Single Image

Menglei Chai, Tianjia Shao, Hongzhi Wu, Yanlin Weng, Kun Zhou

Sadly, two of the most promising papers relating to the capture of real-world objects don’t seem to be online yet.

This one claims to be “the first fully automatic method for 3D hair modeling from a single image” and has been tested on a set of internet photos “resulting in a database of 50,000 3D hair models”.

The work has been done by a team at Zhejiang University, so above, we’ve linked to the homepage of Kun Zhou, director of the university’s State Key Lab of CAD and CG, where we hope the paper will eventually appear.

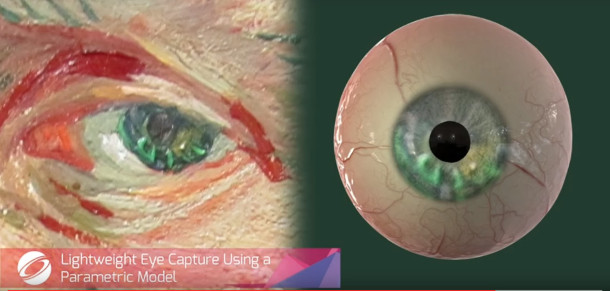

Lightweight Eye Capture Using a Parametric Model

Pascal Bérard, Derek Bradley, Markus Gross, Thabo Beeler

And hair isn’t the only thing that can be captured from single images, as this demo from a team at ETH Zürich and Disney Research Zürich shows: you can also reconstruct realistic eyes.

Again, we’ve linked to the ETH Zürich website until the actual paper becomes available online, but the demo images show it at work on animal eyes – and even paintings, as in the Van Gogh self-portrait above.

Sketching and rendering

Interactive Sketching of Urban Procedural Models

Gen Nishida, Ignacio Garcia-Dorado, Daniel G. Aliaga, Bedrich Benes, Adrien Bousseau

Tools like SketchUp simplify the process of designing buildings – but that doesn’t mean that complete novices find them easy to use: a failing that Interactive Sketching of Urban Procedural Models aims to address.

The work of Purdue University’s High Performance Graphics Lab, the project combines sketch-based and procedural modelling techniques, generating detailed 3D models from even the shakiest of 2D drawings.

Would-be architects simply sketch the rough form of a building, then draw in a typical window and door, and the team’s software – created by training neural networks to recognise the form intended – does the rest.

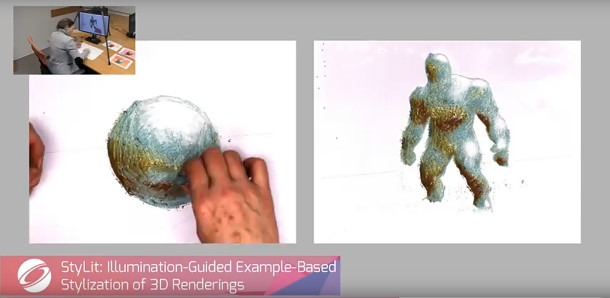

StyLit: Illumination-Guided Example-Based Stylization of 3D Renderings

Jakub Fiser, Ondrej Jamriska, Michal Lukac, Eli Shechtman, Paul Asente, Jingwan Lu, Daniel Sykora

Again, we’ve had to link to one of the researchers’ homepages as the full paper isn’t online yet, but this work on artist-driven methods of non-photorealistic rendering looks very promising.

The demo shows a user sketching a sphere using traditional media, and the resulting pattern of pastel strokes being transferred dynamically to an animated 3D character as its shading style.

As well as researchers at the Czech Technical University in Prague, the team includes staff from Adobe Research, so there’s the possibility that the technique could make its way into commercial tools in future.

Just plain fun

My Text in Your Handwriting

Tom S.F. Haines, Oisin Mac Aodha, Gabriel J. Brostow

In the past, forgers needed considerable artistic skill to reproduce their target’s handwriting, with its unique letter forms and spacing, and characteristic patterns of line thickness and pressure.

Now, thanks to a team from University College London, you can simply feed in an annotated sample of their work and let an algorithm do the rest.

In the video above, the software takes on Abraham Lincoln, and even shows what the result would have been had Conan Doyle really written the famous – but entirely apocryphal – phrase, ‘Elementary, my dear Watson’.

Acoustic Voxels: Computational Optimization of Modular Acoustic Filters

Dingzeyu Li, David Levin, Wojciech Matusik, Changxi Zheng

Some of the most winning Siggraph papers combine serious maths with distinctly frivolous real-world examples – as in the case of this work on acoustic voxels from a team at Columbia University and MIT.

At heart, it’s a serious-minded piece of work on designing 3D forms with specific acoustic properties – for example, for creating more efficient earmuffs or engine mufflers.
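Designing for acoustics builds on lumped resonator models. The simplest is the Helmholtz resonator, a cavity of volume V opening through a neck of area A and effective length L, which resonates at f = (c / 2π)·√(A / (V·L)), where c is the speed of sound. The sketch below evaluates that textbook formula for a single chamber; it illustrates the underlying physics, not the paper’s optimisation over modular voxel filters, and the example dimensions are arbitrary.

```python
import math

def helmholtz_frequency(neck_area_m2, neck_length_m, cavity_volume_m3, speed_of_sound=343.0):
    """Resonant frequency (Hz) of an idealised Helmholtz resonator.

    neck_length_m should be the *effective* neck length (physical length
    plus an end correction), which this sketch leaves to the caller.
    """
    return (speed_of_sound / (2.0 * math.pi)) * math.sqrt(
        neck_area_m2 / (cavity_volume_m3 * neck_length_m))
```

For a 1 litre cavity with a 2 cm neck of 1 cm radius this gives roughly 216 Hz (ignoring end correction); quadrupling the volume halves the pitch.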

But the main test case that the team chose to demonstrate its technique? 3D printing plastic hippos, pigs and octopi that you can play as musical instruments. Parp.

Procedural Voronoi Foams for Additive Manufacturing

Jonàs Martínez, Jérémie Dumas, Sylvain Lefebvre

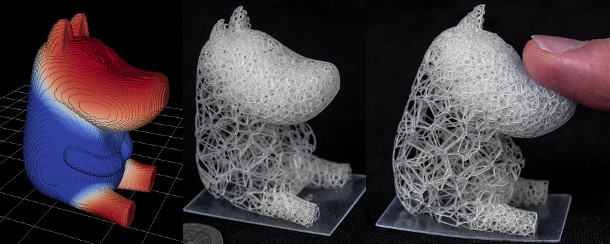

Last but not least for this year’s round-up of Siggraph technical papers, another appealing application of serious science to 3D printing is INRIA’s work on Voronoi open-cell foams.

By varying the size and spacing of the cells within the foam, the team can control the mechanical properties of a 3D object formed from it – as they demonstrate by 3D printing the adorably squishy Moomin above.
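A procedural foam can be evaluated point by point without storing the whole structure. The sketch below is our own illustration, not INRIA’s algorithm: it places one pseudo-random seed per grid cell (a Worley-noise-style layout, which is an assumption) and marks a point as solid when it lies close to the faces its Voronoi cell shares with its second- and third-nearest seeds, approximating a strut along a Voronoi edge. `cell_size` and `strut_width` are hypothetical parameters standing in for the cell size and spacing the article mentions.

```python
import math
import random

def _cell_seed(ix, iy, iz, cell_size, salt):
    """One pseudo-random seed point per grid cell, reproducible from the cell index."""
    rng = random.Random(f"{salt}:{ix}:{iy}:{iz}")
    return ((ix + rng.random()) * cell_size,
            (iy + rng.random()) * cell_size,
            (iz + rng.random()) * cell_size)

def foam_is_solid(p, cell_size, strut_width, salt=0):
    """True if point p lies on a strut of a procedural open-cell Voronoi foam.

    Seeds are generated on the fly, so the foam can be queried anywhere
    without storing a point set.
    """
    cx, cy, cz = (math.floor(c / cell_size) for c in p)
    # Generous 5x5x5 neighbourhood so the nearest seeds are almost always found.
    seeds = [_cell_seed(cx + dx, cy + dy, cz + dz, cell_size, salt)
             for dx in range(-2, 3) for dy in range(-2, 3) for dz in range(-2, 3)]
    seeds.sort(key=lambda s: math.dist(p, s))
    s1, s2, s3 = seeds[:3]

    def to_bisector(a, b):
        # Distance from p to the plane equidistant from seeds a and b.
        return (math.dist(p, b) ** 2 - math.dist(p, a) ** 2) / (2.0 * max(math.dist(a, b), 1e-12))

    # Near an edge of p's cell: close to the faces shared with both s2 and s3.
    return max(to_bisector(s1, s2), to_bisector(s1, s3)) < strut_width
```

Larger cells with thin struts would generally give a lighter, more open and more flexible foam; a voxeliser or implicit-surface mesher could sample `foam_is_solid` to produce a printable model. Grading the cell size smoothly within a single part is left out of this sketch.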

Visit the technical papers section of the Siggraph 2016 website

Read Ke-Sen Huang’s invaluable list of Siggraph 2016 papers available on the web

(More detailed than the Siggraph website, and regularly updated as new material is released publicly)