Review: Nvidia GeForce RTX 3090 GPU

With 24GB of graphics memory, the GeForce RTX 3090 is the unofficial workstation card of Nvidia’s Ampere generation of gaming GPUs. See how it performs with key DCC applications in Jason Lewis’s real-world tests.

In this review, I am going to look at Nvidia’s recently released GeForce RTX 3090, the current top-of-the-line part in its GeForce line of consumer GPUs, and compare it to the two top-tier GPUs from the firm’s previous generation of cards, the GeForce RTX 2080 Ti and the prosumer Titan RTX.

I would have liked to have included another card that uses Nvidia’s current Ampere GPU architecture, the GeForce RTX 3080, but was unable to get my hands on one for this review. Tech news sites report that this is a problem that many people currently share. I am hoping to revisit this review at some point in the future with most, if not all, of the GeForce RTX 30 series GPUs when they become more widely available.

The positioning of the cards in the series is pretty standard when it comes to the lower-end and middle-tier cards, the GeForce RTX 3060 Ti, 3070 and 3080, but the GeForce RTX 3090 is something of an anomaly from a gaming standpoint. With a nearly fully unlocked GA102 GPU, and 24GB of GDDR6X memory, its specs are similar to the previous-generation Titan RTX, which raises the question: is this the Titan of Nvidia’s current Ampere generation of GPUs, or will we see an even more powerful Titan card in future?

But regardless of its name, the GeForce RTX 3090’s specs make it an ideal card for DCC workloads, which is why many are calling it the unofficial workstation GPU of the Ampere line-up. In this review, I am going to put that claim to the test, and see how the RTX 3090 measures up in a slew of real-world content creation tasks.

Jump to another part of this review

Technology focus: GPU architectures and APIs

Specifications and price

Testing procedure

Benchmark results

Other considerations

Verdict

Technology focus: GPU architectures and APIs

Before I discuss the card on test, here is a quick recap of some of the technical terms that will crop up in this review. If you’re already familiar with them, you may want to skip ahead.

Both Nvidia’s current Ampere GPU architecture and its previous-generation Turing architecture feature three types of processor cores: CUDA cores, designed for general GPU computing; Tensor cores, designed for machine learning operations; and RT cores, intended to accelerate ray tracing calculations.

In order to take advantage of the RT cores, software applications have to access them through a graphics API: in the case of the applications featured in this review, either DXR (DirectX Raytracing), used in real-time renderers like Unreal Engine, or Nvidia’s own OptiX API, used by most offline renderers. In most of those renderers, the OptiX rendering backend is provided as an alternative to an older backend based on Nvidia’s CUDA API. The CUDA backends work with a wider range of Nvidia GPUs, but OptiX enables ray tracing accelerated by the RTX cards’ RT cores, often described simply as ‘RTX acceleration’.

As an Ampere card, the GeForce RTX 3090 has the same types of processor cores as the two Turing cards on test, the GeForce RTX 2080 Ti and the Titan RTX, but more recent versions of them: it uses the second generation of Nvidia’s RT ray tracing cores and the third generation of its Tensor AI cores.

Specifications and price

Although many of Nvidia’s current board partners make their own GeForce RTX 3090 cards, the one on test is a Founders Edition card direct from Nvidia. Quite a few online reviews, like this one from Tom’s Hardware, dive deeply into the card’s architecture, so I am only going to include a brief overview here.

The GeForce RTX 3090 sports the most powerful Ampere GPU, the GA102, in a nearly fully unlocked version with 10,496 CUDA cores, 82 second-generation RT ray tracing cores and 328 third-generation Tensor machine learning cores. (The CUDA figure needs some context. In previous architectures, CUDA core count was based on the dedicated FP32 cores, of which the RTX 3090 has 5,248. In Ampere, the second datapath in each streaming multiprocessor, which handled only INT32 operations in Turing, can also execute FP32 operations, so peak FP32 throughput has effectively doubled, and Nvidia now bases its CUDA core count on that doubled throughput.)
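As a rough illustration of where those headline figures come from, the sketch below derives them from the number of active streaming multiprocessors (SMs). The per-SM unit counts and the figure of 82 active SMs are assumptions on my part, inferred from the core counts quoted above rather than stated in this review.

```python
# Per-SM unit counts assumed for the GA102 GPU (not stated in this review).
FP32_LANES_PER_SM = 64      # datapath that only executes FP32 operations
SHARED_LANES_PER_SM = 64    # datapath that executes either FP32 or INT32
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1


def ga102_core_counts(active_sms: int) -> dict:
    """Return headline core counts for a GA102 with the given number of active SMs."""
    return {
        "cuda": active_sms * (FP32_LANES_PER_SM + SHARED_LANES_PER_SM),
        "fp32_only": active_sms * FP32_LANES_PER_SM,
        "tensor": active_sms * TENSOR_CORES_PER_SM,
        "rt": active_sms * RT_CORES_PER_SM,
    }


# 82 active SMs is an assumption inferred from the 82 RT cores quoted above.
rtx_3090 = ga102_core_counts(82)
print(rtx_3090)
```

Under those assumptions, the function reproduces the 10,496 CUDA cores, 328 Tensor cores and 82 RT cores listed for the card, with 5,248 lanes in each of the two datapaths.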

The RTX 3090 comes equipped with 24GB of GDDR6X memory on a 384-bit bus. It has a base clock speed of 1,395MHz and a boost clock speed of 1,695MHz.
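To put the bus width in context, peak memory bandwidth can be estimated as the bus width in bytes multiplied by the per-pin data rate. The sketch below uses the 384-bit bus quoted above; the 19.5Gbps data rate for the card’s GDDR6X memory is an assumption that does not come from this review.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return (bus_width_bits / 8) * data_rate_gbps


# 384-bit bus is from the review; 19.5 Gbps per pin is an assumed GDDR6X data rate.
print(peak_bandwidth_gb_per_s(384, 19.5))
```

This is a theoretical ceiling only: real-world throughput depends on the access pattern of the application.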

One aspect of the RTX 3090 that sets it apart from the other Ampere GPUs – and all previous GeForce GPUs – is its power draw. The RTX 3090 is an extremely power-hungry GPU. It is officially rated at 350W and Nvidia recommends a minimum of 750W for your system’s PSU, but I would personally recommend 1,000W or more, especially if you are running an HEDT CPU and/or lots of system RAM and storage drives.
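A back-of-the-envelope power budget shows why a larger PSU is a sensible precaution. This is only a sketch: the 350W GPU figure is from the specification above, but the CPU wattage, the allowance for the rest of the system and the 70% maximum load target are illustrative assumptions, not measurements or an official sizing method.

```python
import math


def recommended_psu_watts(component_watts: dict, max_load_percent: int = 70) -> int:
    """Size a PSU so the summed peak draw stays at or below max_load_percent of its rating."""
    peak_draw = sum(component_watts.values())
    # Round up to the next 50W step, since PSUs are sold in coarse increments.
    return math.ceil(peak_draw * 100 / (max_load_percent * 50)) * 50


hedt_build = {
    "gpu": 350,             # RTX 3090 rated board power, from the review
    "cpu": 280,             # assumed TDP for a Threadripper 3990X
    "rest_of_system": 100,  # hypothetical allowance for RAM, drives and fans
}
print(recommended_psu_watts(hedt_build))
```

With these assumed figures, the estimate lands just above 1,000W, while swapping in a lower-wattage desktop CPU brings it much closer to Nvidia’s 750W minimum.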

The RTX 3090 is also, to put it bluntly, a massive card. At 12.3 inches long and 5.4 inches wide (31.3 x 13.8cm), and taking up a full three slots, it is over an inch longer and an inch wider than the GeForce RTX 3080 and the top-tier Turing GPUs. It is also a very heavy card. I haven’t actually weighed it, but it is significantly heavier than any of the Turing GPUs I have tested, and internet sources claim that it tips the scales at close to five pounds.

At $1,499, the GeForce RTX 3090 is $300 more expensive than the Founders Edition of its predecessor, the GeForce RTX 2080 Ti, which had a launch price of $1,199 (partner cards started at $999), but $1,000 less expensive than the Titan RTX, which had a launch price of $2,499.

Testing procedure

For this review, my test system was a Xidax X-10 workstation powered by an AMD Threadripper 3990X CPU. You can find more details in my review of the Threadripper 3990X.

CPU: AMD Threadripper 3990X

Motherboard: MSI Creator TRX40

RAM: 64GB of 3,600MHz Corsair Dominator DDR4

Storage: 2TB Samsung 970 EVO Plus NVMe SSD / 1TB WD Black NVMe SSD / 4TB HGST 7200rpm HD

PSU: 1300W Seasonic Platinum

CPU cooler: Alphacool 360mm AIO liquid cooler

OS: Windows 10 Pro for Workstations

For testing, I used the following applications:

Viewport and DCC application performance

3ds Max 2021.2, Blender 2.90, Fusion 360, Maya 2020.1, Modo 13.0v1, SolidWorks 2020 SP0, Substance Painter 2020.2.1, Unreal Engine 4.25

GPU rendering

Arnold for Maya (using MtoA 4.1.0), Blender 2.90 (using Cycles), Cinema 4D R22 (using Radeon ProRender), D5 Render 1.6.2, KeyShot 9.0, Maverick Studio 2021.1, OctaneBench 2020.1, Redshift 3.0.33, SolidWorks 2020 SP0 (using Visualize), V-Ray 5 for 3ds Max (using V-Ray GPU)

Other benchmarks

Metashape 1.6.5, Premiere Pro 2020 (14.1), Substance Alchemist 2020.3.0

Synthetic benchmarks

3DMark

In the viewport and editing benchmarks, the frame rate scores represent the figures attained when manipulating the 3D assets shown, averaged over five testing sessions to eliminate inconsistencies. In all of the rendering benchmarks, the CPU was disabled so only the GPU was used for computing. Testing was performed on a single 32” 4K display running at its native resolution of 3,840 x 2,160 pixels, at 60Hz.
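For readers who want to repeat this kind of test, averaging over several sessions can be sketched as follows. The frame rates are hypothetical placeholder values, not results from this review, and reporting the standard deviation alongside the mean is my own suggestion for judging how consistent the sessions were.

```python
from statistics import mean, stdev


def summarize_sessions(fps_per_session: list) -> tuple:
    """Return the mean frame rate and the sample standard deviation across sessions."""
    return mean(fps_per_session), stdev(fps_per_session)


# Hypothetical frame rates from five testing sessions of the same viewport scene.
sessions = [60, 62, 58, 61, 59]
average_fps, spread = summarize_sessions(sessions)
print(f"average: {average_fps:.1f} fps, spread: {spread:.2f} fps")
```

A small spread relative to the mean suggests the sessions were consistent; a large one suggests that something else on the system was interfering with a run.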

Benchmark results

Viewport and DCC application performance

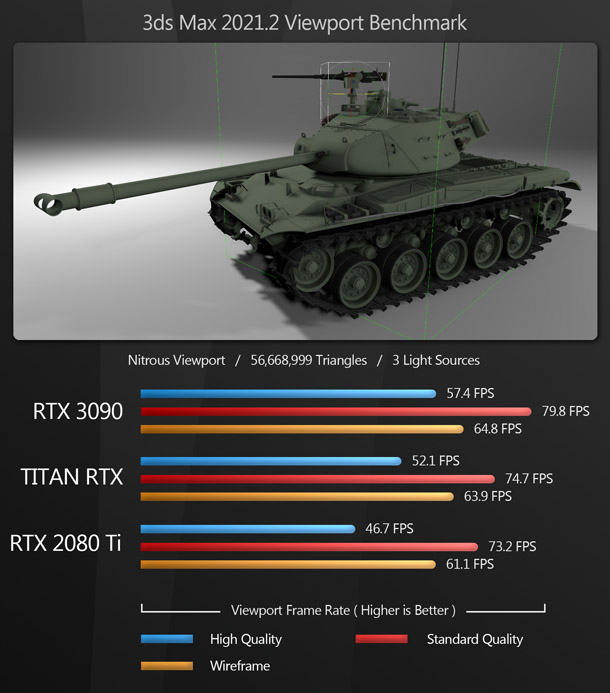

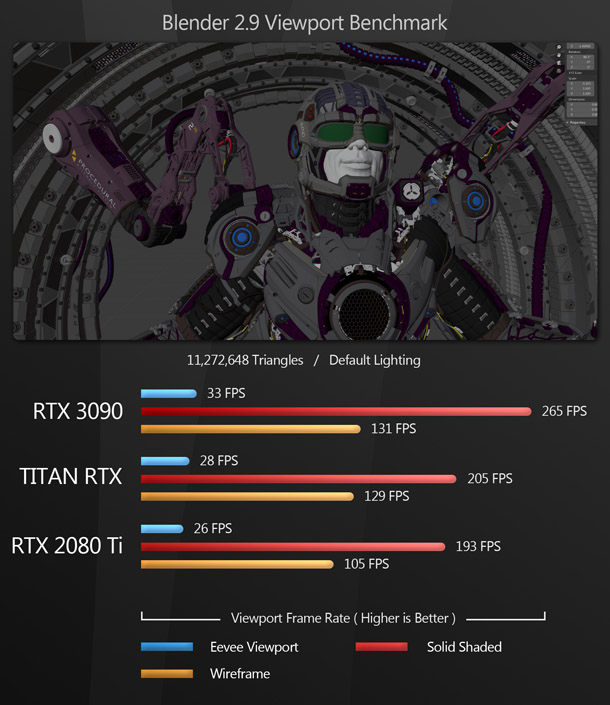

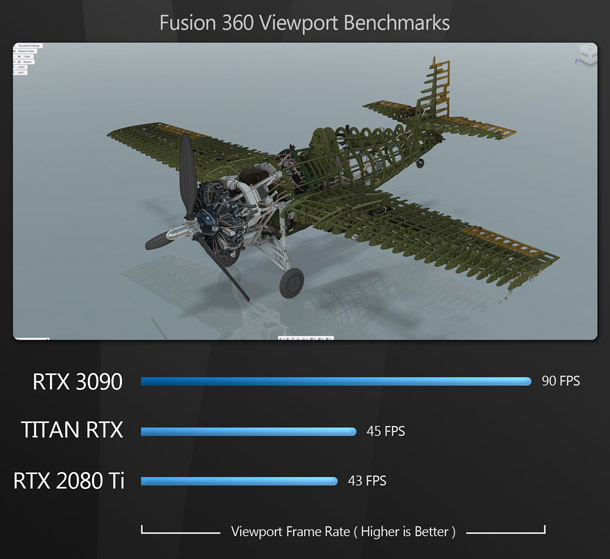

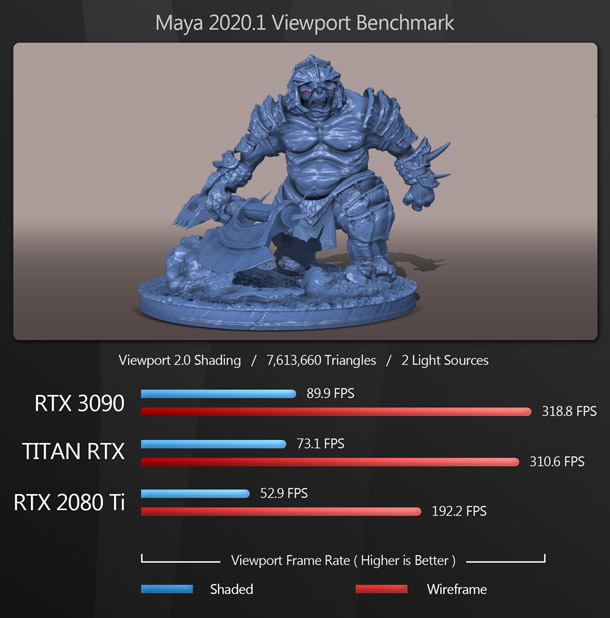

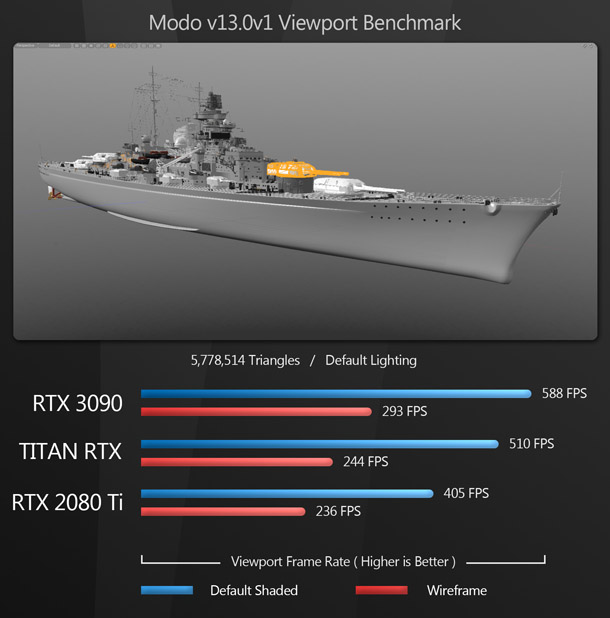

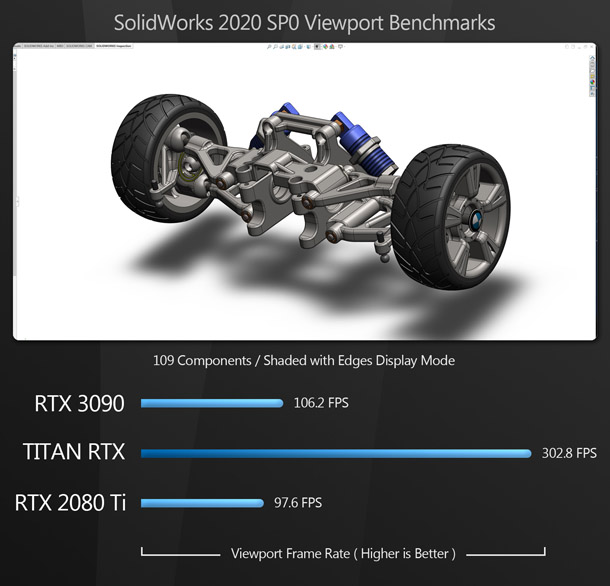

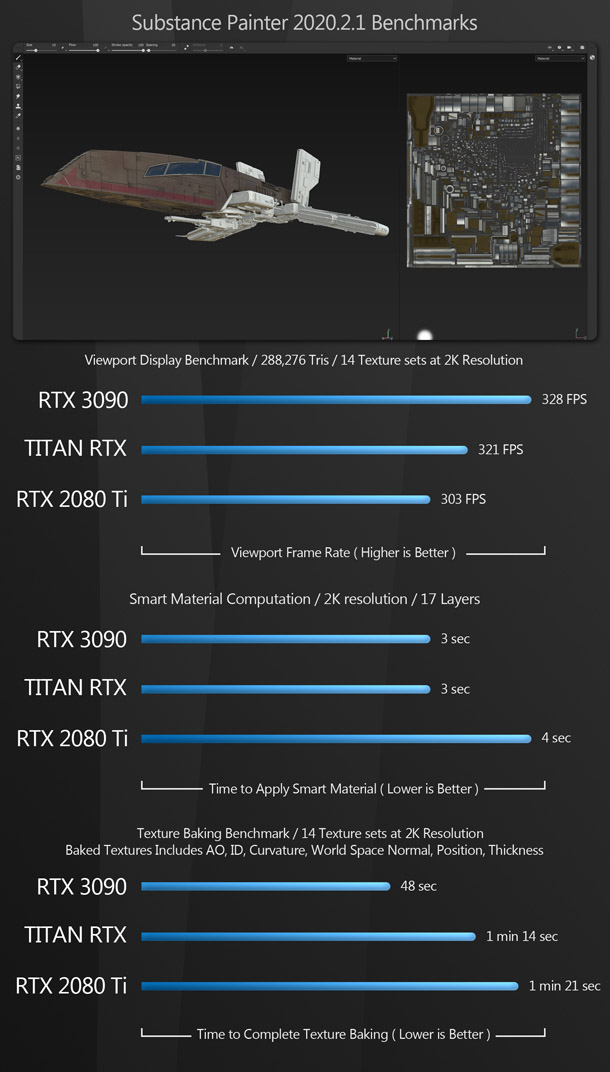

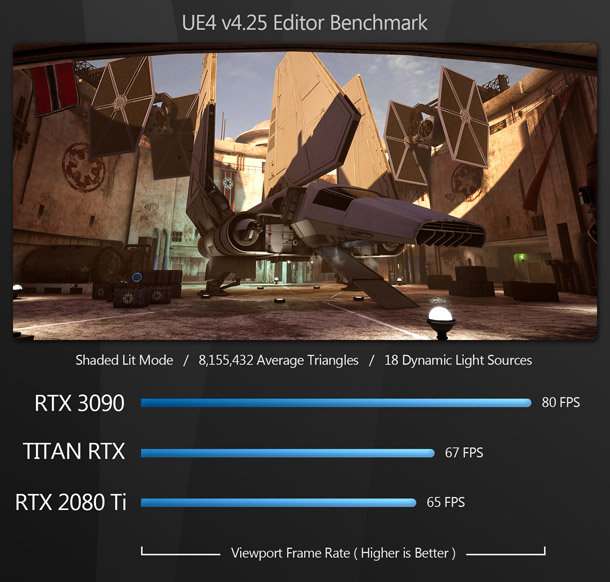

The viewport benchmarks include many of the key DCC applications, including 3ds Max, Blender and Maya, plus CAD package SolidWorks and game engine Unreal Engine.

The GeForce RTX 3090 takes first place in all of the viewport tests except one – the single anomaly being the SolidWorks test, in which the previous-generation Titan RTX has a substantial lead. This may be due to the fact that the Titan’s drivers enable features otherwise only available in Nvidia’s professional GPUs, although my tests suggest that for most applications, there is little difference in performance between Nvidia’s Studio and Game Ready drivers: something discussed in more detail later in this article.

Another point to note is that many off-the-shelf DCC applications don’t seem to be optimised to fully harness the power of newer GPUs: while the GeForce RTX 3090 takes the lead in the 3ds Max, Maya and Modo tests, that lead is smaller than I would have expected from its specifications alone.

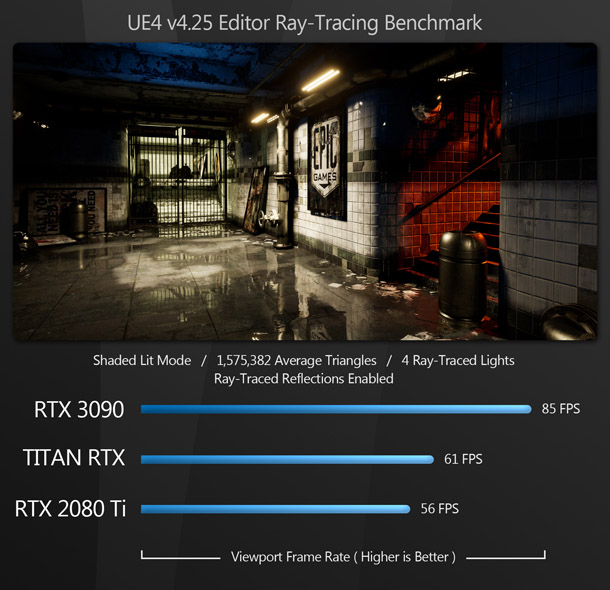

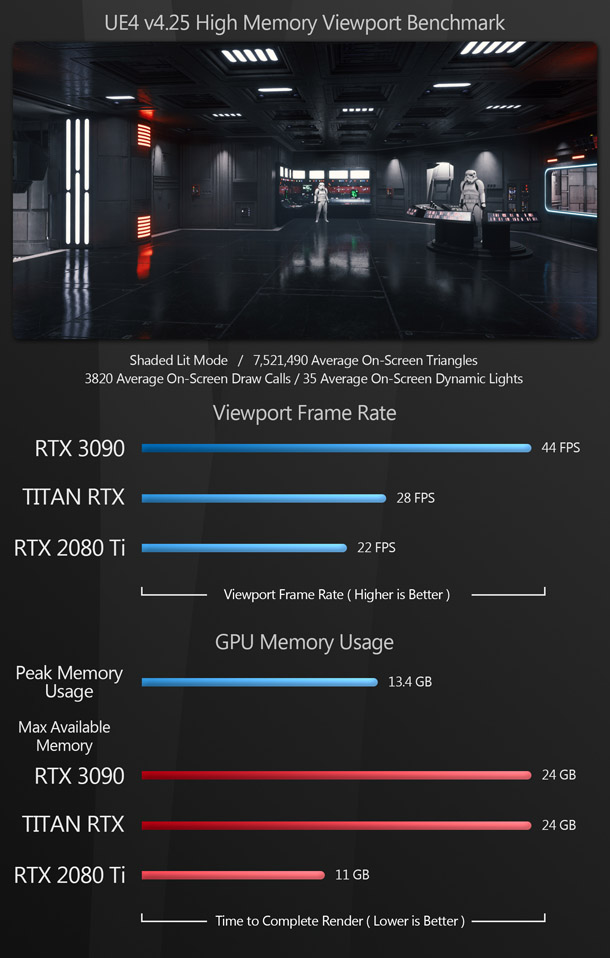

However, the Unreal Engine tests show significant gains in interactive performance over the Titan RTX and the GeForce RTX 2080 Ti. The UE4 viewport ray tracing benchmark is especially impressive, with the RTX 3090 hitting almost 90fps at 4K resolution.

GPU rendering

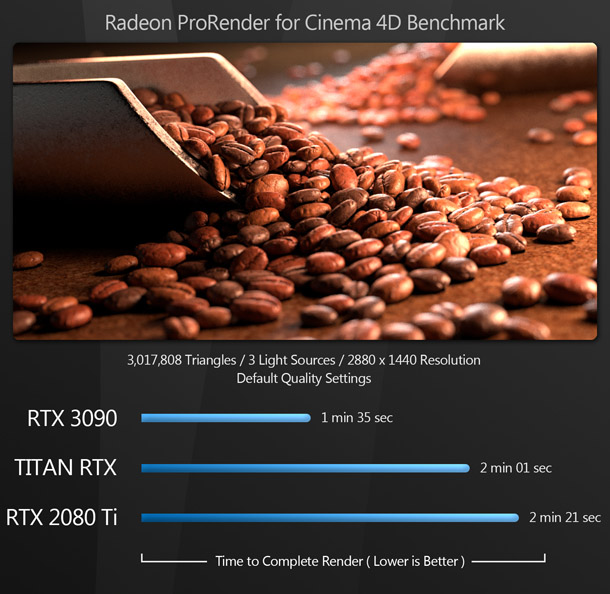

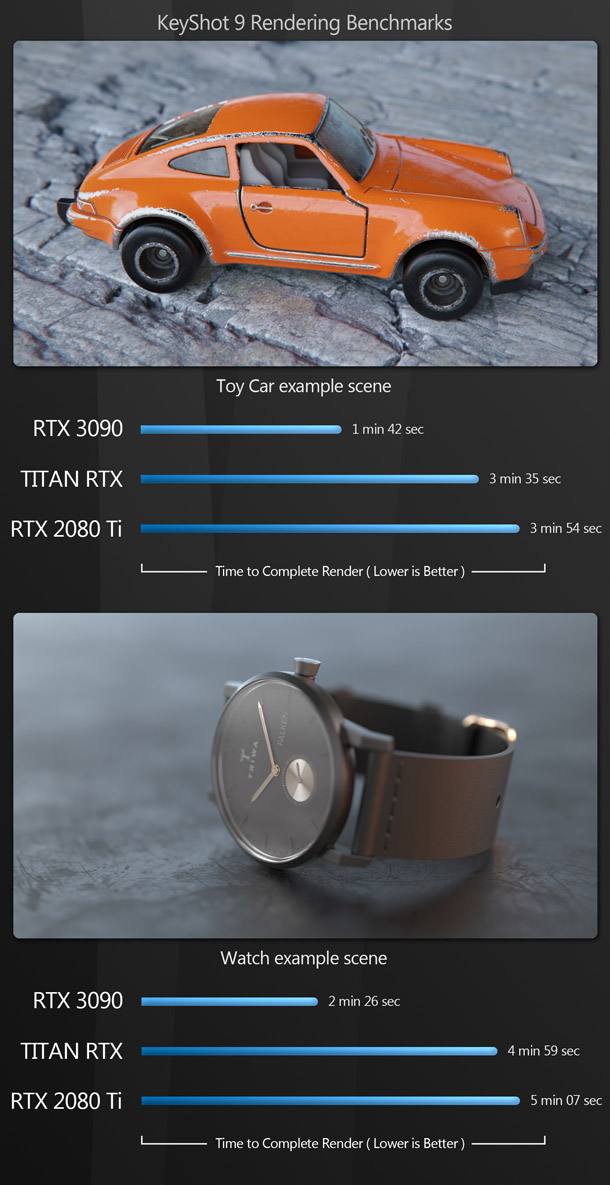

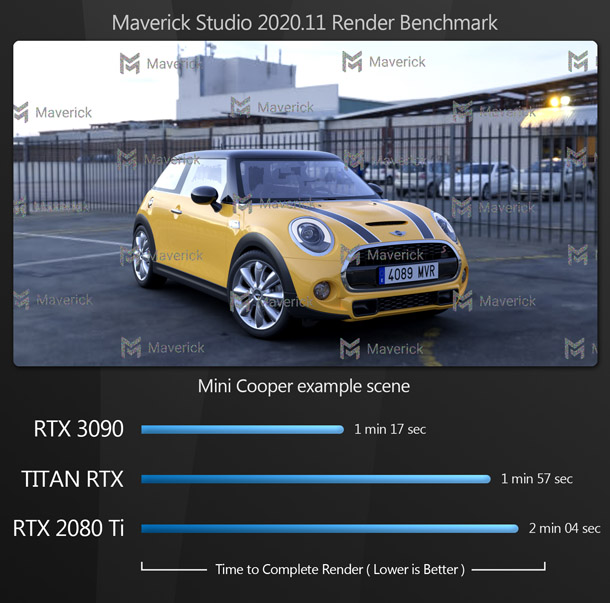

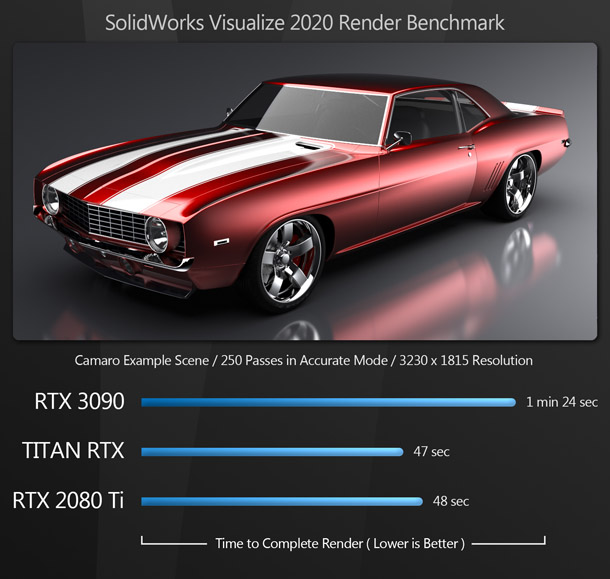

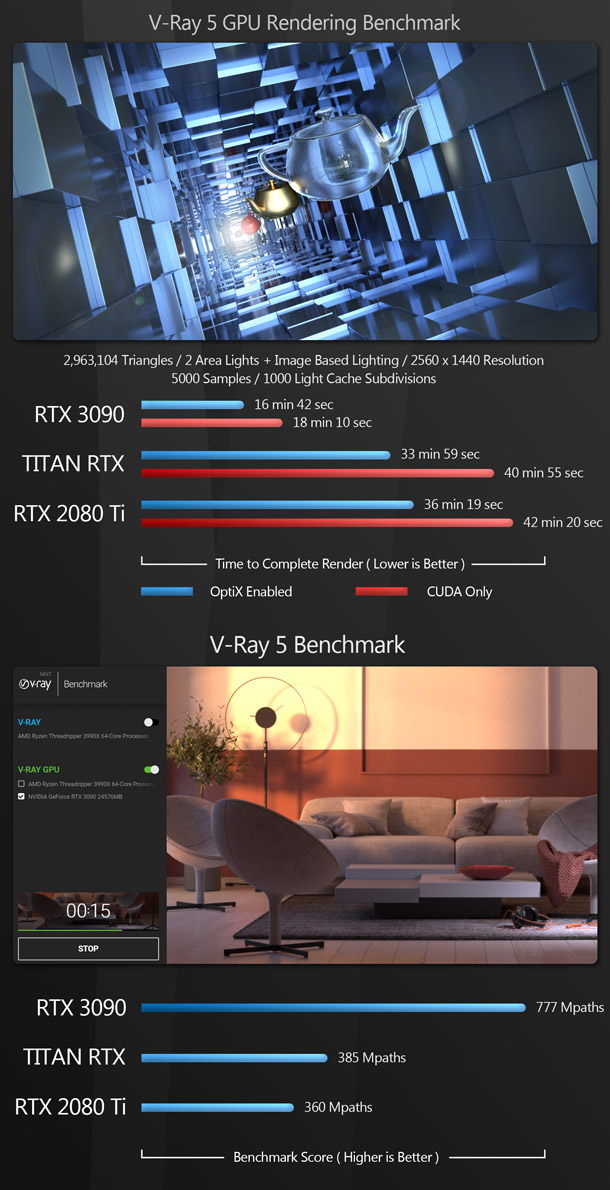

I’ve divided the rendering benchmarks into two groups. The first set of applications either don’t use OptiX for ray tracing, or don’t make it possible to turn the OptiX backend on and off, so it isn’t possible to assess the impact of RTX acceleration on performance.

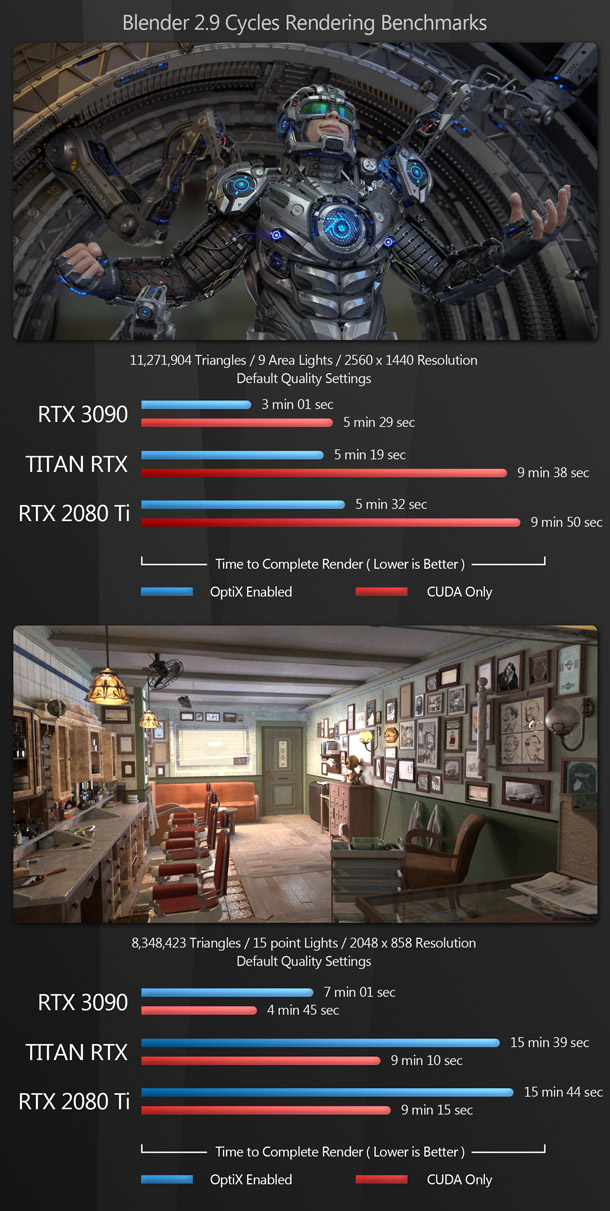

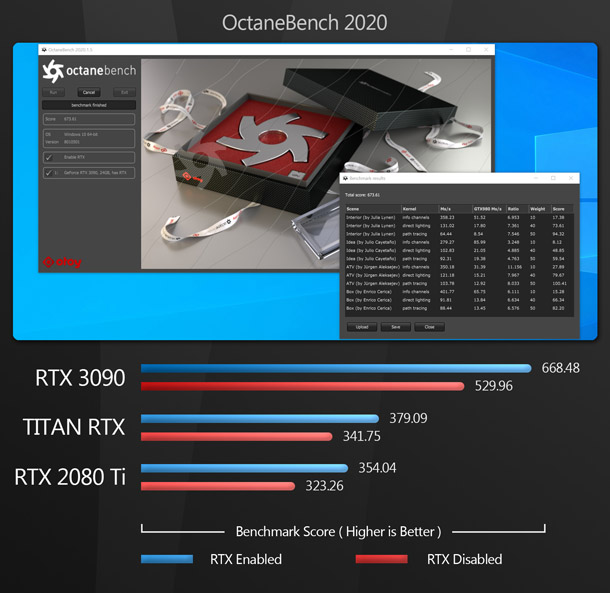

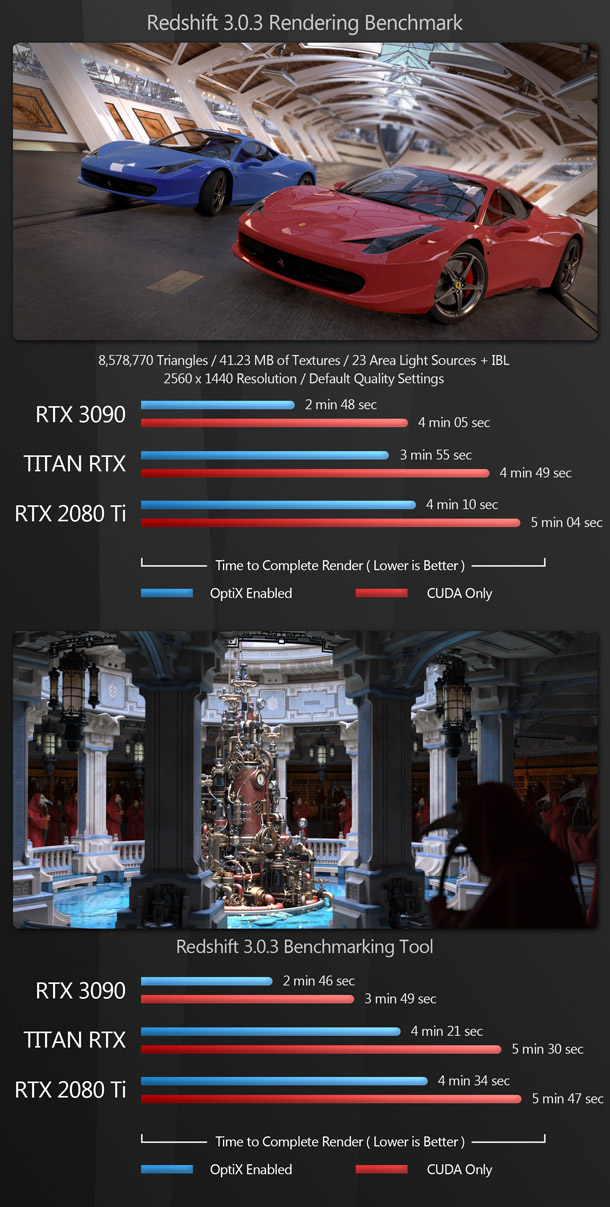

In the second set of applications, it is possible to render with OptiX enabled or using CUDA alone, making it possible to measure the increase in performance when RTX acceleration is enabled.

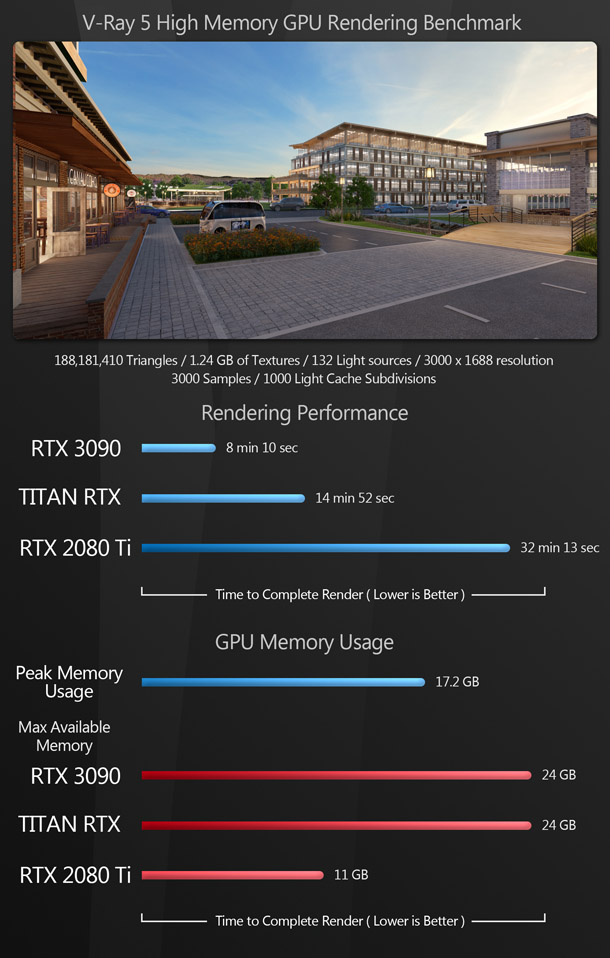

The GPU rendering benchmarks follow a similar pattern to the viewport tests, with the RTX 3090 taking first place in most cases: this time, by a larger margin. The scores with OptiX enabled are particularly impressive.

However, as with the viewport benchmarks, there is an anomaly – actually, two anomalies. The first is with SolidWorks Visualize, where the RTX 3090 falls behind the Titan RTX and the RTX 2080 Ti. My suspicion is that Visualize simply hasn’t yet been updated to make full use of the RTX 3090’s new GPU architecture.

The other anomaly (and the more curious one) is with Blender’s Cycles renderer. In the standard Blender Barber Shop interior scene, the RTX 3090 takes top spot, but enabling RTX acceleration actually makes the render significantly slower than using CUDA alone. This is also true for the Titan RTX and RTX 2080 Ti. I suspect that this may be due to the heavy use of indirect lighting in the scene: one of the few scenarios in which my tests suggest that CPU rendering still outperforms GPU rendering. When rendered on the CPU alone, the Xidax system’s Threadripper 3990X outperformed even the RTX 3090 by a significant margin.
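The effect of RTX acceleration in the second group of tests can be expressed as a simple speedup factor: the CUDA render time divided by the OptiX render time. The sketch below uses hypothetical render times purely to show the calculation; they are not figures from the charts above.

```python
def optix_speedup(cuda_seconds: float, optix_seconds: float) -> float:
    """Speedup from enabling OptiX: above 1.0 means faster, below 1.0 means slower."""
    return cuda_seconds / optix_seconds


# Hypothetical render times in seconds for two scenes on the same GPU.
typical_scene = optix_speedup(cuda_seconds=120, optix_seconds=80)
slower_scene = optix_speedup(cuda_seconds=100, optix_seconds=125)

for label, factor in (("typical", typical_scene), ("slower with OptiX", slower_scene)):
    print(f"{label}: {factor:.2f}x")
```

A result below 1.0, as in the second hypothetical case, corresponds to the kind of behaviour seen in the Cycles test, where enabling OptiX made the render slower.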

Other benchmarks

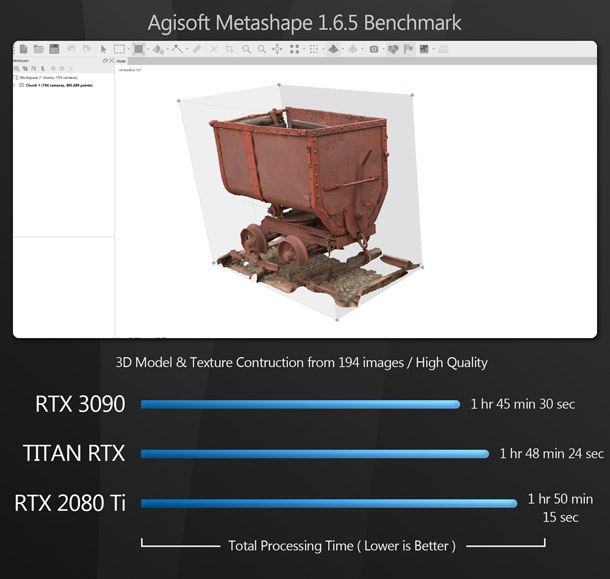

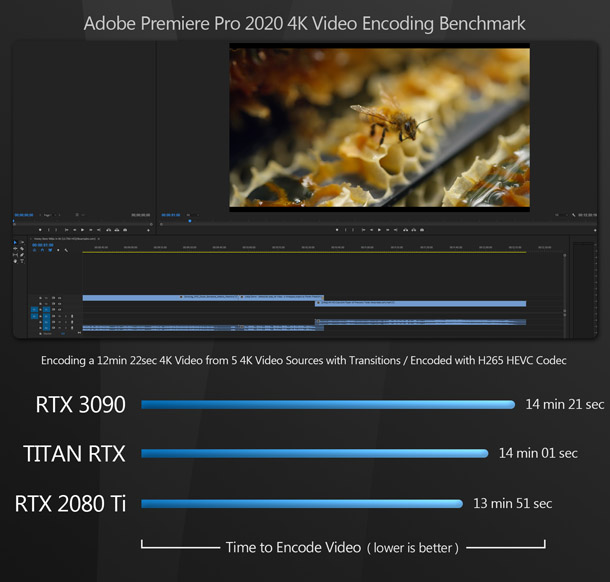

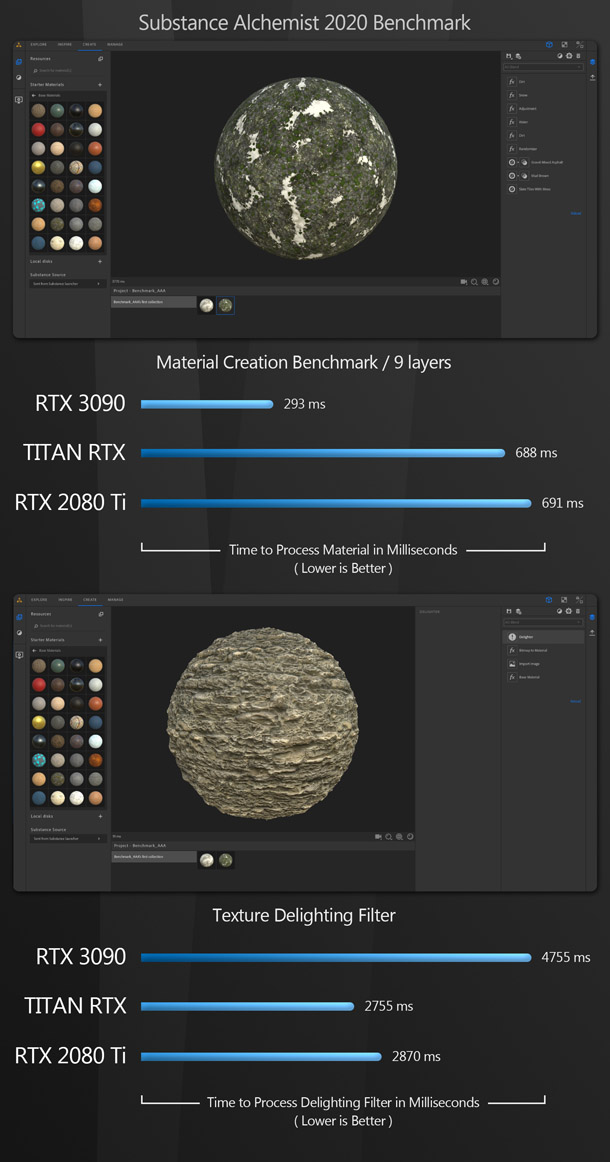

The next set of benchmarks make use of the GPU for more specialist tasks. Photogrammetry application Metashape uses the GPU for image processing, Substance Alchemist uses the GPU for texture baking, material processing, and to run certain filters, and Premiere Pro uses the GPU for video encoding.

Unlike the viewport and rendering benchmarks, the additional tests proved to be a mixed bag. In Metashape, the RTX 3090 comes in first, followed by the Titan RTX and the RTX 2080 Ti, but the margins are very small.

With Substance Alchemist, the RTX 3090 takes top spot in the material processing test but comes in last with the AI-based de-lighting test. Since Alchemist’s de-lighting filter didn’t even start working with the RTX 3090 until the version of the software used in this review, I imagine that it is still being optimised for the third-generation Tensor cores. Hopefully, we will see performance improve in future releases.

With Premiere Pro, the RTX 3090 takes last place, although again, the margins here are relatively small.

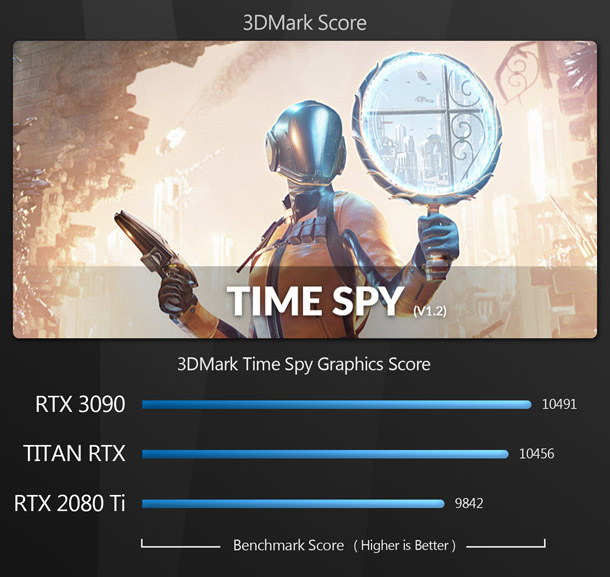

Synthetic benchmarks

Finally, we have the synthetic benchmark 3DMark. Synthetic benchmarks don’t accurately predict how a GPU will perform in production, but they do provide a way to compare the cards on test with older models, since scores for a wide range of GPUs are available online.

No surprises here: the RTX 3090 takes first place, followed by the Titan RTX and the RTX 2080 Ti.

Other considerations

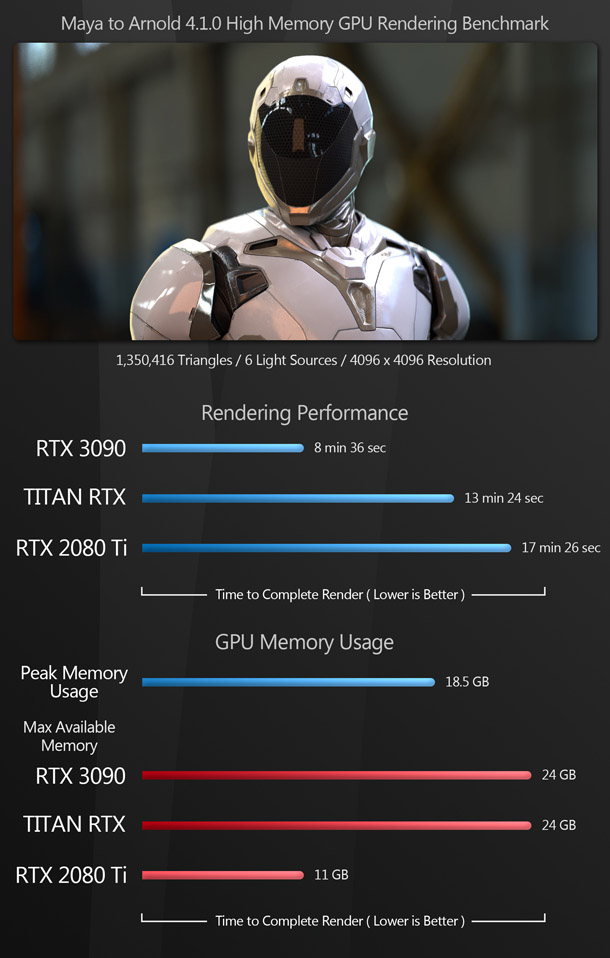

DCC tasks and memory usage

As part of the GeForce product line, the RTX 3090 is marketed as a consumer GPU – that is, a gaming GPU. But for many, it’s the unofficial workstation GPU of the Ampere generation. The reason is its 24GB of VRAM.

Many DCC tasks, particularly GPU rendering, use more of a GPU’s VRAM than gaming, with the possible exception of 8K gaming, which is barely in its infancy. Although many GPU renderers can work ‘out of core’ by paging data out to system RAM once the VRAM fills up, in most cases, this incurs a pretty significant performance penalty. Some software simply crashes if a task requires more VRAM than is available.

For that reason, my final group of benchmarks test GPU rendering of scenes that require a lot of VRAM.

The impact of VRAM on GPU rendering is most apparent when comparing the Titan RTX and RTX 2080 Ti. Both cards have almost identical GPUs, but at 24GB, the Titan RTX has more than twice as much GPU memory as the 11GB RTX 2080 Ti.

Another example is the UE4 test scene. At 13.4GB, loading the entire level into memory – as many devs do while working – requires more VRAM than is available on any GeForce GPU bar the RTX 3090. In contrast, when a game is played, only a small part of the game world is loaded. As an example, in my tests, running Red Dead Redemption 2 at 4K Ultra settings uses a maximum of 9.5GB of VRAM, and an average of 8.2GB.
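If you want to check whether a scene of a given size is likely to fit on your own card, one option is to read the GPU’s memory figures from Nvidia’s `nvidia-smi` command-line tool. The sketch below parses its CSV output and compares free VRAM with the 13.4GB UE4 level mentioned above. The sample output lines are hypothetical, the helper names are my own, and treating the scene size as GiB is an approximation.

```python
import subprocess

# Query used to fetch one CSV line per GPU; memory values are reported in MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text: str) -> list:
    """Parse 'name, used, total' lines into dictionaries with integer MiB values."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, used, total = [field.strip() for field in line.rsplit(",", 2)]
        gpus.append({"name": name, "used_mib": int(used), "total_mib": int(total)})
    return gpus


def scene_fits(gpu: dict, scene_gib: float) -> bool:
    """Return True if the scene is no larger than the VRAM currently free on the GPU."""
    free_mib = gpu["total_mib"] - gpu["used_mib"]
    return scene_gib * 1024 <= free_mib


def query_gpus() -> list:
    """Run nvidia-smi on the local machine (requires an Nvidia driver to be installed)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_memory(result.stdout)


# Hypothetical sample output, used here so the example runs without a GPU.
sample = "NVIDIA GeForce RTX 3090, 1024, 24576\nNVIDIA GeForce RTX 2080 Ti, 512, 11264\n"
for gpu in parse_gpu_memory(sample):
    print(gpu["name"], "fits" if scene_fits(gpu, 13.4) else "does not fit")
```

On a real system, calling `query_gpus()` in place of the sample string would report the figures for the installed card. Bear in mind that this only checks capacity: a renderer may need additional working memory beyond the size of the scene itself.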

Studio Drivers

Before I get to my verdict, a note on drivers. Nvidia produces two sets of GPU drivers compatible with the GeForce RTX 3090: Studio Drivers, intended for DCC work, and Game Ready Drivers.

In my review of the GeForce RTX 2080 Ti, I compared the performance of a range of applications under Studio and Game Ready Drivers, and found almost no difference in viewport or rendering performance. Studio Drivers are meant to improve software stability, but I have had no real stability issues when using the game drivers. If you also use your GeForce GPU for gaming and experience weird errors with your DCC applications, give the Studio Drivers a try; otherwise, there is no real reason to switch.

Verdict

The Titan of the Ampere generation?

Given its specs, and its strong performance in my DCC benchmarks, the GeForce RTX 3090 deserves its reputation as the unofficial workstation card of Nvidia’s Ampere generation of GPUs. In previous generations, that title was held by the Titan cards. So is the RTX 3090 also the unofficial Titan of the Ampere generation?

It certainly exhibits the same massive increase in VRAM over the next most powerful consumer card available, the GeForce RTX 3080, that the previous-generation Titan RTX did over the GeForce RTX 2080 Ti. But while the Titan RTX featured a fully unlocked GPU, the GPU in the GeForce RTX 3090 – like the RTX 2080 Ti before it – is not. A fully unlocked GA102 GPU has 10,752 available CUDA cores, 336 Tensor cores, and 84 RT cores; the GeForce RTX 3090’s version has 10,496 available CUDA cores, 328 Tensor cores and 82 RT cores. The Titan RTX’s drivers also featured a subset of pro-level features that the RTX 3090’s do not.
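To put “nearly fully unlocked” into numbers, the sketch below compares the RTX 3090’s core counts with those of the full GA102, using only the figures quoted above. The interpretation in the comment, that the gap corresponds to two disabled SMs, is my own inference from the RT core counts.

```python
from fractions import Fraction

# Core counts quoted above: (RTX 3090, fully unlocked GA102).
core_counts = {
    "cuda": (10496, 10752),
    "tensor": (328, 336),
    "rt": (82, 84),
}

for core_type, (enabled, full) in core_counts.items():
    share = Fraction(enabled, full)
    # Every core type reduces to the same ratio, consistent with 82 of 84 SMs being active.
    print(f"{core_type}: {share} of the full GPU ({float(share):.1%})")
```

All three core types work out to the same fraction, a little under 98% of the full chip.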

If we do see an official Titan of the Ampere generation, my guess is that it will feature a fully unlocked GA-102-400 GPU clocked slightly higher than the RTX 3090, and carry 48GB of VRAM.

Is the GeForce RTX 3090 worth the money?

At $1,499 for the Founders Edition, the GeForce RTX 3090 is pretty expensive for a gaming card. But is it good value for money for DCC work? The previous-generation Titan RTX proved to be popular among content creators, providing the power to tackle gruelling DCC tasks at a much lower price than Nvidia’s equivalent professional card. The GeForce RTX 3090 not only features the same massive 24GB of VRAM as the Titan RTX, but outperforms it in almost all of my tests, and costs $1,000 less.

If you are a content creator who needs that kind of GPU power, that seems like a recipe for great value. When you consider that the top-of-the-range Ampere-based workstation card, the RTX A6000, costs $4,650, the GeForce RTX 3090 is – at least relatively speaking – a steal.
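One crude way to compare value for memory-hungry DCC work is price per gigabyte of VRAM. The sketch below uses the prices quoted in this review; the 48GB capacity for the RTX A6000 is an assumption that is not stated above, and the metric ignores differences in performance and drivers.

```python
def price_per_gb(price_usd: float, vram_gb: int) -> float:
    """Price in US dollars per gigabyte of graphics memory."""
    return price_usd / vram_gb


# (price in USD, VRAM in GB); prices are from the review.
cards = {
    "GeForce RTX 3090": (1499, 24),
    "Titan RTX": (2499, 24),
    "RTX A6000": (4650, 48),  # 48GB is an assumed capacity, not stated in the review
}

for name, (price, vram) in cards.items():
    print(f"{name}: ${price_per_gb(price, vram):.2f} per GB")
```

On that measure alone, and under that assumption, the RTX 3090 comes out well ahead of both of the other cards.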

Summary

So what conclusions can you take away from all the information presented in this review? For one thing, the GeForce RTX 3090 is a beast of a GPU. Even though it’s officially a gaming card, for gaming alone, it’s overkill. But for DCC work, it’s one of the best GPUs that money can buy. With a massive increase in CUDA core count and a new generation of ray tracing and machine learning cores, it significantly outperforms all previous-generation GeForce cards, and even – SolidWorks aside – the Titan RTX. Just make sure you have a strong power supply, and a big enough case for it to fit in, if you plan to upgrade!

Lastly, I just want to say thanks for taking the time to stop by here. I hope this information has been helpful. If you have any questions or suggestions, let me know in the comments below.

Links

Read more about the GeForce RTX 3090 on Nvidia’s website

About the reviewer

Jason Lewis is Senior Environment Artist at Obsidian Entertainment and CG Channel’s regular reviewer. You can see more of his work in his ArtStation gallery. Contact him at jason [at] cgchannel [dot] com.

Acknowledgements

I would like to give special thanks to the following people for their assistance in bringing you this review:

Jordan Dodge of Nvidia

Kasia Johnston of Nvidia

Sean Kilbride of Nvidia

Cole Hagedorn of Edelman

Xidax

Redshift Rendering Technologies/Maxon Computer

Stephen G Wells

Adam Hernandez

Thad Clevenger