Review: Nvidia GeForce RTX 2080 Ti

Jason Lewis assesses how Nvidia’s current top-of-the-range gaming GPU compares to the firm’s other GeForce and Titan RTX graphics cards in a punishing series of real-world 3D and GPU rendering tests.

If you’re a regular reader of CG Channel, you may remember that last year, I assessed how well Nvidia’s latest Turing graphics cards handle 3D software in our group test of GeForce, Titan and Quadro RTX GPUs. In this follow-up review, I’m going to take a look at a GPU I didn’t include first time around: Nvidia’s current top-of-the-range consumer card, the GeForce RTX 2080 Ti.

As well as seeing how the RTX 2080 Ti performs with a representative range of DCC applications, I will be testing how far Nvidia’s RTX hardware can accelerate GPU rendering, and attempting to answer a couple of questions that readers posed in response to the previous review: how important is memory capacity when choosing a GPU for production work, and how important is it to use Nvidia’s Studio GPU drivers?

For comparison, I will be re-testing the other consumer and prosumer cards from the original review: the current-generation Titan RTX and GeForce RTX 2080, and the previous-generation GeForce GTX 1080 and GeForce GTX 1070. I haven’t included the Titan V this time around, since my original tests showed that the Titan RTX was both cheaper and better for DCC work; and I hope to return to Nvidia’s workstation graphics cards, the Quadro RTX series, in a future review.

Unfortunately, I haven’t been able to test Nvidia’s low-end or mid-range GeForce RTX cards, the GeForce RTX 2060 and 2070 and their Super variants. However, I have included a popular synthetic benchmark, 3DMark, as part of my tests, so you should be able to get some idea of how they would have performed by comparing my results to the other 3DMark scores available online.

Technology focus: GPU architectures and APIs

Before I discuss the cards on test, here’s a quick recap of some of the technical terms I touched on in the previous review. If you’re already familiar with them, you may want to skip ahead.

Nvidia’s current-generation Turing GPU architecture features three types of processor cores: CUDA cores, designed for general GPU computing; Tensor cores, designed for machine learning operations; and RT cores, new in the RTX cards, and intended to accelerate ray tracing calculations.

In order to take advantage of the RT cores, software applications have to access them through a graphics API: in the case of the applications featured in this review, either DXR (DirectX Raytracing), used in Unreal Engine, or Nvidia’s own OptiX API, used by the majority of the offline renderers.

In most of those renderers, the OptiX rendering backend is provided as an alternative to an older backend based on Nvidia’s CUDA API. The CUDA backends work with a wider range of Nvidia GPUs, but OptiX enables ray tracing accelerated by the RTX cards’ RT cores, often described simply as ‘RTX acceleration’.

Specifications and prices

Although a number of manufacturers produce their own variants of the GeForce RTX 2080 Ti, for the purposes of this review, I used one of Nvidia’s own Founders Edition cards.

If you discount the Titan RTX, which occupies a halfway house between traditional gaming and workstation cards, it’s Nvidia’s current top-of-the-range consumer GPU.

In fact, the 2080 Ti and the Titan RTX have similar specs. Both use Nvidia’s TU102 GPU with the same base clock speed of 1,350MHz, although the Titan has a higher boost clock speed: 1,770MHz, as opposed to 1,545MHz for the 2080 Ti, or 1,635MHz on the factory-overclocked Founders Edition. The Titan’s TU102 is also fully unlocked, with 4,608 CUDA cores, 576 Tensor cores and 72 RT cores, whereas the 2080 Ti’s slightly nerfed version has 4,352 CUDA cores, 544 Tensor cores and 68 RT cores.

The biggest difference between the cards is the amount of onboard GDDR6 RAM they carry. The 2080 Ti has 11GB of RAM; at 24GB, the Titan has more than double that. Price varies by a similar margin: the 2080 Ti tested has a current street price of around $1,200; the Titan of around $2,500.
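
To make the trade-off between the two TU102 cards concrete, the short Python sketch below uses the core counts, memory capacities and approximate street prices quoted above. It assumes the standard Turing SM layout of 64 CUDA cores, 8 Tensor cores and 1 RT core per SM, so it can infer how many SMs each card has enabled, and then compares the Titan RTX with the RTX 2080 Ti spec by spec. The names in it are illustrative, not part of any tool used in this review.

```python
# Specs quoted in this review for the two TU102-based cards.
# Prices are approximate street prices, not fixed list prices.
SPECS = {
    "GeForce RTX 2080 Ti": {"cuda": 4352, "tensor": 544, "rt": 68, "vram_gb": 11, "price_usd": 1200},
    "Titan RTX": {"cuda": 4608, "tensor": 576, "rt": 72, "vram_gb": 24, "price_usd": 2500},
}

# Assumption: each Turing SM has 64 CUDA cores, 8 Tensor cores and 1 RT core.
CUDA_PER_SM, TENSOR_PER_SM, RT_PER_SM = 64, 8, 1


def enabled_sms(card: dict) -> int:
    """Infer the number of enabled SMs from the RT core count (one per SM)."""
    sms = card["rt"] // RT_PER_SM
    # Sanity check: the other core counts should match the same SM count.
    assert card["cuda"] == sms * CUDA_PER_SM
    assert card["tensor"] == sms * TENSOR_PER_SM
    return sms


def ratio(key: str) -> float:
    """Titan RTX value divided by RTX 2080 Ti value for one spec."""
    return SPECS["Titan RTX"][key] / SPECS["GeForce RTX 2080 Ti"][key]


for name, card in SPECS.items():
    print(f"{name}: {enabled_sms(card)} SMs enabled")
for key in ("cuda", "vram_gb", "price_usd"):
    print(f"{key}: Titan / 2080 Ti = {ratio(key):.2f}x")
```

Run as written, it reports 68 SMs for the 2080 Ti and 72 for the Titan, and ratios of 1.06x for CUDA cores, 2.18x for memory and 2.08x for price: roughly 6% more compute hardware, but more than twice the memory for roughly twice the money.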

You can see the specs for the other cards tested here, the GeForce RTX 2080, GeForce GTX 1080 and GeForce GTX 1070, in the table below, and read more about their pros and cons in the original group test.

Testing procedure

As with the previous group test, the test system for this review was a BOXX Technologies APEXX T3 workstation sporting an AMD Ryzen Threadripper 2990WX CPU, 128GB of 2666MHz DDR4 RAM, a 512GB Samsung 970 Pro M.2 NVMe SSD, and a 1000W power supply.

Testing was done using a subset of the applications used in the group test, in some cases updated to the current versions of the software. The benchmarks were run on Windows 10 Pro for Workstations, and were broken into four categories:

Viewport and editing performance

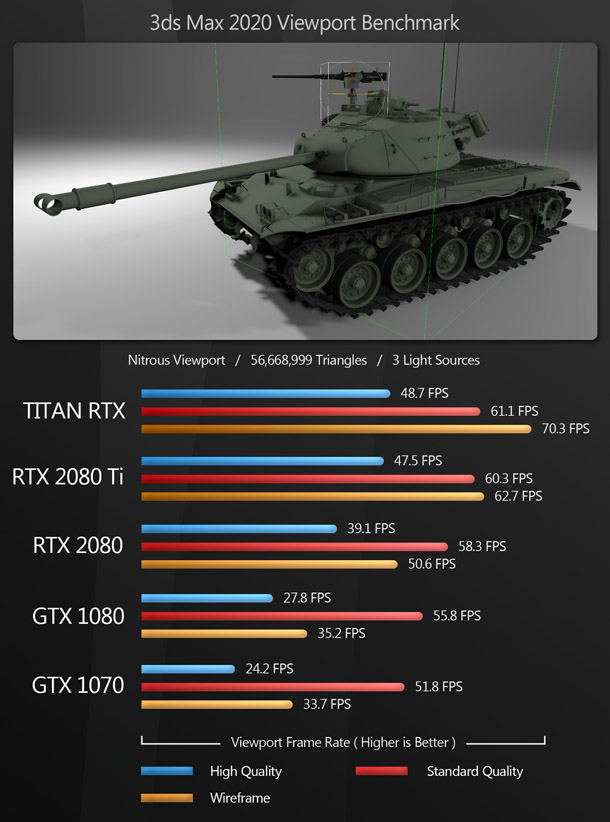

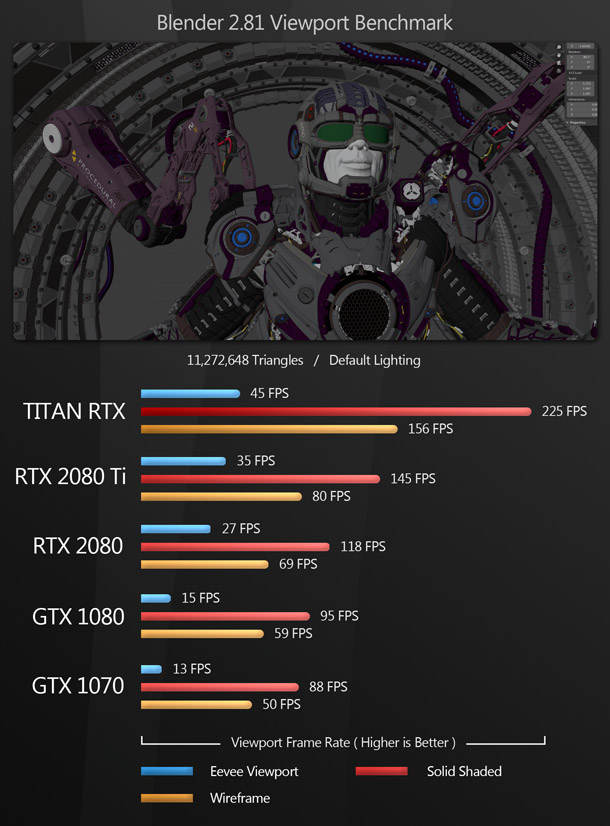

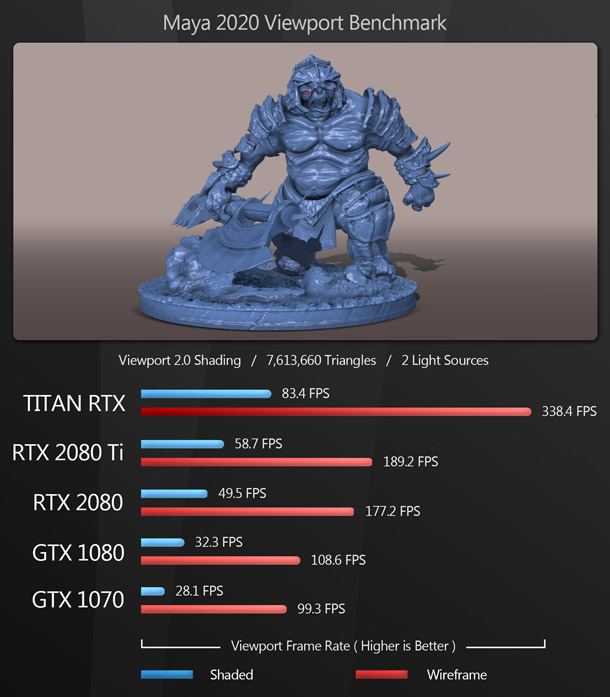

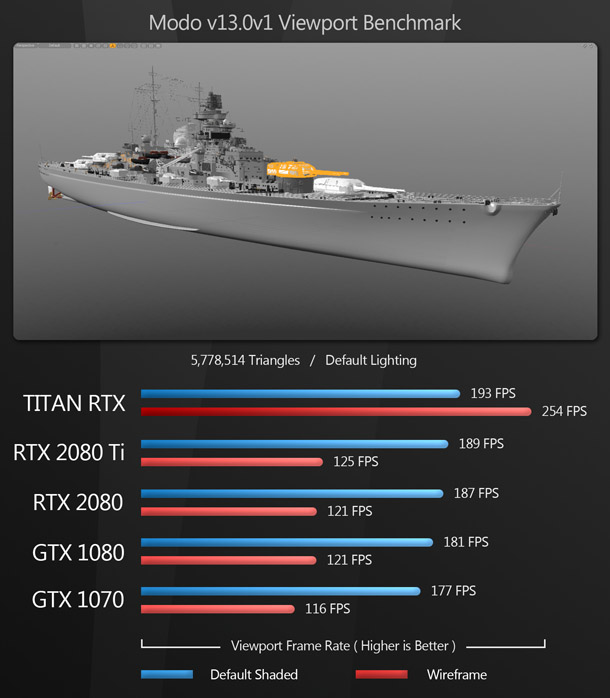

3ds Max 2020, Blender 2.81, Maya 2020, Modo 13.0v1, SolidWorks 2020, Substance Painter 2019, Unreal Engine 4.24

Rendering

Blender 2.81 (using Cycles), Cinema 4D R20 (using Radeon ProRender), KeyShot 9, Maverick Studio Build 410, OctaneRender 2019 (using OctaneBench), Redshift 3.0.13 (beta) for 3ds Max, SolidWorks 2020 Visualize, V-Ray Next (V-Ray 4.3 for 3ds Max, using V-Ray GPU)

Other benchmarks

Metashape 1.5.1, Premiere Pro CC 2019 (video encoding), Substance Alchemist 2019, Unreal Engine 4.24 (VR performance)

Synthetic benchmarks

3DMark

In the viewport and editing benchmarks, the frame rate scores represent the figures attained when manipulating the 3D assets shown, averaged over five testing sessions to reduce inconsistencies. In all of the rendering benchmarks, the CPU was disabled, so only the GPU was used for computing. Testing was performed on a single 32” 4K display, running at its native resolution of 3,840 x 2,160px at 60Hz.
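
Averaging over several sessions smooths out run-to-run variation rather than removing it entirely. As a minimal sketch of that step, the Python below takes the frame rates from five sessions and returns both the mean and the sample standard deviation, which indicates how consistent the runs were. The frame rates are hypothetical values for illustration only, not results from this review, and reporting the spread is an addition to the procedure described above.

```python
from statistics import mean, stdev


def summarize_sessions(fps_per_session: list[float]) -> tuple[float, float]:
    """Return (mean fps, sample standard deviation) across testing sessions."""
    if len(fps_per_session) < 2:
        raise ValueError("need at least two sessions to estimate spread")
    return mean(fps_per_session), stdev(fps_per_session)


# Hypothetical viewport frame rates from five sessions (illustrative only).
sessions = [58.0, 61.0, 60.0, 59.0, 62.0]
avg, spread = summarize_sessions(sessions)
print(f"{avg:.1f} fps (±{spread:.1f} across {len(sessions)} sessions)")
```

For these sample values, it prints a mean of 60.0fps with a spread of about 1.6fps.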

Benchmark results

Viewport and editing performance

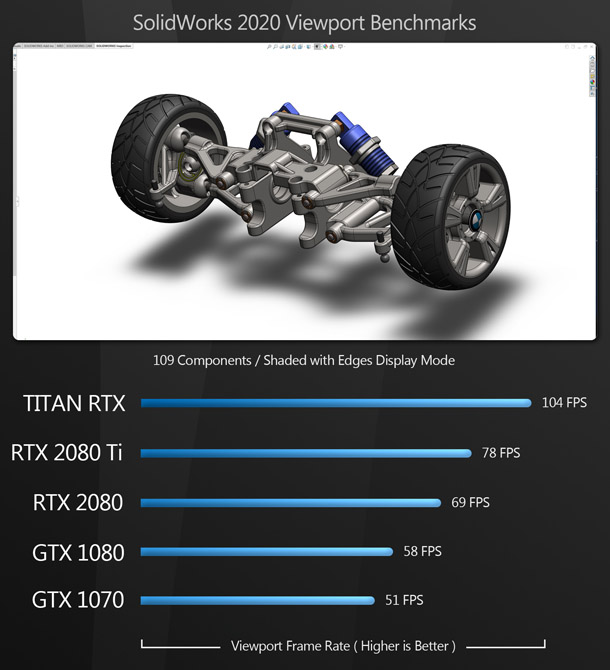

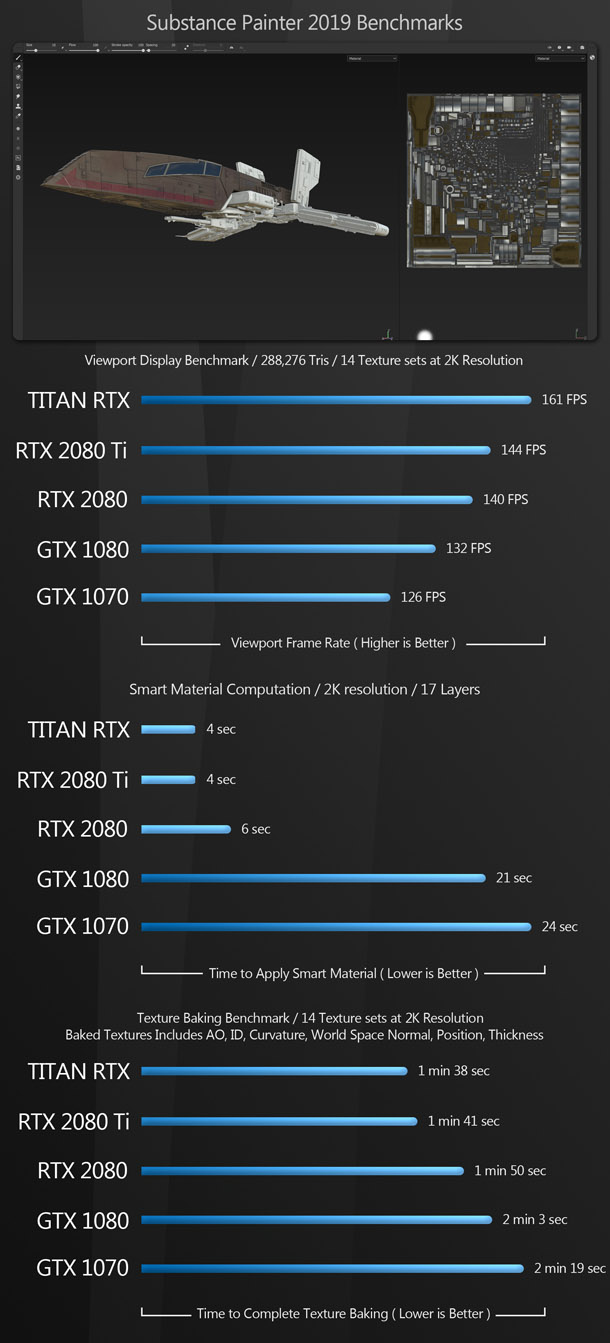

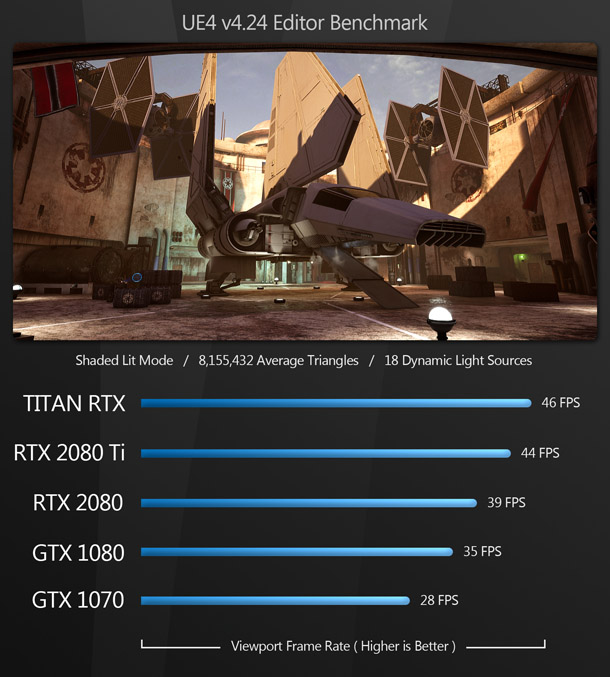

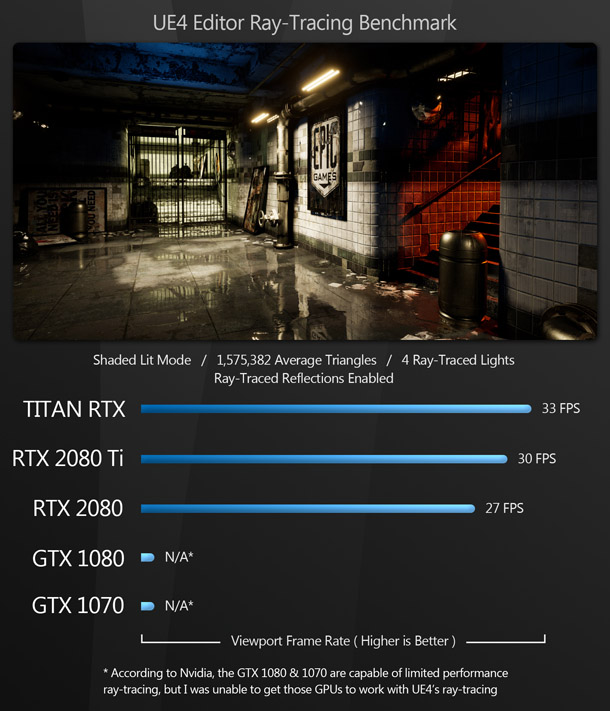

The viewport benchmarks include many of the key DCC applications – both general-purpose 3D software like 3ds Max, Blender and Maya, and more specialist tools like Substance Painter – plus CAD package SolidWorks and game engine Unreal Engine.

As expected, the Titan RTX takes first place in all of the tests, with the RTX 2080 Ti coming a very close second, except in the SolidWorks benchmark, where the Titan has a commanding lead. The RTX 2080 takes third place in all of the tests, followed by the GTX 1080 and the GTX 1070.

Rendering

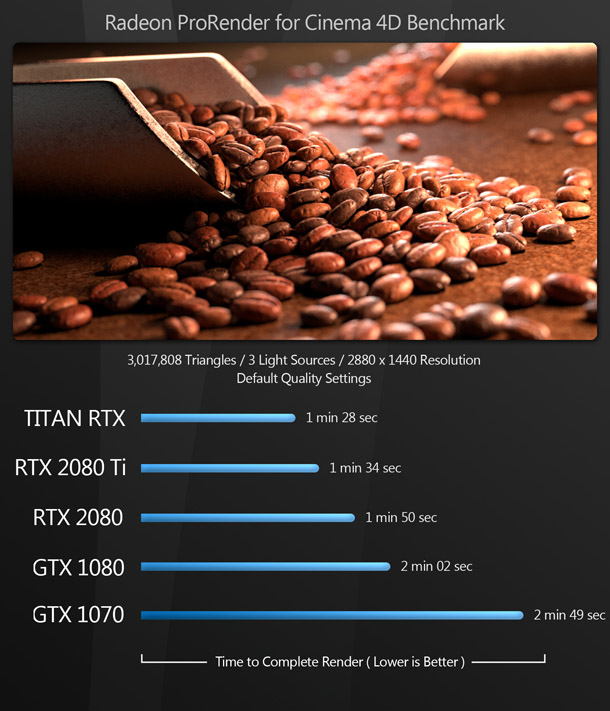

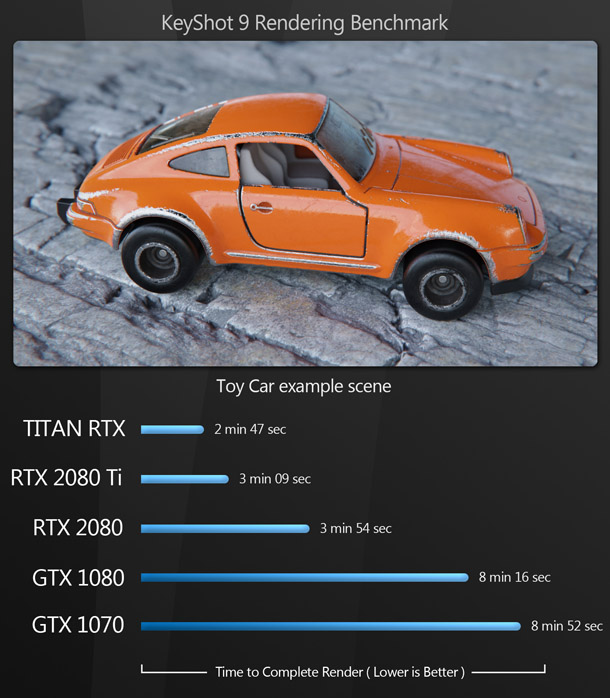

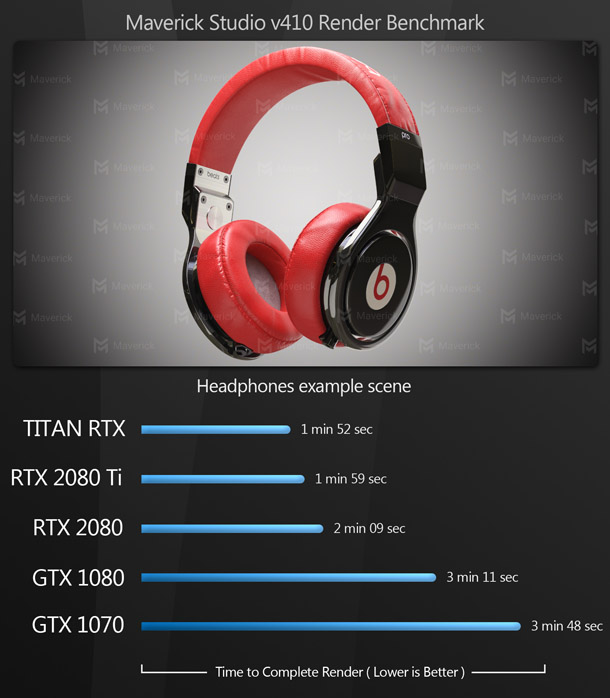

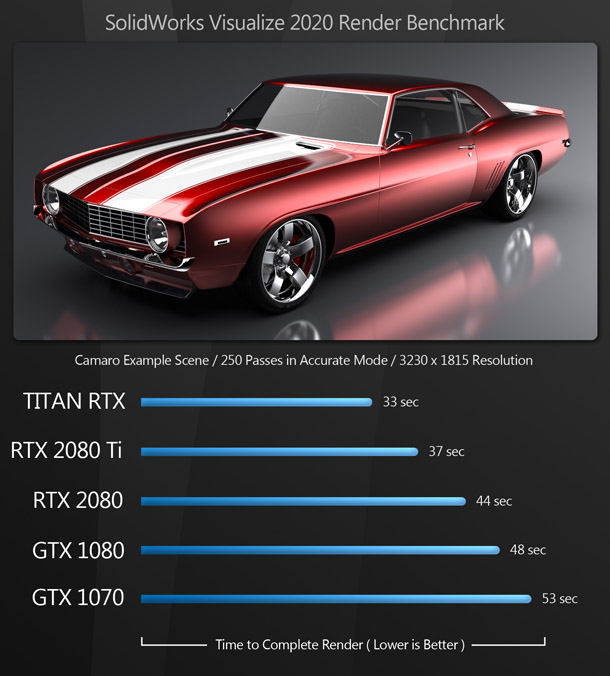

I’ve divided the rendering benchmarks into two groups. The applications in the first set either don’t use OptiX for ray tracing, or don’t let you turn the OptiX backend on and off, so it isn’t possible to assess the impact of RTX acceleration on performance.

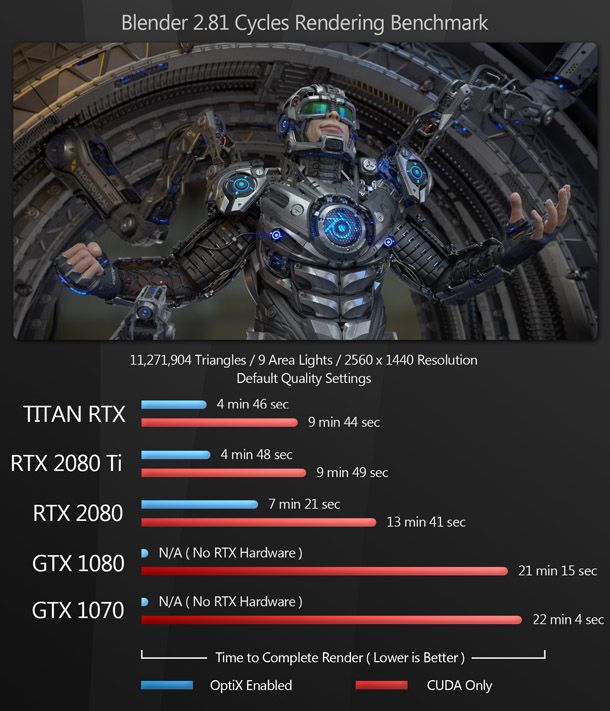

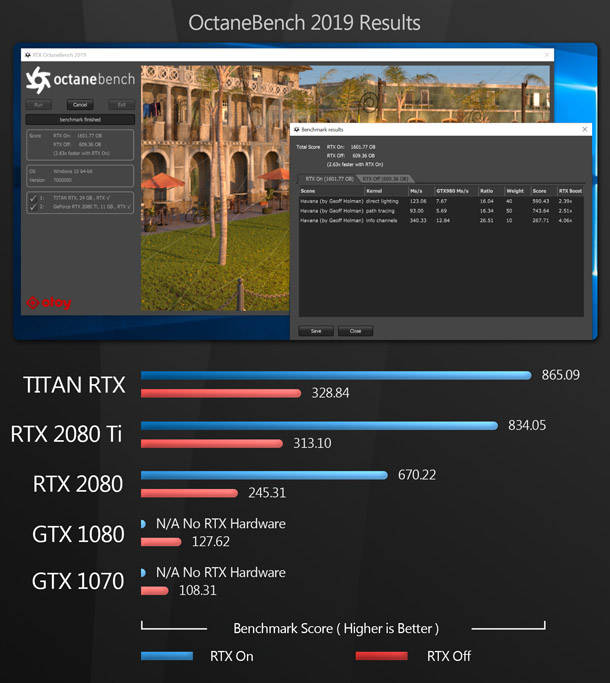

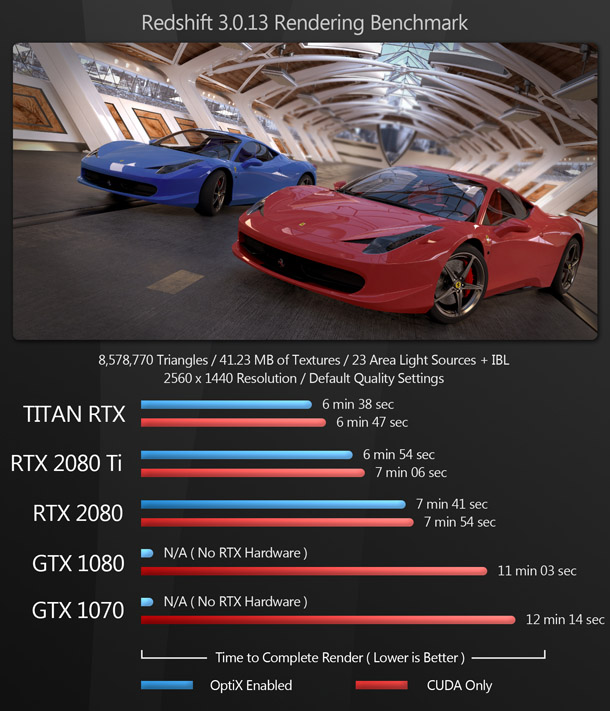

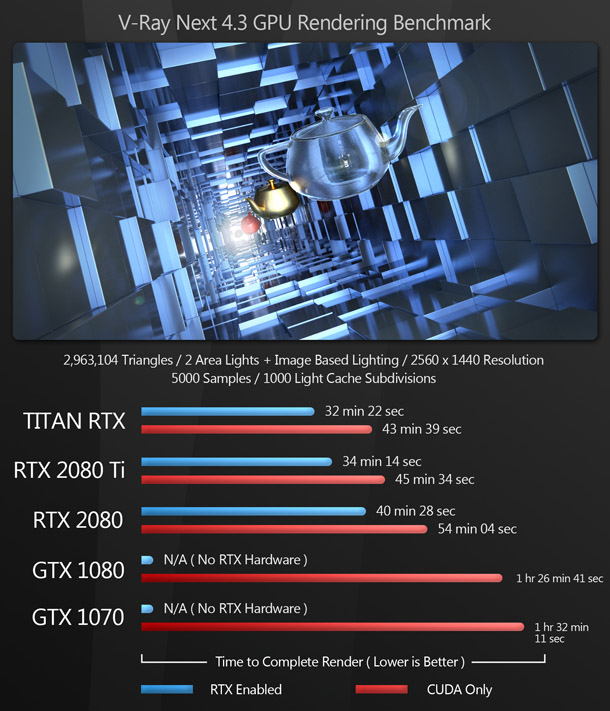

In the second set of applications, it is possible to render with OptiX enabled or using CUDA alone, making it possible to measure the increase in performance when RTX acceleration is enabled.

GPU rendering performance follows a similar pattern to viewport performance: the Titan RTX takes first place in all of the tests, although the RTX 2080 Ti follows it pretty closely. The RTX 2080 takes third place in all of the tests, followed by the GTX 1080 and GTX 1070.

What is interesting to see is how performance increases when OptiX is enabled, and the software can offload ray tracing calculations to the RT cores of the RTX cards. In Redshift, the impact is relatively small – although bear in mind that version 3.0 is still in early access – but in the V-Ray benchmark, performance increases by 33-35%, and in the Blender benchmark, by 85-105%.
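
The percentages above describe the increase in performance, not the reduction in render time, and the two are easy to confuse. The Python sketch below assumes that a benchmark reports render time, and treats performance as the reciprocal of that time, so a render that finishes in half the time counts as a 100% increase. The function name and the render times are hypothetical, for illustration only.

```python
def speedup_percent(cuda_time_s: float, optix_time_s: float) -> float:
    """Percentage performance increase from switching the CUDA backend to OptiX.

    Performance is treated as the reciprocal of render time, so a render that
    takes half as long counts as a 100% increase.
    """
    if cuda_time_s <= 0 or optix_time_s <= 0:
        raise ValueError("render times must be positive")
    return (cuda_time_s / optix_time_s - 1.0) * 100.0


# Hypothetical render times in seconds (illustrative, not measured here).
print(f"{speedup_percent(120.0, 90.0):.0f}%")   # → 33%
print(f"{speedup_percent(200.0, 100.0):.0f}%")  # → 100%
```

By this measure, the 85-105% gains in the Blender benchmark mean that the OptiX renders took roughly 49-54% as long as the CUDA renders.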

Other benchmarks

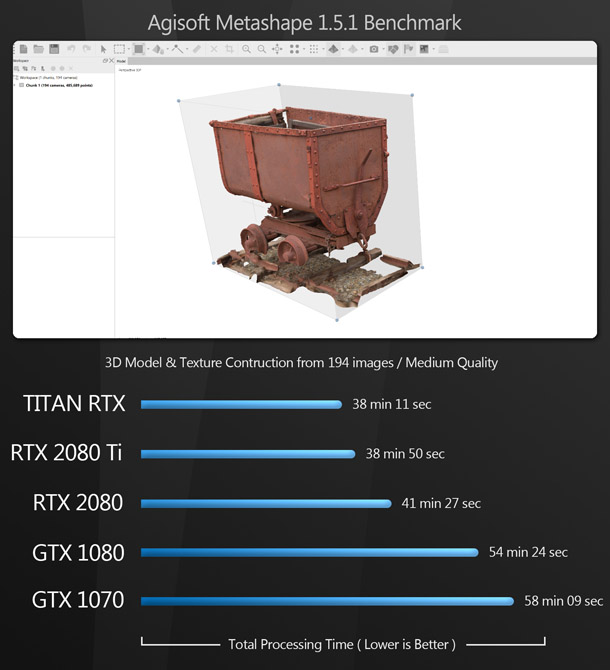

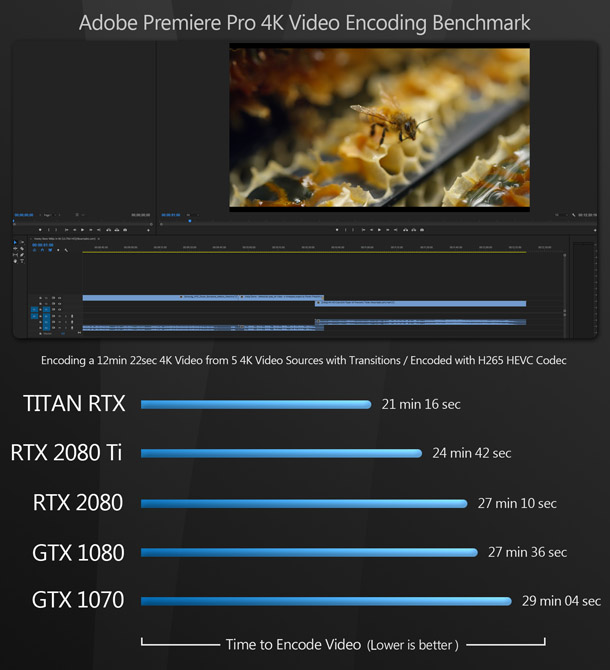

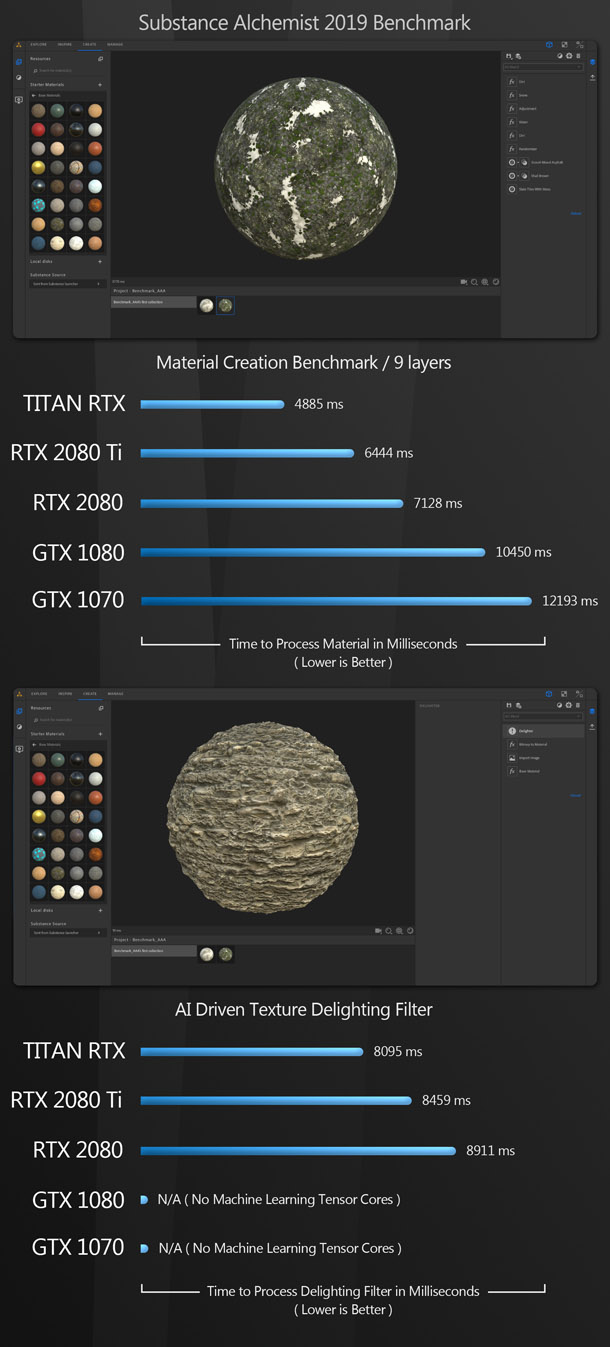

The next set of benchmarks is designed to test the use of the GPU for more specialist tasks. Photogrammetry application Metashape uses the GPU for image processing, Substance Alchemist uses the GPU for texture baking and to run certain filters, and Premiere Pro uses the GPU for video encoding.

As with the previous benchmarks, the Titan RTX takes first place in all of the tests, followed by the RTX 2080 Ti, RTX 2080, GTX 1080 and GTX 1070. The variation in performance is smallest with Premiere Pro, and largest with Substance Alchemist.

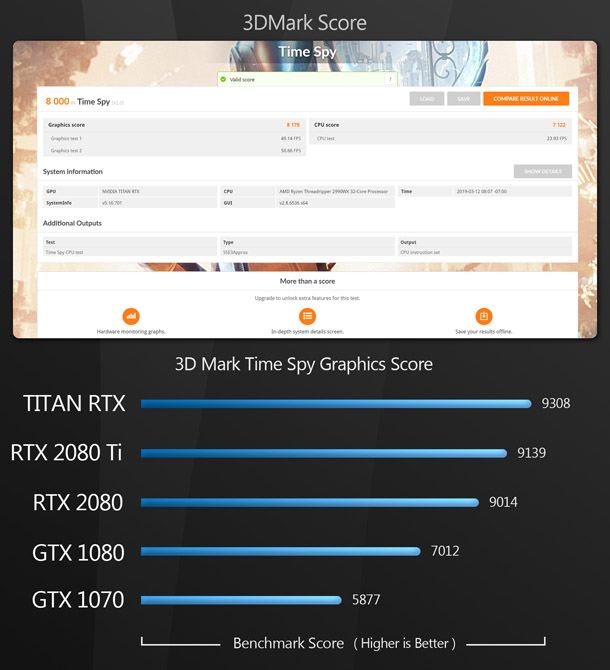

Synthetic benchmarks

Finally, we have the synthetic benchmark 3DMark. Synthetic benchmarks don’t accurately predict how a GPU will perform in production, but they do provide a way to compare the cards on test with older models, since scores for a wide range of GPUs are available online. Unlike in previous reviews, I haven’t included Cinebench here, since the last version to feature a GPU benchmark is now over six years old.

Further analysis

GPU rendering and GPU memory

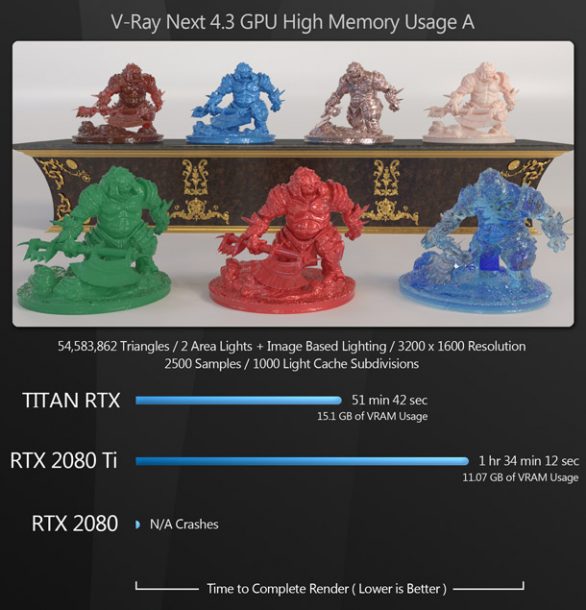

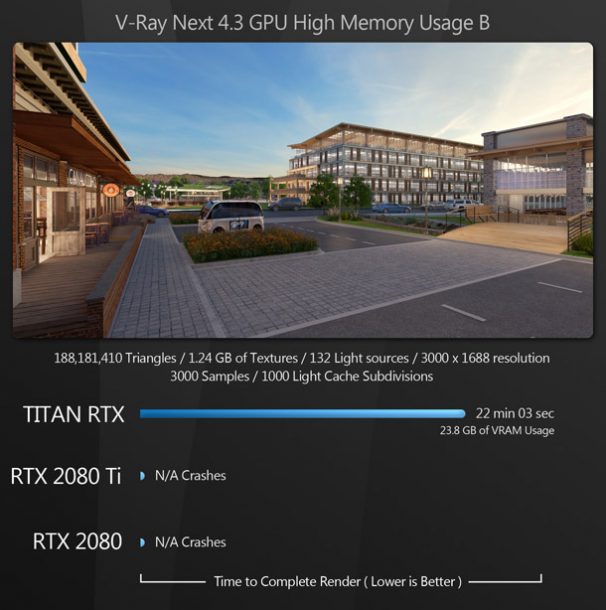

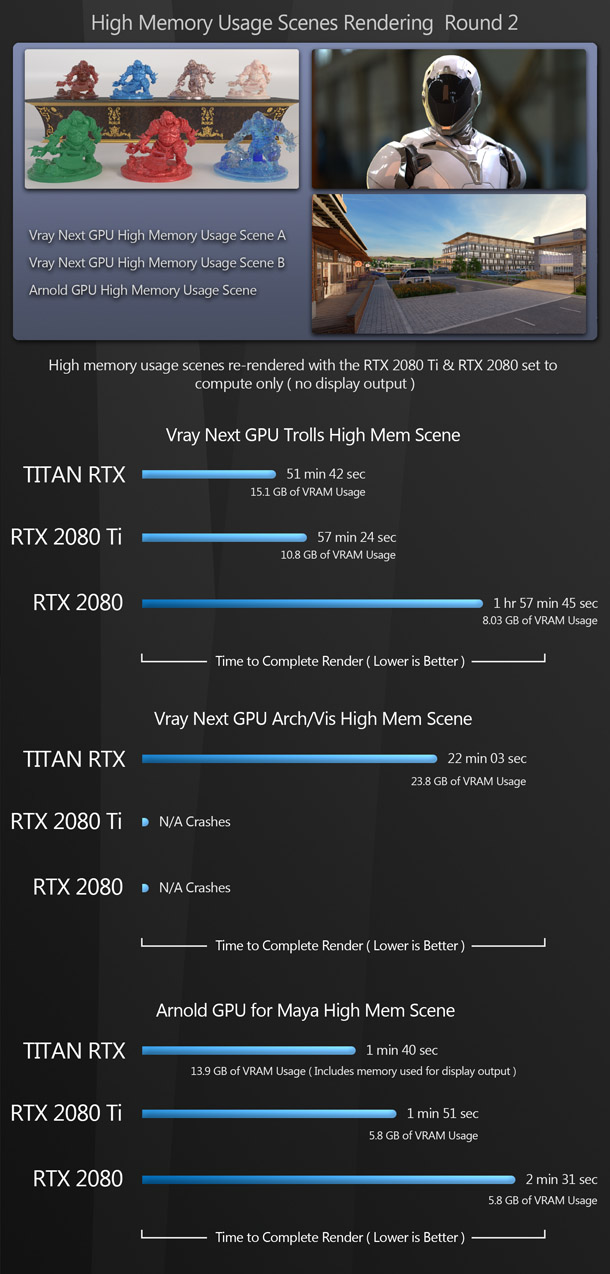

Looking at the GPU rendering benchmarks, you might wonder why anyone would spend $2,500 on a Titan RTX, when the GeForce RTX 2080 Ti performs almost as well for less than half the price. The answer is the Titan’s memory capacity. To illustrate why the Titan, or other GPUs with a lot of graphics memory, can be worth the cost for DCC professionals, I set up a few particularly complex scenes. To measure GPU memory usage during benchmarking, I used the hardware monitor in EVGA’s Precision X1 utility.

If a GPU doesn’t have enough graphics memory for a computational task, it has to send data out to system RAM. In GPU renderers, this is known as out-of-core rendering. As you can see from the results above, this is much slower than accessing on-board memory, and much less reliable.

In the V-Ray trolls scene, the Titan RTX – in which the entire scene fits into on-board memory – performs much better than the GeForce RTX 2080 Ti, while the GeForce RTX 2080 won’t render the scene at all. Neither the 2080 nor the 2080 Ti will render the other two scenes, crashing every time in the V-Ray architectural scene, and quitting with out-of-memory errors in the Arnold for Maya scene.

Part of the problem for the GeForce RTX cards is that they have to use a significant portion of their graphics memory to display content on screen, reducing the memory available for rendering. There is a solution to this: install two GPUs in your workstation. Most GPU rendering applications let you specify manually which GPU will be used for compute tasks, so if you deselect the GPU that drives the display, the application will render on the other GPU alone, which will have all of its memory available for rendering.
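
The reasoning behind the dual-GPU setup comes down to simple subtraction: the memory available for rendering is the card’s on-board memory, minus whatever the display takes if that card also drives the screen. The Python sketch below applies that rule to decide whether a scene can stay in on-board memory. The scene footprint and the display overhead are hypothetical figures, not measurements from this review, and whether a renderer can go out of core at all, or simply fails, depends on the renderer, as the results above show.

```python
# Rough check of whether a GPU render can stay in on-board memory.
# Assumptions (hypothetical): the scene's GPU memory footprint is known, for
# example from a hardware monitor, and driving the display costs a fixed amount.


def available_for_render_gb(vram_gb: float, drives_display: bool,
                            display_overhead_gb: float) -> float:
    """Memory left for rendering once any display overhead is subtracted."""
    return vram_gb - (display_overhead_gb if drives_display else 0.0)


def render_mode(scene_gb: float, vram_gb: float, drives_display: bool,
                display_overhead_gb: float) -> str:
    """Return 'in-core' if the scene fits in on-board memory, else 'out-of-core'."""
    if scene_gb <= available_for_render_gb(vram_gb, drives_display, display_overhead_gb):
        return "in-core"
    return "out-of-core"


SCENE_GB = 10.0            # hypothetical scene footprint
DISPLAY_OVERHEAD_GB = 1.5  # hypothetical cost of driving a 4K display

print(render_mode(SCENE_GB, 11.0, True, DISPLAY_OVERHEAD_GB))   # 2080 Ti also driving display
print(render_mode(SCENE_GB, 11.0, False, DISPLAY_OVERHEAD_GB))  # 2080 Ti as compute-only card
print(render_mode(SCENE_GB, 24.0, True, DISPLAY_OVERHEAD_GB))   # Titan RTX also driving display
```

With these example figures, the 2080 Ti has to go out of core while it drives the display, but keeps the scene in on-board memory once it is used purely for compute, while the Titan RTX has headroom to spare either way.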

To test this, I ran the three high-memory usage benchmarks again in dual-GPU setups, using the Titan RTX for display purposes only, and the RTX 2080 or RTX 2080 Ti for rendering.

This time, things are a little different. Freed from their display duties, both the 2080 Ti and the 2080 can render the V-Ray trolls scene. The 2080 has to go out of core, which slows it down considerably, but the 2080 Ti comes close to the performance of the Titan. The results are similar in the Arnold for Maya scene, although the V-Ray architectural scene still won’t render on either GeForce card.

So if the price tag of the Titan RTX makes you uneasy, one alternative would be to buy two RTX 2080 Ti cards. However, it isn’t clear whether GeForce RTX GPUs can make use of Nvidia’s NVLink technology to pool GPU memory in the same way as the Titan RTX and Quadro RTX cards (you can find a detailed analysis in this article). If not, even with some of its 24GB of graphics memory being used for display duties, a single Titan RTX would still be more versatile than a dual-2080 Ti setup, in which only the 11GB of graphics memory on the dedicated compute card would be available for GPU rendering.

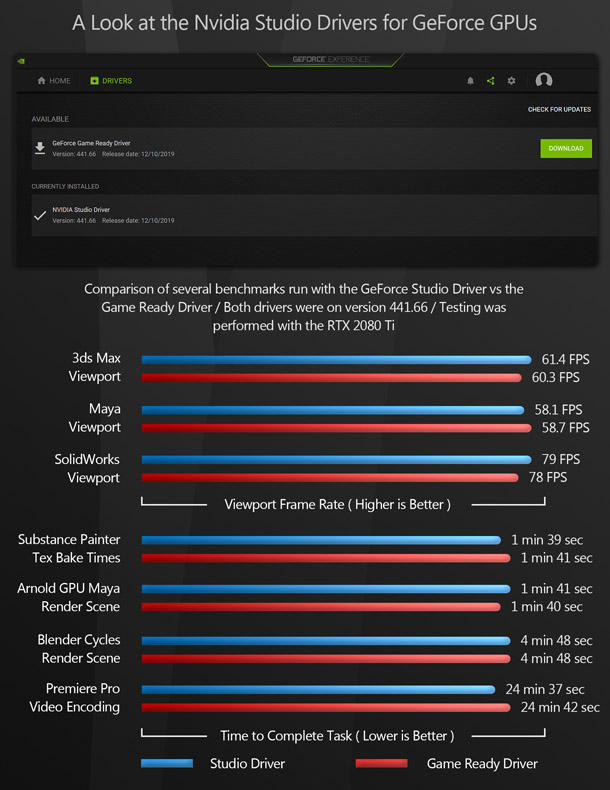

Studio versus Game Ready drivers

Another thing that I have been asked recently is whether Nvidia’s Studio drivers provide any advantage over its Game Ready drivers for DCC work. To test this, I ran every benchmark in this review on the RTX 2080 Ti with version 441.66 of both the Studio and the Game Ready driver, using Wagnardsoft’s Display Driver Uninstaller utility to make sure I had a clean install when switching from one to the other.

As you can see from the selection of results above, the choice of driver had no practical impact on performance. I did some more research, and it seems that the Studio drivers are really intended to improve stability when using DCC applications, not performance. However, I’ve been using GeForce GPUs with DCC software for a long time now, and have never had any real problems with stability, so if you are currently using the Game Ready drivers and everything is running fine for you, my advice would be to stick with them.
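
One way to make “no practical impact” precise is to compare each pair of results as a percentage difference and check that none exceeds a tolerance chosen to match normal run-to-run variation. The Python sketch below does this. The 2% tolerance and the sample scores are hypothetical, for illustration only, and are not the figures from this review.

```python
def relative_difference_percent(studio: float, game_ready: float) -> float:
    """Difference of the Game Ready result relative to the Studio result, in percent."""
    return (game_ready - studio) / studio * 100.0


def practically_identical(studio_results: list[float], game_ready_results: list[float],
                          tolerance_percent: float = 2.0) -> bool:
    """True if every paired benchmark result differs by no more than the tolerance."""
    if len(studio_results) != len(game_ready_results):
        raise ValueError("result lists must be paired one-to-one")
    return all(
        abs(relative_difference_percent(s, g)) <= tolerance_percent
        for s, g in zip(studio_results, game_ready_results)
    )


# Hypothetical scores from the same three benchmarks under each driver.
studio = [60.0, 45.0, 120.0]
game_ready = [60.5, 44.8, 119.0]
print(practically_identical(studio, game_ready))                         # True
print(practically_identical(studio, game_ready, tolerance_percent=0.5))  # False
```

For these sample scores, the largest difference is under 1%, so the results count as identical at a 2% tolerance but not at a stricter 0.5% one.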

Verdict

So what lessons can you take away from this review? First, that the GeForce RTX 2080 Ti is a very powerful GPU for DCC work. Unless the tasks you are throwing at it require more than 11GB of graphics memory, it comes very close to the performance of the Titan RTX, for less than half the cost.

Second, the Turing GPUs in general offer significant performance boosts for GPU renderers that can make use of their dedicated ray tracing hardware. And finally, Nvidia’s Studio drivers have little impact on the performance of DCC applications. They don’t hurt performance either, and they may improve stability, so there’s no reason not to use them: just don’t expect to see a major increase in speed.

Thanks for taking the time to read this review. I hope you found it helpful and informative. If you have any questions or suggestions, let me know in the comments.

About the reviewer

Jason Lewis is Senior Environment Artist at Obsidian Entertainment and CG Channel’s regular reviewer. You can see more of his work in his ArtStation gallery. Contact him at jason [at] cgchannel [dot] com

Acknowledgements

I would like to give special thanks to the following people for their assistance in bringing you this review:

Gail Laguna of Nvidia

Sean Kilbride of Nvidia

Shannon McPhee of Nvidia

Redshift Rendering Technologies

Otoy

Bruno Oliveira on Blend Swap

Reynante Martinez

Stephen G Wells

Adam Hernandez

Thad Clevenger