Ziva Dynamics launches ZRT Face Trainer in beta

Character simulation specialist Ziva Dynamics has launched ZRT Face Trainer, an intriguing cloud-based facial rigging service for games and real-time work, trained using machine learning.

The system, which is now available for selected users to test for free, “transforms any face mesh into a high-performance real-time puppet in one hour”.

A move from full-body character simulation to facial rigging and animation

Although best known for full-body character work, in the shape of Ziva VFX, its Maya soft tissue simulation plugin, Ziva Dynamics has been hinting at a move into facial rigging and animation for some time now.

Late last year, the firm posted a teaser video of a new machine-learning-based facial performance system, followed by a demo of a real-time markerless facial solve.

Some of that technology has now been made publicly available – at least to anyone selected to take part – with the launch of the closed beta of ZRT Face Trainer.

The new cloud-based automated facial rigging platform was trained to mimic the range of expressions of human actors using a 15TB library of 4D scan data.

Convert any human(oid) head mesh into a real-time puppet capable of tens of thousands of facial shapes

According to the product website, ZRT Face Trainer can convert an uploaded character head mesh, within an hour, into a “real-time puppet” capable of expressing over 72,000 facial shapes.

The face puppets are “only 30MB at runtime”, and run at 3ms/frame on a single CPU thread, making them suitable for use in games and real-time applications.

(The website doesn’t specify in which game engine those figures were obtained, but demos on the product webpage use the early access release of Unreal Engine 5.)
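To put the 3ms/frame figure in context, the short Python sketch below works out what share of a frame's time budget that would represent. The 30fps and 60fps targets are illustrative assumptions, not figures from Ziva Dynamics, and the function name is hypothetical.

```python
def frame_budget_share(cost_ms: float, target_fps: float) -> float:
    """Return the fraction of one frame's time budget used by a task."""
    budget_ms = 1000.0 / target_fps  # time available per frame, in ms
    return cost_ms / budget_ms


PUPPET_COST_MS = 3.0  # per-frame cost quoted on the product website

# Assumed frame-rate targets, chosen for illustration only
for fps in (30, 60):
    share = frame_budget_share(PUPPET_COST_MS, fps)
    print(f"{fps}fps: {1000.0 / fps:.1f}ms budget, face puppet uses {share:.0%}")
```

On those assumptions, one face puppet would take up a little under a fifth of a 60fps frame on the thread that evaluates it, so the number of characters on screen at once would matter.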

Before a head mesh can be processed, it needs to be cleaned and retopologised manually to match a standard point order, and adequately subdivided to generate convincing deformations.

You can read an overview of the process, for which Ziva Dynamics recommends Wrap, R3DS’s retopology software, and Maya, in this downloadable PDF.

The results look pretty impressive in the demo videos on the product website, which show standard range-of-motion animations for 12 different 3D head models.

Most are realistically proportioned human heads, although there are also a few humanoids and aliens.

Still only a taster for the full release of the service

You can get access to ZRT Face Trainer by applying for the closed beta via the link at the foot of the story: if selected, you can then upload your own test models for processing.

At the minute, that seems only to involve being sent a rendered animation of the character performing a range of facial expressions, rather than anything more interactive.

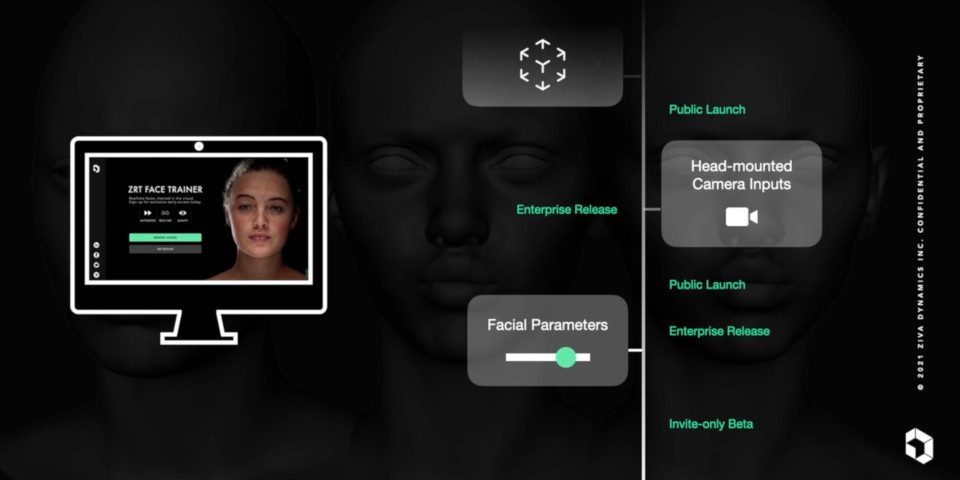

According to Ziva Dynamics’ roadmap, support for customisable facial parameters will be added before the full public launch, followed by the option to drive characters via input from a head-mounted camera.

Pricing and system requirements

ZRT Face Trainer is a cloud-based service, and is currently available in invite-only closed beta. Ziva Dynamics hasn’t announced pricing or a public release date yet.

Read more about ZRT Face Trainer on Ziva Dynamics’ website

(Includes link to apply for the closed beta)