Group test: Nvidia professional GPUs 2015

Discover which of Nvidia’s current professional workstation graphics cards gives you the best bang for your buck as Jason Lewis puts the Quadro M6000, K5200 and K2200 through a battery of real-world CG tests.

Our last group test of professional graphics cards took place back in 2013 and featured all of the latest models from AMD and Nvidia. But technology has moved on, so we’re back to take a look at new cards that have been released since then, to see how they compare to each other, and to their previous-generation counterparts.

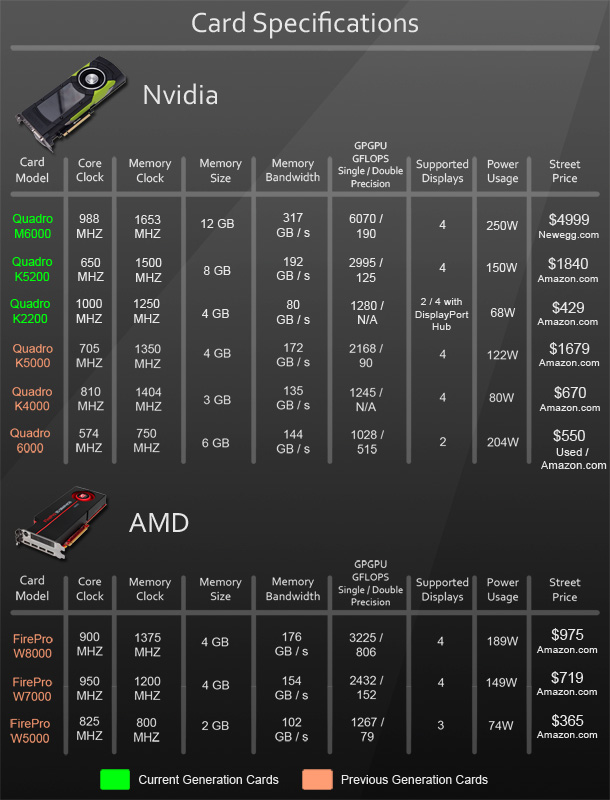

Unfortunately AMD declined to participate in this year’s review, meaning that we have no new FirePro cards in this year’s group test. Instead, we will be looking at Nvidia’s new workstation cards, and comparing them to previous-generation models from Nvidia and AMD. The top end of Nvidia’s Quadro line-up consists of four cards: the ultra-high-end M6000, the high-end K5200 and the mid-range K4200 and K2200.

M6000

The M6000 is Nvidia’s newest flagship product, replacing last year’s K6000, and is designed to take on the most extreme media, design and visualisation projects. It is built using a fully enabled version of Nvidia’s new GM200 chip, and sports 12GB of GDDR5 RAM.

The M6000 also has powerful GPU compute abilities, with a peak single-precision performance of 7 teraflops. It is the first Quadro card to offer Nvidia’s GPU Boost feature, which lets the GPU raise its clock speed depending on workload and thermal state, giving the M6000 clock rates of 988MHz to 1.14GHz. The memory is clocked at 6.6GHz, giving a throughput of 317GB/s. Like previous high-end Quadros, the M6000 uses ECC error-correcting memory, which should offer a higher level of reliability, especially with high-load GPU compute tasks.
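As a rough cross-check on the 7-teraflop figure, theoretical single-precision peak is usually estimated as cores × 2 operations per clock (one fused multiply-add) × clock speed. The sketch below assumes 3,072 CUDA cores for the M6000, a figure taken from Nvidia's published specifications rather than from this article, and the function name is purely illustrative.

```python
def peak_sp_tflops(cuda_cores: int, clock_ghz: float) -> float:
    """Theoretical single-precision peak in TFLOPS.

    Assumes each core retires one fused multiply-add (2 FLOPs) per clock.
    """
    flops_per_core_per_clock = 2
    return cuda_cores * flops_per_core_per_clock * clock_ghz / 1000.0


# Assumption: 3,072 CUDA cores (Nvidia's published spec, not stated in the article).
M6000_CORES = 3072

base_tflops = peak_sp_tflops(M6000_CORES, 0.988)   # base clock quoted above
boost_tflops = peak_sp_tflops(M6000_CORES, 1.14)   # top boost clock quoted above

print(f"base:  {base_tflops:.2f} TFLOPS")
print(f"boost: {boost_tflops:.2f} TFLOPS")
```

Under these assumptions, the quoted 7 teraflops corresponds to the card running at its top boost clock, not its base clock.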

Connectivity for the M6000 includes a single DVI-I port, and four full-size DisplayPort connectors that allow the M6000 to drive either two 5K or four 4K or lower-resolution displays at 30-bit color.

K5200

The K5200 is the high-end part in the current Quadro line-up. Built on a pared-down version of Nvidia’s Kepler GK110 chip running at 650MHz, the K5200 sports 8GB of GDDR5 RAM running at 6GHz on a 256-bit memory bus. This should provide a significant improvement over the K5000 it replaces, with its GK104 chip and 4GB RAM. Like the M6000, the K5200 uses ECC error-correcting memory.

Connectivity includes a DVI-I port, a DVI-D port, and two full-size DisplayPort connections, allowing the K5200 to drive either two 4K displays or four lower-res displays at 30-bit color.

K4200

The K4200 is a mid-range card. It uses a cut-down version of the GK104 chip, similar to the one found in the previous-gen Quadro K5000, running at 780MHz, and provides 4GB of GDDR5 RAM running at 5.4GHz on a 256-bit memory bus. Unlike that of the M6000 and K5200, the K4200’s RAM is not ECC-enabled.

In theory, the K4200 should boast some of the most significant gains over its predecessor, the K4000, as its compute/shader performance is almost 70% higher, and its memory bandwidth almost 30% higher.

Like the K5200, the K4200 can drive up to two 4K or four lower-res displays at 30-bit color. However, since the K4200 is a single-slot card, there are only three outputs on the back of the card: a single DVI-I port, and two full-size DisplayPort connectors. To enable the fourth display, you will need a DisplayPort hub. Unfortunately, I was not provided with a K4200 card to test for this review, but Nvidia claims that its performance falls right between the K5200 and the next card in the lineup, the K2200.

K2200

The K2200 is the other mid-range part in Nvidia’s Quadro lineup. Unlike the K5200 and K4200 cards, which are based on Kepler GPUs, the K2200, like the M6000, uses Nvidia’s newest Maxwell GPU architecture.

Sporting a fully enabled GM107 chip running at 1GHz and carrying 4GB of GDDR5 RAM running at 5GHz on a 128-bit bus, the K2200 looks to be an especially high performer in this price sector. It can deliver up to 1.3 TFLOPS of single-precision compute/shader performance – 78% higher than the K2000 card it replaces – and sports double the RAM: 4GB versus 2GB.

Like the K4200, the K2200 is a single-slot card and possesses a single DVI-I port, and two full-size DisplayPort connectors. Also like the K4200, the K2200 can drive either two 4K displays, or four lower-res displays with the addition of a DisplayPort hub.
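The memory clocks and bus widths quoted for each card translate into peak memory bandwidth as effective clock × bus width ÷ 8. The following is a minimal sketch of that arithmetic using the figures given above; the 384-bit bus for the M6000 is an assumption based on Nvidia's published specifications, since the article only quotes its clock and throughput.

```python
def memory_bandwidth_gbs(effective_clock_ghz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: transfers per second times bytes per transfer."""
    return effective_clock_ghz * bus_width_bits / 8


# (effective memory clock in GHz, bus width in bits)
cards = {
    "K5200": (6.0, 256),
    "K4200": (5.4, 256),
    "K2200": (5.0, 128),
    # Assumption: 384-bit bus (not stated in the article).
    "M6000": (6.6, 384),
}

bandwidth = {
    name: memory_bandwidth_gbs(clock, bus) for name, (clock, bus) in cards.items()
}

for name, gbs in bandwidth.items():
    print(f"{name}: {gbs:.1f} GB/s")
```

With the assumed 384-bit bus, the M6000 works out at roughly 317GB/s, matching the throughput quoted in its description.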

Note: street prices were correct as of May 2015, but may vary over time.

Why choose a workstation card?

Before we get into the benchmarks, let’s discuss some of the issues that governed our testing process, including multi-monitor set-ups, the growth of GPU computing – and our choice of cards itself. We’ve covered these in previous group tests, so if you’re already familiar with them, you may want to skip ahead to the test results. As well as its professional cards, Nvidia offers a range of less expensive GPUs, its GeForce series, aimed at gamers. So what’s the difference between such consumer cards and their workstation equivalents?

Professional vs consumer cards

The questions on most artists’ minds when considering what GPU to buy are, “Do I really need a professional card? And what’s the difference between professional and consumer cards anyway?” The answer to the first question depends on the kind of work you will be doing: something I’ll elaborate on later. The answer to the second is: “Not a lot – from a hardware standpoint, at least.”

Unlike the pioneering days of desktop graphics work – the late 1990s and early 2000s, when companies like 3Dlabs, Intergraph and ELSA were still building specialised hardware specifically aimed at professional users – today’s professional and consumer cards use essentially the same hardware, with a few key differences.

The first of these is that pro cards typically carry much more RAM than their consumer equivalents: important for displaying large datasets in 3D applications, and even more so for GPU computing. Second, according to Nvidia reps, the GPU chips on pro cards are usually hand-picked from the highest-quality parts of a production run, guaranteeing reliability under continuous heavy loads. Again, this is particularly significant for GPU computing, where the heavy continuous loads under which the GPU is run can tax a chip far more than gaming.

The biggest difference between professional and consumer cards is their driver set and software support. While consumer hardware is tuned more towards fill rate and shader calculations, pro cards are tuned for 3D operations such as geometry transformations and vertex matrices, as well as better performance under GPU computing APIs such as CUDA and OpenCL.

Pro cards are also optimised and certified for use with graphics applications. The driver set for AMD’s FirePro and Nvidia’s Quadro cards includes extensive optimisations for popular DCC and CAD packages such as 3ds Max, Maya, AutoCAD and SolidWorks. These not only increase performance, but also offer excellent stability and predictability when compared to their desktop counterparts, particularly for CAD.

When I have polled other users, the consensus is that while such applications work on consumer cards, performance can be sub-par, and viewport glitches and anomalies are quite common: issues that are much less frequent with pro cards, and when identified, are usually addressed quickly, since manufacturers offer much more extensive customer support for their professional products than the equivalent consumer cards.

Essentially, professional cards are targeted at users who rely not only on speed, but on stability and support. So long as you stay within their memory limitations, you may get adequate performance out of a consumer card – but during crunch periods, when you need reliability as well as performance, things can get a little iffy.

Multi-monitor setups

Another feature of professional cards is their ability to drive multiple displays at resolutions of 4K or 5K. Two-monitor setups have been commonplace for some time now in professional graphics work, but with the ever-increasing desire to work more efficiently, we are starting to see more three- and four-monitor setups.

While that may sound like overkill, I can assure you that the extra desktop real estate can do a lot for productivity. Say, for example, that you have a pair of 30″ displays, a 22″ display and a Wacom Cintiq: you could have 3ds Max or Maya open on one of the 30-inchers, Photoshop on the other, ZBrush or Mudbox running on the Cintiq, and your reference art or a web browser open on the 22-inch display! No more [Alt]-tabbing, and no more stacking windows so that only one or two are visible at the same time.

All of Nvidia’s current Quadro cards support such a workflow, being capable of driving up to four displays simultaneously, either connected directly or via DisplayPort hubs in the case of lower-end cards.

GPGPU computing

Another consideration in our tests has been the rise of GPGPU computing: the use of the graphics processor to augment the system’s CPUs when performing general computing tasks. GPU computing has been maturing at a significant rate over the last few years, with GPU-accelerated renderers gaining a foothold in production environments, increasingly for final-frame rendering as well as pre-viz and look development. Although the consensus seems to be that CPUs are still superior for certain rendering functions, existing GPU-accelerated renderers like Iray, OctaneRender and V-Ray RT have evolved significantly, while there are several promising newcomers in the market, including Redshift and cebas’s moskitoRender.

There are two major APIs for GPU computing: CUDA and OpenCL. CUDA is Nvidia’s proprietary technology, while OpenCL is an open standard supported by both Nvidia and AMD. Nvidia embraced GPU computing as long ago as 2006, when it introduced the G80 – its first unified shader architecture – on the 8800 GTX, and as a result, CUDA is still a more mature technology. Many, including myself, expected that OpenCL would have gained more of a foothold in the market by now, but progress – at least in the media and entertainment sector – has been slower than predicted. Although the technology continues to progress, software developers tell me that there are still certain things they can only do with CUDA.

GPU computing is where professional cards set themselves apart from their consumer counterparts. In order for the GPU to perform a compute task efficiently, the entire data set that task will be addressing must be loaded into its on-board memory. Otherwise, the software will either have to transfer data between system and graphics RAM, resulting in vastly diminished performance – or, in some cases, will ignore the GPU entirely. This is where the high-end workstation cards, with upwards of 4GB of on-board memory, come into their own.
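The memory constraint described above can be sketched as a simple check. This is an illustrative model only, not how any particular renderer decides: the `Gpu` class, the `choose_device` function and the all-or-nothing CPU fallback are assumptions. The example figures (a scene of roughly 2.7GB, a 2GB FirePro W5000 and an 8GB K5200) come from the cards and the V-Ray RT test discussed in this article.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    vram_gb: float


def choose_device(scene_gb: float, gpu: Gpu, reserved_gb: float = 0.0) -> str:
    """Return 'gpu' if the whole data set fits in on-board memory, else 'cpu'.

    reserved_gb is a hypothetical allowance for memory already in use
    (for example by the desktop and the application's viewport).
    """
    usable_gb = gpu.vram_gb - reserved_gb
    return "gpu" if scene_gb <= usable_gb else "cpu"


w5000 = Gpu("FirePro W5000", vram_gb=2.0)
k5200 = Gpu("Quadro K5200", vram_gb=8.0)

scene_gb = 2.7  # approximate footprint of the Light Cycles scene
for gpu in (w5000, k5200):
    print(f"{gpu.name}: render on {choose_device(scene_gb, gpu)}")
```

In practice, some renderers stream data between system and graphics RAM rather than falling back entirely, at the performance cost noted above.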

Test procedure

As our test platform, we used a powerful Z820 workstation, generously provided by HP. The test machine sports two 3.1GHz eight-core Xeon E5-2687W CPUs, 32GB of DDR3 RAM and a 15,000 RPM SAS drive, ensuring that the only bottlenecks in performance should be the GPUs themselves. Read our review of the Z820 here.

All of the tests were run under 64-bit Windows 7 Professional with all of the current service packs and updates installed, on a 30″ display at its native resolution of 2,560 x 1,600px.

For benchmarking, we used the following standard suite of DCC and rendering applications:

Viewport performance

3ds Max 2015, Maya 2015, Softimage 2015, Mudbox 2015, Blender 2.73, Modo 701, LightWave 11.5, Cinema 4D R14, Mari 2.0, Unreal Engine 4.5.1, CryEngine 3.6.12

GPGPU performance

Iray 2.1, OctaneRender 2.17, V-Ray 2.5 (V-Ray RT), Blender 2.73 (Cycles renderer), Arion 2.7, Redshift 1.2.69, moskitoRender 1.0, LuxMark 2.0

Synthetic benchmarks

Cinebench 15, 3DMark, FurryBall 4, ratGPU 0.8.0

Unless otherwise stated, the viewport performance benchmarks show the frame rate when manipulating the test scene in the viewport, averaged over a number of test runs.
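For readers who want to reproduce this kind of figure, the averaging can be sketched as below. The function name and the sample numbers are hypothetical, and the sketch assumes each run is recorded as a frame count and an elapsed time; it is not the exact procedure used for these tests.

```python
from statistics import mean


def average_fps(runs: list[tuple[int, float]]) -> float:
    """Mean frame rate across runs, where each run is (frames_drawn, seconds_elapsed)."""
    return mean(frames / seconds for frames, seconds in runs)


# Hypothetical measurements: three ten-second viewport manipulation runs.
sample_runs = [(600, 10.0), (580, 10.0), (620, 10.0)]
result = average_fps(sample_runs)
print(f"{result:.1f} fps")
```

Averaging over several runs smooths out one-off stalls, such as a background process briefly taking CPU time during a single run.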

I try not to use synthetic benchmarks such as SPECviewperf and SYSmark, since the results they give have no meaningful relationship to real-world production. Instead, I try to confine my tests to actual DCC applications, with assets similar to those you would find in a production environment. However, due to reader requests, I do use two standard synthetic benchmarks: Cinebench and 3DMark. In this group test, we’ve also recategorised the FurryBall and ratGPU tests as synthetic benchmarks: while both of them return actual render times rather than arbitrary figures, as with conventional synthetics, it isn’t possible to inspect their built-in test scenes to determine polygon counts and texture resolutions, as in our other tests.

Where possible, as well as the Quadro M6000, K5200 and K2200 on test, we also benchmarked Nvidia’s previous-generation Quadro K4000, K5000 and 6000; and AMD’s FirePro W5000, W7000 and W8000.

Benchmark results

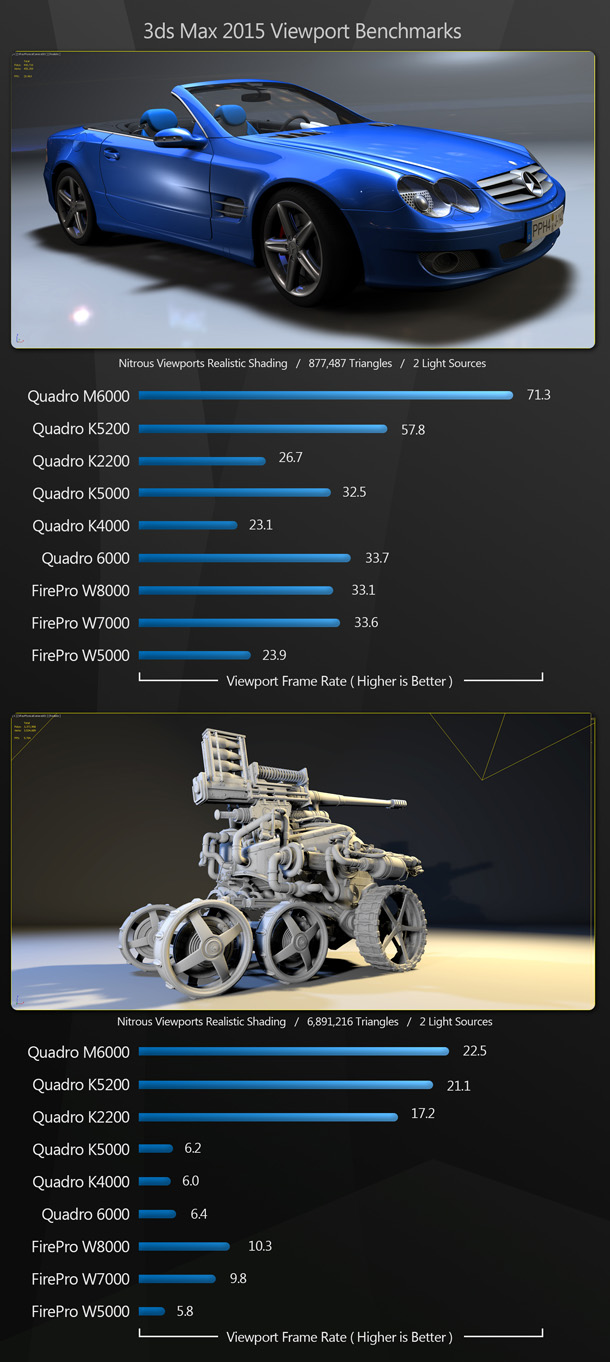

3ds Max 2015

Our 3ds Max benchmarks comprise three high-poly scenes, all running in the Nitrous viewport mode with realistic shading enabled. Our SL500 scene has reflections enabled, and multiple light sources. The steampunk tank benchmark draws just under 7 million triangles and 1,050 objects.

All the new cards command significant leads over their predecessors. Even the mid-range K2200 beats out all the previous-generation Nvidia and AMD cards in the tank benchmark, and two of the previous-generation cards in the SL500 benchmark.

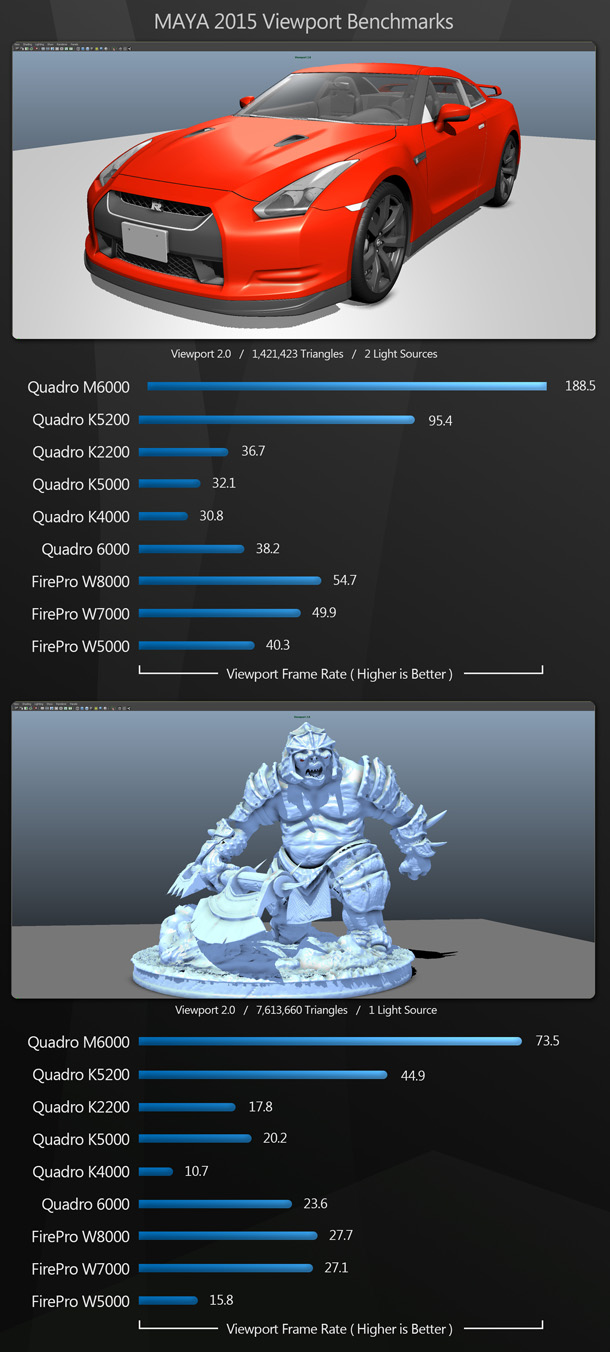

Maya 2015

Our Maya benchmarks run in Viewport 2.0 with AO and shadows enabled. Both are polygon-intensive.

As with the 3ds Max benchmarks, the new cards command significant leads over their predecessors, with the mid-range K2200 beating two of the old higher-tier cards in both tests.

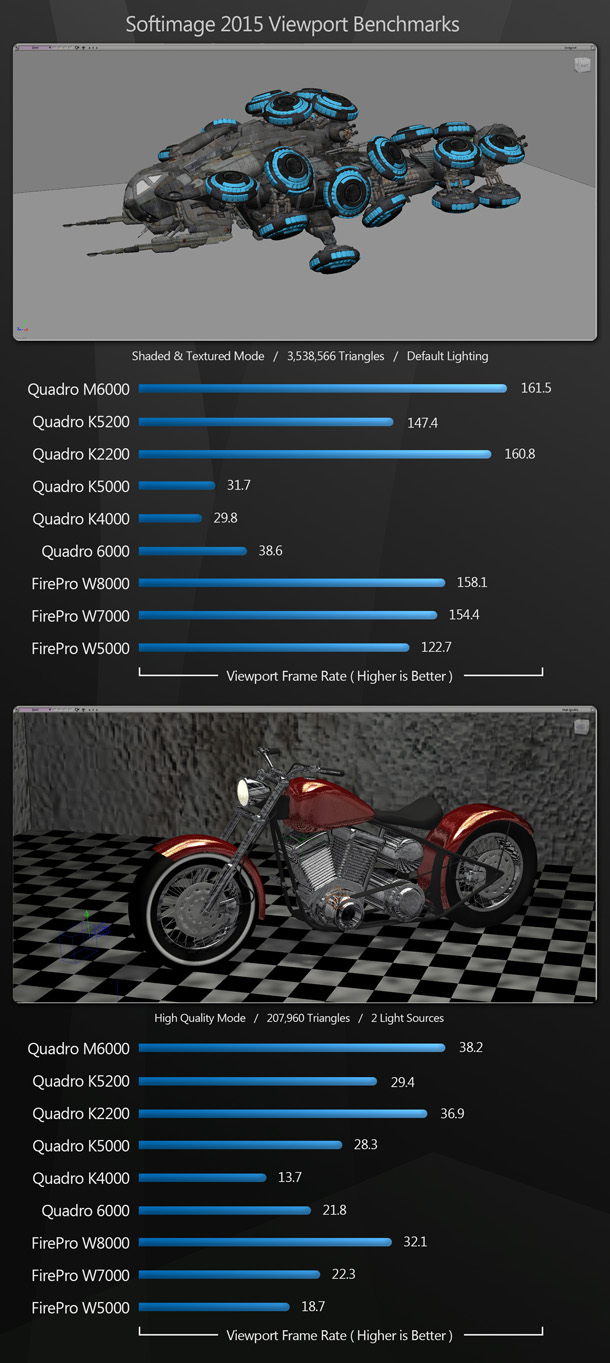

Softimage 2015

Our Softimage benchmarks comprise a 3.6-million-poly scene with textures enabled, and a lower-poly scene running in High Quality display mode with reflections, ambient occlusion and shadows enabled.

The Softimage scores differ from those for 3ds Max and Maya. While all three new cards beat all three previous-gen Nvidia cards by a large margin, they only just outperform – or slightly underperform – the previous-gen AMD cards; and more interestingly, the K2200 beats the more expensive K5000. My surmise is that neither scene is heavy enough to really tax the cards’ memory or architecture, so the deciding factor is core clock speed.

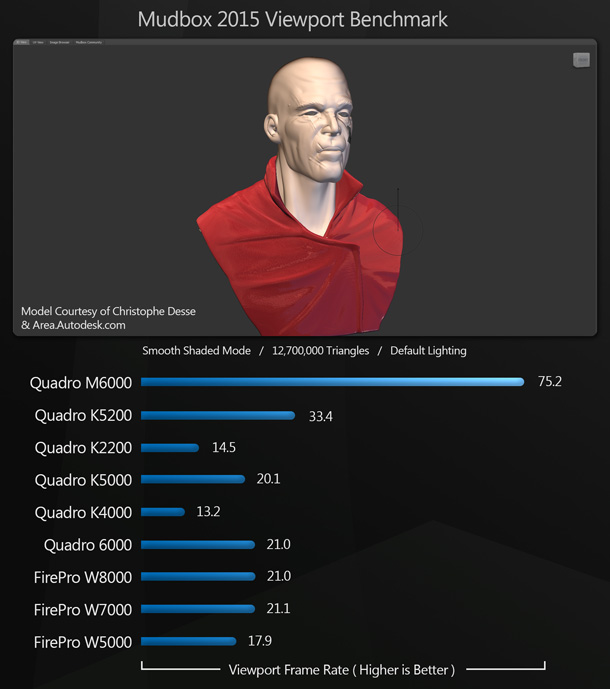

Mudbox 2015

Our Mudbox benchmark is a model subdivided to 12.7 million polygons running in the standard viewport.

Again, the two new high-end Quadros beat all of the previous-generation cards. The new K2200 doesn’t fare as well, but it does beat the K4000: a higher-tier card from the previous generation.

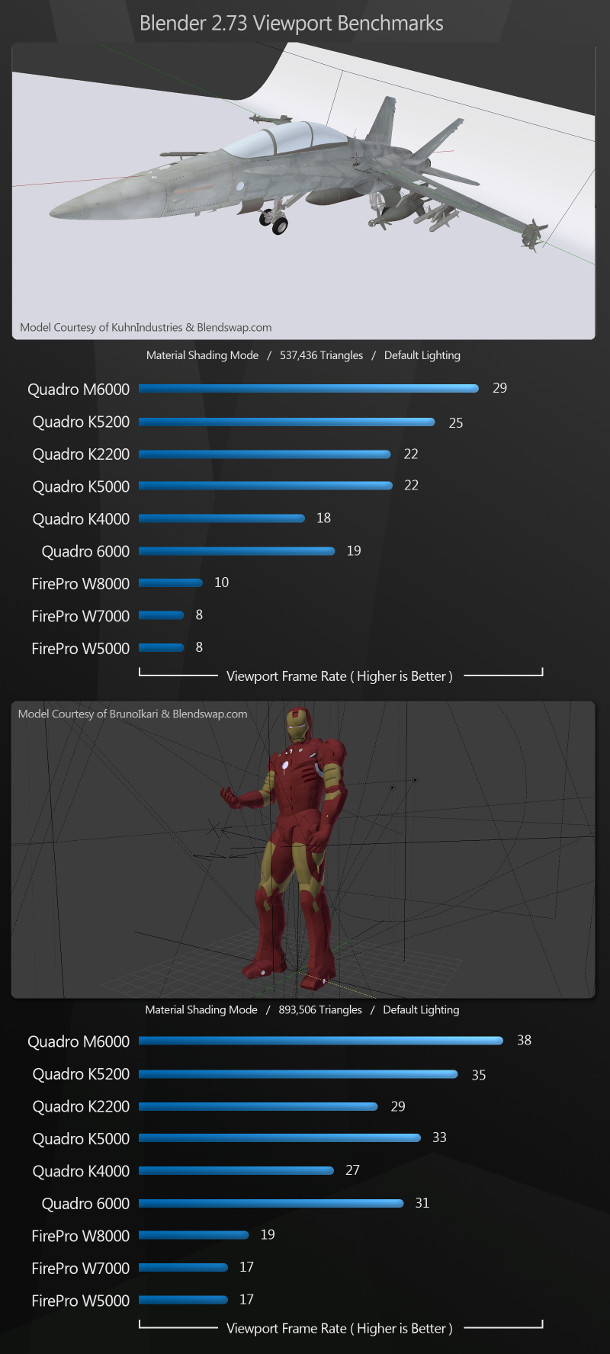

Blender 2.73

Our Blender benchmarks consist of two mid-density models, and run with the viewport shading set to Material.

Once again, the new M6000 and K5200 outperform all of the previous-generation cards, while the K2200 outperforms all but the higher-tier K5000 and 6000.

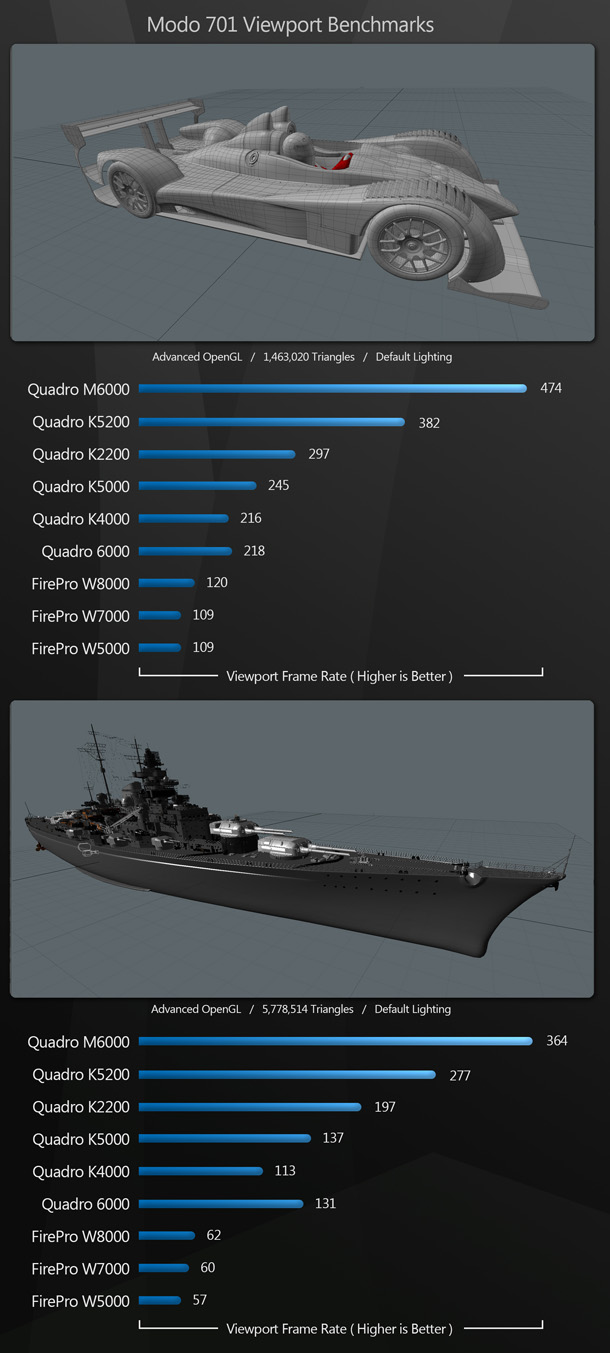

Modo 701

Our Modo benchmarks consist of a mid-density car model, and a higher-poly battleship. Both models are rendered with the Advanced OpenGL mode.

With Modo, we see a similar pattern to 3ds Max and Maya, but this time, all three of the new Nvidia cards take significant leads over all of the previous-generation cards.

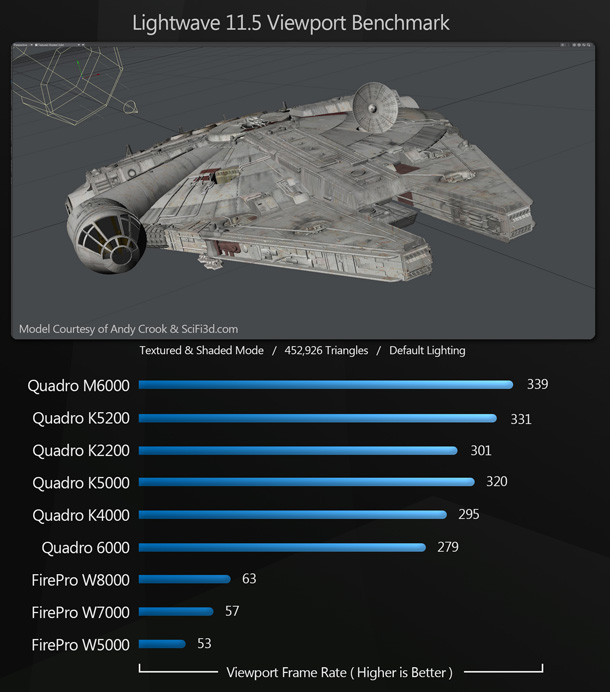

LightWave 11.5

Our LightWave benchmark is of a mid-density test model running in the Textured Solid Shaded display.

Again, the M6000 and K5200 outperform all the previous-gen cards, and the K2200 outperforms all but the higher-tier K5000.

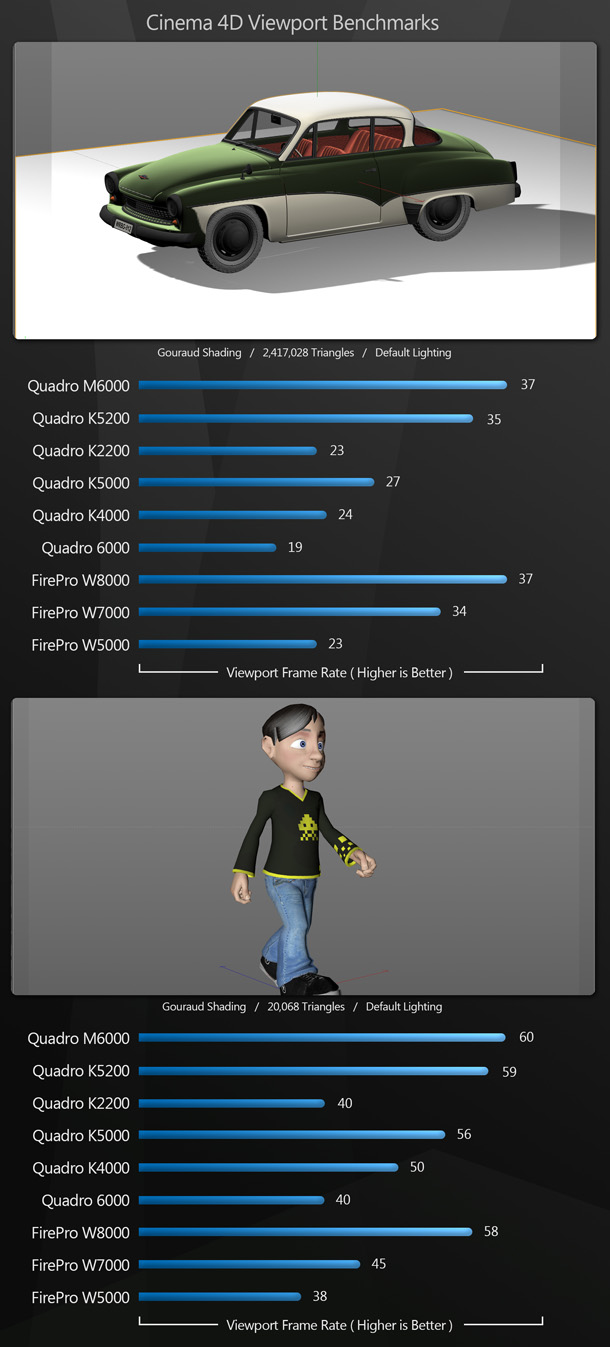

Cinema 4D R14

Our Cinema 4D benchmarks consist of two scenes, one static and one animated, both running in the Gouraud shading mode.

Once again, the new Nvidia cards outperform their previous-generation equivalents significantly. Their lead over the previous-generation AMD cards is smaller, and sometimes non-existent. It would have been interesting to see how the current-gen FirePros fare here, but sadly, AMD declined to take part in this year’s round-up.

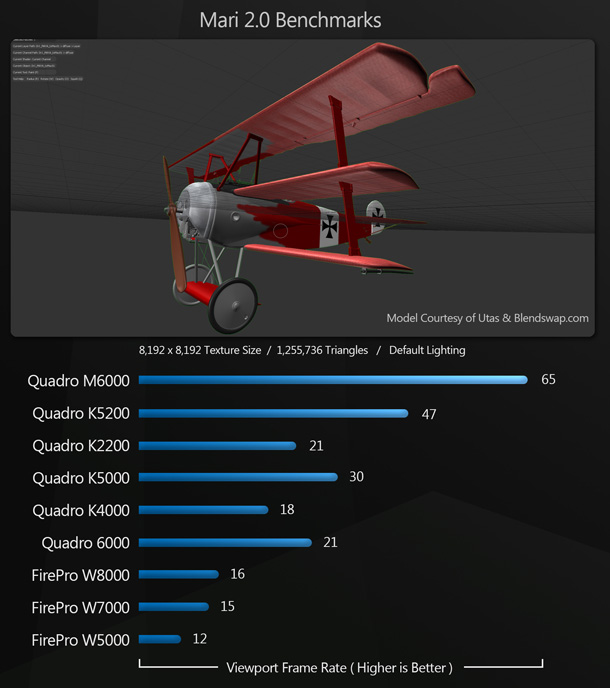

Mari 2.0

Our Mari benchmark consists of a Fokker DR1 triplane model with just over 600,000 polygons, and 8K textures.

Once again, the M6000 and K5200 outperform all of the previous-gen cards, and the K2200 outperforms all but the K5000. The highest performer, the M6000, more than doubles the highest score from the older cards.

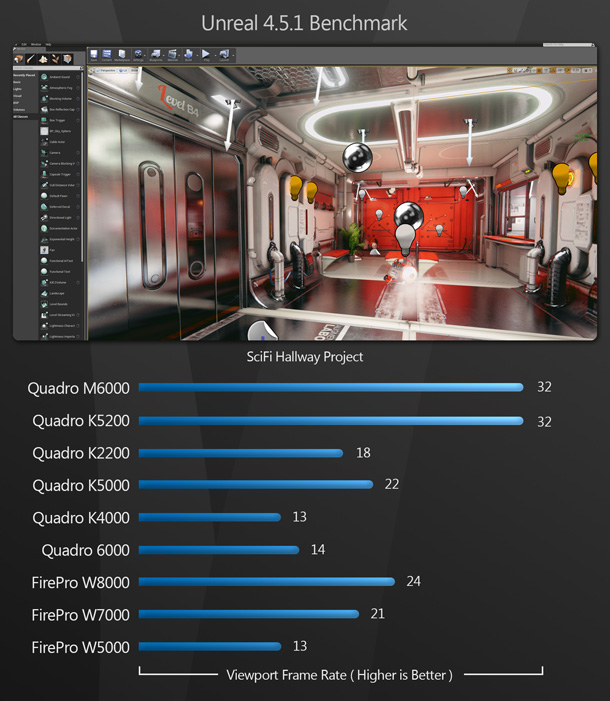

Unreal Engine 4.5.1

Our UE4 benchmark runs in the Unreal editor at the same resolution as our system desktop, 2,560 x 1,600.

Once again, the M6000 and K5200 outperform all of the previous-gen cards, and the K2200 outperforms several of the higher-tier cards from previous generations.

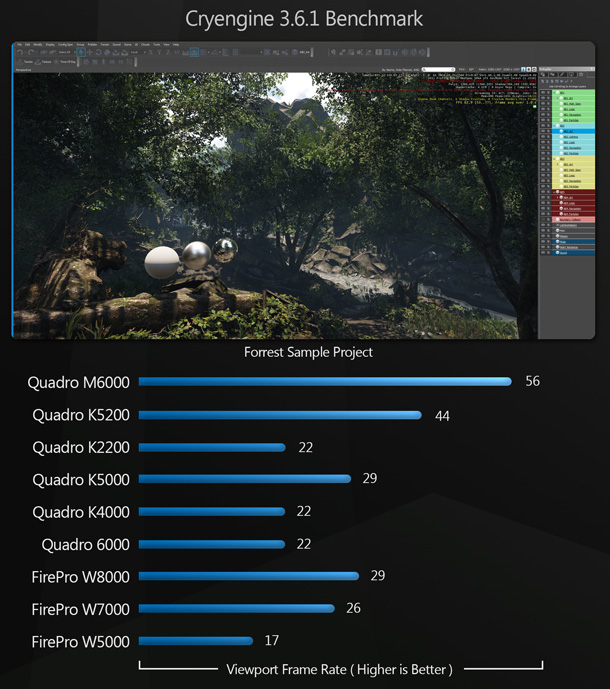

CryEngine 3.6.1

Our CryEngine benchmark also runs in the editor at the same resolution as our system desktop, 2,560 x 1,600.

Again, the M6000 and K5200 significantly outperform all of the previous-gen cards. The K2200 outperforms one of the previous-gen FirePros, and ties with two higher-tier previous-gen Nvidia cards.

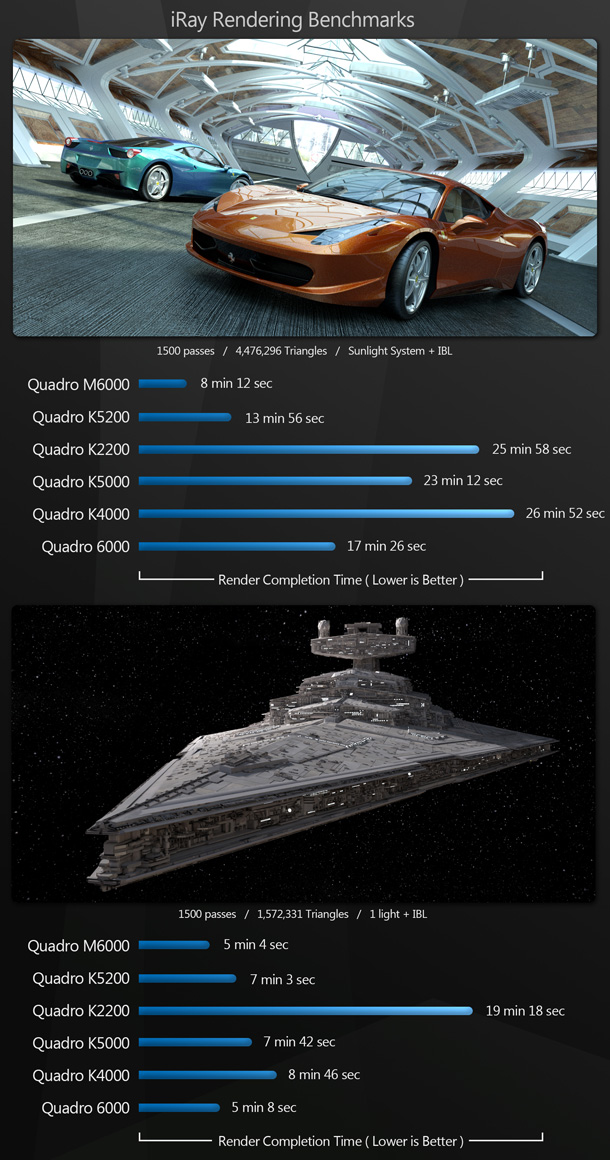

Iray 2.1

The first of our GPGPU benchmarks makes use of Iray, Nvidia’s hybrid GPU/CPU renderer. Because Iray is GPU-accelerated solely through Nvidia’s CUDA API, not OpenCL, we haven’t included the AMD cards here.

In the Ferrari garage scene, the M6000 and K5200 greatly outperform all of the previous-gen cards. The K2200 doesn’t fare as well, but remember that it’s from a lower product tier than all of the previous-gen cards.

With the Star Destroyer scene, the results are much closer. The M6000 still beats all of the older cards, but this time, the Quadro 6000 beats the new K5200, while the new K2200 falls way behind in performance. This is probably due to the fact that the scene contains no raytraced materials, and only one light source: the newer GPUs flex their muscles on more complex scenes.

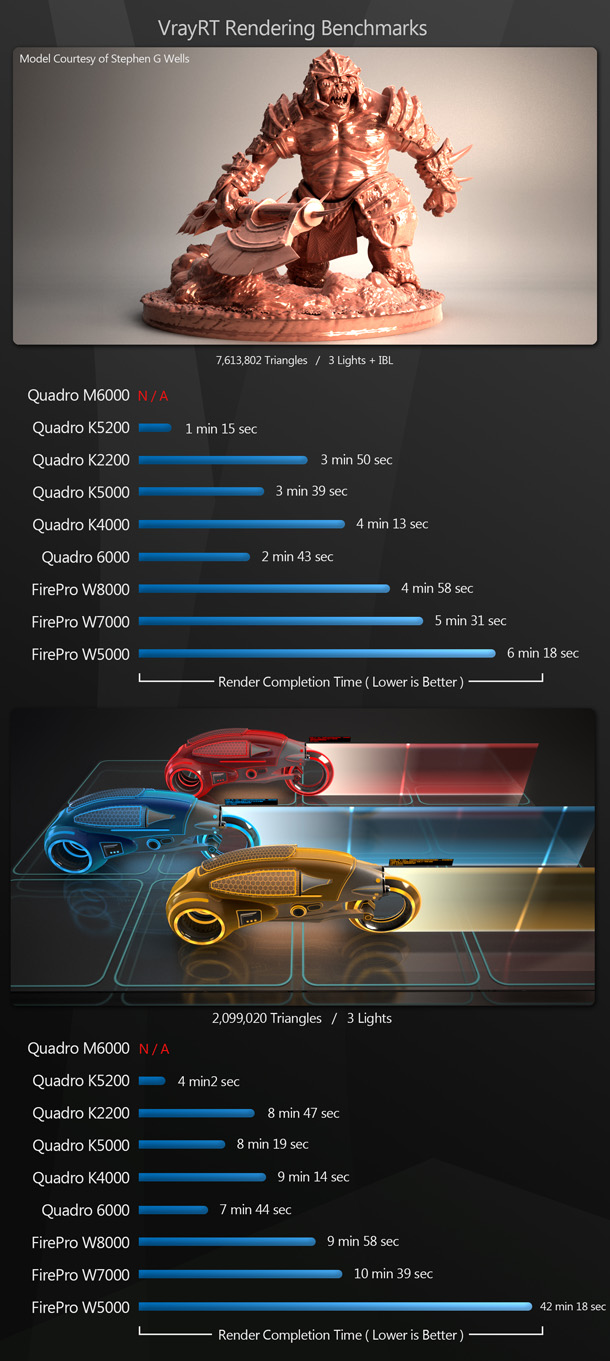

V-Ray 2.5 (V-Ray RT)

Our V-Ray benchmarks use the software’s interactive preview engine, V-Ray RT. It supports both CUDA and OpenCL, so we’ve benchmarked both Nvidia and AMD cards.

Once again, the K5200 outperforms all of the previous-gen cards, while the K2200 outperforms all of the previous-gen AMD cards. The Light Cycles scene is a perfect example of graphics RAM limiting GPU rendering: the scene uses approximately 2.7GB of RAM, so the FirePro W5000, with only 2GB of on-board memory, takes a massive performance hit due to the scene being kicked off the GPU and rendered only with the system’s CPUs.

Unfortunately, the V-Ray benchmarks failed to run on the M6000. I suspect that it may just be too new and that these issues will be resolved with a driver or V-Ray update in the near future.

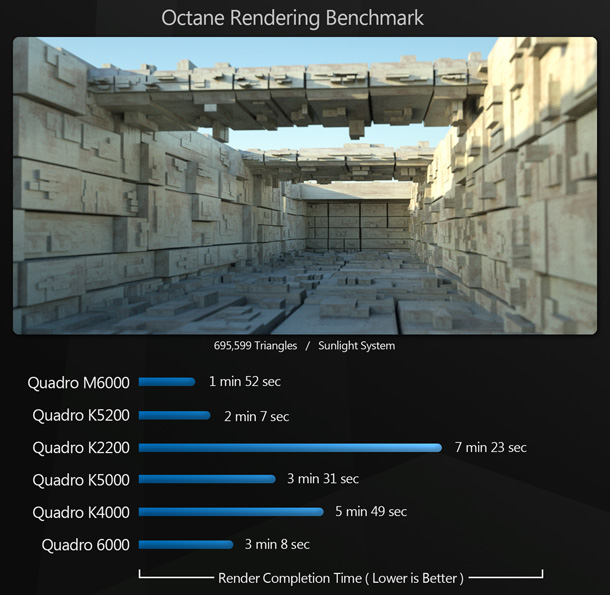

OctaneRender 2.17

Like Iray, OctaneRender is a CUDA-only application, so we’ve only tested the Nvidia cards here.

Once again, the M6000 and K5200 significantly outperform all of the previous-gen cards, while the K2200 falls behind its older, but higher-tier, counterparts.

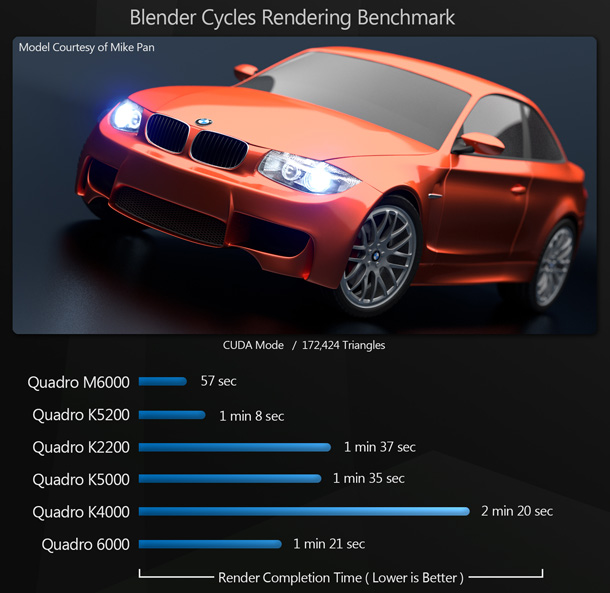

Blender 2.73 (Cycles renderer)

Blender’s Cycles engine also supports GPU acceleration, currently through CUDA. Although active OpenCL support has been resumed with the upcoming 2.75 release, we’ve only benchmarked the Nvidia cards here.

Again, all three new cards perform well, with even the K2200 coming close to the performance of the previous-generation K5000.

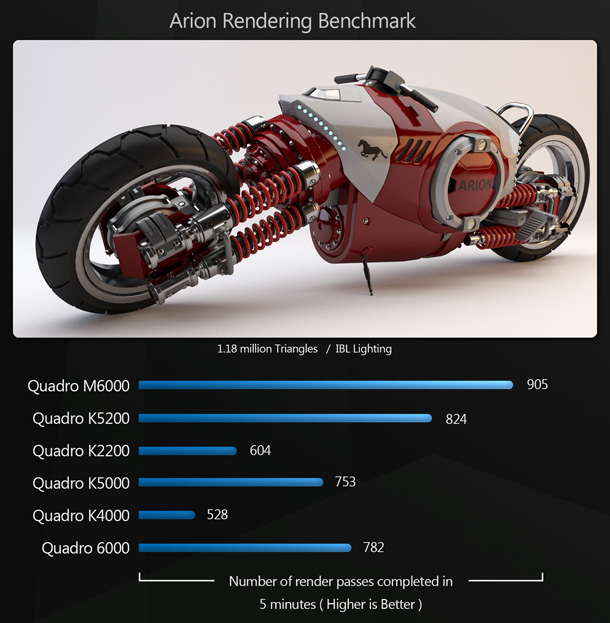

Arion 2.7

The first of several new GPGPU benchmarks in this year’s tests, RandomControl’s Arion is a standalone renderer with plugins for most DCC applications. Like Iray, it is a hybrid CPU/GPU renderer – and also like Iray, it is CUDA-only so, again, we’ve only tested the Nvidia cards.

The Arion benchmark is a little different in that instead of measuring the time to render a single frame, I set a five-minute time limit and recorded the number of render passes that completed in that time frame.

Once again, the mighty M6000 takes the top spot, followed by the K5200. The K2200 comes in the middle of the higher-tier previous-generation cards.
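The fixed-time approach used for the Arion test, counting how many passes finish inside a time limit, can be sketched as follows. This is a generic illustration and does not use Arion's actual API: `count_passes`, `FakeRenderer` and the seven-second pass time are all hypothetical, and the clock is injectable so that the example runs instantly.

```python
import time
from typing import Callable


def count_passes(
    render_pass: Callable[[], None],
    time_limit_s: float,
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Run render_pass repeatedly; count only passes that finish within the limit."""
    deadline = clock() + time_limit_s
    completed = 0
    while True:
        render_pass()
        if clock() > deadline:
            break  # this pass overran the limit, so it is not counted
        completed += 1
    return completed


class FakeRenderer:
    """Stand-in renderer with a simulated clock, so no real time passes."""

    def __init__(self, seconds_per_pass: float) -> None:
        self.now = 0.0
        self.seconds_per_pass = seconds_per_pass

    def clock(self) -> float:
        return self.now

    def render_pass(self) -> None:
        self.now += self.seconds_per_pass


fake = FakeRenderer(seconds_per_pass=7.0)
passes = count_passes(fake.render_pass, time_limit_s=300.0, clock=fake.clock)
print(passes)  # 42 passes fit into the five-minute limit at 7 s per pass
```

With a score of this kind, higher is better, unlike the render-time charts, where a lower figure indicates a faster card.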

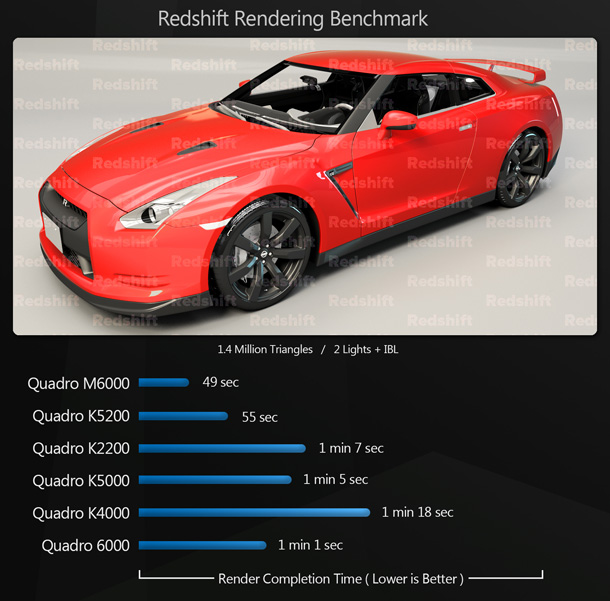

Redshift 1.2.69

A relative newcomer to the DCC market, Redshift is a CUDA-based application, so again, we’ve only tested the Nvidia cards. Unlike most GPU renderers, it’s a biased renderer, designed for very low render times. It integrates into its host applications, Maya and Softimage, via plugins.

Again, the M6000 and K5200 take the top spots, with the K2200 close to the previous-generation K5000. As with Cycles, all of the render times are fairly similar, probably due to the relative simplicity of the benchmark scenes.

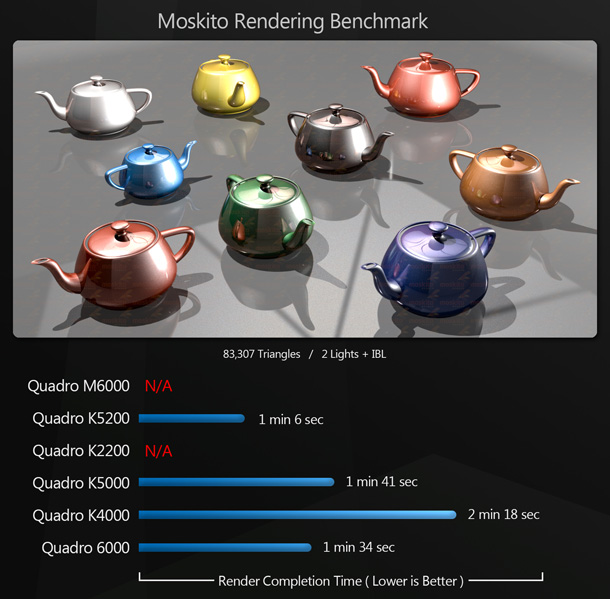

moskitoRender 1.0

The next of our new GPGPU benchmarks, cebas’s 3ds Max renderer, moskitoRender, is another unbiased path tracer. Again, it’s CUDA-based, so we’ve only tested the Nvidia cards.

Again, the K5200 outperforms the previous-generation cards. I was unable to get the software to work with the M6000 and K2200. As with V-Ray, I suspect that this is an issue with Maxwell GPUs, and expect it to be fixed in future by a driver or software update.

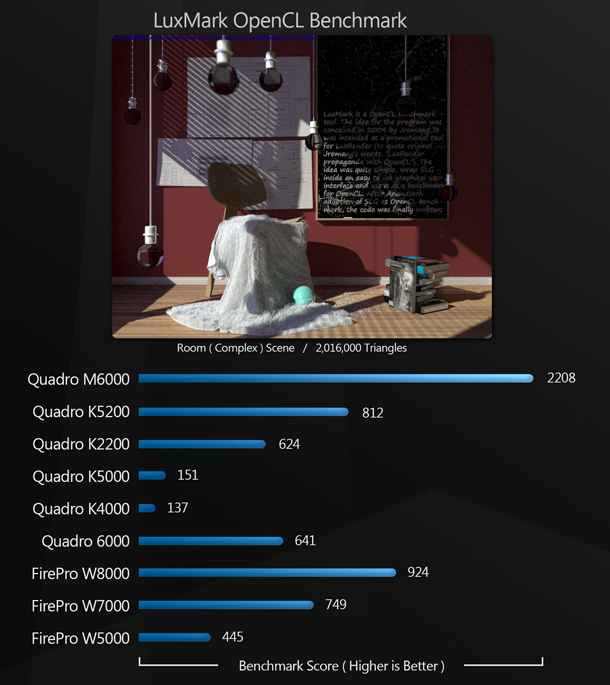

LuxMark 2.0

LuxMark is a benchmarking app based on the open-source OpenCL renderer LuxRender. There are three levels of complexity to choose from: for this review I selected the most complex scene, the Hotel Lobby.

All three new cards score well here compared to the older hardware, although it’s interesting to note how well the previous-generation AMD cards hold up. The M6000 takes top spot with a massive lead over the K5200.

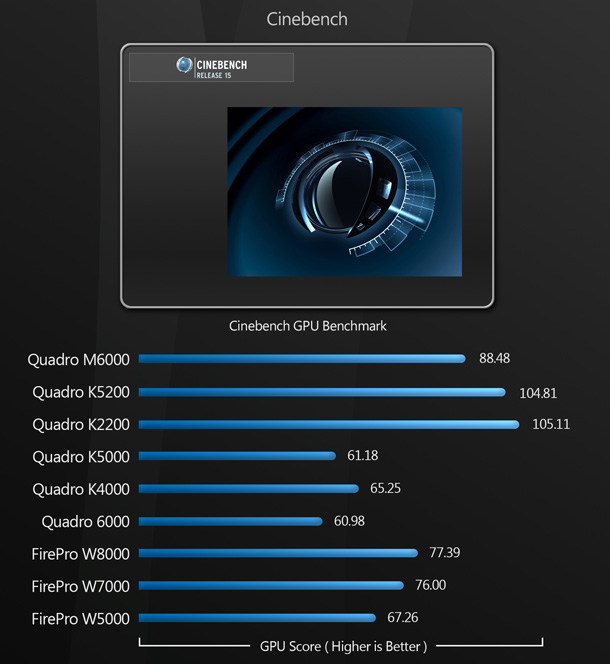

Cinebench 15

Cinebench is the first of the synthetic benchmarks we’re using in these tests. The chart below shows results for the OpenGL test only.

As with many of our viewport performance benchmarks, the new Nvidia cards all outperform all of the previous-generation hardware, although it’s interesting to note that they finish in reverse price order: the K2200 scores highest, followed by the K5200, then the M6000.

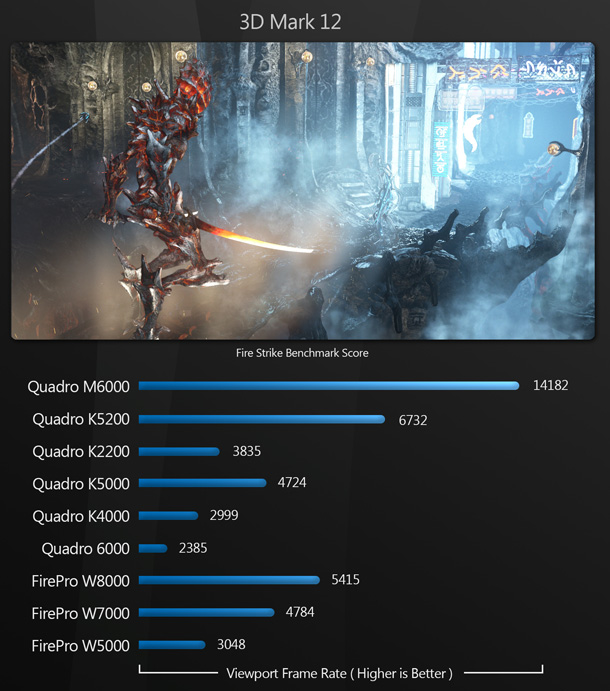

3DMark

Our second synthetic benchmark is the current version of 3DMark: chronologically, version 12, although developer Futuremark doesn’t use a number. Although it is geared towards gaming, it is very GPU-intensive so it is a good gauge for raw 3D performance. I used the base Fire Strike benchmark here.

The Quadro M6000 takes a massive lead over the second-placed K5200, followed by the FirePro W8000 and W7000, the Quadro K5000 and K2200, the FirePro W5000, the Quadro K4000, and lastly the Quadro 6000.

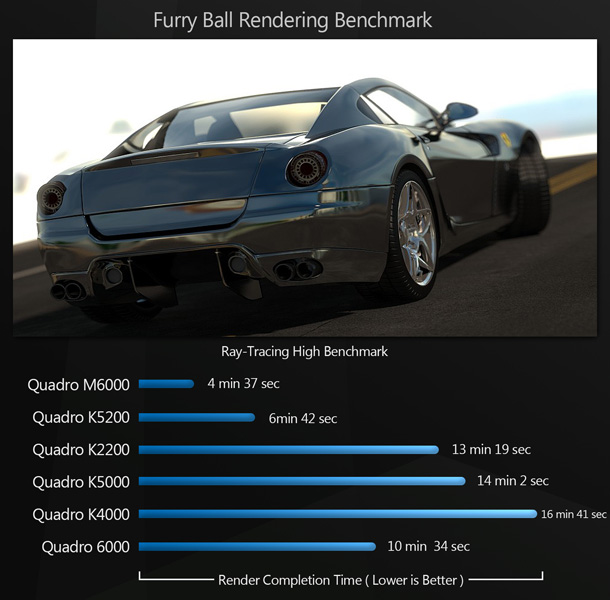

FurryBall 4

FurryBall is both a biased and unbiased renderer with plugins for 3ds Max, Cinema 4D and Maya. Although it was once OpenCL-based, since incorporating raytracing, it has migrated to CUDA, so once again, we’ve only tested the Nvidia cards. I used the software’s built-in High Raytracing benchmark.

Once again, the M6000 takes the top spot, followed by the K5200; and this time, the K2200 outperforms the previous-generation K5000.

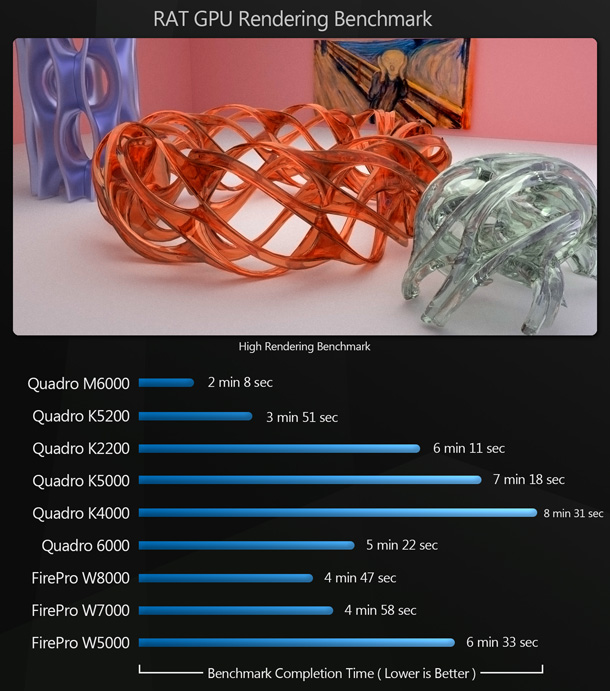

ratGPU 0.8.0

ratGPU is a free, independently developed OpenCL raytracing renderer, and supports 3ds Max and Maya. Again, it has an integrated benchmarking tool, which I used on its High settings.

Again, the M6000 and K5200 take the two top spots, with the K2200 in amongst the previous-generation cards.

GPU computing: general thoughts and future articles

Before we reach the conclusion of this group test, some general thoughts on GPU computing. In addition to application certification, Nvidia is pushing GPU compute performance as a key selling point for its Quadro cards over their consumer counterparts. It has also been doing a lot of development work on Iray, and on its VCA (Visual Computing Appliance), a new rendering/computer server powered by eight M6000 GPUs. This review isn’t the best place to cover these developments, but look for a more in-depth article on the subject soon.

You may also have noticed that some of the GPU computing benchmarks show a much narrower range of scores than others. It seems that as GPU compute performance increases, some of my older test scenes just aren’t complex enough to tax the hardware any more. For example, in the Iray benchmarks, the performance of both new and old cards is very similar in the Star Destroyer scene – although the Ferrari Garage, with its more complex lighting set-up and many reflective surfaces, still returns a wide range of scores. For future reviews, I will slowly be putting together a set of more complex scenes.

Verdict

In terms of performance, Nvidia has hit a home run with its newest Quadros, leading to significantly higher benchmark scores than its previous-generation cards – in some tests, an increase of over 100%.
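Because the charts mix frame rates (higher is better) with render times (lower is better), a percentage gain has to be worked out differently for each. The sketch below shows one reasonable way to do so; the function names and sample values are hypothetical and are not taken from the benchmark charts.

```python
def fps_gain_pct(old_fps: float, new_fps: float) -> float:
    """Percentage increase in frame rate (higher-is-better scores)."""
    return (new_fps - old_fps) / old_fps * 100.0


def render_time_gain_pct(old_seconds: float, new_seconds: float) -> float:
    """Percentage increase in throughput implied by a shorter render time."""
    return (old_seconds / new_seconds - 1.0) * 100.0


# Hypothetical values, chosen only to illustrate the arithmetic.
viewport_gain = fps_gain_pct(old_fps=30.0, new_fps=60.0)
render_gain = render_time_gain_pct(old_seconds=120.0, new_seconds=60.0)

print(f"viewport: +{viewport_gain:.0f}%")
print(f"render:   +{render_gain:.0f}%")
```

On this reading, a 100% increase means a doubled frame rate or a halved render time.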

If you are looking for the ultimate, no-holds-barred professional GPU, look no further than the M6000. Its ultra-high-end Maxwell core, coupled with 12GB of graphics RAM, ensured that it took first place in nearly every single benchmark, including every one of our viewport performance and GPU compute tests that it completed.

The K5200 is also a very potent performer. Although it uses a GK110 core, it vastly outperforms its previous-generation counterpart, the K5000, in every test, both in viewport and compute performance.

The last of the new products, the K2200 was a real surprise. While I couldn’t compare it directly to its predecessor – I didn’t have a K2000 to test – its viewport performance often surpassed the high-end parts from the previous generation, thanks in part to its new Maxwell core architecture.

So which one is right for you? If you work with large 3D scenes, or are looking to do high-end GPU rendering, you should probably consider an M6000 or K5200. If your work purely involves asset creation, or you are looking to do less intensive GPU computing, the K2200 should be a good fit.

But no matter which card best fits your needs – and your budget – on the evidence of this year’s benchmarks, you can’t go far wrong with any of Nvidia’s recent Quadros.

Jason Lewis has over a decade of experience in the 3D industry. He is Senior Environment Artist at Obsidian Entertainment and CG Channel’s regular technical reviewer. Contact him at jason [at] cgchannel [dot] com

Links

Read more about Nvidia’s Quadro range of professional GPUs

Acknowledgements

I’d like to thank several vendors and individuals for their contributions to this article.

Vendors

HP

Autodesk

Evermotion

The Foundry

TurboSquid

Individuals

Shannon Deoul of Raz PR

Sean Kilbride of Nvidia

Stephen G Wells