Open Image Denoise 3 will support temporal denoising

Open Image Denoise lead developer Attila Áfra’s presentation on OIDN from the 2025 High-Performance Graphics conference. The section on Open Image Denoise 3 starts at 02:57:20.

Intel has unveiled Open Image Denoise 3 (OIDN 3), the next major version of its open-source render denoiser, integrated into CG applications like Arnold, Blender, Redshift and V-Ray.

The update will introduce a long-awaited feature: support for temporal denoising, reducing flicker when denoising animations – and will do so for both real-time and final-quality workflows.

The new features were previewed during a presentation at the 2025 HPG conference, but they haven’t received much media coverage, so we felt it was worth posting a story.

An AI-driven hardware-agnostic render denoiser

First released in 2019, Open Image Denoise is a set of “high-performance, high-quality denoising filters for images rendered with ray tracing”.

The technology is now widely integrated into DCC tools and renderers, including Arnold, Blender, Cinema 4D, Houdini, OctaneRender, Redshift, Unity, Unreal Engine and V-Ray.

Like NVIDIA’s OptiX – also integrated into many renderers – it uses AI techniques to accelerate denoising.

But unlike OptiX, OIDN isn’t hardware-specific: while it was designed for Intel 64 CPUs, it supports “compatible architectures”, including AMD CPUs and Apple’s M-Series processors.

Since the release of Open Image Denoise 2.0 in 2023, OIDN has run on GPUs as well as CPUs, and supports AMD, Intel and NVIDIA graphics cards.

Currently supports spatial but not temporal denoising

In the presentation, Intel Principal Engineer Attila Áfra – a Sci-Tech Academy Award-winner for his work on OIDN – runs through the limitations of its current network architecture.

Issues he identifies include visual artifacts such as overblurring, minor color shifts, and ringing, particularly in lightmaps.

However, the biggest limitation is that OIDN operates on a single frame at a time, meaning that denoising is not temporally coherent: it can vary from frame to frame.

As a result, it can cause noticeable flickering when denoising animations rather than still images.

OIDN 3: support for temporal denoising, for both real-time and final-frame workflows

OIDN 3, the first major update to the architecture since the original release, should resolve many of the visual artifacts – and, more importantly, introduce support for temporal denoising.

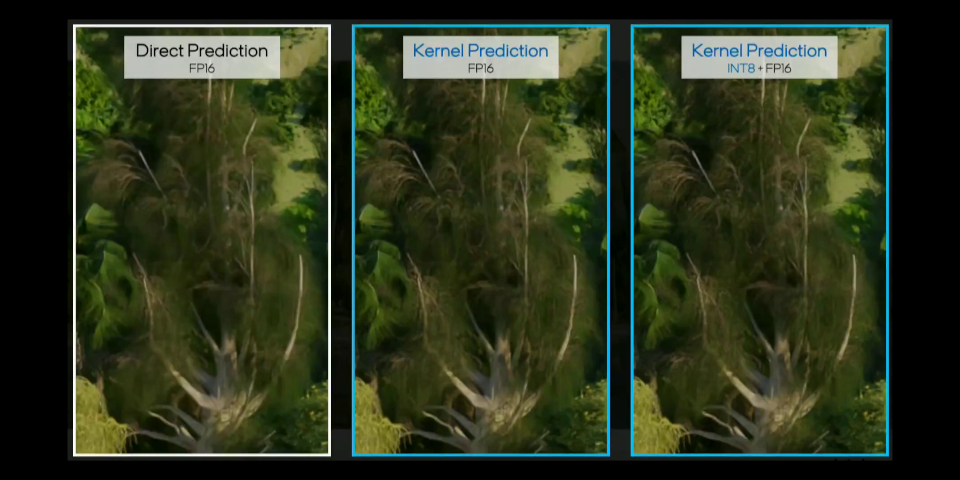

The new architecture is still based on U-Net, but moves from direct prediction to kernel prediction-based denoising, and comes in variants for real-time and final-frame work.
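To illustrate the distinction: a direct-prediction network outputs the denoised value of each pixel itself, whereas a kernel-predicting network outputs a small set of filter weights per pixel, which are then applied to the noisy input. The plain-Python sketch below shows only that final filtering step, and is not OIDN's implementation. The function name `apply_predicted_kernels`, the 3×3 kernel size and the hand-written box weights (standing in for network output) are all assumptions made for illustration.

```python
def apply_predicted_kernels(noisy, kernels, radius=1):
    """Filter a grayscale image with a separate kernel for every pixel.

    noisy   -- 2D list of floats (height x width)
    kernels -- kernels[y][x] is a list of (2*radius+1)**2 non-negative
               weights, standing in for what a kernel-predicting network
               would output for that pixel (hypothetical data)
    """
    height, width = len(noisy), len(noisy[0])
    size = 2 * radius + 1
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weights = kernels[y][x]
            total = sum(weights)
            acc = 0.0
            for ky in range(size):
                for kx in range(size):
                    # Clamp neighbor coordinates at the image border.
                    ny = min(max(y + ky - radius, 0), height - 1)
                    nx = min(max(x + kx - radius, 0), width - 1)
                    acc += weights[ky * size + kx] * noisy[ny][nx]
            # Normalize so the result is a weighted average of input pixels.
            out[y][x] = acc / total if total > 0 else noisy[y][x]
    return out


# Hypothetical stand-in for network output: a uniform 3x3 box kernel everywhere.
noisy = [
    [1.0, 1.0, 1.0],
    [1.0, 10.0, 1.0],  # a single bright outlier pixel
    [1.0, 1.0, 1.0],
]
box = [1.0] * 9
kernels = [[box for _ in row] for row in noisy]
denoised = apply_predicted_kernels(noisy, kernels)
```

Because each output pixel is a normalized, non-negative weighted average of nearby input pixels, it cannot fall outside the range of values in its neighborhood, which is one general property that distinguishes kernel prediction from predicting colors directly.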

Real-time denoising uses the previous denoised frame, backward motion vectors and depth data as inputs.
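The presentation summary doesn't spell out how the network consumes these inputs, but temporal methods in general use backward motion vectors to line up the previous frame with the current one, and depth to detect where that history is unusable. The sketch below shows that generic reprojection step in plain Python. It is an illustration under stated assumptions, not OIDN 3 code: the function name `reproject_previous`, the motion-vector convention (a pixel offset pointing from the current frame back to the previous one), the nearest-neighbor lookup and the simple depth tolerance test are all hypothetical.

```python
def reproject_previous(prev_frame, prev_depth, cur_depth, motion, depth_tol=0.1):
    """Warp the previous denoised frame into the current frame.

    motion[y][x] is an assumed (dx, dy) offset in pixels pointing from a
    current-frame pixel back to where that surface was in the previous frame.
    Returns (warped, valid); valid[y][x] is False where no history is usable.
    """
    height, width = len(cur_depth), len(cur_depth[0])
    warped = [[0.0] * width for _ in range(height)]
    valid = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = motion[y][x]
            px, py = round(x + dx), round(y + dy)  # nearest-neighbor lookup
            if not (0 <= px < width and 0 <= py < height):
                continue  # surface was off-screen in the previous frame
            if abs(prev_depth[py][px] - cur_depth[y][x]) > depth_tol:
                continue  # depth mismatch: likely a disocclusion
            warped[y][x] = prev_frame[py][px]
            valid[y][x] = True
    return warped, valid


# Toy 1x4 frame whose content has shifted one pixel to the right, so every
# current pixel looks one pixel to the left to find its history.
prev_frame = [[0.1, 0.2, 0.3, 0.4]]
depth = [[1.0, 1.0, 1.0, 1.0]]
motion = [[(-1, 0)] * 4]
warped, valid = reproject_previous(prev_frame, depth, depth, motion)
```

In this toy case the leftmost pixel has no history, because its source lies outside the previous frame, so a denoiser would have to rely on spatial information alone there.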

Final-quality denoising also uses future frames and forward motion vectors as inputs, improving the visual quality of the output at the expense of increasing processing time.

Other benefits of the new architecture include the option to use the same kernels for multiple AOVs, and support for supersampling.

You can see comparisons between the outputs of the current and new architectures on the well-known Moana island data set in the video embedded at the top of the story.

Licensing, system requirements and release date

Open Image Denoise 3 is in development. It was originally due for release at the end of 2025, but Intel tells us that it is now scheduled for the second half of 2026.

At the time of writing, the latest release is still OIDN 2.4.1.

Open Image Denoise is compatible with 64-bit Windows, Linux and macOS. You can find a full list of CPU and GPU architectures supported on the OIDN website.

Both source code and compiled builds are available under an Apache 2.0 licence.

Read more about Open Image Denoise on the OIDN website

(No more information about OIDN 3 at the time of writing)
