NVIDIA unveils fVDB

NVIDIA has unveiled fVDB, a new deep learning framework for “building AI algorithms that scale to the size of reality”.

The technology, which is implemented as an extension to PyTorch, builds AI operations on top of OpenVDB, the open standard for representing volumetric data.

Potential uses include creating “reality-scale” digital twins, 3D generative AI at the “scale of city blocks”, and up-resing existing simulations.

fVDB was one of NVIDIA’s announcements at SIGGRAPH 2024, along with new generative AI models for OpenUSD development.

fVDB is intended for working with “massive data sets” on the scale of digital cities.

A high-performance spatial AI framework built on OpenVDB and NanoVDB

NVIDIA describes fVDB as a deep learning framework for “sparse, large-scale, high-performance spatial intelligence”, enabling software developers to build “AI architectures and algorithms that scale to the size of reality”.

As an efficient way to represent data sets of that scale, it uses OpenVDB, the open standard for sparse volumetric data, and NanoVDB, NVIDIA’s GPU-friendly simplified representation of that data.

fVDB then builds AI operators on top of NanoVDB, making it possible to perform common tasks like convolution, pooling, attention and meshing.
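To give a sense of why a sparse representation matters for operators like pooling, here is a minimal sketch in plain Python. It does not use fVDB, OpenVDB or NanoVDB: the dictionary-based grid and the function name `sparse_max_pool` are assumptions made purely for illustration, and the real libraries use far more sophisticated tree structures on the GPU.

```python
def sparse_max_pool(voxels, factor=2):
    """Max-pool a sparse voxel grid stored as {(i, j, k): value}.

    Hypothetical helper for illustration only. Only active voxels are
    visited, so the cost scales with the number of occupied voxels,
    not with the volume of the bounding box.
    """
    pooled = {}
    for (i, j, k), value in voxels.items():
        # Map each fine voxel to the coarse cell that contains it.
        coarse = (i // factor, j // factor, k // factor)
        if coarse not in pooled or value > pooled[coarse]:
            pooled[coarse] = value
    return pooled


# A toy scene: three active voxels inside a 1000 x 1000 x 1000 bounding box.
voxels = {(0, 0, 0): 0.2, (1, 1, 0): 0.9, (998, 999, 999): 0.5}
pooled = sparse_max_pool(voxels)

# A dense grid would need one entry per cell, active or not.
dense_cells = 1000 ** 3
print(f"active voxels: {len(voxels)}, dense cells: {dense_cells}")
print(pooled)
```

In this toy case, the pooling step touches three voxels, where a dense grid of the same extent would hold a billion cells. That gap is the basic reason sparse structures are used for data sets on the scale of cities.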

Potential use cases for fVDB range from creating large-scale generative AI models and digital twins to up-resing existing simulations.

Mainly intended for digital twins, but could be used for entertainment work

The primary practical use case for fVDB seems to be the generation of large-scale digital twins of the real world, for tasks like town planning and industrial simulations.

However, there are obvious potential applications to entertainment work, such as generating CG cities for visual effects or game development.

The video at the top of the story shows a couple of interesting demos built using fVDB, including a generative AI model for creating low-res voxel-based representations of entire city blocks, complete with houses, roads and trees.

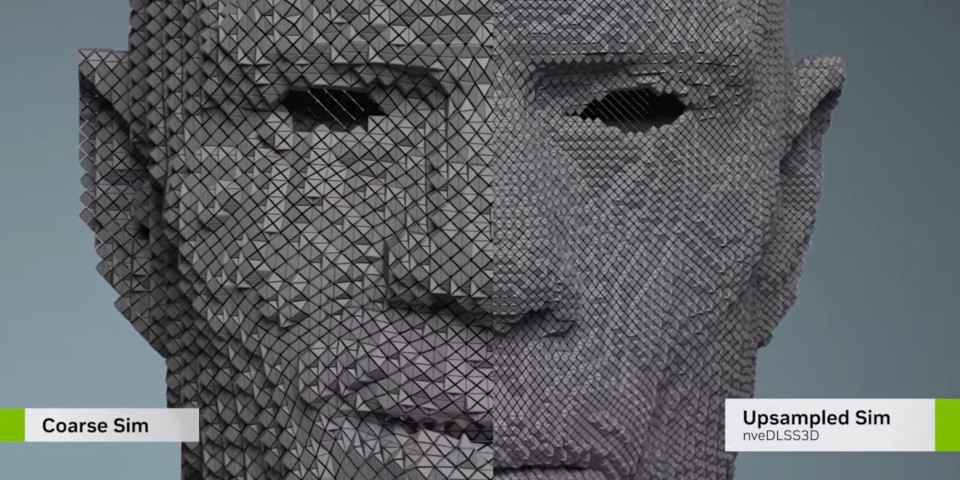

Another demo shows fVDB in use to up-res an existing facial simulation.

NVIDIA told CG Channel that fVDB is a “very general technology”, and that “we expect it to be used for everything”.

Available as a PyTorch extension, and integrated with existing technologies

NVIDIA pitches fVDB as more powerful than existing similar technologies, capable of processing data on “4x spatial scales and 3.5x faster than prior frameworks”.

It is also intended to be easier to implement, providing “easy-to-use APIs so you don’t have to patch together different libraries”.

fVDB is available as a PyTorch extension, can read and write existing VDB data sets out of the box, and integrates with existing NVIDIA technologies like Warp and Kaolin.

How will software developers be able to get access to fVDB?

Source code for fVDB will be made available as part of the Academy Software Foundation-maintained GitHub repository for OpenVDB itself.

fVDB functionality will also become available via NIM, NVIDIA’s system of containers for hosting GPU-accelerated AI microservices.

NVIDIA plans to roll out three fVDB microservices: one for generating meshes, one for generating large-scale NeRFs in USD, and one for up-resing physics simulations.

System requirements: needs NVIDIA hardware

Any applications built on fVDB will need to be running on NVIDIA hardware.

The framework is “built from the ground up on core NVIDIA tech”, including CUDA, NVIDIA’s proprietary GPU compute API, and the Tensor cores in current NVIDIA GPUs.

License conditions and release date

fVDB is due to be merged into the OpenVDB GitHub repository “shortly”. Source code in the repository is available under an open-source MPL 2.0 license.

Software developers can also apply for early access to the fVDB PyTorch extension.

NVIDIA hasn’t announced a release date for the fVDB NIM microservices.

Read more about fVDB on the NVIDIA Developer website

(Includes link to apply for the fVDB PyTorch extension early access program)
