Review: Nvidia Titan RTX

Nvidia’s latest Turing GPU offers the power of a workstation card at a price closer to that of a gaming GPU. Jason Lewis assesses how it performs with a range of key DCC and rendering applications.

As I mentioned in my recent review of AMD’s Radeon Pro WX 8200, the last couple of years have seen huge steps forward in workstation hardware. One of the key innovations has been Nvidia’s Turing GPU architecture, found in its latest RTX graphics cards.

Turing is a huge advance over previous Nvidia architectures: not only is it faster all around, but it supports dedicated hardware-accelerated ray tracing through the processors’ new RT cores. I won’t be going into technical detail here (if you want to know how the hardware works, Anandtech has a good article). Instead, I am going to focus on how Turing performs in the real world – and specifically, how it affects DCC software.

The RTX-powered GPU we are looking at today is the newest of Nvidia’s high-end Titan range of graphics cards, the $2,499.99 Titan RTX. The original Titan GPU, the GeForce GTX Titan, showed up back in early 2013, and was followed a year later by the much-loved GeForce GTX Titan Black and the GeForce GTX Titan Z, with new cards being added each time Nvidia moved to a new GPU architecture.

The Titan GPUs started out as super-high-end gaming cards for those who wanted the best performance available, and for whom money was no object. But as time went on, it began to feel like they were unofficially filling a niche in the 3D graphics market. Content creators who needed more compute power – and more importantly, more memory – than Nvidia’s GeForce range of gaming cards, but who didn’t need the ISV certifications or support available with its more expensive professional Quadro cards, were turning to the Titans to power their desktop workstations. On top of that, there were the rumors that Nvidia had begun quietly tuning the Titan drivers to boost performance in DCC applications, further cementing the reputation of the Titan GPUs as ‘Quadro, but not Quadro’.

Now, with the Titan RTX, Nvidia is officially including content creators, as well as data scientists and developers of AI and deep learning systems, as part of its target market.

Specifications

The Titan RTX has higher specs than any of Nvidia’s current-generation GeForce RTX cards, with its 24GB of GDDR6 memory more than double that available on the GeForce RTX 2080 Ti. It has 12 memory controllers on a 384-bit bus, for a total memory bandwidth of 672 GB/s.
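As a rough check on the bandwidth figure, peak memory bandwidth can be estimated from the bus width and the per-pin data rate. The sketch below assumes a GDDR6 data rate of 14 Gbps per pin, which is not stated in this review, and the function name is purely illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps

# 12 memory controllers of 32 bits each give the 384-bit bus quoted above.
bus_width = 12 * 32

# Assumption: 14 Gbps per pin for the card's GDDR6 memory (not quoted in the review).
titan_rtx_bandwidth = memory_bandwidth_gb_s(bus_width, 14.0)
print(f"{titan_rtx_bandwidth:.0f} GB/s")
```

Under that assumption, the result matches the 672 GB/s quoted in the specifications.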

In addition, the Titan RTX uses a fully unlocked TU102 GPU with 4,608 CUDA cores, 72 streaming multiprocessors, 576 Tensor cores for machine learning, and 72 RT cores for ray tracing. At 1,350 MHz, its base clock speed is the same as the 2080 Ti, but it boasts a slightly higher boost clock speed: 1,770 MHz as opposed to 1,635 MHz. The Titan RTX’s one weakness is compute performance: its FP32 performance is 16.3 Tflops, but its FP64 performance is just 509 Gflops, much lower than that of the previous-generation Titan V.
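The compute figures can be reproduced approximately from the core count and boost clock. This is a back-of-the-envelope sketch, not Nvidia's published method: it assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock, and that FP64 runs at 1/32 of the FP32 rate. Neither assumption is stated in this review.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in Tflops.

    Assumes 2 floating-point operations per core per clock (one fused multiply-add).
    """
    flops_per_second = cuda_cores * 2 * boost_clock_mhz * 1e6
    return flops_per_second / 1e12

# Core count and boost clock taken from the specifications above.
fp32_tflops = peak_fp32_tflops(4608, 1770)

# Assumption: FP64 throughput is 1/32 of FP32 on this GPU.
fp64_gflops = fp32_tflops * 1000 / 32

print(f"FP32: {fp32_tflops:.1f} Tflops, FP64: {fp64_gflops:.0f} Gflops")
```

These are theoretical peaks at the boost clock. Sustained performance depends on the workload and on how long the card can hold that clock speed.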

The Titan RTX is a 280W card, with a pair of 8-pin power connectors to feed the hungry GPU. Nvidia recommends a minimum 650W power supply, although 800W or higher would probably be preferable, especially if your system is sporting a newer high-core-count CPU like an AMD Ryzen Threadripper or an Intel Core i9. The Titan RTX uses Nvidia’s reference design cooler with its full-length vapour chamber and dual axial fans, but unlike the silver/aluminium GeForce RTX 2080 and 2080 Ti, it sports a bling-tastic gold colour scheme. It’s so pretty that it looked way out of place in the wire-jumbled workstation I used for testing. This card wants to be in a windowed, LED-ridden, art-project PC.

At the rear of the card are five display outputs: three DisplayPort 1.4 ports, an HDMI 2.0 port, and a USB Type-C port. Nvidia claims that the DisplayPort 1.4 connector can drive up to an 8K display at 60Hz on a single link. Along the top of the card, Nvidia has done away with SLI in favor of its newer NVLink technology: a high-speed GPU interconnect boasting a bandwidth of 100 GB/s.

Technology focus: NVLink and DirectX Raytracing

NVLink is fairly new, and software developers need to tune their products to really take advantage of that bandwidth, so don’t expect to see massive performance increases just yet. In the short term, when adding a second Titan card to your rig, performance will probably scale in a very similar way to SLI. However, there is one aspect of NVLink that should be a big boon to content creators right now, and that is the fact that the technology allows multiple GPUs to share framebuffer memory.

In the pre-NVLink days, connecting multiple GPUs via an SLI bridge would do nothing to increase GPU rendering performance. The renderer would load the scene independently into each GPU’s memory, and it had to fit into the space available, since applications were usually unable to page data out to system RAM. With NVLink, the high bandwidth of the interconnect permits GPUs to share memory, so with a pair of Titan RTX cards, your available capacity goes from an already impressive 24GB to a massive 48GB. While most GPU renderers can now shift data out of the GPU memory into system RAM – typically referred to as ‘out of core’ – to prevent GPU compute tasks from failing outright if the scene exceeds the available graphics memory, they do so at the cost of much reduced performance. If you are rendering large datasets on the GPU, you are going to benefit greatly from the large pool of memory that NVLink can create.
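To make the capacity argument concrete, here is a minimal sketch of the check a user might run when sizing a scene for GPU rendering. The function and its parameters are hypothetical, it ignores renderer overhead, and it assumes that the renderer actually supports memory pooling over NVLink.

```python
def fits_in_gpu_memory(scene_gb: float, per_gpu_gb: float = 24,
                       gpus: int = 1, nvlink_pooling: bool = False) -> bool:
    """Return True if the scene fits in graphics memory without going out of core.

    Without pooling, every GPU holds its own full copy of the scene, so the
    limit is the memory of a single card. With NVLink pooling (assumed to be
    supported by the renderer), the limit is the combined memory.
    """
    capacity_gb = per_gpu_gb * gpus if nvlink_pooling else per_gpu_gb
    return scene_gb <= capacity_gb

# A hypothetical 30GB scene on two Titan RTX cards:
print(fits_in_gpu_memory(30, gpus=2))                       # no pooling
print(fits_in_gpu_memory(30, gpus=2, nvlink_pooling=True))  # pooled to 48GB
```

In the first case, the renderer would have to go out of core, with the performance cost described above. In the second, the scene stays resident in graphics memory.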

Another thing to note is that the Titan RTX’s real-time ray tracing capabilities are enabled through a new Microsoft API called DirectX Raytracing (DXR). Since DXR is a DirectX 12 feature, it is unclear whether we will see RTX-accelerated ray tracing in Linux applications, or in Windows apps that use OpenGL or Vulkan.

Testing procedure

My test system for this review was a BOXX Technologies APEXX T3 sporting an AMD Ryzen Threadripper 2990WX CPU, 128GB of 2,666MHz DDR4 RAM, a 512GB Samsung 970 Pro M.2 SSD, and a 1,000W power supply. Its horsepower ensures that the only bottleneck on graphics performance should be the GPU itself. The system was connected to a 32-inch 4K display running its native resolution of 3,840 x 2,160px at 60Hz.

For comparison with the Titan RTX, I benchmarked a previous-generation Titan V and two older sub-$1,000 Nvidia gaming cards still often used for GPU rendering: the GeForce GTX 1080 and the GeForce GTX 1070 – in both cases, the Asus Founder’s Edition models. Unfortunately, I didn’t have access to any of the newer GeForce RTX cards, and since some of the GPU renderers I wanted to use for benchmarking solely or primarily make use of Nvidia’s CUDA API, I didn’t include any AMD cards.

The benchmarks were run on Windows 10 Pro, and were broken into three categories:

Viewport and editing performance

3ds Max 2019, Blender 2.79b, Fusion 360 2019, LightWave 2019, Maya 2019, Modo 12.1v2, SolidWorks 2019, Substance Painter 2018.3, Unity 2018.2.0b9, Unreal Engine 4.21

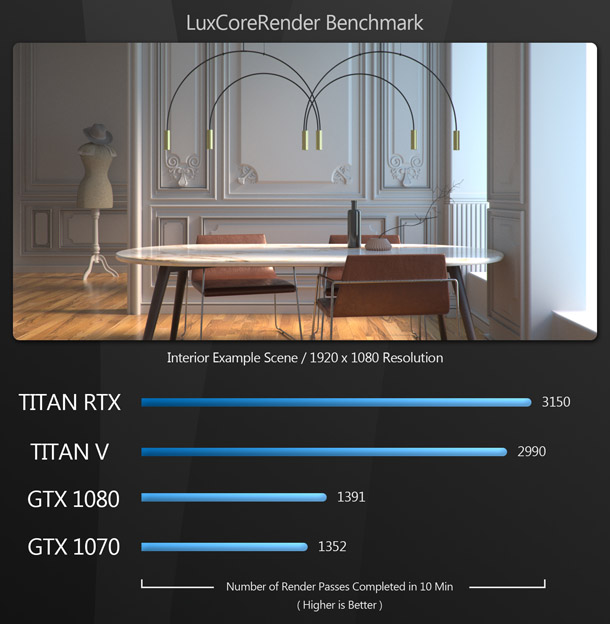

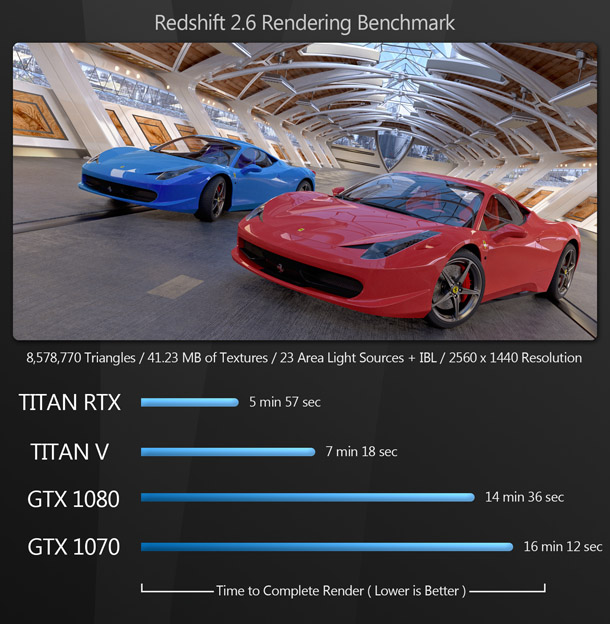

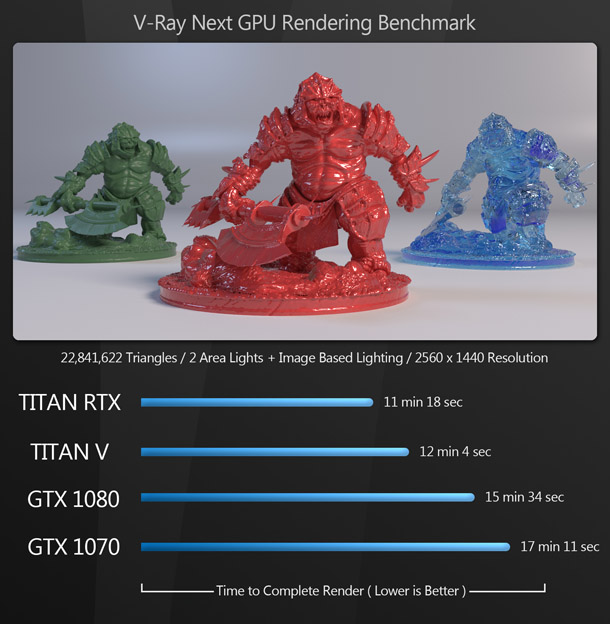

GPU rendering

Blender 2.79b (using the Cycles renderer), Cinema 4D R20 (using Radeon ProRender), IndigoBench 4, LuxCoreRender 2.1, Redshift for 3ds Max 2.6, SolidWorks 2019 (using Visualize), V-Ray Next for 3ds Max (using V-Ray GPU)

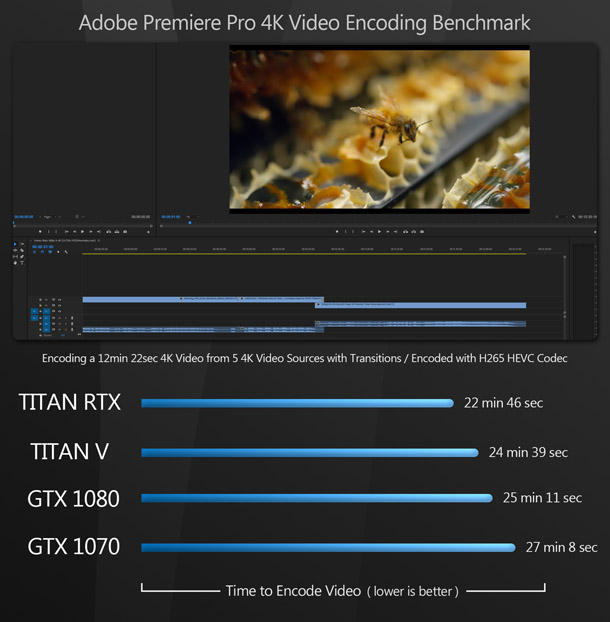

Other benchmarks

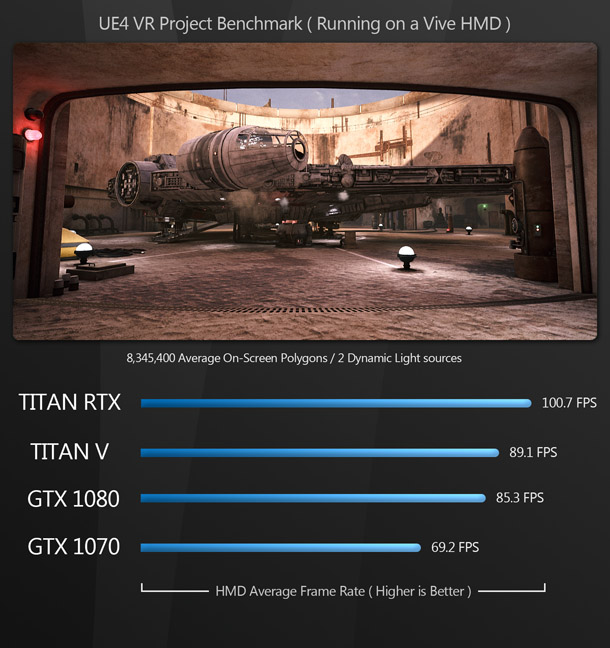

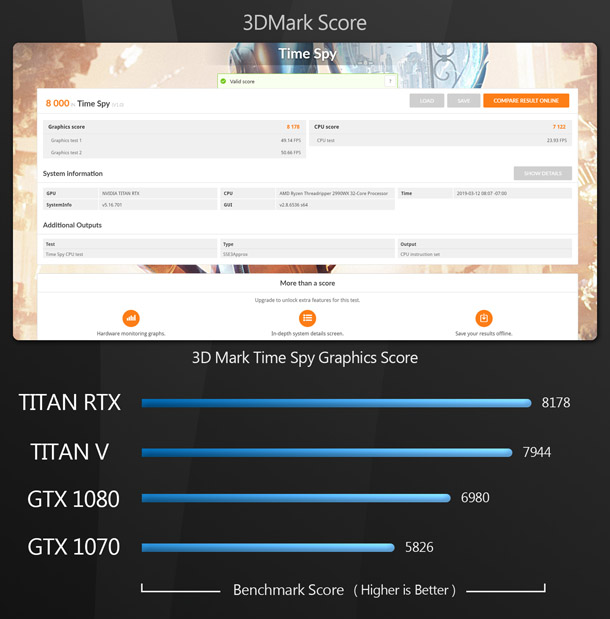

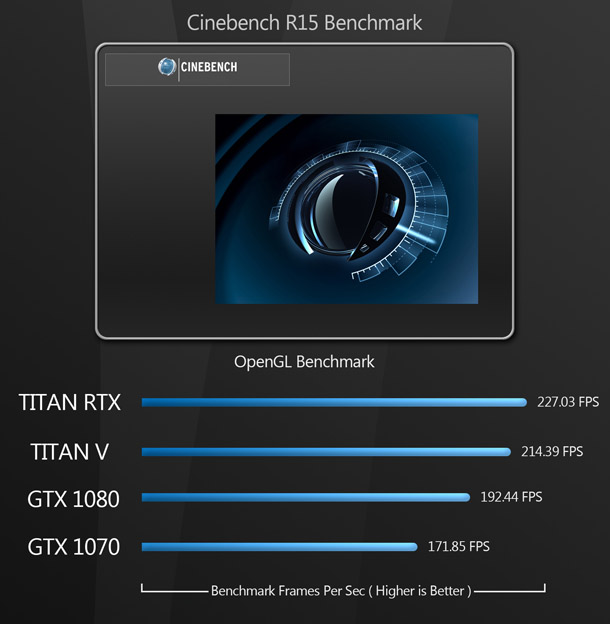

3DMark, Cinebench R15, Premiere Pro CC 2018, Unreal Engine 4.21 (testing VR performance)

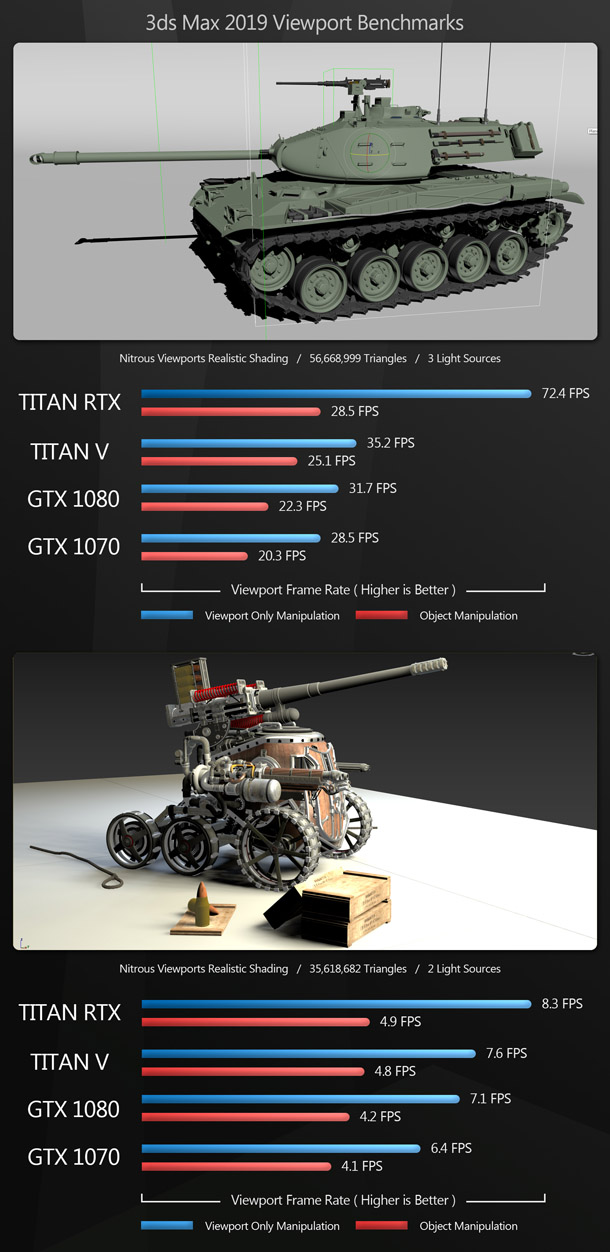

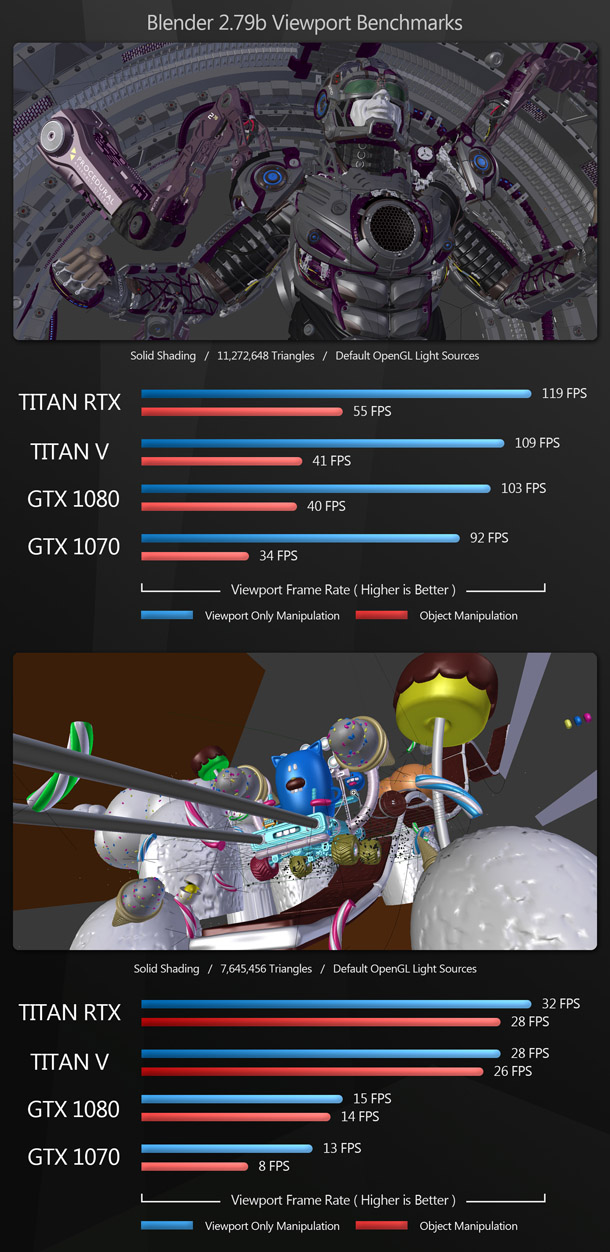

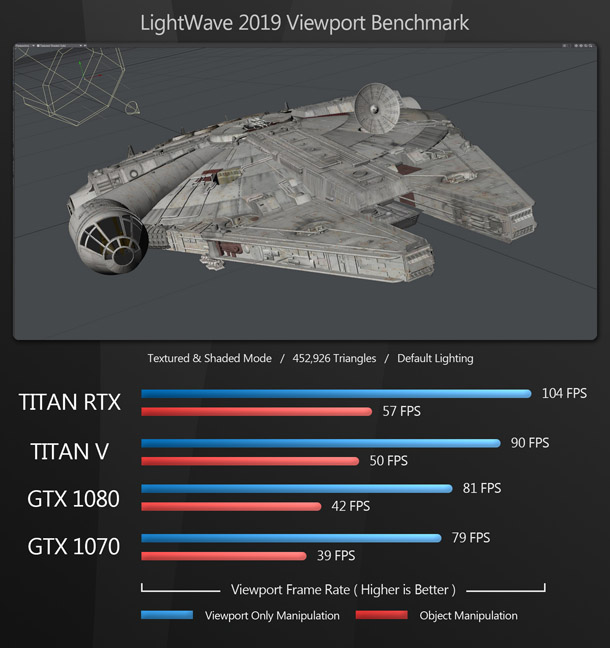

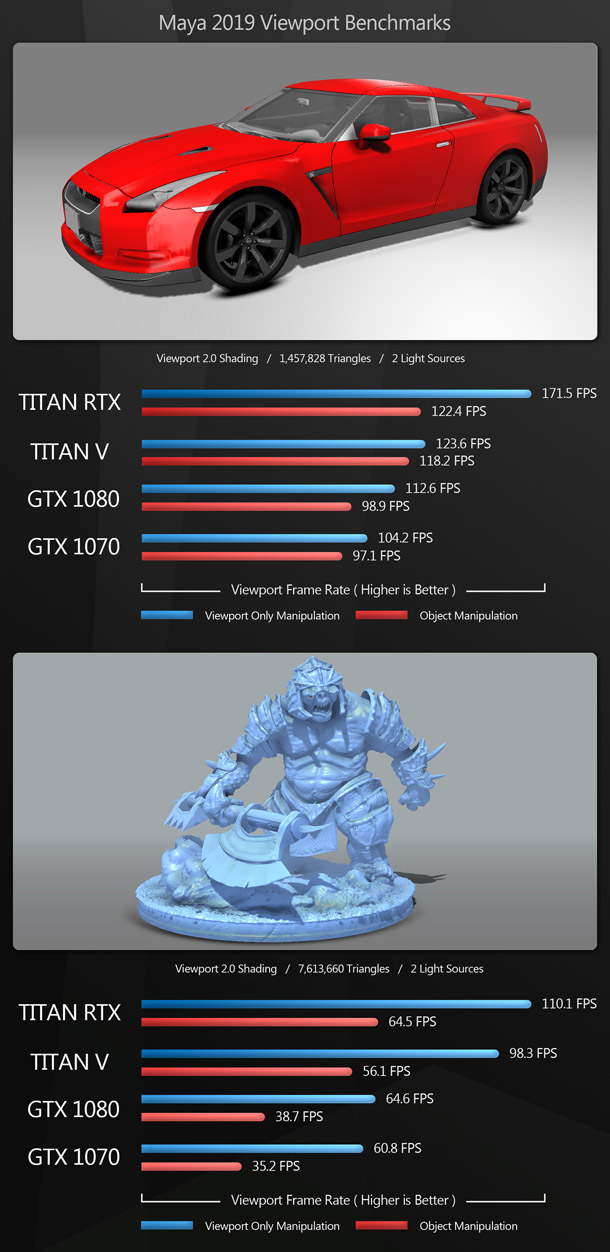

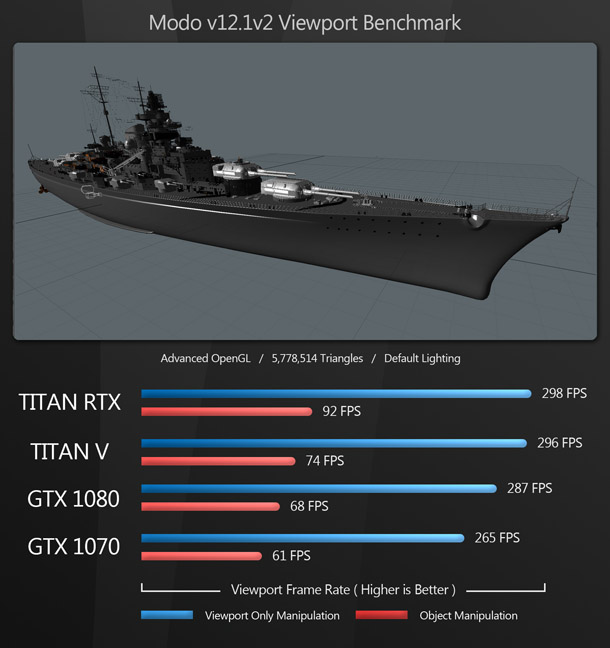

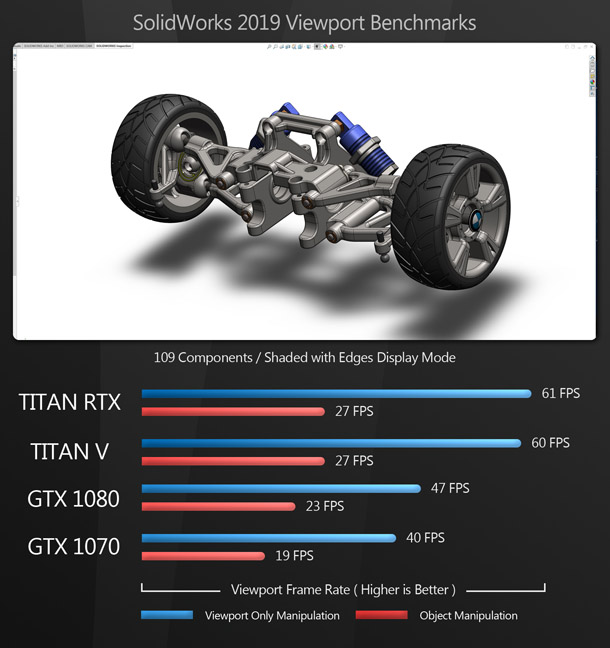

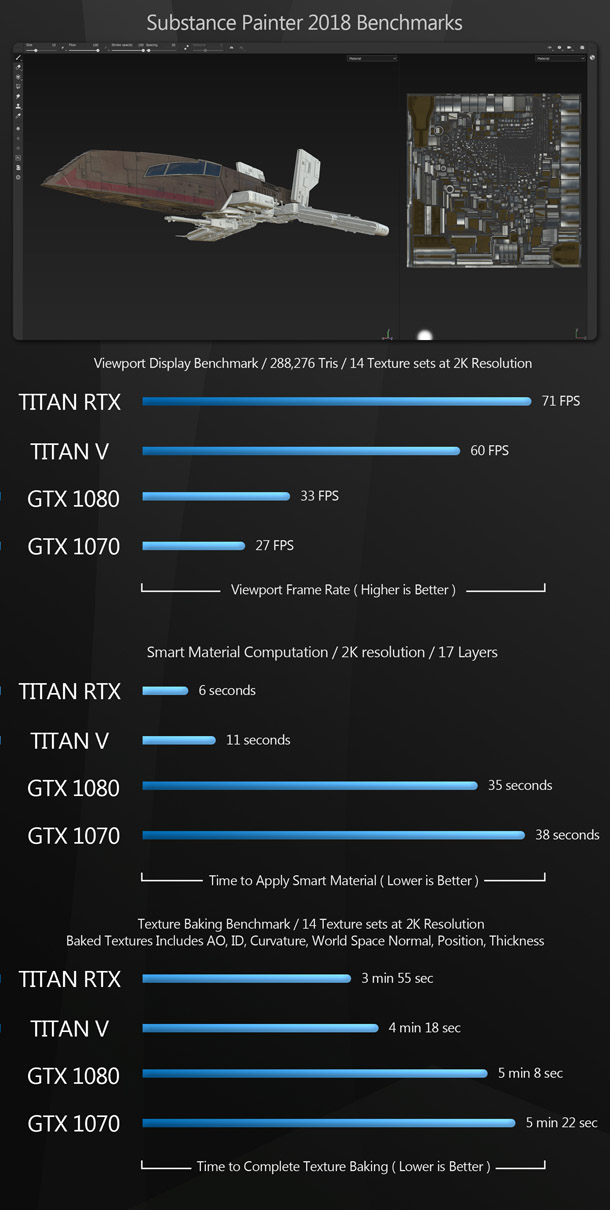

Frame rate scores represent the figures attained when manipulating the 3D models and scenes shown, averaged over 8-10 testing sessions to eliminate inconsistencies. One change to previous reviews is that in some of the benchmarks, I have broken the results into pure viewport performance – when panning, rotating or zooming the camera – and performance when manipulating an object. As anyone who regularly uses DCC applications will know, the latter is typically much slower, even when using a fast GPU. This is because while pure viewport manipulation is mostly a GPU task, object manipulation is done using both the CPU and the GPU: if you have good viewport performance, but poor object manipulation, your GPU is probably being bottlenecked by a slower CPU or insufficient system RAM.
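For readers who want to reproduce this kind of averaging on their own hardware, a minimal sketch follows. The frame rates are placeholder values, not measurements from this review, and the function name is illustrative.

```python
from statistics import mean, stdev

def summarize_sessions(fps_per_session: list[float]) -> tuple[float, float]:
    """Return the mean frame rate and the sample standard deviation across sessions."""
    return mean(fps_per_session), stdev(fps_per_session)

# Placeholder values for eight sessions of one viewport test (not real results).
sessions = [58.0, 61.0, 60.0, 59.0, 62.0, 60.0, 59.0, 61.0]

average_fps, spread = summarize_sessions(sessions)
print(f"{average_fps:.1f} fps average, {spread:.2f} fps standard deviation")
```

Reporting the spread alongside the average shows how much a single session can deviate, which is why several sessions are averaged in the first place.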

With the OpenCL rendering benchmarks – Blender, Cinema 4D, IndigoBench and LuxCoreRender – the CPU was deselected so that only the GPU was used for computing. The remaining render benchmarks use CUDA.

Benchmark results

Viewport and editing performance

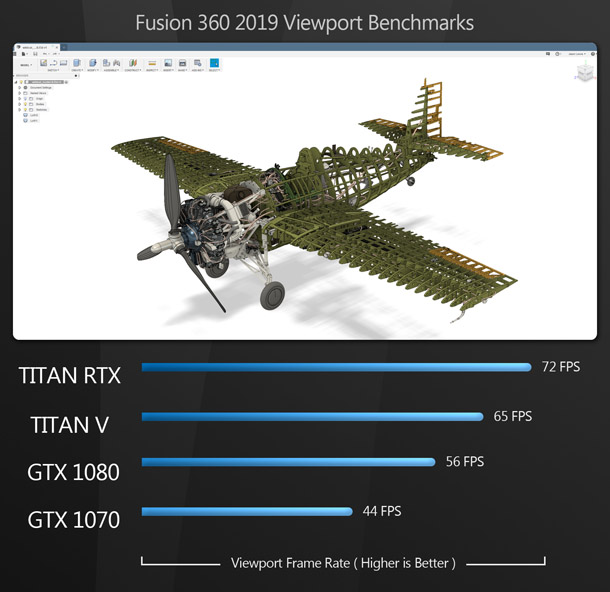

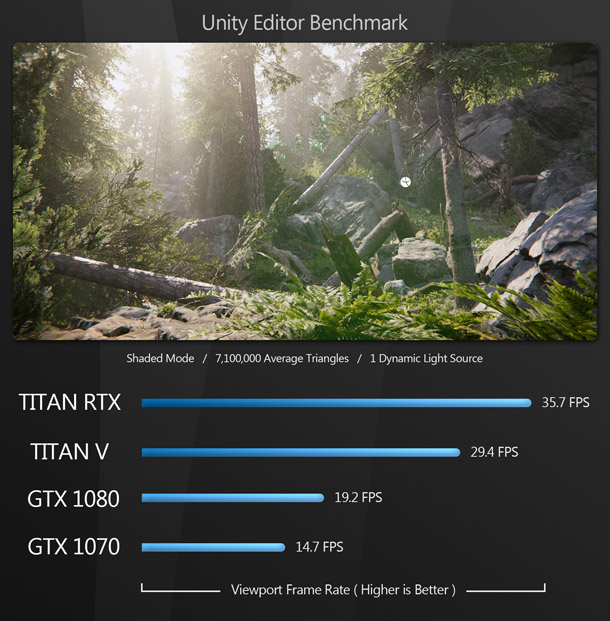

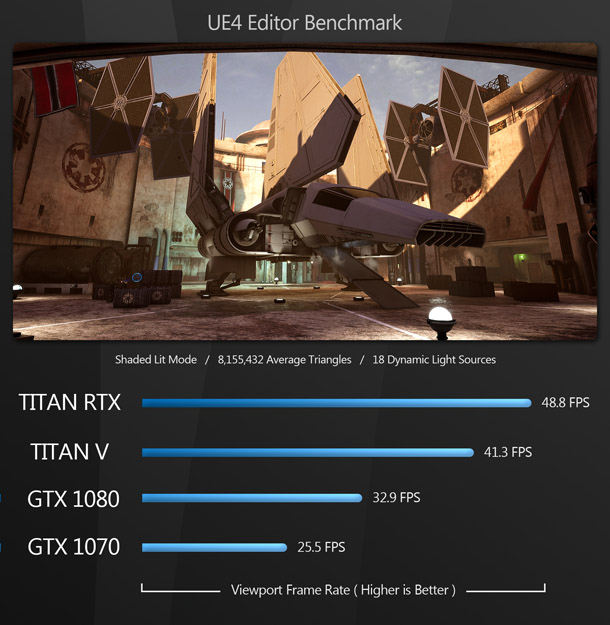

All of the display benchmarks follow the same pattern. While the disparity between the GPUs varies somewhat, the results are consistent, with the Titan RTX taking first place, the Titan V slightly behind, followed by the still-excellent GeForce GTX 1080, and the GeForce GTX 1070 in fourth place.

GPU rendering

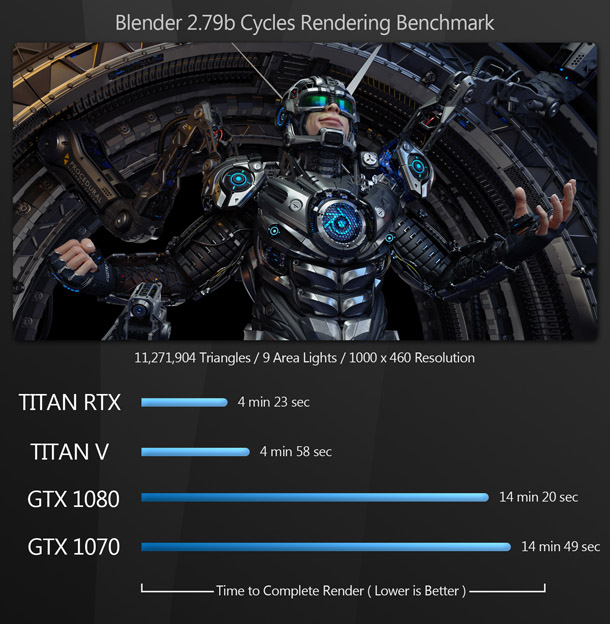

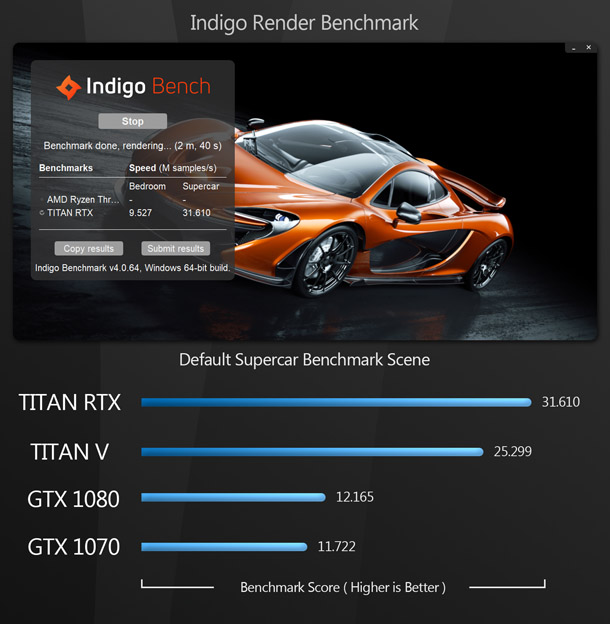

The GPU rendering benchmarks follow a pattern similar to the display benchmarks, with the Titan RTX outpacing the older Titan V. The GeForce GTX 1080 and GeForce GTX 1070 take third and fourth place.

Other benchmarks

The other benchmarks also follow the same pattern as the display benchmarks, with the Titan RTX taking first place, followed by the Titan V, the GeForce GTX 1080, and lastly the GeForce GTX 1070. The one interesting result is the video encoding benchmark with Premiere Pro. While the order remains consistent, all four GPUs are much closer in performance than in the 3D benchmarks.

Other considerations

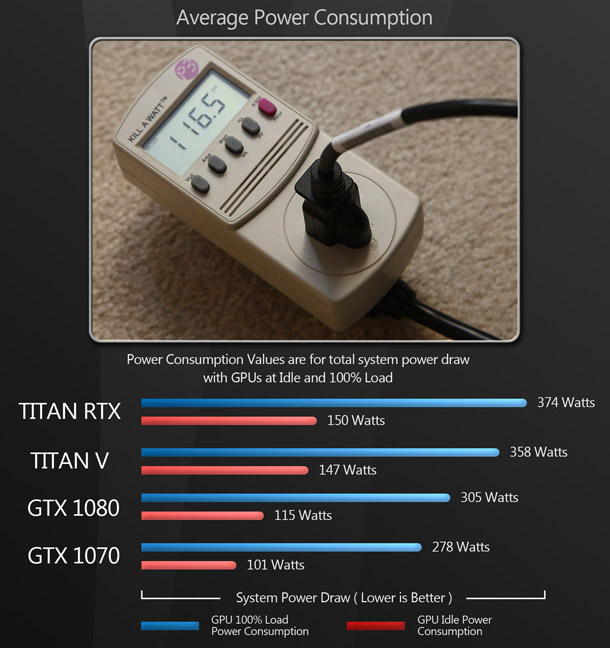

Power consumption

With the ever-increasing cost of electricity, power consumption has become an important metric for some users. If you run your system 24/7, do a lot of GPU computing, or have multiple GPUs in your machine, it can have a significant impact on your bottom line.

The Titan RTX’s massive performance across the board also comes with massive power requirements. It is rated at 280W, compared to 250W for the Titan V, 180W for the GeForce GTX 1080, and 150W for the GeForce GTX 1070. In my tests, this was clearly reflected in the total system power draw with each GPU.
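To get a feel for what those ratings could mean on an electricity bill, the sketch below converts rated board power into energy used per year. It is an upper-bound estimate only: it assumes each card runs at its full rated power 24 hours a day, and the price per kWh is a hypothetical figure, so substitute your own tariff.

```python
HOURS_PER_YEAR = 24 * 365

# Rated board power in watts, as quoted in this review.
RATED_WATTS = {
    "Titan RTX": 280,
    "Titan V": 250,
    "GeForce GTX 1080": 180,
    "GeForce GTX 1070": 150,
}

def annual_energy_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh consumed by a constant load of `watts` over `hours`."""
    return watts * hours / 1000

def annual_cost(watts: float, price_per_kwh: float) -> float:
    """Yearly running cost of a constant load at the given price per kWh."""
    return annual_energy_kwh(watts) * price_per_kwh

# Hypothetical tariff: 0.15 currency units per kWh.
PRICE_PER_KWH = 0.15

for card, watts in RATED_WATTS.items():
    kwh = annual_energy_kwh(watts)
    cost = annual_cost(watts, PRICE_PER_KWH)
    print(f"{card}: {kwh:.0f} kWh/year, about {cost:.2f} per year")
```

Real-world draw will usually be lower, since few cards sit at full load around the clock, but the relative differences between the cards give a sense of the running costs of each.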

Machine learning and AI

Another area in which Nvidia claims the Titan RTX excels is AI and machine learning, for which its Tensor processor cores are designed. While the word on the street is that machine learning will eventually make its way into content creation tools, it currently has no real benefit in CG work, aside from some render denoising algorithms that have been trained using AI techniques. As a result, I haven’t attempted to benchmark it here.

Conclusion

As benchmark test after benchmark test demonstrates, Nvidia’s Titan RTX is a monstrous powerhouse of a GPU. However, at just under $2,500, its price is pretty monstrous, too. So should you buy it for your own work? If you’re just looking for a GPU that gives you good performance in DCC applications, or in real-time editors like Unreal Engine or Unity, it’s probably overkill. It’s unlikely – although not impossible – that you will use all of its 24GB of graphics memory just to display geometry in the viewport. In this case, a less expensive gaming card like the GeForce RTX 2080 or 2080 Ti – or, if you don’t mind giving up the dedicated ray tracing cores, a GeForce GTX 1080 or 1080 Ti – will give you adequate performance.

But if you’re looking for a GPU for rendering or simulation work, this is where the Titan RTX shines. Its 24GB of graphics memory – doubled to 48GB if you connect two cards via NVLink – will enable you to cache massive datasets without going out of core and having performance suffer as a result. Currently, the only cards with more graphics memory are the 48GB Quadro RTX 8000 and 32GB Quadro GV100, both of which are much more expensive – or, if you don’t need CUDA, AMD’s cheaper 32GB Radeon Pro Duo.

It’s also worth considering the current state of DCC software. Whenever new hardware technologies come out, it takes time for the software developers to catch up, and this is true for Nvidia’s RTX architecture. While upcoming releases of Unreal Engine, OctaneRender and Redshift will be optimised for DXR-enabled hardware, at the time of writing, this was not the case. (I tried running OctaneRender benchmarks for this review, but the tests failed to render on any of the GPUs, and I was unable to debug them in the limited time that the cards were available for testing.) In my opinion, this is actually a plus for the Titan RTX. It already performs very well with GPU renderers, and the likelihood is that as software developers tune their applications for DXR-enabled hardware, it will do better still.

If you’re a content creator who lives and dies by deadlines, the Titan RTX is probably worth the cost, especially considering that the closest GPU in Nvidia’s Quadro range of workstation cards, the Quadro RTX 6000, has an MSRP of $4,000. The only real drawbacks are the Titan RTX’s more limited manufacturer support and range of ISV certifications, and the fact that it uses the same dual axial fan cooler as Nvidia’s GeForce RTX cards, meaning that it recirculates hot air inside the PC case. The Quadro cards vent hot air outside the casing, which is better for rack-mounted systems and workstations with limited case ventilation.

The Titan RTX is a massively powerful GPU that brings a whole new level of performance to content creators, and when software developers begin releasing versions of their applications that take advantage of DXR-capable hardware, we should see its performance increase still further. Speaking personally, I am very excited to see how this new technology develops over the next few years.

Read more about the Titan RTX on Nvidia’s website

Jason Lewis is Senior Environment Artist at Obsidian Entertainment and CG Channel’s regular reviewer. In his spare time, he produces amazing Star Wars fan projects. Contact him at jason [at] cgchannel [dot] com

Acknowledgements

I would like to give special thanks to all the individuals and companies who contributed to this article:

Gail Laguna and Sean Kilbride of Nvidia

Redshift Rendering Technologies

Otoy

Bruno Oliveira on Blend Swap

Stephen G Wells

Adam Hernandez

Thad Clevenger