Free add-on AI Render puts Stable Diffusion into Blender

Developer Ben Rugg has released AI Render, a free add-on that links Stable Diffusion into Blender.

The plugin makes it possible to use the open-source AI text-to-image generation model inside Blender, either by entering a conventional text prompt, or by using a 3D scene to guide the image generated.

With it, users can convert renders of simple blocking geometry into detailed illustrations and concept art.

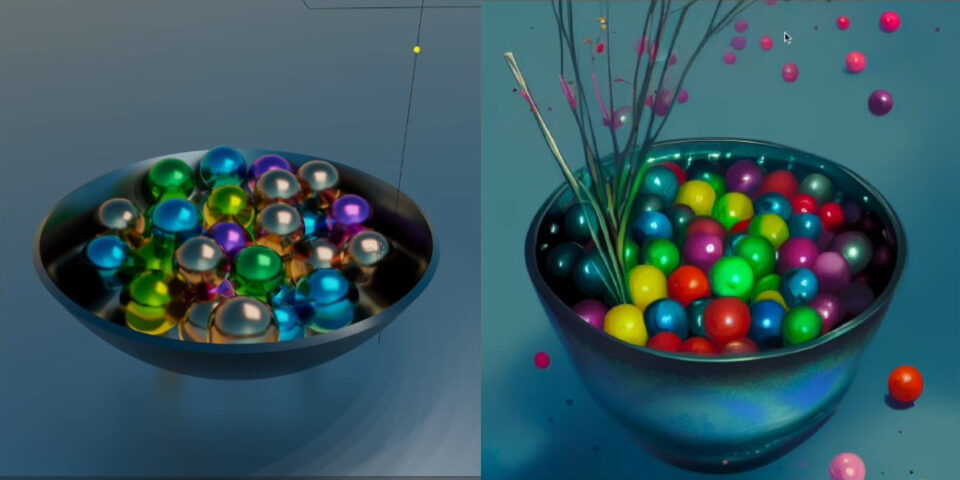

AI Render makes it possible to use a Blender viewport render (left) as the basis of a 2D image generated by Stable Diffusion (right), enabling users to generate non-photorealistic images from 3D scenes.

Convert simple 3D geometry into illustrations or concept art in a wide range of visual styles

Open-sourced earlier this year, and part of a new wave of AI art tools, Stability.ai’s Stable Diffusion model generates 2D images based on users’ text prompts.

AI Render links Stable Diffusion into Blender – at its simplest, making it possible to enter the text prompt inside Blender, rather than using a web interface.

However, a much more interesting way to use the plugin is to have a 3D scene in Blender guide the image that Stable Diffusion generates, in addition to the text prompt itself.

Blender renders the scene, then uses the render to guide the image that Stable Diffusion generates.

As the tutorial video for the add-on shows, this makes it possible to block out a scene using quick, simple geometry, then have Stable Diffusion generate a matching detailed illustration or concept image.

Users can choose from a range of preset visual styles – from basics like anime and line art to fine art styles like surreal and Bauhaus – or describe the desired style as part of the text prompt.

That opens up another potential use for AI Render: as a kind of non-photorealistic renderer for Blender, translating standard Cycles or Eevee renders into more stylised images.

Control the style of the image generated from within the Blender interface

AI Render also provides a simple set of controls to guide the image-generation process.

Image similarity determines how closely the AI-generated image resembles the original render, while prompt strength determines how closely it follows the text prompt.

Seed, Steps and Sampler settings adjust corresponding parameters within the Stable Diffusion model itself.

Current limitations of AI Render, and of Stable Diffusion itself

In its current form, AI Render only generates still images, not animations, and is limited to the same image resolutions as Stable Diffusion itself: currently between 512px and 1,024px on either axis.
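As a rough illustration of that resolution limit, the minimal Python sketch below checks a proposed output size against it. The helper `is_supported_resolution` is hypothetical and not part of AI Render; it assumes the 512px to 1,024px range is inclusive and ignores any other constraints the service may impose.

```python
MIN_SIDE_PX = 512    # lower bound on either axis, as stated above
MAX_SIDE_PX = 1024   # upper bound on either axis, as stated above


def is_supported_resolution(width: int, height: int) -> bool:
    """Return True if both axes fall within the 512-1,024px range.

    Hypothetical helper for illustration only; assumes the bounds are inclusive.
    """
    return all(MIN_SIDE_PX <= side <= MAX_SIDE_PX for side in (width, height))


if __name__ == "__main__":
    print(is_supported_resolution(512, 768))    # True: both axes in range
    print(is_supported_resolution(1920, 1080))  # False: both axes too large
```

A standard 1920 x 1080 Blender render would therefore need to be scaled down before it could be used as a guide image.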

It also requires you to have a DreamStudio account with Stability.ai, although Rugg says that he aims to support local installation in future releases.

Signing up for an account is free and gives you 200 credits with which to generate images. At standard settings, DreamStudio uses one credit per image generated, and extra credits cost $10 per thousand.
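To make the credit arithmetic concrete, here is a minimal Python sketch. It assumes one credit per image at standard settings, 200 free credits, and that extra credits are charged pro rata at $10 per 1,000 (one cent per credit); if credits are actually sold only in fixed blocks, the real cost would be rounded up. `extra_cost_cents` is a hypothetical helper, not part of AI Render or DreamStudio.

```python
FREE_CREDITS = 200     # credits included with a free DreamStudio account
CENTS_PER_CREDIT = 1   # assumption: $10 per 1,000 credits, charged pro rata


def extra_cost_cents(images: int, credits_per_image: int = 1) -> int:
    """Return the cost in US cents of generating `images` images,
    after the free credits have been used up.

    Hypothetical helper for illustration only.
    """
    if images < 0 or credits_per_image < 0:
        raise ValueError("counts must be non-negative")
    credits_needed = images * credits_per_image
    paid_credits = max(0, credits_needed - FREE_CREDITS)
    return paid_credits * CENTS_PER_CREDIT


if __name__ == "__main__":
    for n in (100, 200, 1200):
        cents = extra_cost_cents(n)
        print(f"{n} images: ${cents / 100:.2f}")
```

Under these assumptions, 1,200 images at standard settings would use the 200 free credits plus 1,000 paid ones, costing $10.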

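DreamStudio also releases the images it generates under a CC0 licence, which waives copyright in them. That could limit their value in commercial projects, since you may not be able to stop others from reusing them.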

In addition, before using Stable Diffusion, it’s worth reading up on the wider issues it raises: The Verge has a good summary of the copyright and ethical questions posed by AI art tools.

Licensing and system requirements

AI Render is compatible with Blender on Windows, Linux and macOS.

You can download compiled binaries for free via Gumroad (enter a figure of $0 at checkout, or make a voluntary donation). The source code is available under an open-source MIT licence.

To use it, you will need to register for a free DreamStudio account with Stability.ai.