The Lytro Cinema system: the next revolution in VFX?

By making it possible to edit camera properties after photography, the new Lytro Cinema system promises to collapse the boundaries between editorial and post-production, eliminating tedious manual work – and even removing the need for greenscreen entirely. So is this the next revolution in VFX? CG Channel investigates.

If you know Lytro, it’s probably as a manufacturer of consumer cameras. Described by Engadget as “the definition of mind-blowing” when it was unveiled in 2011, the company’s light field technology enabled users to refocus digital photographs after snapping them, prompting a wave of headlines in the tech press about ‘the future of photography’. Steve Jobs was even said to be interested in integrating it into the iPhone camera.

But in a market dominated by smartphones, Lytro’s cameras, with their higher price point and space-hungry capture format, struggled to make inroads. The second-generation Lytro Illum repositioned the technology for pro photographers, but still suffered for its awkward handling and poor low-light performance. The mass market simply wasn’t willing to put up with the drawbacks of the new technology for the fun of creating ‘living images’.

So in 2015, CEO Jason Rosenthal changed Lytro’s business model. Realising that developing new consumer cameras would quickly eat into the $50 million the company had just raised in new venture capital, he decided to ditch the consumer market entirely. “We didn’t have the resources to continue building consumer products and invest in [new market sectors],” he wrote in a frank analysis of Lytro’s fortunes on Backchannel. “A financial bet of this size was almost guaranteed to end the company if we got it wrong.”

The first sign of this change in direction was the upcoming Lytro Immerge, a high-end camera system aimed at the creation of “cinematic VR content”. And now Lytro has gone further still, announcing the Lytro Cinema: a new ultra-high-end camera system that it hopes will shake up movie production and visual effects.

Breaking the boundaries between editorial and effects

While light field photography was little more than a novelty for home users, Lytro claims that it has the potential to ease some of the key pain points in the post-production pipeline, removing the need for common low-level visual effects tasks, and increasing the number of delivery formats to which a single shot can be mastered.

According to Ariel Braunstein, the company’s chief product officer, the technology collapses the boundary between editorial and visual effects, opening up the possibility of entirely new ways of working. “Video until now has been a 2D medium and computer graphics are three-dimensional,” he said. “Cinematographers and visual effects artists … have to struggle through the fact that these are two different media. Light field integrates the two worlds. It turns every frame of a video into a three-dimensional model.”

Or as Jon Karafin, Lytro’s new head of light field video – and formerly director of production at Digital Domain’s old Florida studio – puts it: “This is one of the most exciting possible capture technologies. There are a lot of things you can do in visual effects that would be impossible with any other technology.”

A complete 3D reconstruction of the lighting in a scene

Lytro’s system works by placing an array of microlenses in front of the camera sensor, breaking the incoming light into its angular components. As well as having a colour and an intensity, every pixel in the captured image also has dimensional properties. These properties are determined by the paths that light rays have taken to reach the sensor: the way they have bounced from object to object during their journey from the light’s source.
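The idea of splitting incoming light into angular components can be illustrated with an idealised plenoptic sensor. This minimal sketch assumes that each microlens sits over an aligned square block of n by n pixels (real systems need careful calibration, and Lytro’s own processing is not public). Taking the same pixel position under every microlens then yields one “sub-aperture” view: the scene as seen through one small part of the main lens. The function name is hypothetical.

```python
def extract_subaperture_views(raw, n):
    """Split an idealised plenoptic sensor image into n*n sub-aperture views.

    raw: 2D list of pixel values whose height and width are multiples of n.
    Assumes (hypothetically) that each microlens covers one aligned n x n block.
    Returns a dict mapping the angular index (u, v) to a 2D list of size
    (height // n) x (width // n): the scene seen through one part of the lens.
    """
    height, width = len(raw), len(raw[0])
    if height % n or width % n:
        raise ValueError("sensor dimensions must be multiples of the microlens size")
    views = {}
    for u in range(n):          # angular row under each microlens
        for v in range(n):      # angular column under each microlens
            views[(u, v)] = [
                [raw[y * n + u][x * n + v] for x in range(width // n)]
                for y in range(height // n)
            ]
    return views


# Usage: a 4x4 sensor under 2x2 microlenses gives four 2x2 views.
sensor = [[row * 4 + col for col in range(4)] for row in range(4)]
views = extract_subaperture_views(sensor, 2)
print(views[(0, 0)])  # [[0, 2], [8, 10]]
print(views[(1, 1)])  # [[5, 7], [13, 15]]
```

Each view trades spatial resolution for angular information, which is why a light field sensor needs far more raw pixels than the images it ultimately delivers.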

By creating a three-dimensional reconstruction of the environment in front of the camera, light field technology enables the user to change camera properties dynamically after capture, rather than having them fixed by the settings chosen by the director of photography during a shoot. As Brendan Bevensee, Lytro’s chief engineer for light field video, puts it: “Essentially, we now have a virtual camera that can be controlled in post-production.”

So how could holographic light field capture help visual effects artists?

For VFX professionals, this ability to adjust camera properties after capture has a number of potential uses:

- To change the point of focus and depth of field

- To reposition the camera

- To track the movement of the camera in 3D space

- To extract depth data for keying and other compositing tasks

- To remaster the footage in a range of frame rates and delivery formats

The first benefit – and the one most familiar from Lytro’s consumer cameras – is the ability to refocus a shot or change its depth of field after capture. The resulting output is not limited by the physics of a real lens, or the capabilities of a real camera operator, meaning that users can create effects that would be physically impossible, or animate changes in DoF faster and more accurately than a live operator could pull focus.
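Refocusing after capture is commonly explained in the light field literature with the “shift-and-add” technique: each sub-aperture view is shifted in proportion to its position in the aperture and the views are averaged, so objects at the depth matching the chosen shift line up and appear sharp while everything else blurs. This sketch assumes views keyed by (u, v) on an idealised n by n grid, and uses whole-pixel shifts with clamped edges for simplicity (practical implementations interpolate sub-pixel shifts). It is not Lytro’s own algorithm, which the company has not published, and the function name is hypothetical.

```python
def refocus(views, n, shift):
    """Synthetic refocus by shift-and-add over an n x n grid of sub-aperture views.

    views: dict mapping (u, v) to a 2D list; all views share one size.
    shift: pixels of displacement per unit of aperture offset. Each value
    brings a different depth plane into focus; 0 keeps the captured focus.
    """
    centre = (n - 1) / 2
    height, width = len(views[(0, 0)]), len(views[(0, 0)][0])
    total = [[0.0] * width for _ in range(height)]
    for (u, v), image in views.items():
        dy = round(shift * (u - centre))  # whole-pixel shift (a simplification)
        dx = round(shift * (v - centre))
        for y in range(height):
            sy = min(max(y + dy, 0), height - 1)  # clamp at the frame edge
            for x in range(width):
                sx = min(max(x + dx, 0), width - 1)
                total[y][x] += image[sy][sx]
    count = len(views)
    return [[value / count for value in row] for row in total]


# Usage: a point light whose position shifts by one pixel between neighbouring
# views (parallax) is smeared at shift=0 but snaps into focus at shift=-1.
views = {}
for u in range(3):
    for v in range(3):
        image = [[0.0] * 5 for _ in range(5)]
        image[2 - (u - 1)][2 - (v - 1)] = 1.0
        views[(u, v)] = image
print(refocus(views, 3, -1)[2][2])  # 1.0
```

Because the shift is just a number, it can be keyframed, which is what allows focus pulls that no physical lens or focus puller could match.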

As well as changing the point of focus of a shot, users can change the position of the camera. According to Karafin, using “true optical properties” – that is, the information actually recorded by the sensor – you can move the camera by up to 100mm on any axis.

While that isn’t enough to create a complex virtual camera move – for that, you still need conventional VFX techniques like camera projection – it is enough for dimensionalisation: the interaxial separation on a typical stereoscopic camera rig is just 65mm, and even for extreme effects, varies by no more than 20-40mm. That means that users can generate any arbitrary stereo image pair from a single frame of the footage: a single Lytro camera functions in the same way as a beamsplitter-based two-camera rig.
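The dimensionalisation arithmetic amounts to a simple range check. This sketch assumes, as one reading of the quoted figure, that the 100mm limit applies in either direction from the physical lens position; under a stricter reading of 100mm total travel, pass max_offset_mm=50, and a typical 65mm pair still fits. The function name and parameters are hypothetical and not part of any Lytro software.

```python
def stereo_pair_offsets(interaxial_mm, centre_mm=0.0, max_offset_mm=100.0):
    """Horizontal positions (mm, relative to the physical camera) of two virtual
    cameras separated by interaxial_mm and centred on centre_mm.

    max_offset_mm is an assumption: the quoted 100mm repositioning range,
    read as 100mm in either direction from the real lens position.
    """
    left = centre_mm - interaxial_mm / 2
    right = centre_mm + interaxial_mm / 2
    if max(abs(left), abs(right)) > max_offset_mm:
        raise ValueError("stereo pair falls outside the recorded light field")
    return left, right


print(stereo_pair_offsets(65))       # (-32.5, 32.5): a typical rig
print(stereo_pair_offsets(65 + 40))  # (-52.5, 52.5): an extreme setting
```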

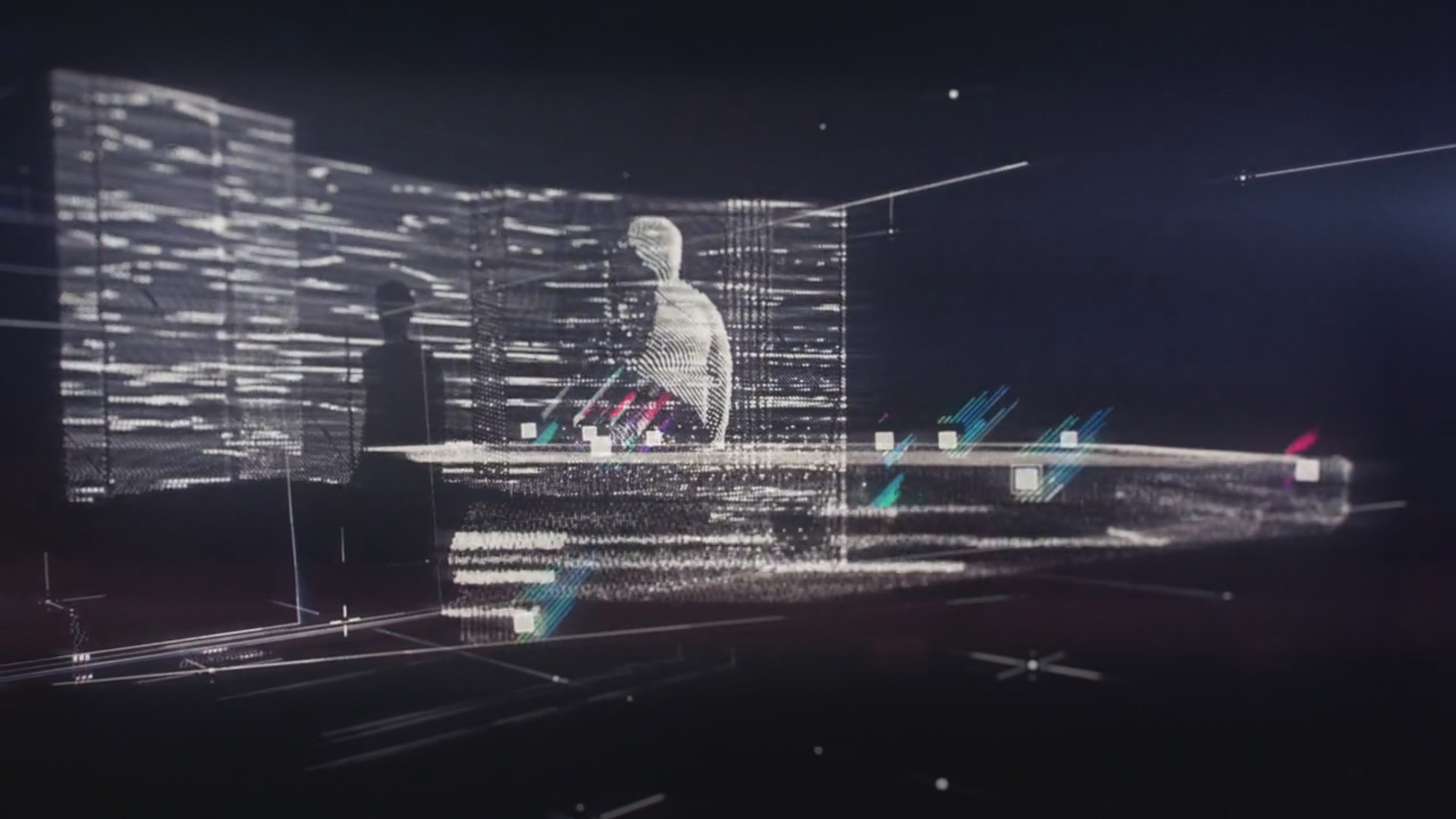

The data can also be used to calculate a complete 3D camera track, as shown in the image above. The mosaic of images at the top right of the screen shows the subangular light field samples used to help reconstruct the motion of the camera, even in a shot that would be difficult or impossible to track with conventional automated camera-tracking software – note the falling confetti surrounding the couple.

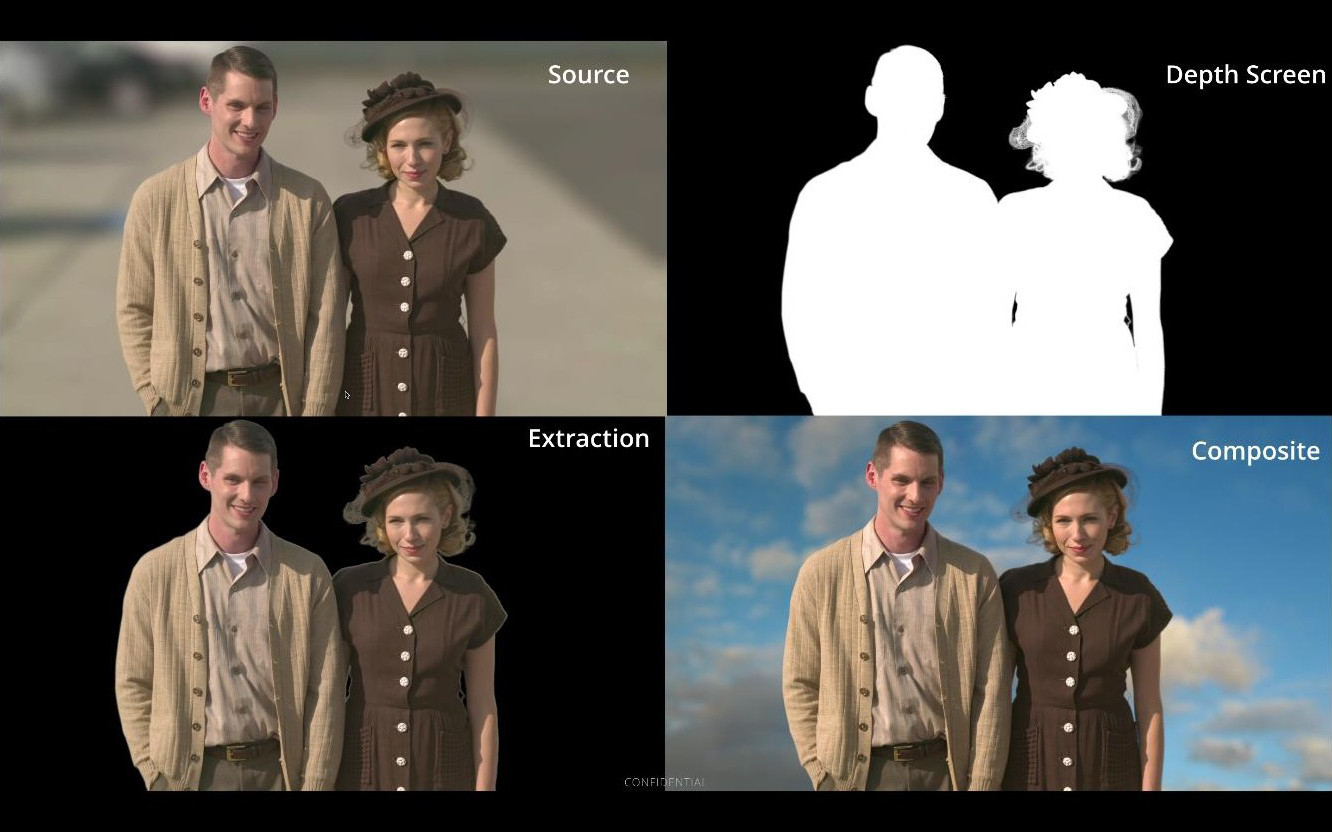

In addition, Lytro’s Depth Screen technology – as well as recording light field data, the cameras incorporate active sensor systems with a range of up to 100m – enables artists to extract depth information from the footage, making it possible to pull a key from any part of a shot without the need for greenscreen. As Jon Karafin puts it: “It’s as if you had a greenscreen for every object … not limited to any one object, but anywhere in space.”
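In its simplest form, pulling a key from depth rather than colour means thresholding a per-pixel depth map. This sketch assumes depth values in metres and a linear soft edge around the selected range; the function and parameter names are hypothetical, and it is not a description of how Lytro’s Depth Screen actually computes its mattes.

```python
def depth_matte(depth, near, far, softness=0.0):
    """Alpha matte selecting pixels whose depth lies between near and far.

    depth: 2D list of per-pixel distances (assumed to be metres).
    softness: width of a linear falloff outside [near, far]; 0 gives a hard key.
    """
    def alpha(z):
        if near <= z <= far:
            return 1.0
        if softness <= 0:
            return 0.0
        distance = near - z if z < near else z - far  # how far outside the range
        return max(0.0, 1.0 - distance / softness)

    return [[alpha(z) for z in row] for row in depth]


print(depth_matte([[1.0, 2.5, 4.5, 6.0]], near=2.0, far=4.0, softness=1.0))
# [[0.0, 1.0, 0.5, 0.0]]
```

Unlike a chroma key, nothing here depends on the colour of the background, which is why depth keying can in principle isolate any object at any distance.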

The image above gives some indication of the quality of results possible: note the detail in the depth matte for the actress’s hair and veil. And, as the top left image hints, the footage wasn’t captured in a carefully controlled studio environment, but outdoors: in the parking lot below, in fact.

Once captured, Lytro footage can be processed into any current delivery format. The camera sensor has a maximum resolution of 755 megapixels at 300fps: high enough for IMAX theatres, and capable of generating super-slo-mo effects when retimed. Pioneering high-frame-rate movies like The Hobbit trilogy were shot at 48fps, with every other frame simply discarded for standard 24fps playback; in contrast, Lytro says that its footage can be converted to any arbitrary frame rate without loss of quality. It’s also a high-dynamic-range format, capturing 16 stops of exposure, and can be streamed directly to holographic displays. Karafin describes it as “the ultimate master format”, future-proofing content for almost any conceivable upcoming delivery format.
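The frame-rate arithmetic shows why a 300fps master is convenient but not always evenly divisible: 60fps lands on every fifth source frame, but 24fps lands on every 12.5th. This sketch maps each output frame to the two nearest source frames plus a blend weight. Linear blending is a naive stand-in chosen only for illustration; Lytro has not described its own conversion method, which it says avoids any loss of quality. The function name is hypothetical.

```python
def retime(source_fps, target_fps, frame_count):
    """Map output frames to source frames for a frame-rate conversion.

    Returns one (index_a, index_b, weight_b) tuple per output frame: the
    output is (1 - weight_b) * frame[index_a] + weight_b * frame[index_b].
    A weight of 0.0 means an existing source frame is used unchanged.
    """
    mapping = []
    for i in range(frame_count):
        t = i * source_fps / target_fps  # output frame time, in source frames
        index_a = int(t)                 # floor, since t is never negative
        mapping.append((index_a, index_a + 1, t - index_a))
    return mapping


print(retime(48, 24, 3))   # [(0, 1, 0.0), (2, 3, 0.0), (4, 5, 0.0)]: drop every other frame
print(retime(300, 24, 3))  # [(0, 1, 0.0), (12, 13, 0.5), (25, 26, 0.0)]
```

The 48-to-24 case reduces to the frame-dropping approach described above, while the 300-to-24 case shows that every other output frame falls between two captured frames and has to be synthesised somehow.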

Available commercially this year – but with a hefty price tag

The Lytro Cinema system will receive its first public preview at NAB 2016 later this month. The technology will be available to test in Q2 or Q3 this year, and will launch in commercial production in Q3 or Q4.

If you’re a freelancer or small studio, don’t expect to get your hands on the technology just yet. While Karafin says that Lytro’s long-term goal is to create a more portable off-the-shelf product – “when you have something looking like an ARRI camera, you have a direct sales model” – the company is currently adopting a “Panavision-style” full-service model, in which clients pay per minute of content processed, including support and storage.

Packages will start at $125,000, which Karafin says will typically buy a “series of 10-100 tentpole shots”, representing between two and five days’ time on a sound stage.
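As a rough guide derived only from those quoted figures, the entry-level package works out at the following per-shot and per-day costs (the actual split depends on Lytro’s per-minute processing charges, which these figures do not break down):

```latex
\begin{aligned}
\text{per shot:}\quad & \frac{\$125{,}000}{100} = \$1{,}250 \;\text{ to }\; \frac{\$125{,}000}{10} = \$12{,}500 \\
\text{per stage day:}\quad & \frac{\$125{,}000}{5} = \$25{,}000 \;\text{ to }\; \frac{\$125{,}000}{2} = \$62{,}500
\end{aligned}
```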

As well as the camera and server themselves, the package will include access to editing plugins for visual effects software. The only third-party application to be supported at launch will be Nuke, but Lytro says that it intends to add support for other 2D and 3D applications in future.

The next revolution in visual effects?

For directors and DoPs, the potential benefits of the Lytro Cinema system are obvious. Rather than making all of the key creative decisions on set – with the need for a costly re-shoot if you get things wrong – the technology enables users to defer them to the editing suite. As Karafin puts it: “When you have the ability to never miss focus and change your relative [camera] position at the push of a button, you always get the shot you want.”

For VFX artists, it’s probably more of a mixed bag. On the one hand, the volume of extra data the Lytro Cinema system captures will place further demands on networking and storage, and the technology effectively renders a number of low-level jobs, particularly those in dimensionalisation, redundant. On the other, it has the potential to automate tedious manual tasks and open up entirely new creative workflows: asked which aspect of the technology most excites him, Karafin replied that it was the fact that every frame of a video effectively becomes a deep image.
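A deep image stores several samples per pixel, each with its own depth, colour and opacity, rather than a single flattened colour. This sketch shows the standard front-to-back “over” composite used to flatten one deep pixel, with an optional depth cutoff to show why retained depth is useful: for example, discarding everything behind the plane where a CG element will be inserted. It is a generic illustration, not Lytro’s or Nuke’s implementation, and the names are hypothetical.

```python
def flatten_deep_pixel(samples, max_depth=None):
    """Composite one deep pixel's samples front to back with the 'over' operator.

    samples: list of (depth, (r, g, b), alpha), colour premultiplied by alpha.
    max_depth: if given, samples farther away than this are ignored.
    Returns ((r, g, b), alpha) for the flattened pixel.
    """
    r = g = b = a = 0.0
    for depth, (sr, sg, sb), sa in sorted(samples, key=lambda s: s[0]):
        if max_depth is not None and depth > max_depth:
            break  # samples are sorted, so everything after this is farther away
        transmit = 1.0 - a  # fraction of light not yet blocked by nearer samples
        r += transmit * sr
        g += transmit * sg
        b += transmit * sb
        a += transmit * sa
    return (r, g, b), a


# A half-transparent red veil at 2m in front of an opaque blue wall at 5m.
pixel = [(5.0, (0.0, 0.0, 1.0), 1.0), (2.0, (0.5, 0.0, 0.0), 0.5)]
print(flatten_deep_pixel(pixel))                 # ((0.5, 0.0, 0.5), 1.0)
print(flatten_deep_pixel(pixel, max_depth=3.0))  # ((0.5, 0.0, 0.0), 0.5)
```

With a conventional flat frame, only the first result would exist, and separating the veil from the wall would mean manual rotoscoping or keying.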

Whether those benefits outweigh the costs of the Lytro Cinema system – both the upfront cost of the technology, and the downstream cost of processing the data it generates – remains to be seen. But if Lytro can persuade a high-profile director to champion light field photography, in the same way that James Cameron did with stereoscopic 3D, or Peter Jackson did with high frame rates, it seems likely that the rest of the market will follow.
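The scale of that downstream data can be estimated from the sensor figures quoted earlier. The bit depth used here is a hypothetical value chosen for illustration, since none is stated, and the estimate assumes uncompressed readout at full resolution and full frame rate simultaneously, so the result should be treated as an order of magnitude only. The function name is hypothetical.

```python
def raw_data_rate_gb_per_s(megapixels, fps, bits_per_pixel):
    """Uncompressed sensor data rate in decimal gigabytes per second."""
    bits_per_second = megapixels * 1e6 * fps * bits_per_pixel
    return bits_per_second / 8 / 1e9


# 755 megapixels at 300fps, with a hypothetical 10 bits per pixel
rate = raw_data_rate_gb_per_s(755, 300, 10)
print(rate)       # 283.125 GB per second
print(rate * 60)  # 16987.5 GB (roughly 17 TB) per minute of footage
```

Even if real-world compression or lower capture settings cut this by an order of magnitude or more, it illustrates why storage and networking feature so prominently in the cost question.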

To that end, Lytro has teamed up with Maleficent director Robert Stromberg – a double Oscar winner for his art direction on Avatar and Alice in Wonderland – on Life, a test short shot with the new camera. Life will premiere at NAB, and should be released publicly online in May.

The film, the trailer for which can be seen above, was shot by X-Men cinematographer David Stump, himself the recipient of a technical Academy Award. “What’s exciting to me about [the Lytro] camera is that it’s headed down a path in imaging that I’ve always wondered [about],” said Stump. “[Life] is proof that it’s possible.”