The Foundry reveals the five key problems of VR

Despite the flurry of product announcements at last week’s NAB show, one of the most interesting sessions from the event was not about a new software package at all – or at least, not a specific one.

In a tech preview on The Foundry’s booth, CTO Jon Wadelton unveiled some of the firm’s research in the fields of virtual and augmented reality, which may form part of future releases of Nuke, or of entirely new products.

As well as showing off a new raytracing renderer, the session showcased some cutting-edge work on what The Foundry describes as the five key “pain points” when creating content for virtual reality applications.

VR: the ‘Wild West’ of modern CG

Speaking to CG Channel from the show, The Foundry chief scientist Simon Robinson described virtual and augmented reality as “the Wild, Wild West” of modern computer graphics.

“I don’t think any of us have any idea where it’s going to end up,” he said. “[Everyone] is doing it in their own special way. It reminds me of when stereo[scopic 3D] kicked off a few years back.”

As well as the hype surrounding headsets such as the Oculus Rift, Robinson attributes the current explosion of interest in VR to the affordability of small, lightweight professional cameras.

“If you want to roll your own [VR capture rig], the technology is there,” he said.

Studios currently ‘rolling their own’ range from major players like Framestore and The Mill to smaller firms working in fields like dome displays for exhibitions.

Given this range of backgrounds, it isn’t surprising that the pioneers in the field are all approaching the task of creating content for virtual reality applications in very different ways.

“A lot of people have their own ‘secret sauce’,” said Robinson. “[As a tools vendor] we go to visit all of them and try to pull out common themes.”

The five key problems of VR #1: Camera alignment

Those common themes include what The Foundry describes as the five biggest problems in VR work: camera alignment and image stitching for environment capture, spherical compositing and rendering, and shot review.

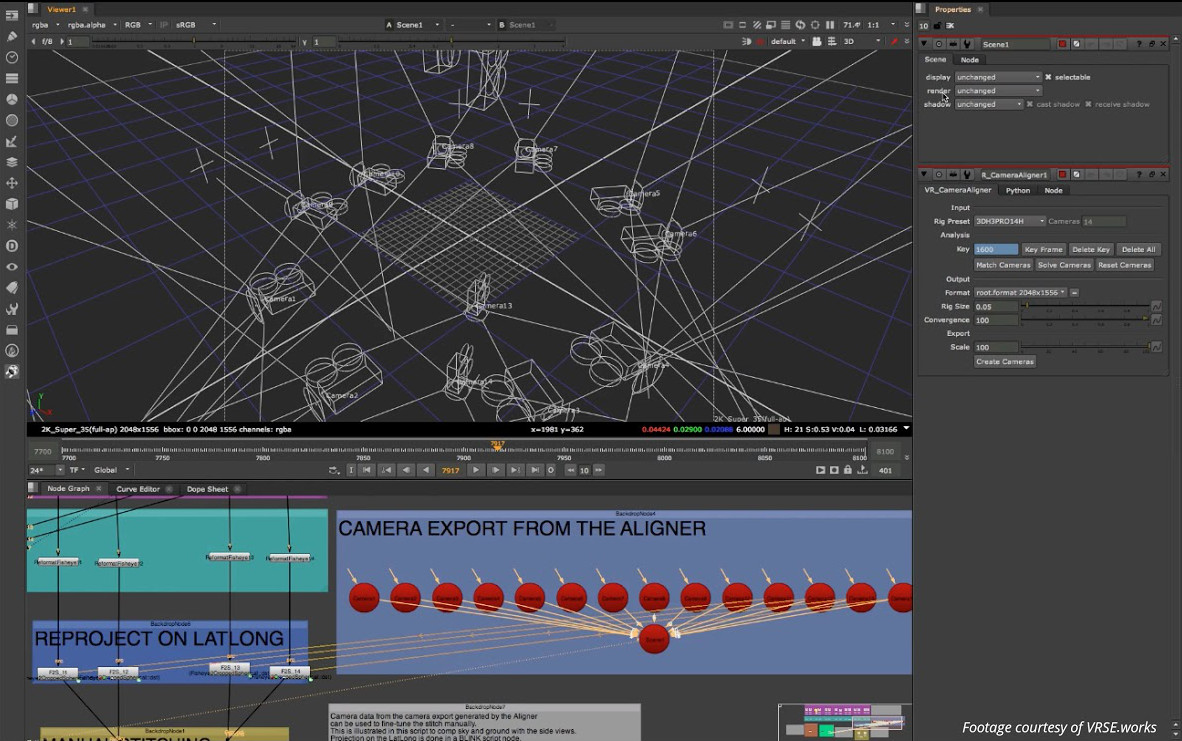

The Foundry’s new camera alignment tools.

The first of these, camera alignment, was immediately apparent in the test scene used during Wadelton’s demonstration: a live-action beach scene recorded by VRSE.works.

To capture 360-degree footage of the environment, the firm used a ring of 14 GoPro cameras arranged in seven pairs. One member of each pair captured the view seen by the viewer’s left eye; the other, the right.

Although not an especially complex set-up by VR standards, it posed the problem of locating each camera in 3D space: a task handled by The Foundry’s new VR_CameraAligner tool, shown above.

At the minute, the process is assisted by manually identifying key points such as the corners of objects, but Wadelton noted that he expected this to become fully automated in future, as in The Foundry’s Ocula toolset.
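As a rough illustration of what key-point alignment involves, the sketch below solves only the simplest piece of the problem: the yaw offset between two cameras on a ring, estimated from the bearings of the same hand-picked points as seen by each camera. It assumes both cameras sit at the same point (ignoring parallax) and differ only by a rotation about the vertical axis. The function name is hypothetical, and a real aligner such as VR_CameraAligner has to recover full 3D position and orientation.

```python
import math

def estimate_relative_yaw(bearings_a, bearings_b):
    """Estimate the yaw (degrees, 0..360) of camera B relative to camera A.

    bearings_a[i] and bearings_b[i] are the azimuths, in degrees, of the same
    manually picked key point as seen from camera A and camera B. Simplifying
    assumption: the cameras share one optical centre and differ only in yaw,
    so each match gives yaw = bearing_a - bearing_b.
    """
    if len(bearings_a) != len(bearings_b) or not bearings_a:
        raise ValueError("need the same, non-zero number of matches per camera")
    # Average the per-match offsets on the unit circle, so that offsets such
    # as 359 and 1 degrees average to 0 rather than 180.
    sum_sin = sum_cos = 0.0
    for a, b in zip(bearings_a, bearings_b):
        d = math.radians(a - b)
        sum_sin += math.sin(d)
        sum_cos += math.cos(d)
    return math.degrees(math.atan2(sum_sin, sum_cos)) % 360.0
```

For a seven-pair ring, neighbouring pairs would nominally sit about 360 / 7, or roughly 51.4, degrees apart, so `estimate_relative_yaw([10.0, 355.0], [318.6, 303.6])` returns approximately 51.4 even though one of the matches straddles the 0/360 seam.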

The five key problems of VR #2: Stitching

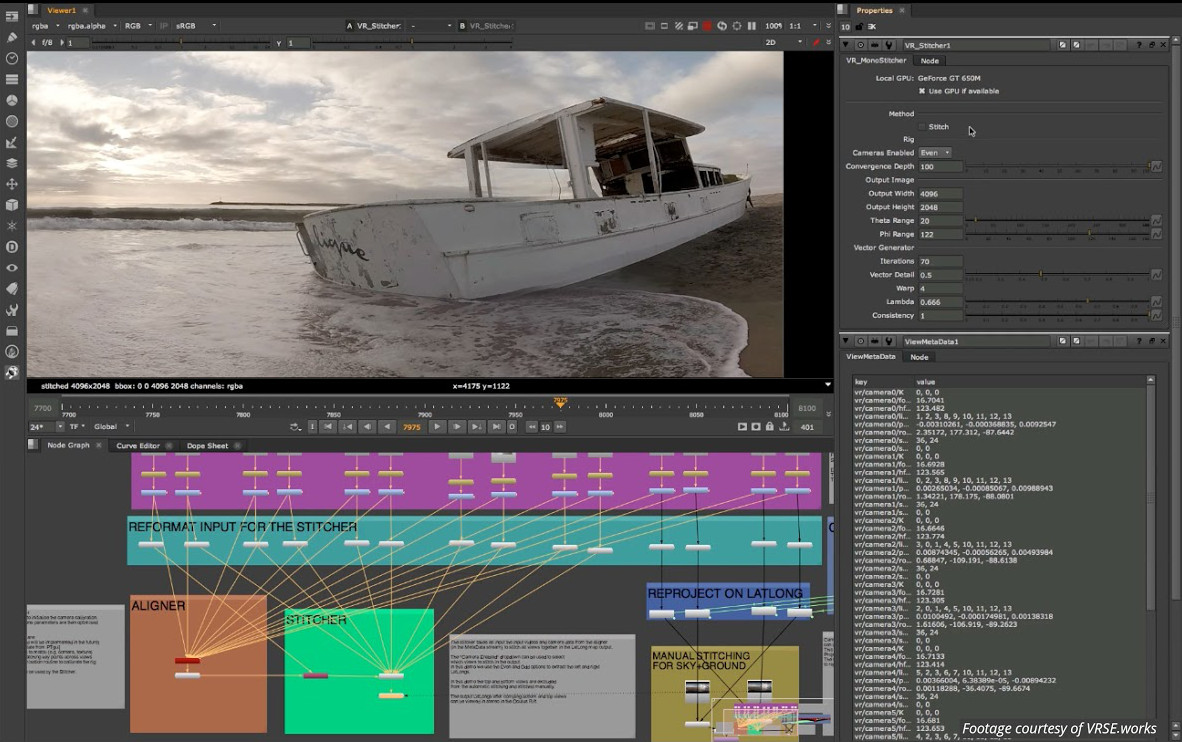

The output of the VR_CameraAligner feeds into another new node, the VR_Stitcher, which combines the source images into a stitched panoramic environment, warping them to remove ghosting caused by parallax.

Aligner and Stitcher working together. Demo footage courtesy of VRSE.works.
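Wherever neighbouring cameras overlap, a stitcher has to decide how to combine their pixels. The simplest approach, linear feathering, is sketched below for two already-aligned rows of overlapping pixels. It is not the parallax-correcting warp the VR_Stitcher performs: on its own, feathering produces exactly the kind of ghosting that warping is meant to remove. Both function names are hypothetical.

```python
def feather_weights(width):
    """Linear cross-fade weights for an overlap `width` pixels wide.

    Returns (w_left, w_right): the left image fades out across the overlap
    while the right image fades in, and each pair of weights sums to 1.
    """
    if width < 1:
        raise ValueError("overlap must be at least one pixel wide")
    if width == 1:
        return [0.5], [0.5]
    w_right = [i / (width - 1) for i in range(width)]
    w_left = [1.0 - w for w in w_right]
    return w_left, w_right


def blend_overlap(left_row, right_row):
    """Cross-fade two equal-length rows of pixel values from the overlap region."""
    if len(left_row) != len(right_row):
        raise ValueError("rows must cover the same overlap")
    w_left, w_right = feather_weights(len(left_row))
    return [a * wl + b * wr
            for a, b, wl, wr in zip(left_row, right_row, w_left, w_right)]
```

For example, `blend_overlap([1.0, 1.0, 1.0], [0.0, 0.0, 0.0])` ramps from the left image to the right one and returns `[1.0, 0.5, 0.0]`.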

Since VR environment capture inevitably involves manual clean-up – as Wadelton noted, “you can’t really hide the rig” – the 360-degree panorama is then unwrapped into a format more conducive to paint work.

At the minute, that means a latitude-longitude projection, although Robinson admits that this is as much by convention as design.

“The [industry] has stuck with a rectilinear format for so long, there isn’t a large body of alternatives,” he said. “We’re trying to understand whether we need to invent some new kind of spherical image format.”

Such a format could avoid one of the key issues with latlong maps: that a moving object like a bouncing ball becomes distorted as it approaches the apex of the captured environment – the top edge of the map.
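The distortion follows from how a latlong map is built: longitude maps linearly to x and latitude linearly to y, so every row has the same pixel width but represents a shorter circle on the sphere the nearer it lies to a pole. The sketch below assumes one common axis convention (others are in use) and shows both the direction-to-pixel mapping and the resulting horizontal stretch factor, 1/cos(latitude). The function names are illustrative only.

```python
import math

def direction_to_latlong(x, y, z, width, height):
    """Map a unit view direction to (u, v) pixel coordinates in a latlong image.

    Assumed convention: +y is up, -z points at the centre of the map,
    longitude increases left to right, latitude runs from +90 degrees at
    the top row to -90 degrees at the bottom row.
    """
    lon = math.atan2(x, -z)                    # -pi..pi, 0 at the map centre
    lat = math.asin(max(-1.0, min(1.0, y)))    # -pi/2..pi/2
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v


def horizontal_stretch(lat_degrees):
    """How many times wider an object is drawn at this latitude than at the equator."""
    return 1.0 / math.cos(math.radians(lat_degrees))
```

At 60 degrees latitude the stretch factor is 2, so the bouncing ball is drawn about twice as wide as it would be at the horizon, and the factor grows without limit towards the top edge of the map.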

“Do people expect tools that can accommodate that distortion, or do they want to work in other kinds of space where they can continually re-orient the ball to face them as they work?” said Robinson.

The five key problems of VR #3: Compositing

Regardless of whether it ends up being the preferred solution, The Foundry has already done quite a bit of work on translating standard rectilinear image-processing operations into latlong space.

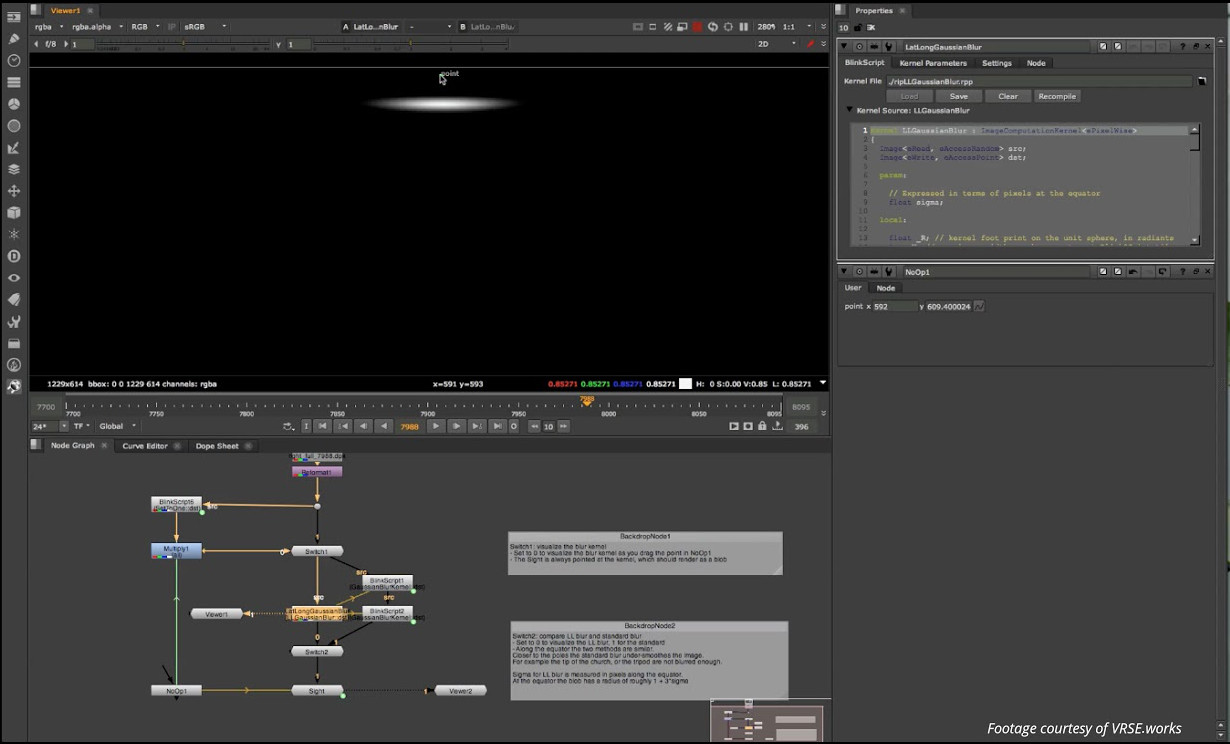

The NAB demo showed a Gaussian blur being applied to a latlong environment, with the shape of the blurred area changing according to its position on the map, and automatically wrapping between left and right edges.

A Gaussian blur in latlong image space: the blurred area distorts horizontally towards the top of the map.
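A minimal sketch of the idea behind the demo, assuming a single-channel latlong image stored as a list of rows of floats: a horizontal Gaussian pass whose width in pixels is scaled by 1/cos(latitude) in each row, with column indices taken modulo the image width so the blur wraps between the left and right edges. This is an illustration only. It does not blur vertically or model the full change in kernel shape on the sphere, and The Foundry has not said how its own filter is implemented; the function names and the `max_sigma` cap are assumptions.

```python
import math

def gaussian_kernel(sigma):
    """Normalised 1D Gaussian weights covering roughly +/- 3 sigma."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return radius, [w / total for w in weights]


def blur_latlong_rows(image, base_sigma, max_sigma=None):
    """Horizontal, latitude-aware blur pass on a latlong image (list of rows).

    The kernel widens by 1/cos(latitude) so it covers roughly the same angle
    on the sphere in every row, and indices wrap around the map width.
    base_sigma is the blur radius in pixels at the equator and must be > 0.
    """
    height = len(image)
    width = len(image[0])
    if max_sigma is None:
        # Cap the kernel near the poles, where 1/cos(latitude) explodes,
        # so that it stays well under the map width.
        max_sigma = width / 8.0
    out = []
    for row_index, row in enumerate(image):
        # Latitude at the centre of this row: near +90 at the top, -90 at the bottom.
        lat = math.pi * (0.5 - (row_index + 0.5) / height)
        sigma = min(base_sigma / math.cos(lat), max_sigma)
        radius, weights = gaussian_kernel(sigma)
        out.append([
            sum(w * row[(x + k) % width]
                for k, w in zip(range(-radius, radius + 1), weights))
            for x in range(width)
        ])
    return out
```

An impulse in column 0 leaks symmetrically into column 1 and into the last column, and the same impulse spreads further in a row near the top of the map than in a row near the equator.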

Robinson believes it should be fairly straightforward to extend the same approach to tasks like roto and tracking.

“The framework for the Gaussian filtering example is quite extensible to a lot of our stuff,” he says. “The tougher bit is the user interaction: do people really want to work that way?”

The five key problems of VR #4: Rendering

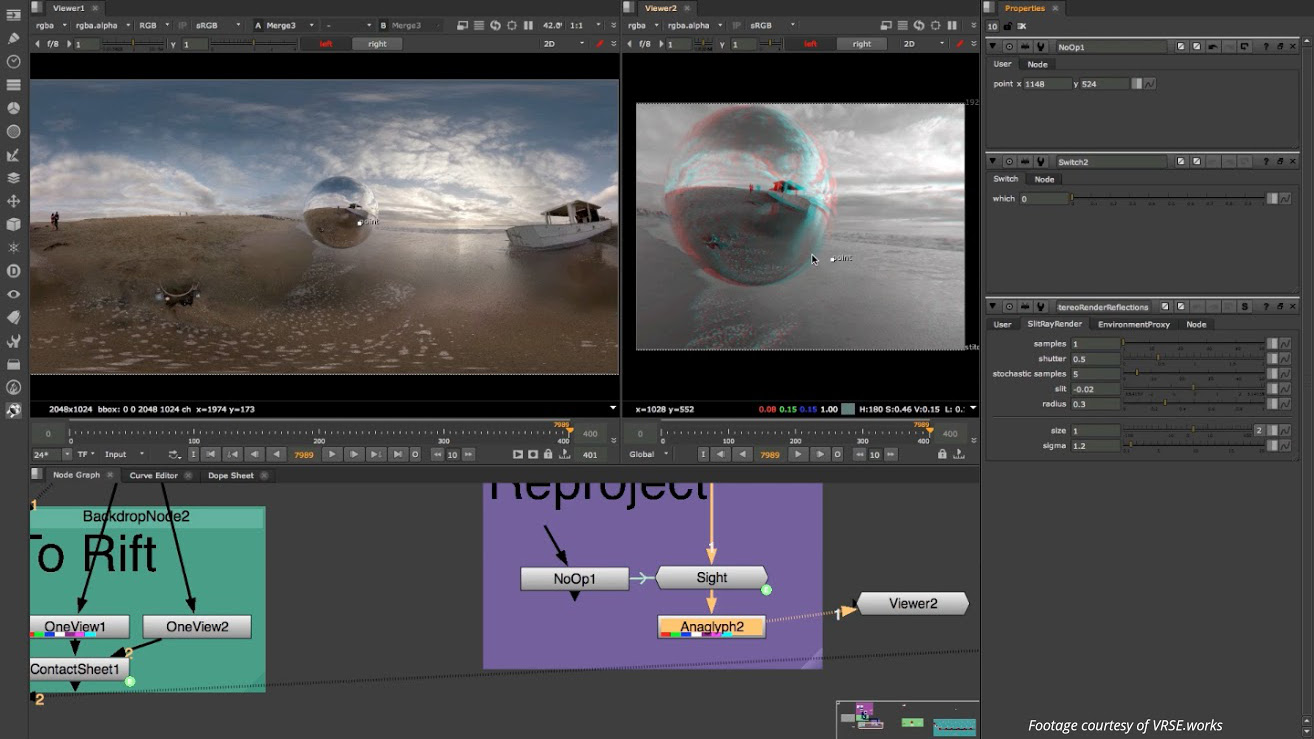

One new feature that may either augment the latlong view, or provide part of an alternative to it, is the Sight: an undistorted view of a small part of the 360-degree environment corresponding to the viewer’s point of focus.

The view through the Sight (in the Viewer2 sub-window).
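To show what an undistorted view like the Sight involves, the sketch below traces a ray through one pixel of a pinhole view aimed at a given yaw and pitch, and returns the longitude and latitude it hits on the panorama. Repeating the lookup for every pixel of the view produces a rectilinear image from the latlong map. The axis conventions and the function name are assumptions for illustration, not The Foundry’s API.

```python
import math

def sight_pixel_to_lonlat(px, py, view_w, view_h, fov_deg, yaw_deg, pitch_deg):
    """Return (longitude, latitude) in degrees seen through pixel (px, py) of a
    rectilinear view with horizontal field of view fov_deg, aimed at
    (yaw_deg, pitch_deg) inside a latlong panorama.

    Assumed conventions: +y up, -z forward at yaw 0, yaw positive to the
    right, pitch positive upward, pixel (0, 0) at the top-left of the view.
    """
    focal = (view_w / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    # Ray through the pixel centre in the view's local frame, normalised.
    x = px + 0.5 - view_w / 2.0
    y = view_h / 2.0 - (py + 0.5)
    z = -focal
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm

    # Tilt up by the pitch angle (rotation about the x axis).
    p = math.radians(pitch_deg)
    y, z = y * math.cos(p) - z * math.sin(p), y * math.sin(p) + z * math.cos(p)
    # Turn right by the yaw angle (rotation about the y axis).
    t = math.radians(yaw_deg)
    x, z = x * math.cos(t) - z * math.sin(t), x * math.sin(t) + z * math.cos(t)

    lon = math.degrees(math.atan2(x, -z))
    lat = math.degrees(math.asin(max(-1.0, min(1.0, y))))
    return lon, lat
```

With the same conventions, sampling a W by H latlong map means reading the pixel at ((lon / 360 + 0.5) * W, (0.5 - lat / 180) * H), ideally with bilinear filtering; the centre pixel of a view aimed at yaw 30, pitch 10 lands at longitude 30, latitude 10.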

In the image above, the view through the Sight is shown in the sub-window on the right. The screenshot also demonstrates something else The Foundry is working on: a new raytracing renderer.

The new engine was written directly inside Nuke to avoid the computational overhead and artefacting created when using the existing scanline renderer for virtual reality work.

However, the renderer would also have clear benefits for ordinary compositing, for example when generating reflection or AO passes.

Robinson says that The Foundry hasn’t decided how to roll out the new tools within Nuke – or indeed, whether they should form a new separate product – but notes that the raytracer could be implemented independently.

“It’s possible that [it forms] a nice generic item, in which case we could roll it out sooner,” he said.

The five key problems of VR #5: Shot review

The last of The Foundry’s five problems of VR work is shot review – more specifically, the hardware on which shots should be displayed during the review process.

The demo showed a new monitor-out plugin for the Oculus Rift, but Robinson noted that more will follow.

“As a tools vendor, we have to support all kinds of output devices,” he said. “The Rift is an interesting one, but it’s one on a long list.”

A VR environment ready for review, including output from the new raytrace renderer.

Instead, Robinson sees the more interesting question as whether artists authoring VR content will want to use specialist hardware at all: an issue raised by The Foundry’s experiences with stereoscopic displays.

“Once we’d done all our 3D support, we found vast bodies of people who never used stereo monitors again,” he said. “They just got used to understanding what they were looking at [on an ordinary screen].”

So when can you try these new tools?

The Foundry hasn’t announced a release date for any of the new technologies, and says that it is still actively looking for production houses to collaborate with on their development.

“If you’ve got footage, there’s a high chance you can get your hands on some of this stuff,” said Wadelton.

However, previous Foundry tech previews have found their way into commercial tools relatively quickly – a little over a year, in the case of Project Breakaway, which became Nuke Studio.

Robinson said he expected that “our ideas about what we can put in a commercial product will evolve over the next [few] months”, but stressed that the firm isn’t working to a fixed schedule.

“The first thing to do is to make the tools work,” he said. “We’ll worry about commercialisation later.”

Read more about The Foundry’s other presentations at NAB 2015

(Requires you to be signed in to The Foundry’s website)

Updated 23 April: The original version of this story referred to the beach scene used in The Foundry’s demo as having been developed by Framestore LA, not VRSE.works. We’ve updated the text, although you will still see references to Framestore in the recording of the livestream itself. Apologies for any confusion.