CG Science for Artists – Part 1: Real-Time and Offline Rendering

Welcome to my series on the Science of Computer Graphics for artists. My goal with this series is to go through the underlying science behind computer graphics so that you have a better understanding of what’s happening behind the scenes. I’d like to take potentially complex concepts and present them in a way that is approachable for artists.

As a CG artist, you’ve already been exposed to a lot of technical jargon and applied many complex-sounding things in production. For example, you’ve probably used ‘Blinn’ or ‘Lambert’ shaders, but do you know what they actually are? And what is a ‘shader’ anyway?

Today’s applications and game engines have been reasonably successful at making the task of creating artwork simple enough that you don’t need to worry about the math or science; you can just be creative. But this stuff is still good to know. My hope is that with this information you’ll find yourself in a better position to solve problems in production.

Outline of topics

I’ll be covering a lot of different topics, and they will range from very basic (like…stuff every CG artist should know) to potentially mind-bending concepts (like quaternions). Here’s a brief outline:

- Real-Time and Offline Rendering

- The Rendering Pipeline

- Polygon models

- What is The Matrix? – Geometry transformations

- Cameras and Projections

- Lighting and Shading

- Texture mapping

- Hierarchical modeling and scene graphs

- Animation

- Raytracing

So…let’s get started.

Part 1: Real-Time and Offline Rendering

It’s worth making a distinction between real-time and offline rendering, since I’ll end up talking about both in this series. In real-time rendering, the computer produces every image from 3D geometry, textures, etc. on the fly and displays it to the user as fast as possible (ideally above 30 frames per second). The user can interact with the 3D scene using a variety of input devices such as a mouse and keyboard, gamepad or tablet. You’ll find real-time graphics in everything from the iPhone to your computer and video game consoles. The viewport in your CG application uses real-time rendering.

Offline rendering refers to anything where the frames are rendered to an image format and displayed later, either as a still or as a sequence of images (e.g. 24 frames make up 1 second of pre-rendered video). Good examples of offline renderers are Mental Ray, V-Ray and RenderMan. Many of these software renderers use what’s known as a ‘raytracing’ algorithm. I’ll go into more detail about raytracing in due course.
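To put some numbers on the difference, here’s a small Python sketch of the arithmetic. The function names are my own, and the 10-minutes-per-frame figure in the example is a made-up assumption, not a measurement from any real production.

```python
def frame_budget_ms(fps):
    """Milliseconds available to draw each frame at a given frame rate."""
    return 1000.0 / fps


def frames_needed(seconds, fps=24):
    """How many still images an offline renderer must produce for a clip."""
    return round(seconds * fps)


def total_render_hours(seconds, minutes_per_frame, fps=24):
    """Total render time for a clip, assuming every frame takes the same time."""
    return frames_needed(seconds, fps) * minutes_per_frame / 60.0


if __name__ == "__main__":
    # Real-time: every frame must be finished in roughly 33 ms at 30 fps.
    print(f"30 fps budget: {frame_budget_ms(30):.1f} ms per frame")
    # Offline: a one-minute clip at 24 fps, assuming (hypothetically) 10 minutes per frame.
    print(f"Frames: {frames_needed(60)}, render time: {total_render_hours(60, 10):.0f} hours")
```

A real-time renderer has to squeeze all of its work into that roughly 33 ms window, while an offline renderer can take as long as it needs on each image.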

Cinematic still from Final Fantasy XIII: rendered offline, probably with renderers such as RenderMan or Mental Ray, and composited to perfection.

Here’s a trailer for Final Fantasy XIII that switches between real-time and pre-rendered graphics quite a bit. See if you can tell the difference between the two (it should be obvious).

OpenGL and Direct3D

You’ve probably already heard of OpenGL and Direct3D. These are known as ‘Application Programming Interfaces’ (APIs), or simply ‘software libraries’. They handle a lot of the complex math and communication with the hardware that most programmers don’t want to deal with. For example, say I have a cube with 8 vertices and I want to draw that cube on the screen. The code for working out all the transformations, projections, line drawing and shading is actually quite difficult, but (mostly and thankfully) OpenGL and Direct3D do it for you.
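To give you a feel for what’s being done on your behalf, here’s a minimal Python sketch of the first part of that work: rotating the cube’s 8 corners, moving them in front of a camera and projecting them to 2D pixel positions. This is not how OpenGL or Direct3D are implemented internally. It assumes a simple pinhole camera at the origin looking down the -Z axis, and the function names and numbers (focal length, screen size) are my own choices for illustration.

```python
import math

# The 8 corners of a unit cube centred on the origin.
CUBE = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]


def rotate_y(v, angle):
    """Rotate a point around the Y (up) axis by `angle` radians."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def translate(v, offset):
    """Move a point by adding an offset vector."""
    return tuple(a + b for a, b in zip(v, offset))


def project(v, focal_length, width, height):
    """Pinhole perspective projection to pixel coordinates.

    Assumes the camera sits at the origin looking down -Z,
    so points in front of it have negative z.
    """
    x, y, z = v
    depth = -z
    # Perspective divide: farther points land closer to the centre of the screen.
    sx = focal_length * x / depth
    sy = focal_length * y / depth
    # Camera space has +Y up; pixel space has its origin top-left with +Y down.
    return (width / 2 + sx, height / 2 - sy)


def cube_to_screen(angle, distance=3.0, focal_length=400.0, width=640, height=480):
    """Return the 2D pixel position of each cube corner."""
    screen = []
    for corner in CUBE:
        v = rotate_y(corner, angle)                # model transformation
        v = translate(v, (0.0, 0.0, -distance))    # push the cube in front of the camera
        screen.append(project(v, focal_length, width, height))
    return screen


if __name__ == "__main__":
    for px, py in cube_to_screen(math.radians(30)):
        print(f"({px:7.1f}, {py:7.1f})")
```

Once you have those 8 screen positions, you still need to draw the edges or fill the faces and shade them, which is exactly the kind of work the APIs take off your hands.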

OpenGL stands for Open Graphics Library. It was created in 1992 by Silicon Graphics and is now managed by the non-profit Khronos Group. The nice thing about OpenGL is that it is cross-platform: programs that you create with OpenGL can run on Windows, Linux and Mac. There’s even a slimmed-down version called OpenGL ES (for Embedded Systems), made for devices such as the iPad/iPhone, Android phones and the PS3.

Direct3D is Microsoft’s API, so it only runs on Microsoft platforms such as Windows, Xbox 360 and Windows Phone 7. OpenGL and Direct3D do practically the same job in terms of handling complex drawing operations and talking to the hardware. Where there is a lot of tension and argument is in video games, where Direct3D and OpenGL go head to head. Because it is a for-profit corporation, Microsoft has been able to integrate new advancements into Direct3D and bring them to market quickly. In this regard, OpenGL can be ‘seen’ to lag behind. But in real terms, the games you play on PS3 (using an extended version of OpenGL ES) look just as good as those on Xbox 360. Many game engines have a ‘graphics middleware’ layer that calls OpenGL or Direct3D functions depending on which platform you’re on. Cross-platform development is extremely important in the industry today.
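Here’s a rough Python sketch of how such a middleware layer can be structured. The class names and the platform-to-API mapping are hypothetical, and the backends just return strings where a real engine would call into OpenGL or Direct3D. The point is that the game code only ever talks to one interface.

```python
from abc import ABC, abstractmethod


class RenderBackend(ABC):
    """The single interface the rest of this (hypothetical) engine talks to."""

    @abstractmethod
    def draw_mesh(self, mesh_name: str) -> str:
        ...


class OpenGLBackend(RenderBackend):
    def draw_mesh(self, mesh_name: str) -> str:
        # A real backend would issue OpenGL calls here.
        return f"OpenGL: drawing {mesh_name}"


class Direct3DBackend(RenderBackend):
    def draw_mesh(self, mesh_name: str) -> str:
        # A real backend would issue Direct3D calls here.
        return f"Direct3D: drawing {mesh_name}"


def create_backend(platform: str) -> RenderBackend:
    """Pick a backend for the target platform (illustrative mapping only)."""
    if platform in ("windows", "xbox360"):
        return Direct3DBackend()
    # In this sketch, every other platform (Mac, Linux, PS3, ...) gets OpenGL.
    return OpenGLBackend()


def render_frame(backend: RenderBackend, meshes):
    """Game code: never mentions OpenGL or Direct3D directly."""
    return [backend.draw_mesh(mesh) for mesh in meshes]


if __name__ == "__main__":
    for platform in ("xbox360", "linux"):
        print(platform, render_frame(create_backend(platform), ["cube", "sphere"]))
```

With this design, supporting a new platform mostly means writing a new backend class rather than touching every bit of rendering code in the game.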

DirectX 11 tech demo:

If you’re interested in the differences between OpenGL and Direct3D, here’s a good place to start: http://en.wikipedia.org/wiki/Comparison_of_OpenGL_and_Direct3D

Hardware Acceleration

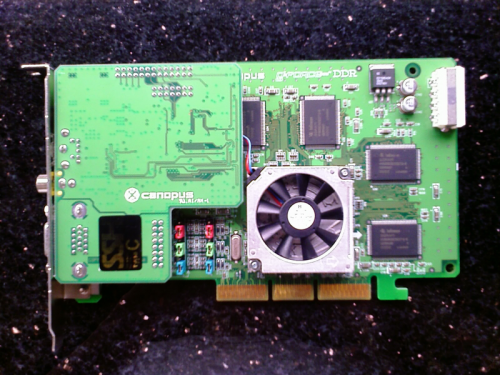

The idea behind ‘hardware acceleration’ is that instead of using a software program to do a particular task, you encode the algorithm into physical silicon (hardware) so that it runs much faster. This idea has been around for a long time and is behind many dedicated electronic devices such as pocket calculators (if you think about it, a computer is basically a gigantic, programmable calculator). The first graphics hardware accelerators performed fundamental operations like rasterizing (the actual drawing of pixels), and much of this acceleration was ‘fixed’ in the sense that you couldn’t change how the algorithm worked; you just used it. This is what’s known as a ‘fixed-function’ graphics pipeline.
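To make ‘rasterizing’ a bit more concrete, here’s a simplified Python sketch of one common approach: testing each pixel centre against the triangle’s three edges (the ‘edge function’ method). Hardware does this far more cleverly and in parallel, and this version skips details such as tie-breaking rules for pixels on shared edges, so treat it as an illustration of the idea only. The function names are my own.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: >0 if P is to the left of edge A->B, <0 if to the right."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centres lie inside the triangle."""
    covered = set()
    area = edge(*v0, *v1, *v2)  # twice the triangle's signed area
    if area == 0:
        return covered  # degenerate (flat) triangle covers nothing
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel centre
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # Inside if all three edge tests share the sign of the area,
            # so both clockwise and counter-clockwise triangles work.
            if area > 0 and w0 >= 0 and w1 >= 0 and w2 >= 0:
                covered.add((x, y))
            elif area < 0 and w0 <= 0 and w1 <= 0 and w2 <= 0:
                covered.add((x, y))
    return covered


if __name__ == "__main__":
    W, H = 16, 8
    pixels = rasterize_triangle((1, 1), (14, 2), (4, 7), W, H)
    for y in range(H):
        print("".join("#" if (x, y) in pixels else "." for x in range(W)))
```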

When computer graphics was in its heyday in the early ’90s, companies like 3Dlabs and Silicon Graphics were well known for making hardware specifically designed to run graphics operations really fast. While the ‘lesser folk’ had to use graphics that ran purely in software, SGI machines and workstations equipped with the necessary hardware could render thousands of polygons much faster because the OpenGL functions were built into the hardware. At the time, people were paying obscene amounts of money for a graphics workstation and software.

Nostalgia: a video from the Softimage 2006 user group where Mark Schoennagal shows Softimage 3D on an SGI Onyx. Oh, how times have changed.

http://video.google.com/videoplay?docid=-163150319185531526

NVIDIA is particularly well known for its work on graphics hardware acceleration. In 1999 it released the GeForce 256, which moved transformation, lighting, triangle setup/clipping and rendering (practically all of the complex 3D operations) off the CPU and onto the graphics processor. NVIDIA coined the term ‘Graphics Processing Unit’ (GPU) to differentiate it from other video cards that only accelerated simpler operations like rasterizing.
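The ‘lighting’ part of transform and lighting is a good example of the repetitive math that was moved into hardware. Below is a minimal Python sketch of per-vertex diffuse lighting using Lambert’s cosine law (the same idea behind the ‘Lambert’ shader mentioned at the start). It assumes a single directional light and RGB colours in the 0 to 1 range; the function names are my own.

```python
import math


def normalize(v):
    """Scale a vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert_vertex_colour(normal, light_dir, light_colour, surface_colour):
    """Diffuse (Lambert) lighting for one vertex.

    light_dir points from the surface towards the light.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    # Brightness follows the cosine of the angle between normal and light;
    # surfaces facing away (negative dot product) receive no light.
    intensity = max(0.0, dot(n, l))
    return tuple(intensity * lc * sc for lc, sc in zip(light_colour, surface_colour))


def light_vertices(normals, light_dir, light_colour=(1.0, 1.0, 1.0), surface_colour=(0.8, 0.2, 0.2)):
    # The same small calculation, repeated for every vertex in the scene.
    return [lambert_vertex_colour(n, light_dir, light_colour, surface_colour) for n in normals]


if __name__ == "__main__":
    # Three vertex normals: facing the light, at 60 degrees to it, and facing away.
    normals = [(0, 0, 1), (math.sin(math.radians(60)), 0, math.cos(math.radians(60))), (0, 0, -1)]
    for colour in light_vertices(normals, light_dir=(0, 0, 1)):
        print(tuple(round(c, 3) for c in colour))
```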

Following that, GPUs went from being restricted to fixed-function operations to being programmable. By writing ‘shaders’ (vertex and pixel shaders), developers could implement their own algorithms and change the way graphics were rendered. This opened the door to many improvements in graphics. I’ll talk about shaders in a future article.
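Here’s a rough Python analogy for the difference between fixed-function and programmable pipelines. The loop that visits every pixel stays the same, but the function that decides each pixel’s colour (the ‘shader’) can be swapped out. Real shaders are written in languages such as GLSL or HLSL and run on the GPU across many pixels at once; the names below are just for illustration.

```python
def render(width, height, pixel_shader):
    """Run `pixel_shader(u, v)` once per pixel and collect the colours.

    u and v are the pixel centre's position scaled to the 0..1 range.
    """
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            u = (x + 0.5) / width
            v = (y + 0.5) / height
            row.append(pixel_shader(u, v))
        image.append(row)
    return image


# Three different "shaders" plugged into the same pipeline.
def solid_red(u, v):
    return (1.0, 0.0, 0.0)


def horizontal_gradient(u, v):
    # Fade from black on the left to white on the right.
    return (u, u, u)


def checkerboard(u, v, squares=4):
    # Alternate white and black squares across the image.
    if (int(u * squares) + int(v * squares)) % 2 == 0:
        return (1.0, 1.0, 1.0)
    return (0.0, 0.0, 0.0)


if __name__ == "__main__":
    image = render(8, 4, checkerboard)
    for row in image:
        print("".join("#" if colour[0] > 0.5 else "." for colour in row))
```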

GPGPU Rendering

As NVIDIA made its GPUs more programmable, it found that the massive computational power in GPUs could be used for general-purpose (‘GP’) computing, hence the term ‘GPGPU’ (general-purpose computing on GPUs). This led to the development of CUDA (Compute Unified Device Architecture) and the C for CUDA programming language, which let programmers write software that runs on the GPU instead of the CPU. The benefit of doing this is parallelization: a modern GPU might have 480 cores while a CPU has 8. So if you can make your program more parallel, you can potentially get a large speedup from using a GPU.
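The phrase ‘if you can make your program more parallel’ is doing a lot of work there. A standard way to reason about it is Amdahl’s law, which gives the ideal speedup when only a fraction p of a program can be split across n cores: speedup = 1 / ((1 - p) + p / n). Here’s a small Python sketch. It deliberately ignores the fact that a single GPU core is much simpler and slower than a CPU core, so the numbers are best-case figures, not predictions.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup according to Amdahl's law.

    parallel_fraction: share of the work (0..1) that can run in parallel.
    cores: number of processors sharing that parallel part.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction  # this part always runs on one core
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.99):
        print(f"p={p}: 8 cores -> {amdahl_speedup(p, 8):.2f}x, "
              f"480 cores -> {amdahl_speedup(p, 480):.2f}x")
```

Running it shows why parallelism matters so much: if half the work is serial, even 480 cores can’t get you past a 2x speedup, whereas at 99% parallel, 480 cores give roughly 83x compared with roughly 7.5x on 8 cores.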

This led people to write raytracers that run on the GPU: essentially, a raytracer that could run on a CPU is made to run on the GPU instead. Examples include the Octane renderer and Mental Images’ iRay.
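To show why raytracing maps well onto a GPU, here’s a tiny Python sketch of the core of a raytracer: one ray per pixel, tested against a single sphere. The scene, camera setup and names are my own assumptions, and real renderers like the ones above do vastly more (materials, light bounces, acceleration structures). The important part is that each pixel’s ray doesn’t depend on any other pixel, so thousands of them can be traced at the same time.

```python
import math


def ray_sphere_hit(origin, direction, centre, radius):
    """Return the distance t to the nearest hit in front of the ray, or None.

    Solves |origin + t*direction - centre|^2 = radius^2, a quadratic in t.
    """
    ox, oy, oz = (origin[i] - centre[i] for i in range(3))
    dx, dy, dz = direction
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - 4 * a * c
    if disc < 0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / (2 * a), (-b + sqrt_disc) / (2 * a)):
        if t > 1e-6:  # only count hits in front of the camera
            return t
    return None


def trace_image(width, height, centre=(0.0, 0.0, -3.0), radius=1.0):
    """Trace one primary ray per pixel from a camera at the origin looking down -Z."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the pixel centre onto an image plane at z = -1 spanning -1..1.
            u = (x + 0.5) / width * 2 - 1
            v = 1 - (y + 0.5) / height * 2
            hit = ray_sphere_hit((0.0, 0.0, 0.0), (u, v, -1.0), centre, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(trace_image(24, 12)))
```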

When people in the industry talk about ‘GPU rendering’, it can be confusing, because it may mean real-time rendering using OpenGL/Direct3D in some instances, or raytracing using the GPU’s general-purpose computing power in others. To confuse matters further, most (if not all) current GPGPU renderers use progressive refinement techniques that let the user interact with a scene and watch it raytrace in real time. This is not the same as rendering with an API like OpenGL/Direct3D; they are fundamentally different techniques for rendering 3D images.
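Progressive refinement is easier to picture with a small example. In this Python sketch (an illustration, not how Octane or iRay work internally), each ‘pass’ adds one noisy sample per pixel, standing in for one random ray, and the image shown to the user is the running average of all samples so far. Early passes are noisy, and the image settles down as samples accumulate. The noise model and names are hypothetical.

```python
import random


def noisy_sample(true_value, rng):
    """Stand-in for tracing one random ray: right on average, noisy individually."""
    return true_value + rng.uniform(-0.5, 0.5)


def progressive_render(true_values, passes, seed=0):
    """Yield the running-average image after every pass.

    Each pass adds one new sample per pixel; the displayed image is the mean
    of all samples so far, so it starts noisy and gradually converges.
    """
    rng = random.Random(seed)
    accum = [0.0] * len(true_values)
    for n in range(1, passes + 1):
        for i, value in enumerate(true_values):
            accum[i] += noisy_sample(value, rng)
        # This is what the user would see on screen after pass n.
        yield [total / n for total in accum]


if __name__ == "__main__":
    truth = [0.2, 0.5, 0.8]
    for n, image in enumerate(progressive_render(truth, 1000), start=1):
        if n in (1, 10, 100, 1000):
            print(n, [round(p, 3) for p in image])
```

In an interactive renderer, the accumulation is typically thrown away and restarted whenever you move the camera or change the scene, which is why the image goes noisy again while you’re dragging things around.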

Currently there is some debate in the industry about whether GPU rendering is really better than CPU rendering.

Killer Bean rendered with the Octane GPU renderer:

Conclusion

So that’s a very quick look at the different kinds of rendering and the technological advances in rendering over the last 15-20 years. The next part will be an introduction to the graphics rendering pipeline.

References

Akenine-Möller T, Haines E, Hoffman N, 2008, Real-Time Rendering 3rd Edition, AK Peters, Wellesley, Massachusetts.

Shirley P, Marschner S et al, 2009, Fundamentals of Computer Graphics 3rd Edition, AK Peters, Wellesley, Massachusetts.

NVIDIA, Graphics Processing Unit, http://www.nvidia.com/object/gpu.html [accessed 14 October 2010]

NVIDIA, GeForce 256, http://www.nvidia.com/page/geforce256.html [accessed 14 October 2010]

Khronos, OpenGL, http://www.opengl.org [accessed 14 October 2010]

Wikipedia, Comparison of OpenGL and Direct3D, http://en.wikipedia.org/wiki/Comparison_of_OpenGL_and_Direct3D [accessed 14 October 2010]

Wikipedia, Direct3D, http://en.wikipedia.org/wiki/Microsoft_Direct3D [accessed 14 October 2010]