We can do previs from an airplane over the Arctic!

Olcun Tan has been working in the visual effects industry for over a decade, including a spell at The Mill’s Oscar-winning movie arm, Millfilm. When Millfilm closed, he moved to DreamWorks Animation as lead developer, before founding his own studio, Gradient Effects, in 2006 with business partner Thomas Tannenberger.

Gradient has since worked on projects from award-winning music videos to Harry Potter and the Deathly Hallows. Always a pioneer of new techniques, the studio has recently developed its own mobile, real-time previs system, Sandbox, which enables users to scout virtual locations, record camera moves and render simple animations from an iPad or Android tablet.

We caught up with Olcun Tan to discuss the system’s capabilities, the creative possibilities offered by not being tied to a capture stage – and what it feels like to do previs work from an airplane flying over the Arctic!

CG Channel: How does Sandbox work? What does the user actually do with their iPad?

Olcun Tan: The iPad or Android tablet is used purely as a terminal, nothing more. All the high-quality assets are stored in the cloud, and simple proxy geometry stands in for them on the tablet. This enables you to use very complex, final-quality models for previs without placing too much computational strain on the device.

Once you have constructed your scene with the proxy geometry, Sandbox sends metadata back to the cloud to generate high-quality renders, which are streamed back to the device. This data can be recorded and exchanged with Maya and other 3D packages.

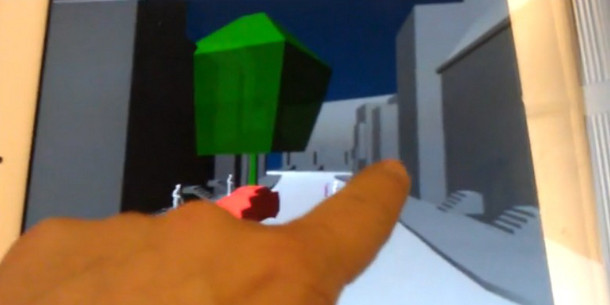

Gradient Effects’ Sandbox. The tool enables directors to perform key previs tasks from an ordinary tablet.

CGC: In practical terms, how does Sandbox differ from existing virtual studio systems?

OT: You don’t have to go to a stage to do previs. Large VFX houses typically have entire virtual studios for this kind of work, which are expensive to operate and can only generate data while staff are present. With Sandbox, you have your locations with you at all times. It’s a live storyboarding and previs system in your pocket.

CGC: So you can use your system anywhere in the world, with no set-up required at the location?

OT: That’s correct – even in an airplane 12,000 feet above the Arctic! The video [below] shows me using the system on a flight to Europe. The airplane had a wireless internet connection, which I was using to access the cloud while literally in the clouds.

Our goal was to be able to do previs from anywhere. In the next release of Sandbox, you will be able to interconnect different devices anywhere in the world. Imagine something like a CineSync session, but with a full 3D scene, using data that can be exchanged with any other 3D application.

Visualisation at 12,000 feet: Sandbox in action during a transatlantic flight.

CGC: Are you using any of the iPad’s native features?

OT: Sandbox can calculate the correct sun and moon lighting for the scene from the iPad’s GPS coordinates, but you can also enter alternative coordinates manually. You can use the Gyro Camera to scout your locations, just as you would with a much more complex system.
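For readers curious how GPS coordinates translate into lighting: the sun’s height in the sky follows from latitude, longitude, date and time. The Python sketch below is a generic low-precision approximation, not Sandbox’s actual code, and its function names are hypothetical. It ignores the equation of time and atmospheric refraction, so expect errors of a few degrees. It also estimates shadow length, which bears on one of the questions users bring to the system.

```python
import math


def solar_declination_deg(day_of_year: int) -> float:
    """Approximate solar declination in degrees (day_of_year 1 = 1 January)."""
    n = day_of_year - 1  # whole days elapsed since 1 January
    return -23.44 * math.cos(math.radians(360.0 / 365.0 * (n + 10)))


def solar_elevation_deg(lat_deg: float, lon_deg: float,
                        day_of_year: int, utc_hours: float) -> float:
    """Approximate sun elevation above the horizon, in degrees.

    Longitude is positive east. Ignores the equation of time and
    atmospheric refraction, so results can be off by a few degrees.
    """
    lat = math.radians(lat_deg)
    decl = math.radians(solar_declination_deg(day_of_year))
    # Approximate local solar time: 1 hour per 15 degrees of longitude.
    solar_time = utc_hours + lon_deg / 15.0
    # Hour angle: 0 at solar noon, 15 degrees per hour either side.
    hour_angle = math.radians(15.0 * (solar_time - 12.0))
    sin_elev = (math.sin(lat) * math.sin(decl)
                + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))


def shadow_length(object_height: float, elevation_deg: float) -> float:
    """Length of the shadow cast on flat ground; infinite if the sun is down."""
    if elevation_deg <= 0.0:
        return math.inf
    return object_height / math.tan(math.radians(elevation_deg))


# Hypothetical example: London (51.5 N, 0 E) at 12:00 UTC on 21 June (day 172).
elev = solar_elevation_deg(51.5, 0.0, 172, 12.0)
print(round(elev))  # 62
print(round(shadow_length(1.8, elev), 2))
```

A production tool would also need the sun’s azimuth to orient shadows, plus a higher-precision ephemeris; the moon requires a separate, more involved calculation.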

CGC: What else can the user do apart from scouting a virtual location?

OT: Camera work. They can record a rough camera move and send it to an artist to refine on a proper workstation, or render a camera animation on the cloud and send the video to editorial.

The creative lead sets the tone of the shot, and the specialist on the workstation refines it. The iPad is a very solid device, and simple enough to be used by three-year-olds: you can’t do much wrong with it. It enables the creatives to be hands-on again, like they were before the digital revolution.

A render from Sandbox: this ‘digital backlot’ was created for Paramount by Gradient Effects.

CGC: Why was being able to use hi-res assets for previs so important to you?

OT: At Gradient Effects, we were very early users of Lidar scanning: we have our own Lidar pipeline and tons of proprietary tools. When we do VFX work on a project, we always scan the locations for our own records, so we can start work even before the plates are delivered to us.

But now we can host any type of 3D geometry; it doesn’t have to be Lidar-scanned. Our clients are more interested in hosting [near-final-quality] models from the art department.

CGC: In what format does data get in and out of the system?

OT: We send out proprietary metadata, which can be converted to any software format. At the moment, we can go to Maya and from there to anywhere else. We can also exchange data with game engines.

CGC: What camera formats do you support?

OT: We support all major cameras, from the Alexa and RED cameras to DSLRs like the Canon EOS 5D.
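Supporting a camera in previs largely means knowing its sensor (or gate) width, because the same focal length frames very differently on different bodies. As a generic illustration, not a description of how Sandbox does it, the horizontal angle of view of a rectilinear lens focused at infinity is 2·atan(w / 2f) for sensor width w and focal length f. The Python sketch below assumes a full-frame sensor about 36 mm wide, as on the Canon EOS 5D; for an Alexa or RED you would substitute the published width for the recording mode in use.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal angle of view, in degrees, for a rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# Assumed sensor width for a full-frame DSLR such as the Canon EOS 5D.
FULL_FRAME_WIDTH_MM = 36.0

for focal in (24.0, 50.0, 85.0):
    fov = horizontal_fov_deg(FULL_FRAME_WIDTH_MM, focal)
    print(f"{focal:.0f} mm lens: {fov:.1f} deg horizontal")
```

Focusing closer than infinity narrows the angle slightly, which is one reason a previs camera is only a starting point for the artist refining the move on a workstation.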

CGC: And what rendering system are you using? Are there any limitations on its output?

OT: It’s based on iray, combined with lots of proprietary technology developed at Gradient Interactive, a joint company formed by Gradient Effects and miGenius. There are no limitations on the output: it’s truly raytraced imagery, crunched out very much in real time. In fact, one studio exec told me that the render quality is so good, they’re worried about what will happen if the directors get used to it!

Another render from the Paramount digital backlot. Sandbox enables clients to ‘scout’ sun direction, camera positions and lens types without having to set foot in the real location.

CGC: Are you using Sandbox in live production yet?

OT: We have used it on one major project, but unfortunately it is no longer greenlit, so I can’t talk about the details. But it involved a lot of location shoots, especially in China, and it was important to be able to scout and previs in a timely fashion, because the team was scattered around the world.

CGC: What kinds of problems are your users calling upon Sandbox to solve?

OT: A huge range. For example: how many extras do we need? Where should the greenscreen go? How do the shadows fall at 1pm? What lens should be used on which camera? Or even: what are the safety distances for the explosives?

Our technology gives the producers, DoP, director and VFX supervisor answers while these questions are still fresh. In a busy schedule, it’s easy to forget why questions even came up in the first place, so being able to get answers without having to involve a whole chain of other people can save a lot of time and money.