Tech focus: Nuke’s AIR machine learning framework

There are a lot of significant new features in the Nuke 13.0 product family – Nuke, NukeX, Nuke Studio and Nuke Indie 13.0 – the latest big update to Foundry’s compositing software, released earlier this week.

They include native Cryptomatte and Python 3.7 support, and a new viewport architecture based on Hydra, the USD rendering framework, intended to streamline workflow with other USD-compatible software.

But for us, the really interesting feature was the AIR toolset: a new machine learning framework that enables VFX artists to train their own neural networks to automate repetitive tasks like roto and marker removal.

Unlike many of Nuke’s more advanced tools, it’s available in both Nuke and the lower-priced Nuke Indie, as well as in NukeX and Nuke Studio, the extended (and more expensive) editions of the software.

Foundry’s website provides an overview of what AIR does, but the firm shared a bit more information in a livestream earlier this week, as part of its Foundry Live 2021 user events.

Below, you can find out what Foundry revealed about machine learning workflows in Nuke during that session, along with a bit of educated guesswork about how the toolset might evolve in future.

AIR: a new machine learning framework that lets VFX artists train their own neural networks

Machine learning has been a research focus for Foundry for some time now: in 2019, the firm released source code for ML-Server, an experimental server-based machine-learning system for Nuke, on its GitHub repo.

However, the new AIR toolset – the name just stands for ‘AI Research’ – is the first appearance of machine-learning tools in the core software, and in a form that dispenses with the need for an external server.

Unlike the AI-based features recently added to software like Autodesk’s Flame, it isn’t simply a set of readymade tools that have been trained using machine learning techniques.

Instead, the framework enables users to train their own neural networks to perform VFX tasks specific to a particular image sequence, then to share those networks with other artists.

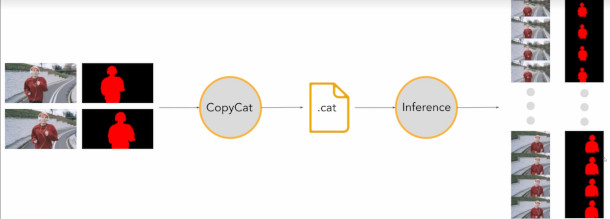

Train neural networks with the CopyCat node, then apply them with Inference

The process begins with the CopyCat node, which processes source images to train a network.

Users feed in both raw frames and ‘ground truth’ images – the same frames after VFX operations have been performed on them – for CopyCat to generate a neural network from.

The trained network is then loaded into the Inference node, which applies the same operations automatically to the remaining frames in the sequence.

Suggested use cases include automating repetitive tasks like roto, garbage matting, marker removal and beauty work, but the system works with “any image-to-image task”.
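For readers new to the idea, here is the basic shape of this kind of supervised image-to-image workflow in plain Python. It is purely illustrative and is not Nuke’s API. The `train` and `infer` functions are hypothetical, and frames are assumed to be flat lists of grayscale pixel values. In place of a neural network, the sketch fits a single linear mapping (output = gain × input + offset) by least squares. CopyCat trains a far more capable network, but the pattern is the same: learn from a few source/ground-truth pairs, then apply the result to frames the model hasn’t seen.

```python
# Toy stand-in for the CopyCat -> Inference workflow (not Nuke's API).
# Instead of a neural network, it fits one linear mapping
# output = gain * input + offset by ordinary least squares.

def train(pairs):
    """Fit (gain, offset) from (source_frame, ground_truth_frame) pairs.

    Each frame is assumed to be a flat list of grayscale pixel values.
    """
    xs, ys = [], []
    for source, truth in pairs:
        if len(source) != len(truth):
            raise ValueError("source and ground-truth frames must match")
        xs.extend(source)
        ys.extend(truth)
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("training pixels have no variation to learn from")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    gain = cov_xy / var_x
    offset = mean_y - gain * mean_x
    return gain, offset


def infer(model, frame):
    """Apply the learned mapping to an unseen frame."""
    gain, offset = model
    return [gain * p + offset for p in frame]


# "Hand-graded" training frames where ground truth = 0.5 * source + 0.1
model = train([([0.0, 0.2, 0.4], [0.1, 0.2, 0.3]),
               ([0.6, 0.8], [0.4, 0.5])])
print(infer(model, [1.0]))  # approximately [0.6]
```

A real network can learn spatial operations such as roto or paint-outs, which a per-pixel linear fit cannot. The point here is only the train-then-apply structure.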

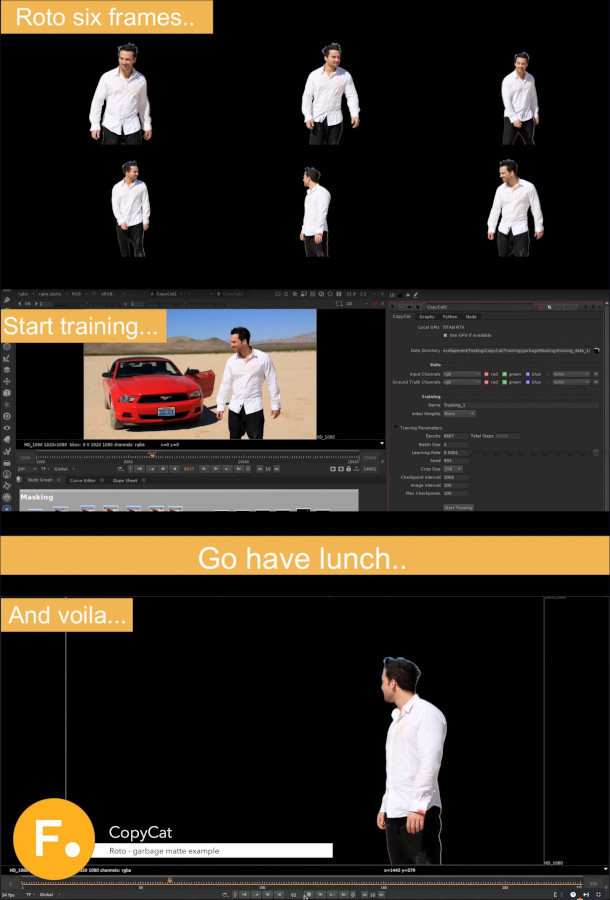

CopyCat being used to train a neural network to isolate an actor automatically from the background of an image sequence, based on six source frames in which the roto work has been done manually.

Another Foundry Live demo showed CopyCat being trained on six frames of a sequence in which an actor’s beard had been painted out manually. Inference then painted out the beard in the remaining 100-plus frames.

The results aren’t intended to be perfect, and will probably need some clean-up, but the workflow should greatly reduce the amount of manual paint work required on a job.

GPU-accelerated, flexible, and with minimal file overheads

The machine learning framework is GPU-accelerated and requires an Nvidia GPU. On first run, it has to compile CUDA code, a one-off process taking “20-30 minutes”.

However, any Nvidia card with compute capability 3.0 and up will work, and you don’t need to connect to the cloud: training is done entirely on the user’s local machine.
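The hardware requirement boils down to a simple version check. The helper below is a hypothetical illustration, not part of Nuke. It assumes the card’s vendor and CUDA compute capability (major and minor version) have already been looked up, for example from Nvidia’s published specifications, and compares them against the 3.0 minimum quoted by Foundry.

```python
# Hypothetical check against the AIR GPU requirement quoted by Foundry:
# an Nvidia card with CUDA compute capability 3.0 or higher.
MIN_COMPUTE_CAPABILITY = (3, 0)


def meets_air_gpu_requirement(vendor: str, major: int, minor: int) -> bool:
    """Return True if the GPU meets the stated minimum for AIR."""
    if vendor.strip().lower() != "nvidia":
        return False  # CUDA-based, so non-Nvidia cards are excluded
    # Tuple comparison orders by major version first, then minor
    return (major, minor) >= MIN_COMPUTE_CAPABILITY


print(meets_air_gpu_requirement("Nvidia", 7, 5))  # True
print(meets_air_gpu_requirement("Nvidia", 2, 1))  # False
```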

The system looks pretty flexible: the .cat files representing the trained neural networks generated by CopyCat can be shared with other artists, who can use them as the basis for their own iterative training.

Storage overheads are also small: the .cat files themselves come in at “around 10MB”, while scripts that use the trained networks are just conventional Nuke scripts.

Foundry says that it hopes to enable users to supply their own custom models to the Inference node, and is “interested in exploring” the idea of distributing calculations on a render farm.

Available in Nuke Indie 13.0, bar some restrictions on CopyCat

The new Inference node is present in every edition of Nuke, meaning that you don’t need a higher-priced licence to use neural networks that have already been trained by someone else.

The CopyCat node isn’t in the base edition, so to train networks yourself, you will need NukeX or Nuke Studio or, if you’re a freelancer, Nuke Indie, the $499/year edition of Nuke for artists earning under $100k/year.

The one caveat is that neural networks trained in Nuke Indie are saved in an encrypted, Indie-only file format, so they can’t be used in the full editions of Nuke.

However, .cat files generated in NukeX or Nuke Studio can be used in Nuke Indie.
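The licensing and file-compatibility rules above can be summarised as a small lookup. This is a hypothetical Python sketch, not a Foundry tool, and it encodes our reading of the article. Inference is in every edition, and CopyCat is in NukeX, Nuke Studio and Nuke Indie. A .cat file trained in Nuke Indie only opens in Nuke Indie, while one trained in NukeX or Nuke Studio can be used in any edition.

```python
# Hypothetical sketch of the Nuke 13.0 AIR edition rules as described in
# this article; not a Foundry API.
INFERENCE_EDITIONS = {"Nuke", "NukeX", "Nuke Studio", "Nuke Indie"}
TRAINING_EDITIONS = {"NukeX", "Nuke Studio", "Nuke Indie"}  # have CopyCat


def can_train(edition: str) -> bool:
    """True if the edition includes the CopyCat training node."""
    return edition in TRAINING_EDITIONS


def can_use_cat_file(trained_in: str, opened_in: str) -> bool:
    """True if a .cat file trained in one edition can be used in another."""
    if trained_in not in TRAINING_EDITIONS:
        raise ValueError(f"{trained_in} cannot train networks")
    if opened_in not in INFERENCE_EDITIONS:
        return False
    if trained_in == "Nuke Indie":
        # Indie networks use an encrypted, Indie-only file format
        return opened_in == "Nuke Indie"
    return True


print(can_use_cat_file("NukeX", "Nuke Indie"))   # True
print(can_use_cat_file("Nuke Indie", "NukeX"))   # False
```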

New readymade blur-removal and image-upscaling tools trained using the AIR framework

In addition, the Nuke 13.0 releases ship with two readymade tools that have been trained using the system.

Deblur removes motion blur from footage, while Upscale provides a GPU-accelerated alternative to the existing TVIScale node for up-resing footage, increasing resolution by up to 2x.

Both were identified by Foundry as potential use cases for machine learning in a blog post last year.

The other use case – automated face replacement, particularly for dubbing TV footage into new languages – isn’t present in Nuke 13.0, but it’s a suggestion of where the AIR toolset might go in future.

Read more about the other features in the Nuke 13.0 family on Foundry’s website