This neat new Adobe AI tech turns photos into Substances

The livestream Adobe broadcast yesterday was primarily a chance for the firm to show off the new versions of its texturing and material-authoring software, Substance Painter 2021.1 and Substance Designer 2021.1.

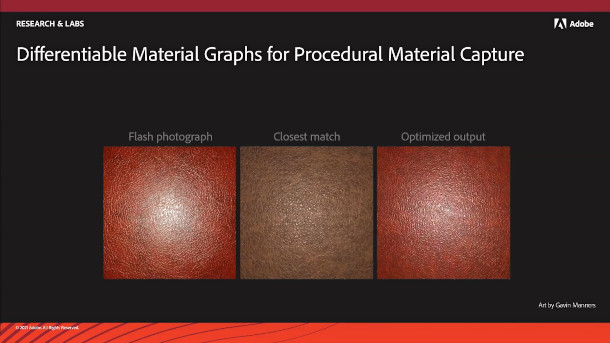

But it also included sneak peeks at several new technologies being developed by Adobe’s R&D teams, including one rejoicing in the title Differentiable Material Graphs for Procedural Material Capture.

The neat new AI-based system takes reference photos of real-world materials and automatically assembles a digital equivalent in Substance format that could be used in game development or VFX work.

Other sneak peeks included parametric modelling systems implemented inside both Substance Designer and Medium, Adobe’s VR modelling software, plus a new Substance integration for Blender.

New AI-based tech builds Substance materials matching real-world reference photos

Before going any further, it’s worth emphasising that all of the technologies previewed in the livestream are experimental, and have no current release dates.

Adobe hasn’t confirmed which of its applications they will be implemented in, when they will become publicly available – or even whether they will become available at all.

However, one with clear commercial potential is Differentiable Material Graphs for Procedural Material Capture, which you can see at 00:58:10 in the video at the top of the story.

The new system for building procedural materials to match real-world reference photos has been made possible by recent research in machine-learning technology: in the video, Adobe VP Sébastien Deguy says that he doesn’t think it would have been possible even months ago.

The AI-trained algorithm searches through a database of existing Substance materials to find the one that most resembles a source photo, then modifies it automatically to achieve an even closer match.

The process takes “a few seconds”, and the end result is fully procedural – it’s a Substance Designer .sbs file – so the resulting material can be further tweaked by hand.
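For readers curious about what a search-then-refine workflow of this kind looks like in principle, here is a minimal sketch in plain Python. It is not Adobe’s method, which has not been published in this story. The sine-stripe "material", the three-entry library, the parameter names and the finite-difference optimiser are all hypothetical stand-ins chosen to keep the example small.

```python
import math

def render(params, n=64):
    """Toy 'procedural material': one row of sine stripes (hypothetical stand-in)."""
    brightness, contrast, frequency = params
    return [brightness + contrast * math.sin(2 * math.pi * frequency * i / n)
            for i in range(n)]

def loss(params, target):
    """Mean squared difference between the rendered row and the target row."""
    rendered = render(params, len(target))
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)

def nearest_preset(library, target):
    """Step 1: pick the library entry whose render is closest to the target."""
    return min(library, key=lambda name: loss(library[name], target))

def refine(params, target, steps=200, rate=0.1, eps=1e-4):
    """Step 2: nudge the parameters downhill to tighten the match.

    Gradients are estimated by finite differences here; a real differentiable
    graph would compute them analytically.
    """
    params = list(params)
    best = loss(params, target)
    for _ in range(steps):
        grad = []
        for k in range(len(params)):
            bumped = params[:]
            bumped[k] += eps
            grad.append((loss(bumped, target) - best) / eps)
        candidate = [p - rate * g for p, g in zip(params, grad)]
        candidate_loss = loss(candidate, target)
        if candidate_loss < best:
            params, best = candidate, candidate_loss  # accept the improvement
        else:
            rate *= 0.5  # step was too large: shrink it and try again
    return params, best

# Hypothetical library of existing materials: (brightness, contrast, frequency)
LIBRARY = {
    "fine_stripes": (0.5, 0.3, 8.0),
    "broad_stripes": (0.5, 0.3, 2.0),
    "flat_grey": (0.5, 0.0, 1.0),
}

# Stand-in for a reference photo: a row rendered with parameters not in the library
target = render((0.6, 0.4, 2.0))

start = nearest_preset(LIBRARY, target)
fitted, error = refine(LIBRARY[start], target)
print(start, [round(p, 3) for p in fitted], error)
```

Because the result is still a set of named parameters rather than a baked image, it remains editable afterwards, which is the property the story highlights about the .sbs output.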

The match to the original source photo isn’t perfect, but it’s close enough that you wouldn’t notice unless you were comparing the two images side by side.

The system even works with real-world materials that are difficult to photograph accurately, like reflective metal surfaces, as Adobe demonstrates towards the end of the sneak peek.

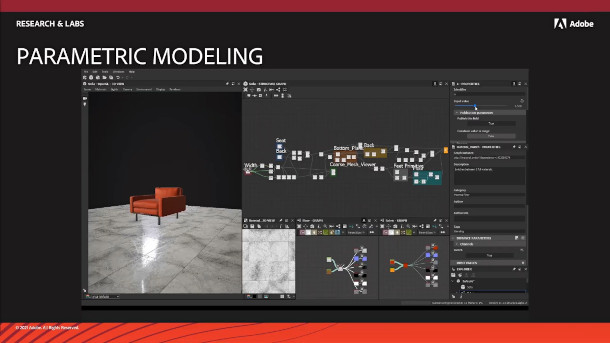

Experimental new parametric modelling systems inside Substance Designer and Medium

Another technology that Adobe has been working on for a bit longer is parametric 3D shape modelling.

The first sneak peek, released last year, showed software generating procedural variants of 3D objects like sofas, guided by high-level parameters like overall width and the number of seat cushions.

At the time, it seemed to be a standalone tool, but the tech has now moved a step closer to a commercial release, with Adobe showing it integrated inside Substance Designer.

The latest sneak peek, which starts at 01:06:00 in the video, shows sofas, fantasy buildings and even fields of grass being generated parametrically inside the software.
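As a rough illustration of what "guided by high-level parameters" means, the sketch below builds a very simplified sofa from an overall width and a cushion count. It is a toy example and not Adobe’s system: the `Box` type, the `build_sofa` function and every dimension in it are assumptions made up for this illustration.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """An axis-aligned block, positioned by its left edge (all units in metres)."""
    name: str
    x: float
    width: float
    depth: float
    height: float

def build_sofa(overall_width=2.0, seat_cushions=3, arm_width=0.2):
    """Return the boxes making up a simplified sofa (hypothetical layout)."""
    if seat_cushions < 1:
        raise ValueError("a sofa needs at least one seat cushion")
    seat_span = overall_width - 2 * arm_width
    if seat_span <= 0:
        raise ValueError("the arms are wider than the sofa")
    cushion_width = seat_span / seat_cushions
    parts = [
        Box("left_arm", 0.0, arm_width, 0.9, 0.6),
        Box("right_arm", overall_width - arm_width, arm_width, 0.9, 0.6),
        Box("back", arm_width, seat_span, 0.2, 0.9),
    ]
    # The cushions share the space between the arms equally
    for i in range(seat_cushions):
        x = arm_width + i * cushion_width
        parts.append(Box(f"cushion_{i}", x, cushion_width, 0.7, 0.45))
    return parts

# Two variants from the same rule set, differing only in high-level parameters
two_seater = build_sofa(overall_width=1.6, seat_cushions=2)
four_seater = build_sofa(overall_width=3.0, seat_cushions=4)
```

Changing one number regenerates the whole object consistently, which is what distinguishes this approach from editing a fixed mesh by hand.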

There’s even a clip of the same technology powering a procedural geometry brush inside Medium, Adobe’s virtual reality sculpting app, although the firm says it’s “nowhere near ready” for a public release.

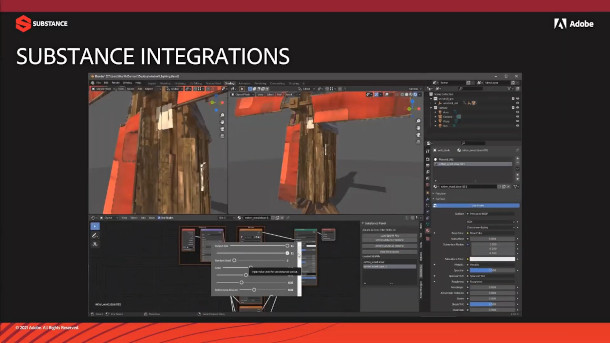

New Substance integration for Blender currently in active development

Of all of the development projects shown, the one that seems closest to becoming publicly available is the long-awaited Substance integration for Blender.

The work would make Substance materials editable natively inside the open-source 3D software, in the same way that they are in tools like 3ds Max, Maya and Houdini.

At 00:49:45 in the video, you can see a material in Substance .sbsar format being edited inside Blender, although the UI is “still very rough”, and there’s no release date yet.

Visit Adobe’s website for the Substance products

(No more information about the R&D work at the time of writing)