Interview: LightWave VP and Avatar veteran Rob Powers

Not many prospective vice presidents of 3D software development go for interviews with their future employer admitting that they “aren’t just a LightWave guy”. But then, most vice presidents of 3D software development aren’t Rob Powers.

Hired by NewTek eight months ago to head up product development on LightWave 10, Powers has the unusual distinction of having used his company’s software at the highest level in the industry: as the first 3D artist James Cameron invited to join the team on Avatar, he was responsible for the foundation of the movie’s ground-breaking virtual art department.

But his current role, arguably, carries equal pressure. LightWave 10, released six days ago, comes four years after the last full-point update, and almost two years after NewTek previewed its next-gen CORE architecture.

With CORE itself still some months off, version 10 has come in for intense scrutiny in the 3D community. In this interview, conducted a few weeks before LightWave 10’s commercial release, we talked to Rob Powers about how the toolset has evolved in his time on the job, how his experiences as an artist have influenced his role as a software developer – and what it feels like to work in a market in which a single company, Autodesk, now owns most of the competing products.

If you haven’t seen LightWave 10’s full feature list yet, you can get up to speed with our story here.

CG Channel: How much pressure do you feel personally for LightWave 10 to be a success? As a high-profile user, you’re closely identified with the recent changes to the software.

Rob Powers: Absolutely. I feel a lot of pressure. But if you find anyone else who can do what I’ve been able to do with the software in eight months, let me know. Have them step into the position and do it.

We had a very short amount of time to prepare for Siggraph, and I think [what we showed] was very successful. And we've continued to work on those things. As a user, it makes me want to buy LightWave 10, and that's my main goal. Is it worth it for me? Does it bring things to the table that make my life easier?

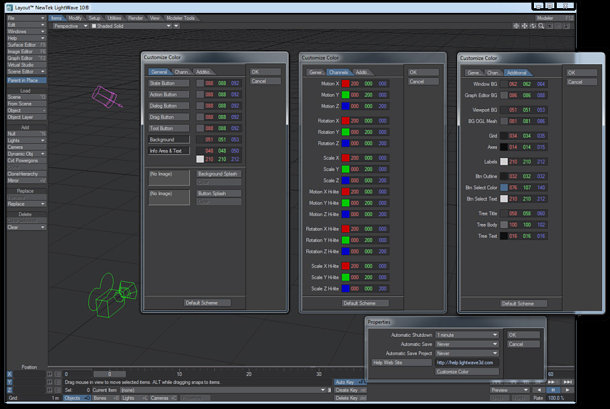

CGC: So let’s talk about those things – of which, the most visible is the new user interface (shown above). What was the thinking behind that?

RP: One of my main priorities when I came in was, “Let’s get this thing a bit more modern-looking.” So we did a complete colour scheme change for both Layout and Modeler. I believe neutral colours are easier on the eyes, and they’re better for making colour choices – when you’re doing colour-space work, you don’t want a garish colour on the buttons next to it. We’ve also added a dedicated UI designer, Matt Gorner, for – I believe – the first time in LightWave’s history.

CGC: Under the hood, one of the main new features of LightWave 10 is the new interactive Viewport Preview Render. Tell us about that.

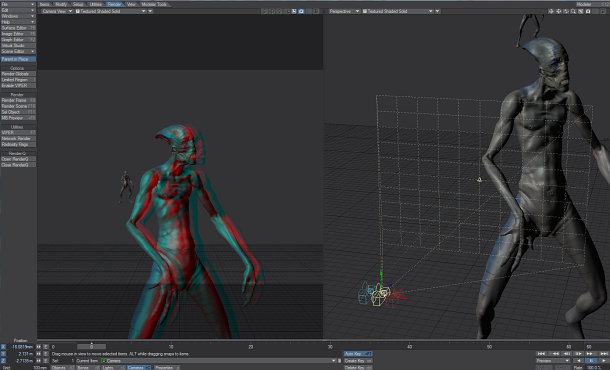

RP: The VPR is a place where artists are going to get a lot of great new feedback. In addition to being able to manipulate the data and see the [result in real time], we’ve combined the OpenGL and the VPR features so you can see your cameras and lights superimposed over the final render of the image. It’s a nice blending of the technologies.

We've also really worked on the workflow to make it useful for artists. It's not just a preview for them in the interface: we've also added a snapshot feature. When you get an iteration you like – if you've changed the colour of the light, the colour of the model, and you think, "I'm not sure I want this to be the final, but I want the client to see it" – you just hit the little button and it automatically saves the frame out, tagged with the frame number and an incremented version number. You can do this from camera view or any perspective view. It's a very cool workflow to have.

CGC: But presumably, the VPR is not a complete replacement for LightWave’s standard renderer. Which features aren’t supported yet?

RP: We’re having some issues with some of our nodal shaders: the large majority work perfectly, but there are some where we need to work on full implementations.

But the VPR has full support for raytraced shadows, transparency, volumetrics, all the radiosity calls, [all of the light types], and obviously all of the base shaders: specularity, transparency, reflections, all of that information is included. We’ve tested a lot of the third-party plug-ins – for example, Denis Pontonnier’s volumetrics and lighting plug-ins – and they work as well. And this is only iteration one.

CGC: Given your work on Avatar, LightWave 10’s Virtual Studio Tools must be close to your heart. How did your partnership with InterSense, responsible for the VCam virtual camera controller, come about?

RP: I’ve been working with InterSense for a few years. On Avatar, we were doing these explorations, these set discoveries – like a location scouting process, but virtual – and [InterSense used] the feedback we gave them to develop the VCam.

CGC: So how does the VCam integrate with LightWave?

RP: The control is basically that you hold it and move around. It feels like a real camera, and the buttons are mapped for zoom, focal length: all of that type of stuff. You can use it with the VPR as well, which is pretty interesting: you can freeze [the camera movement], hit a button and it instantly resolves into a VPR image.

Again, we’ve tried to set up a useful workflow, both for exploration and for documenting that exploration. You can record the camera in a take, for example, and map that not only to the camera in the viewport but to an object or a light as well. It also works in Modeler so you can spin around the objects you’re modelling.

CGC: But if the system is intended for work on virtual sets, why also support the 3Dconnexion devices? Surely they’re intended for a far more general audience?

RP: I wanted everybody to have access to the technology. The VCam is a little high end – the entry-level [system] is about $50,000 – so we also implemented the 3Dconnexion input devices, which you can get for [under $100].

It’s not exactly the same functionality as the VCam, but it’s still very handy if you want to sit down with an art director or a production designer and ‘walk’ the set as you would in a first-person game.

CGC: Tools for stereoscopic work are de rigueur in the industry at the minute. How has LightWave’s own stereo set-up evolved?

RP: LightWave's stereoscopic camera was simply a parallel or offset camera rig, and it had no visual representation in the interface at all. In the months that I've been here, [we've added] a representation of the left and right cameras and the convergence plane.

If you check on the convergence point, it changes the rig to a convergent rig, which we didn’t have before. Unfortunately, every single production I’ve ever worked on has used a convergent rig: [it’s] the most popular stereoscopic rig in visual effects.

We’ve also added the interocular distance, and made [that and the convergence point] animatable. It’s absolutely necessary – and, again, was not a prior part of the LightWave camera.
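For readers new to stereo rigs, the geometry Powers describes can be sketched in a few lines. The example below is our own illustration, not NewTek's implementation. It assumes a symmetric "toe-in" convergent rig, in which each camera is offset by half the interocular distance and rotated inward so that both optical axes cross at the convergence distance. A parallel rig is the special case where convergence sits at infinity. The names `StereoCamera`, `convergent_rig` and `lerp_key` are hypothetical. The simple linear keyframe blend stands in for whatever animation curves LightWave actually uses.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoCamera:
    x_offset: float  # lateral offset from the rig centre, in scene units
    yaw_deg: float   # inward rotation toward the rig centre, in degrees


def convergent_rig(interocular: float, convergence_distance: float):
    """Return (left, right) cameras for a hypothetical symmetric toe-in rig."""
    half = interocular / 2.0
    if math.isinf(convergence_distance):
        toe_in = 0.0  # converging at infinity is equivalent to a parallel rig
    else:
        # Each camera turns inward until its axis hits the convergence point.
        toe_in = math.degrees(math.atan2(half, convergence_distance))
    left = StereoCamera(x_offset=-half, yaw_deg=toe_in)
    right = StereoCamera(x_offset=half, yaw_deg=toe_in)
    return left, right


def lerp_key(frame: float, key_a, key_b) -> float:
    """Linearly interpolate an animated value between two (frame, value) keys."""
    (fa, va), (fb, vb) = key_a, key_b
    t = (frame - fa) / (fb - fa)
    t = min(max(t, 0.0), 1.0)  # hold the end values outside the key range
    return va + t * (vb - va)


# Animate both the interocular distance and the convergence distance,
# then rebuild the rig on each frame.
for frame in (1, 24, 48):
    iod = lerp_key(frame, (1, 0.065), (48, 0.03))
    conv = lerp_key(frame, (1, 5.0), (48, 2.0))
    left, right = convergent_rig(iod, conv)
    print(frame, round(right.x_offset, 4), round(right.yaw_deg, 3))
```

Many productions achieve convergence by shifting the images horizontally instead of rotating the cameras. The toe-in version is shown here only because its geometry is the easiest to see.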

CGC: Another current industry buzz phrase is linear workflow: something now supported in LightWave 10. What benefits does working in linear colour space bring users?

RP: If you can see everything in the same colour space, you can control it. Before, input, render and output were not continuous, so everything was all over the place. You’d get a scene where you’d think your lights were set properly and yet everything looked kind of dark, so there was always this tendency to pump up the lights to try to light the scene and then you’d get these overblown highlights.

Linear workflow also gives you greater dynamic range. Even if you stay in 24-bit space all the way [to save on file sizes], you get so much more out of your renders than you did before. You don't have to use the HDR formats.
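The "continuity" Powers refers to comes down to three steps: decode colour inputs to linear light, do the lighting maths in linear, and encode the result once for display. The sketch below uses the standard sRGB transfer functions from IEC 61966-2-1. It is a generic illustration, not LightWave's colour pipeline. The `shade` function is a hypothetical, deliberately toy diffuse shader.

```python
def srgb_to_linear(c: float) -> float:
    """Decode an sRGB-encoded value in [0, 1] to linear light (IEC 61966-2-1)."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Encode a linear value in [0, 1] for display on an sRGB monitor."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


def shade(albedo_srgb: float, light: float) -> float:
    """Toy diffuse shading: decode the texture, light it in linear, encode once."""
    linear = srgb_to_linear(albedo_srgb) * light  # lighting maths happens here
    return linear_to_srgb(min(linear, 1.0))       # clip to the displayable range


# An 18% grey in linear light looks far too dark if it is shown without encoding.
mid_grey = 0.18
print("shown raw:", mid_grey, "encoded:", round(linear_to_srgb(mid_grey), 3))
```

The "dark scene" symptom appears when that final encode is skipped. Viewed raw, a linear 0.18 is shown as 0.18 rather than roughly 0.46. The natural reaction is to turn up the lights, and the highlights then clip.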

CGC: In addition to NewTek’s own MDD format, LightWave now imports and exports the Autodesk Geometry Cache format. What was the thinking there?

RP: When I joined the company, I said, “I don’t just use LightWave, guys. I’m an artist; I’m interested in workflows.” If you choose MDD or GeoCache, that’s going to cover you for pretty much every application: Blender, 3ds Max, Maya, Softimage, modo, messiah, everything. I use all those packages, and I want to get data back and forth whenever I can.

With this new workflow – the choice of MDD or Geometry Cache – you can load an OBJ straight into Maya. Before, you had to use all these third-party plug-ins, and it was a struggle. Now I’ve just made it work.
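Point caches travel between packages so easily because they are so simple. They store only per-frame vertex positions, leaving the receiving application to supply the mesh, for example an OBJ with matching vertex order. The sketch below encodes and decodes the MDD layout as it is commonly documented and as Blender's MDD importer reads it. That layout is big-endian: an int32 frame count, an int32 point count, one float32 time per frame, then x, y, z float32 triples for every point on every frame. This reflects our understanding of the format, not NewTek's specification, and some exporters may write variations. Autodesk's Geometry Cache format is not covered. The function names are hypothetical.

```python
import struct
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def encode_mdd(times: Sequence[float], frames: Sequence[Sequence[Point]]) -> bytes:
    """Pack per-frame point positions into an MDD-style big-endian byte string."""
    num_frames = len(frames)
    num_points = len(frames[0]) if num_frames else 0
    if len(times) != num_frames:
        raise ValueError("need exactly one time value per frame")
    out = [struct.pack(">2i", num_frames, num_points),     # header
           struct.pack(f">{num_frames}f", *times)]          # frame times
    for pts in frames:
        if len(pts) != num_points:
            raise ValueError("every frame must have the same point count")
        flat = [c for p in pts for c in p]                   # x, y, z, x, y, z, ...
        out.append(struct.pack(f">{num_points * 3}f", *flat))
    return b"".join(out)


def decode_mdd(data: bytes) -> Tuple[List[float], List[List[Point]]]:
    """Unpack an MDD-style byte string back into times and per-frame points."""
    num_frames, num_points = struct.unpack_from(">2i", data, 0)
    offset = 8
    times = list(struct.unpack_from(f">{num_frames}f", data, offset))
    offset += 4 * num_frames
    frames = []
    for _ in range(num_frames):
        flat = struct.unpack_from(f">{num_points * 3}f", data, offset)
        offset += 12 * num_points
        frames.append([tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)])
    return times, frames
```

Because the cache holds only positions, the receiving mesh must have the same point count and ordering. That is why a cache can carry a deforming character's animation without the rig itself.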

CGC: We’ve talked about what’s new in LightWave 10. But what about what hasn’t changed? Why were there no updates to the character animation tools this time round?

RP: You might say the MDD tools are very heavily related to character animation, because they enable the stuff that’s currently in LightWave to get out. I think rigging in LightWave, with automatic falloff for the joints, has sometimes been sold as a negative. Honestly, it’s not a negative. It’s a great option to have.

With the MDD support, [you only need to] bone and joint a character in LightWave, and it comes into MotionBuilder with full falloffs for the skin, without painting a single weight map. That's a pretty profound workflow, and one that I have tested in production on very rigorous schedules. If you're using any other package, you have to constantly go back in there and repaint the weights on the geometry; in LightWave, you just replace the object and it works automatically. On Avatar, when I was sitting there with James Cameron and [concept artist] Wayne Barlowe was changing things, I could have an animation ready almost as soon as he changed the design.

That isn’t articulated because there is a mechanism [in the industry] that is selling one way to do everything; that says there’s a single professional way to work. And that’s not true. You can’t make a team of five people use the same workflows Weta Digital uses without Weta Digital’s resources, without its proprietary software, without all of the shaders and code it wrote for RenderMan, without all the TD support. In my opinion, to sell to users just one way of doing things is doing them a disservice, and that’s the way things have been going for seven years.

CGC: NewTek is unusual in that it runs an open beta programme. That exposes you, as the person in charge of LightWave’s development, to a lot of user feedback, both positive and negative. How does that feel?

RP: It’s hard, quite frankly. There are a lot of different voices, a lot of different perspectives. And it’s my role to listen to all of those people’s needs and to communicate our vision; to throw things out there and say, “Hey, here’s a video demonstrating what we’d like to do: what do you think about that?” It’s an interesting process, but I think it’s accelerating the development of the product.

CGC: So has all that stress been worth it in the end?

RP: The biggest pressure I’ve had is when studios come up to me and say, “In today’s economy, if we didn’t have LightWave, we could not have done this project, because the budget and schedule did not support other options.” And for me, that’s profound. If there are stories that are not being told because people don’t have a proper schedule, that’s a tragedy.

This software has enabled me to do things like meet with James Cameron for the first time [for 2005’s Aliens of the Deep] and go home and do a full model, rig it, test, animate and render, and show him the result within 24 hours – and get the job. LightWave is Hollywood’s best-kept secret, and I want to share that.