CG Channel’s hardware reviews policy explained

By far the most popular articles we publish on CG Channel, at least in terms of reader feedback, are Jason Lewis’s hardware reviews. With his recent group test of professional GPUs prompting another bumper crop of Facebook posts and comments, Jason took some time out to answer the most common questions.

Who are you? Why are you qualified to write about this stuff?

With 15 years of professional experience as a CG artist, I have had the privilege of working with a lot of different hardware configurations from all of the major vendors, both past and present.

It’s this experience I draw upon when forming my more speculative conclusions. However, I do try to keep speculation and opinion to a minimum, and I always specify when any of my comments fall into either category.

That’s not to say that I don’t have personal favourites. (When it comes to software, I’m a 3ds Max fanboy. Let the flame wars begin!) But I try to keep my reviews as unbiased as possible.

If you do feel that a particular piece is biased to one side or the other, or that I’m not sticking to the facts, please let me know. My contact details are at the foot of the page.

Why don’t you include consumer cards in your group tests?

This is by far the most common request I get. And I’m all for it. In fact, before I began writing reviews, I always wanted to see pro and consumer cards compared side by side.

Unfortunately, being a professional artist and part-time journalist, I’m not exactly rolling in cash, so I can’t afford to go out and buy all the latest and greatest gaming cards to compare against the pro models. And Nvidia and AMD only provide us with the pro cards for reviews with DCC applications, their argument being that consumer cards are not targeted at this market, and so their drivers aren’t properly optimized for it.

I have enquired several times about obtaining a few consumer cards to throw into the mix, but both AMD and Nvidia have been hesitant to do this so far. I’ll keep trying for future reviews.

Why won’t Nvidia and AMD provide consumer cards? Is it a Sinister Plot?

Before everyone jumps on the conspiracy theory bandwagon, let me take a minute to talk about the differences between consumer and pro cards. Some of this is drawn from my own experiences over the years; some from speaking to engineers at AMD and Nvidia during the course of these reviews.

First of all, it’s true that, in most cases, the consumer equivalent of any particular pro card will be almost as fast as its more expensive sibling.

However, the biggest and most important difference is stability and support. This is why pro cards are targeted at professionals who are on specific budgets and tight schedules. They need their tools to work consistently, without issues or downtime. If there is a problem, they need it to be resolved quickly, at any time of the day. This is the main advantage the pro cards offer over the consumer cards.

And while gaming cards are usually almost as fast as the pro cards, they do have viewport artefacts and inaccuracies, as well as more glitches and bugs than their pro equivalents. (To make matters more complex, the frequency of these issues varies according to which software package you’re using.)

Pro cards usually also have more on-board RAM, which can make a noticeable difference to performance, particularly when working with large scenes or assets, or for GPU computing tasks, such as rendering with iray or V-Ray RT – something I believe will only become more important over time.

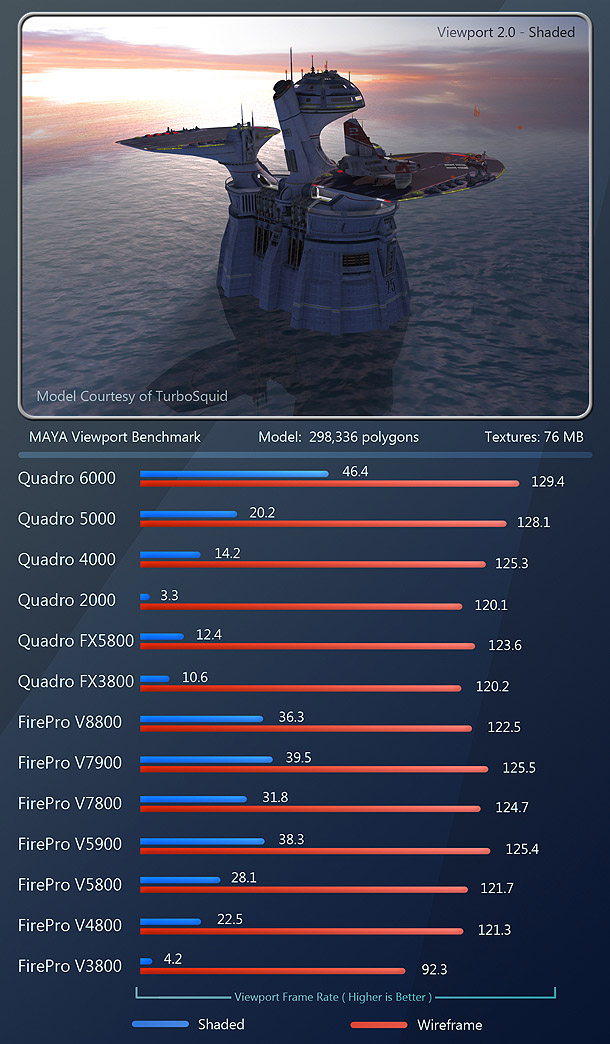

Many of our review benchmarks use proprietary assets, like this one from TurboSquid. Jason is working on versions that can be made freely available for download, but we can’t guarantee the timescale.

Why can’t I download your benchmark scenes?

Usually because they include assets from commercial vendors like Evermotion or TurboSquid, or that have been supplied by other artists. In many cases, the creator has spent a lot of time and effort creating a model, and doesn’t want it to appear in a thousand other people’s online galleries.

The alternative would be to use synthetic benchmarks like Cinebench or SPECviewperf. As anyone who has read my reviews will know, I’m not a big fan of these. It’s not the fault of the engineers who write them: it’s just that there are too many variables for such benchmarks to predict accurately how a piece of software will perform in all types of production environments.

In light of this, I am currently working on a set of benchmark scenes that I plan to offer here in the future. It is my intent that readers will be able to download the scenes, test them on their own systems, and post the results here in the comments. Those results can then be compared against the ones I have recorded in the articles – which means we should see some comparisons between the pro cards I’ve tested and the vast array of consumer set-ups that our readers are running.

I am trying to have this ready by the next video card review. However, putting benchmark scenes together is very time-consuming, and since I have very little free time on my hands, I make no promises about timescale.

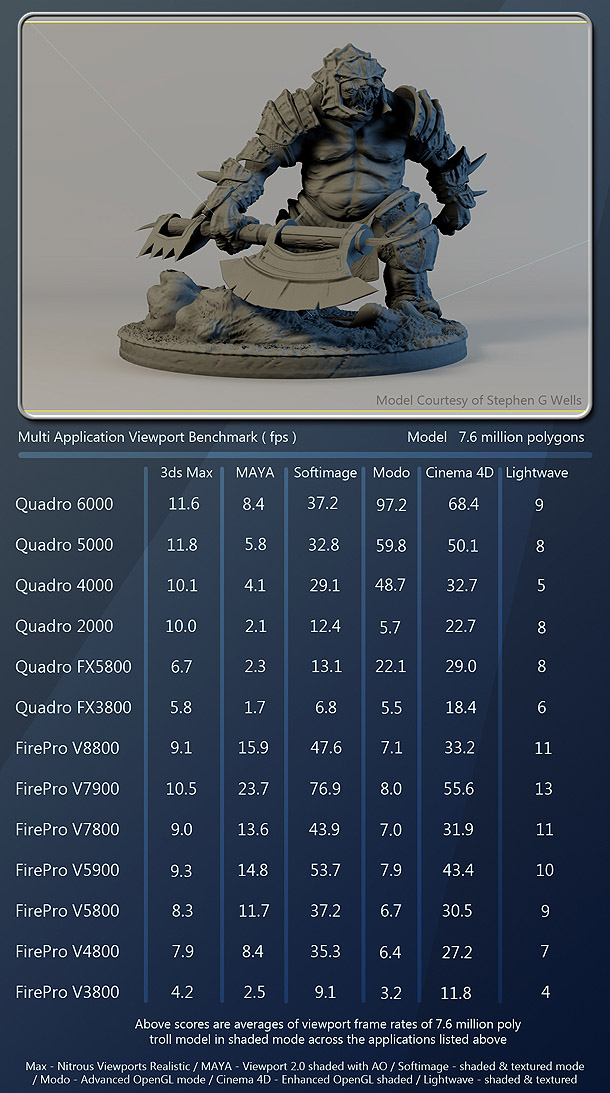

Our new multi-app benchmark isn’t intended as a performance comparison of the software packages used: only of the hardware on test. You read the table down each column, not horizontally across rows.

How does your multi-app benchmark work? Is it intended to compare 3D apps?

No. But judging by the comments, not everyone interpreted it that way, so let me explain it in a bit more detail.

That particular benchmark was not meant to be a comparison between the applications themselves, only a guide to the kind of performance you can expect from each app when running on a given piece of hardware. The chart is meant to be read vertically down each column, not horizontally across the rows.
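To make the "read down the columns" point concrete, here is a minimal sketch in Python. The application names, card names and frame rates are invented placeholders, not results from any review, and `relative_to_best` and `normalise` are hypothetical helpers written for this illustration. The sketch scales each application's column against the fastest card in that column, so the only comparison it produces is between cards within a single app.

```python
# Hypothetical frame rates (fps): placeholder values, not measured results.
# Outer keys are applications (columns); inner keys are cards (rows).
results = {
    "App X": {"Card A": 120.0, "Card B": 90.0, "Card C": 60.0},
    "App Y": {"Card A": 20.0, "Card B": 16.0, "Card C": 10.0},
}


def relative_to_best(column):
    """Scale one application's column so the fastest card scores 1.0."""
    best = max(column.values())
    return {card: fps / best for card, fps in column.items()}


def normalise(table):
    """Normalise every column independently; never compare across apps."""
    return {app: relative_to_best(column) for app, column in table.items()}


if __name__ == "__main__":
    for app, column in normalise(results).items():
        for card, score in column.items():
            print(f"{app:6} {card:7} {score:.2f}")
```

In this made-up table, App X's raw numbers are several times higher than App Y's, but after normalising, Card C lands at half the speed of Card A in both columns. The raw gap between the apps reflects their different viewport modes, not the cards.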

Each application is running a different viewport mode, with different display quality properties, which is why there is such a discrepancy in the frame rates reported for each application. I haven’t chosen the viewport modes to be directly comparable – I just chose the ones that people I’ve spoken to work with the most.

So although the frame rates reported for Softimage are much higher than those of Maya and 3ds Max, that is because the Softimage viewport is only running a basic shaded and textured mode, giving the user the most minimal viewport feedback for lighting and surface properties of the model; whereas Maya is running the Viewport 2.0 renderer with screen-space ambient occlusion, which is computationally very intensive, but offers much higher-quality shading.

The same goes for 3ds Max, which is running the new Nitrous viewport. In my opinion, it’s the best-quality viewport renderer of any of the programs on that list – but again, ambient occlusion is much more intensive than the simple OpenGL shaded-and-textured mode of Softimage and modo. As soon as you switch the rendering mode in either Maya or 3ds Max to standard simple shading, the viewport frame rates go up significantly.

While you can use the benchmark data to get a general feel for how each application will run in the display modes you’re likely to use, you can’t use it as a definitive comparison of performance. The scene wasn’t set up to make a direct comparison between the applications themselves: only between the pieces of hardware on test.

Are AMD drivers lower-quality/more unstable than Nvidia’s?

I can only speak from my own experience, but I haven’t found this to be the case. I haven’t used any of the newer Radeon cards, but the drivers for the FirePro cards have been rock-solid throughout testing over the past two years – and, with AMD’s Eyefinity technology, quite enjoyable to use.

Why don’t you talk more about OpenCL when you cover GPU computing?

Supporters of OpenCL are correct that the industry is making a global transition away from proprietary APIs like CUDA. However, it is a rather slow transition. As I have mentioned several times in previous reviews, OpenCL is still very new, and while it is headed in the right direction, CUDA’s feature set is currently much more extensive.

This is partly because OpenCL is the newer API. But it’s also partly because Nvidia can develop CUDA at its own pace. OpenCL, like OpenGL before it, is an open API, and needs to gain approval from the various members of its development panel – which, unfortunately, means a slower development cycle. It’s like comparing OpenGL and DirectX: since Microsoft answers to no one regarding the development of DirectX, the feature set has jumped far ahead of OpenGL in a relatively short space of time.

Although we are seeing much more OpenCL-enabled software than we did just a year ago, several key players still only support CUDA. The first of these is Adobe. To my knowledge, no Adobe software currently supports OpenCL. The GPU-accelerated features of Photoshop, After Effects and Premiere currently use OpenGL, not OpenCL, as their acceleration API, and the Mercury Playback Engine in Premiere CS5 is CUDA-only. As far as I am aware, the next iteration of the Adobe Creative Suite will continue to use OpenGL and CUDA, but beyond that, I have no information.

The second example – and the more unfortunate one for users of AMD graphics cards – is today’s most popular GPU-accelerated renderer, iray. It is CUDA-only, and that probably won’t change any time soon, since it is developed by the Nvidia-owned mental images.

It will be a while before all of our GPU-computing desires are rolled up into one nice, universal-standard API. But if GPU computing is a big priority to you right now, going with an Nvidia card currently gives you more options, since the hardware supports both OpenCL and CUDA.

Is there anything else you need to get off your chest?

Why, yes. First off, I want to say thanks again for all of the comments you have posted on my articles over the years. Your feedback as readers has been extremely valuable and has helped guide how my articles evolve.

But while most of you have been very constructive and helpful, a small number of readers take full advantage of the anonymity of the internet to post things like “This is BS!” or “Program X SUCKS!” Posts like these don’t help anyone: they just diminish the credibility of your other comments.

Let’s face it, things could be much worse: most of us are making a living out of doing what we love when we could all be working in a call center, or standing behind a counter muttering, “Would you like fries with that?” Let’s all work together to be constructive and make this an enjoyable place to share ideas.

How can I contact you directly?

If you’ve got a comment that you don’t want to post on a review itself, either because it’s too long, or because it’s not for public consumption, you can email me at jason [at] cgchannel [dot] com.

Thanks again for your comments and opinions. I hold them all in the highest regard, so keep them coming!