Group test: AMD and Nvidia professional GPUs – 2021

Which workstation-class GPU is best for your DCC work? To find out, Jason Lewis puts current Nvidia RTX and AMD Radeon Pro cards through a battery of real-world benchmarks, from 3D modelling to rendering.

In CG Channel’s 2021 group test of professional GPUs, I will be benchmarking two new workstation cards, Nvidia’s RTX A6000 and AMD’s Radeon Pro W6800, comparing them to the GPUs that I looked at last year: Nvidia’s Quadro RTX 8000, 6000, 5000 and 4000 and AMD’s Radeon Pro W5700, W5500 and WX 8200.

Although now previous-generation models, all of the older cards are still available commercially, and are still widely used. As ever, I will be putting each GPU through a series of custom benchmarks designed to replicate real-world production tasks, from asset creation to rendering and simulation.

Jump to another part of this review

Specifications and prices

Technology focus: ray tracing hardware and APIs

Testing procedure

Benchmark results

Other considerations

Verdict

That’s great. But how do I get hold of any of these cards?

Before I get into the meat of this review, I want to address the massive elephant in the room: the current GPU shortage. (Or, at a higher level, The Great Semiconductor Shortage of 2020-2021). I understand that reading GPU reviews is a bit of a bummer at the minute, as it is nearly impossible to get hold of new cards at anything remotely close to their MSRPs. Either you have to get lucky and be one of the first people to click the Add To Cart button when an online retailer gets a shipment, or you have to buy an entire new system – and even then, the GPUs are usually marked up by a small percentage.

So when will the situation change? It’s hard to say. Some people predict that supply levels and prices could return to normal by early winter, as it would be crazy for companies to not cash in during the lucrative holiday season. I think that, while possible, this is very unlikely. We saw unprecedented demand for consumer electronics in 2020, and still GPU manufacturers were unable to keep up. With TSMC and Samsung already running their fabs at or near maximum capacity, and additional complications like Taiwan’s recent drought and the current ABF substrate shortage, we will probably see continued chip shortages well into 2022.

Although a lot of money is going into building new chip foundries, both here in the US and abroad, they will take years to complete. Current facilities are working to expand their capacity, and that is probably what will ease the shortage in the short term, but it will still take many months for those chips to make it onto graphics cards, and the cards to make their way into stores. There are also reports that companies like Intel are prioritising higher-margin products like server and data center chips over consumer products, so we may see Nvidia and AMD follow suit, to the detriment of consumer and perhaps even workstation GPUs.

My own best guess – and despite what other journalists like to claim, all predictions are really just informed guesses – is that we may see an increase in supply, and thus a significant reduction in prices, by the early summer of 2022, with a full return to normal supply and pricing by the end of 2022.

Specifications and prices

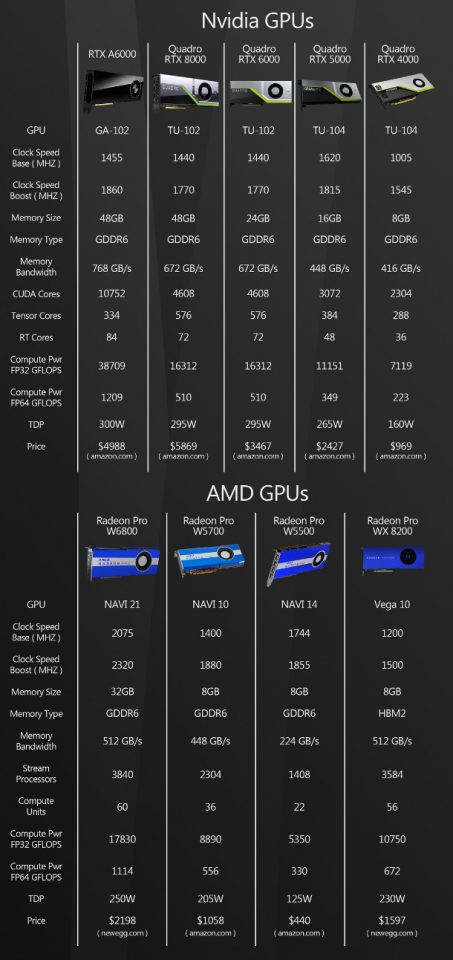

With that out of the way, let’s look at the two new GPUs on test: Nvidia’s RTX A6000 and AMD’s Radeon Pro W6800. Both are currently their respective manufacturer’s top-of-the-line cards.

The RTX A6000 is Nvidia’s first professional card based on its new Ampere GPU architecture, and the first not to use the firm’s longstanding Quadro branding: despite its shortened name, it’s the successor to the previous-generation Quadro RTX 6000 and 8000.

It uses the Ampere GA102 GPU, the same one that powers Nvidia’s GeForce RTX 3080, 3080 Ti and 3090 consumer cards. The difference is that the GA102 in the A6000 is a fully unlocked GPU, having full access to the 10,752 CUDA cores, 84 RT cores and 336 Tensor cores on the GPU die, running at a base clock speed of 1.4GHz and a boost clock speed of 1.8GHz.

The RTX A6000 comes equipped with 48GB of GDDR6 ECC memory on a 384-bit bus for a memory bandwidth of 768GB/s. It’s a significant bump up from the 24GB Quadro RTX 6000, and a direct successor to the 48GB Quadro RTX 8000.

So why doesn’t it also use the higher-bandwidth GDDR6X memory available in the high-end GeForce cards? According to Nvidia, at the time the A6000 was designed, GDDR6X memory simply didn’t come in modules large enough to build a 48GB board. However, software engineers tell me that the extra memory bandwidth provided by GDDR6X has little impact on most DCC and GPU rendering applications: something borne out by my own tests. The one exception is real-time applications like Unreal Engine and Unity.

Using GDDR6 instead of GDDR6X also has the advantage of decreasing power consumption. Even though the A6000 uses a fully unlocked GA102 GPU, and has double the RAM of a GeForce RTX 3090, its TDP is 50W lower. This is significant since, like the previous-generation Turing-based Quadro cards, the RTX A6000 ditches the axial fan of the GeForce GPUs in favour of a traditional blower fan design – albeit an unusual one, with air intakes on both sides of the card. (This is a welcome change when connecting two GPUs with an NVLink bridge, since one card then obstructs the front air intake of the other.)

Although blower fans don’t cool the GPU as effectively as axial fans, they are still the preferred form factor for workstation cards, which are often used in heat-accumulating multi-GPU set-ups, since they vent hot air out of the back of the card, and thus out of the workstation itself, rather than recirculating it inside the chassis and relying on the case fans to do the work.

The other new card is AMD’s Radeon Pro W6800, the successor to the previous-gen Radeon Pro W5700. It uses a Navi 21 GPU based on AMD’s new RDNA 2 architecture: in this case, a partially cut-down Navi 21 GPU sporting 3,840 Stream processors grouped into 60 compute units, and 60 ray accelerators, about which, more later. It has a base clock speed of 1.8GHz and an estimated boost clock speed of 2.3GHz.

The card comes equipped with 32GB of GDDR6 ECC memory on a 256-bit bus for a memory bandwidth of 512GB/s. This is a massive step up from the 8GB Radeon Pro W5700, and a nice change from AMD’s other recent professional GPUs, which have been stuck on 8GB or 16GB for some time now.

The Radeon Pro W6800 is rated at 250W and, like the Nvidia RTX A6000, uses a traditional blower fan: in this case, an even more traditional design, with only one air intake on the front of the card.
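The memory bandwidth figures quoted for both cards follow directly from bus width and per-pin data rate. As a quick sanity check, here is a minimal sketch of that arithmetic. The 16Gbps per-pin rate is an assumption on my part (it is the rate that reproduces the quoted figures), not a number taken from the spec table.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each pin of the bus moves data_rate_gbps gigabits per second,
    so multiply by the bus width and divide by 8 to convert bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# Assumption: both cards run their GDDR6 at 16 Gbps per pin.
GDDR6_RATE_GBPS = 16.0

a6000_bandwidth = memory_bandwidth_gb_s(384, GDDR6_RATE_GBPS)  # 384-bit bus
w6800_bandwidth = memory_bandwidth_gb_s(256, GDDR6_RATE_GBPS)  # 256-bit bus

print(f"RTX A6000: {a6000_bandwidth:.0f} GB/s")
print(f"Radeon Pro W6800: {w6800_bandwidth:.0f} GB/s")
```

The same function shows why bus width matters as much as memory type: at an identical per-pin rate, the A6000's wider bus accounts for its entire bandwidth advantage over the W6800.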

Technology focus: ray tracing hardware and APIs

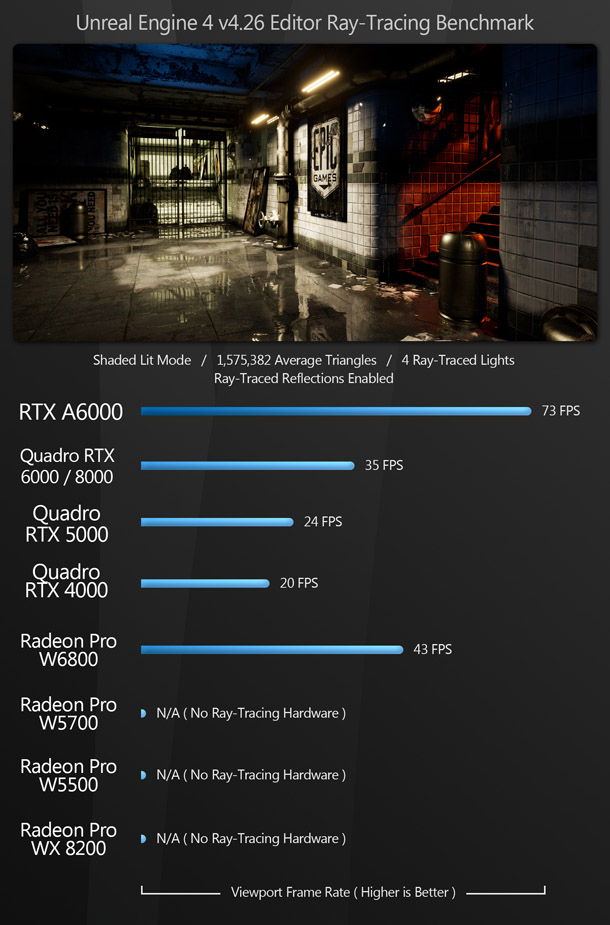

One significant feature of the Radeon Pro W6800’s RDNA 2 architecture is that it features ray accelerators: dedicated hardware cores to speed up ray traced renders.

Here, AMD is playing catch-up with Nvidia, which has featured dedicated ray tracing hardware – in Nvidia’s terminology, RT cores – since its previous-generation Turing GPUs. (New Ampere cards like the RTX A6000 use the second generation of the RT core technology.)

To take advantage of those hardware cores, software packages have to access them through a graphics API. At present, two are widely used in DCC applications: DXR (DirectX Raytracing), which is supported by both AMD and Nvidia GPUs, and Nvidia’s own proprietary OptiX API. DXR is used in game engines like Unreal Engine and Unity, and some real-time renderers, but OptiX is more widely used in offline GPU renderers.

I covered GPU core types and graphics APIs in detail in my 2020 group test, so see that review if you want a fuller discussion of the subject.

Prices are street prices from September 2021, and may have changed by the time you read this.

Testing procedure

For this review, my test system was a Xidax X-10 workstation powered by an AMD Threadripper 3990X CPU. You can find more details in my review of the Threadripper 3990X.

CPU: AMD Threadripper 3990X

Motherboard: MSI Creator TRX40

RAM: 64GB of 3,600MHz Corsair Dominator DDR4

Storage: 2TB Samsung 970 EVO Plus NVMe SSD / 1TB WD Black NVMe SSD / 4TB HGST 7200rpm HD

PSU: 1300W Seasonic Platinum

CPU cooler: Alphacool 360mm AIO liquid cooler

OS: Windows 10 Pro for Workstations

For testing, I used the following applications:

Viewport and editing performance

3ds Max 2021, Blender 2.93, Chaos Vantage 1.4.2, Fusion 360, Maya 2020, Modo 14.0v1, SolidWorks 2021, Substance Painter 2021.1.1, Unreal Engine 4.26, Unreal Engine 5 Early Access 2

GPU rendering

Blender 2.93 (using Cycles), Cinema 4D R22 (using Radeon ProRender), Indigo Renderer 4.0.64 (using Indigo Bench), KeyShot 9, Maverick Studio 2021.1, OctaneRender 2020.1.5 (using OctaneBench), Radeon ProRender for Maya 3.2, Radeon ProRender for 3ds Max 2.7.6, Redshift 3.0.33, SolidWorks 2021 Visualize, V-Ray 5 for 3ds Max (using V-Ray GPU)

Other benchmarks

Houdini 18.5 (using the Axiom GPU solver plugin), Metashape 1.7.4, Premiere Pro 2021

Synthetic benchmarks

3DMark

In the viewport and editing benchmarks, the frame rate scores represent the figures attained when manipulating the 3D assets shown, averaged over five testing sessions to eliminate inconsistencies. In all of the rendering benchmarks, the CPU was disabled as a render device, so only the GPU was used for computing. Testing was performed on a single 32” 4K display, running at its native resolution of 3,840 x 2,160px at 60Hz.
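To make the averaging step concrete, here is a minimal sketch of how a viewport score could be derived from five sessions. The frame rates below are made-up placeholder values, not measurements from this review, and the function names are my own.

```python
from statistics import mean


def average_fps(session_scores):
    """Average the per-session frame rates into a single benchmark score."""
    if not session_scores:
        raise ValueError("need at least one session score")
    return mean(session_scores)


def fps_spread(session_scores):
    """Gap between the best and worst session: a rough consistency check."""
    return max(session_scores) - min(session_scores)


# Hypothetical frame rates from five sessions with the same scene and GPU.
sessions = [61.2, 59.8, 60.5, 62.0, 60.0]

score = average_fps(sessions)
spread = fps_spread(sessions)
print(f"Reported score: {score:.1f} fps (spread across sessions: {spread:.1f} fps)")
```

Averaging over several sessions smooths out one-off variations such as background processes or thermal state, which is why a single run is rarely a reliable figure.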

Benchmark results

Viewport and editing performance

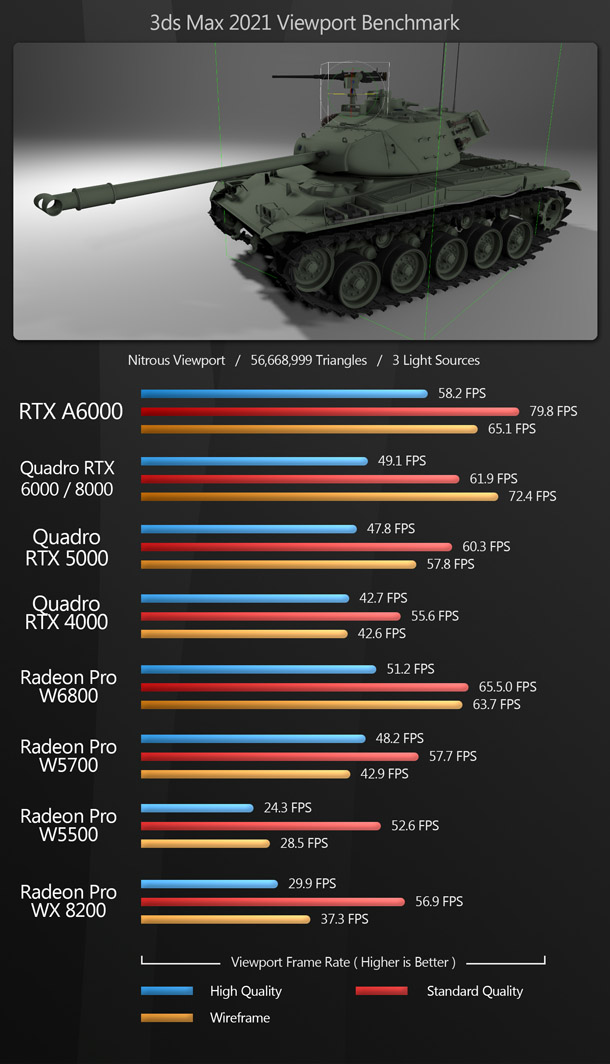

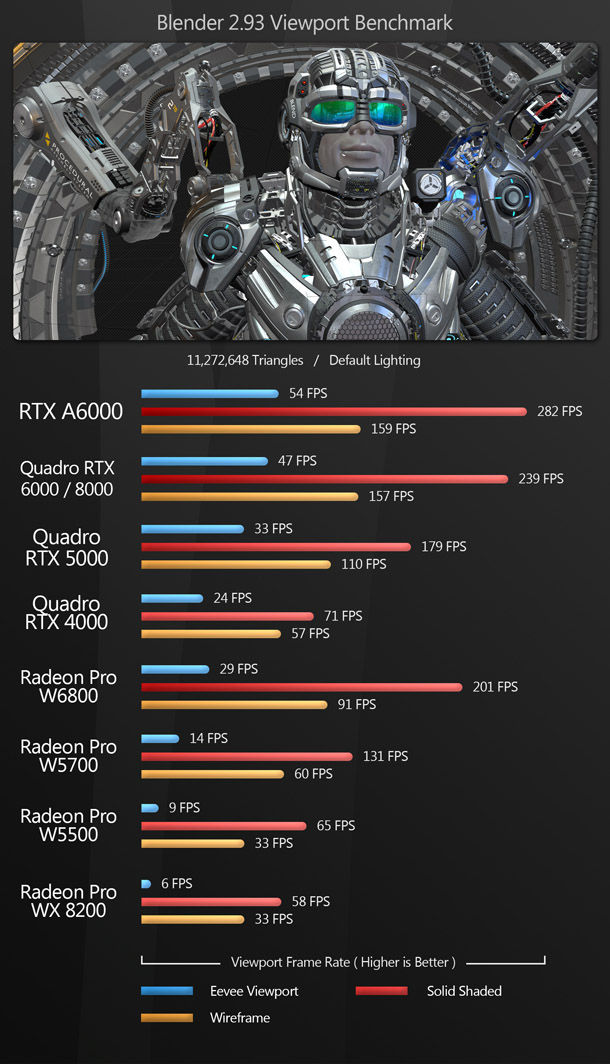

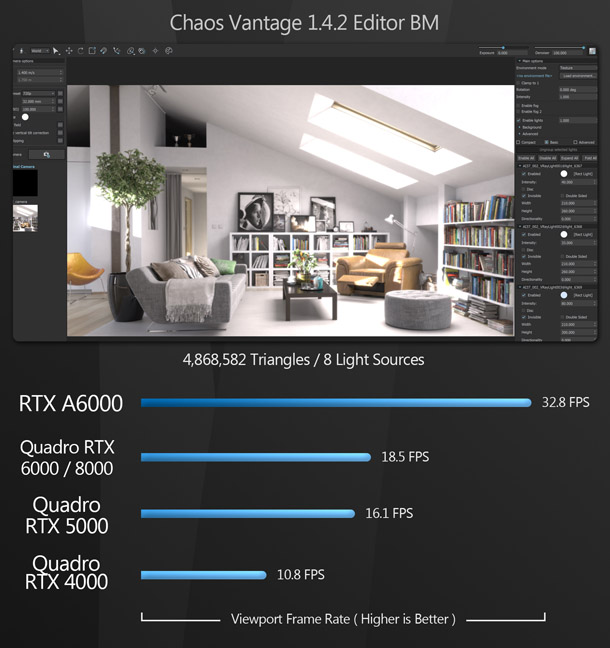

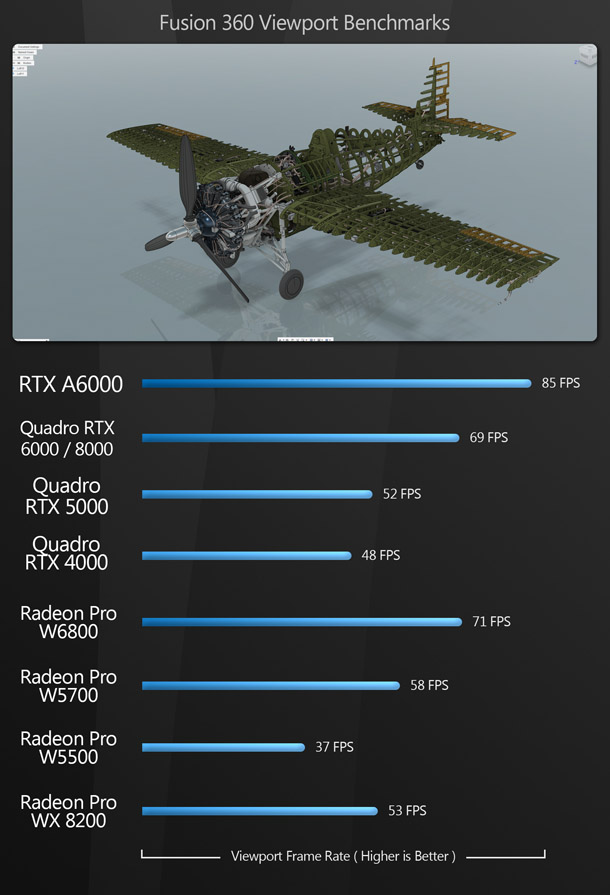

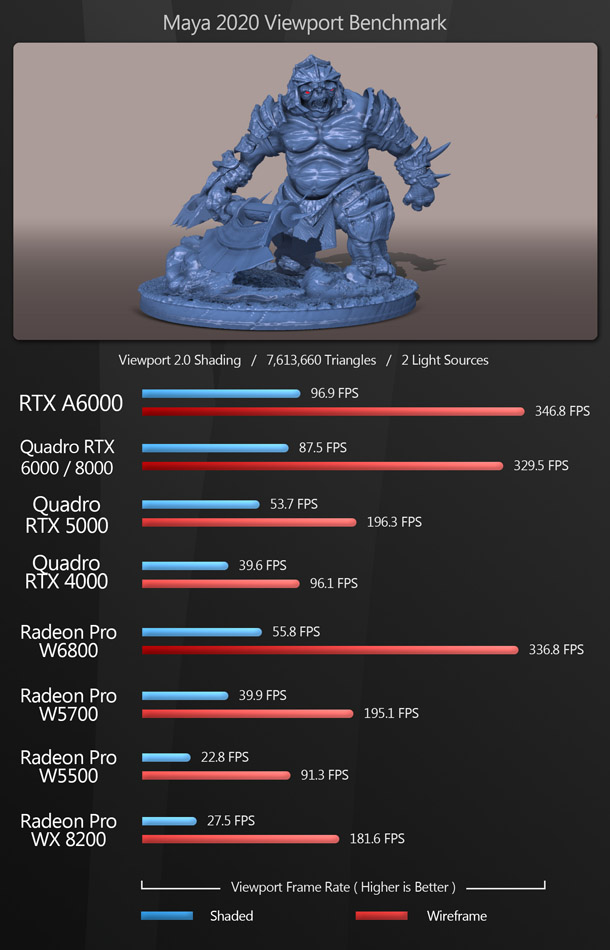

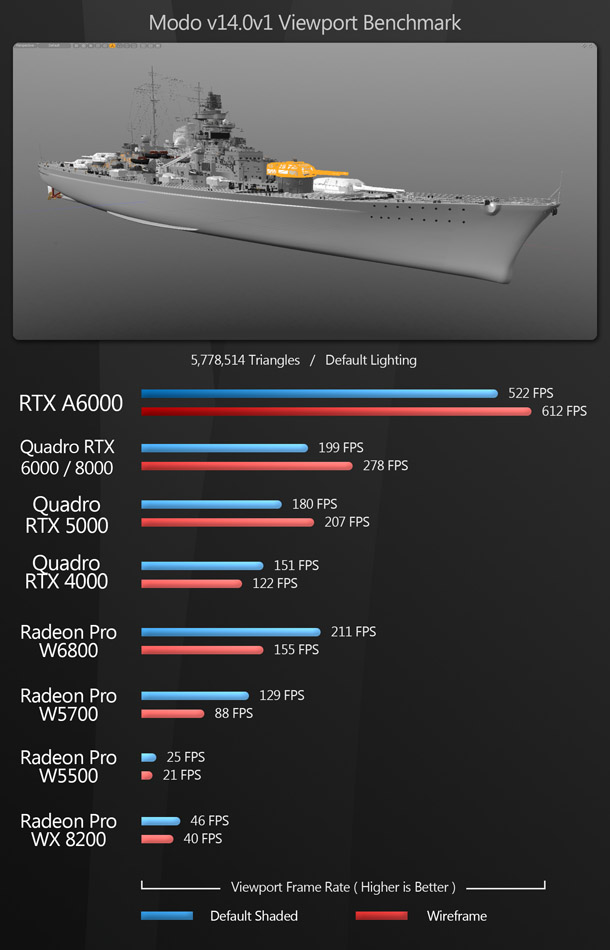

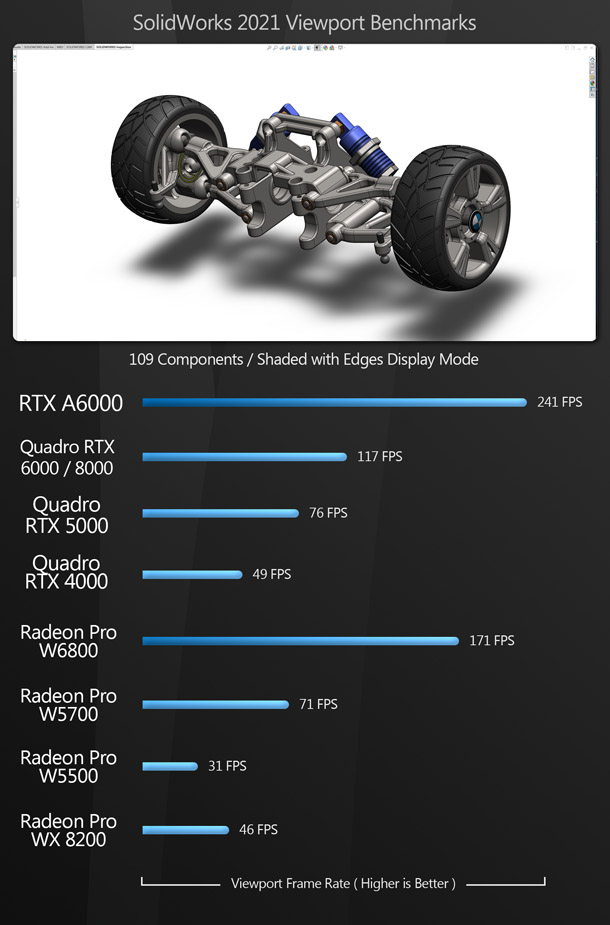

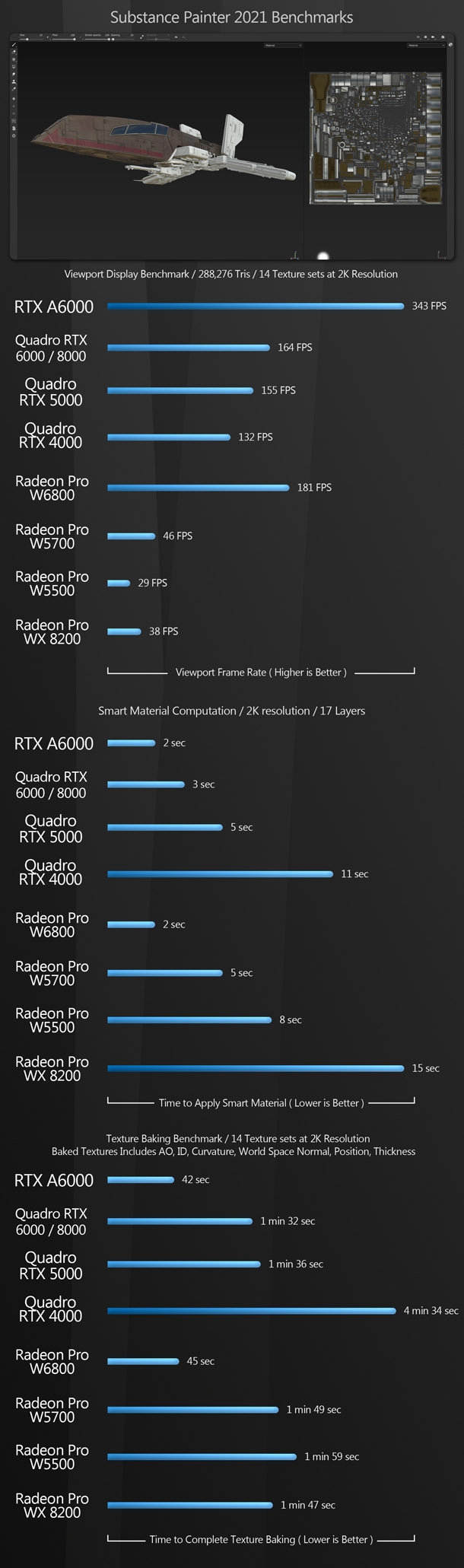

The viewport benchmarks include many of the key DCC applications – both general-purpose 3D software like 3ds Max, Blender and Maya, and more specialist tools like Substance Painter – plus CAD package SolidWorks and game engine and real-time renderer Unreal Engine. Real-time renderer Chaos Vantage uses Nvidia’s OptiX API, so it only ran on the Nvidia cards.

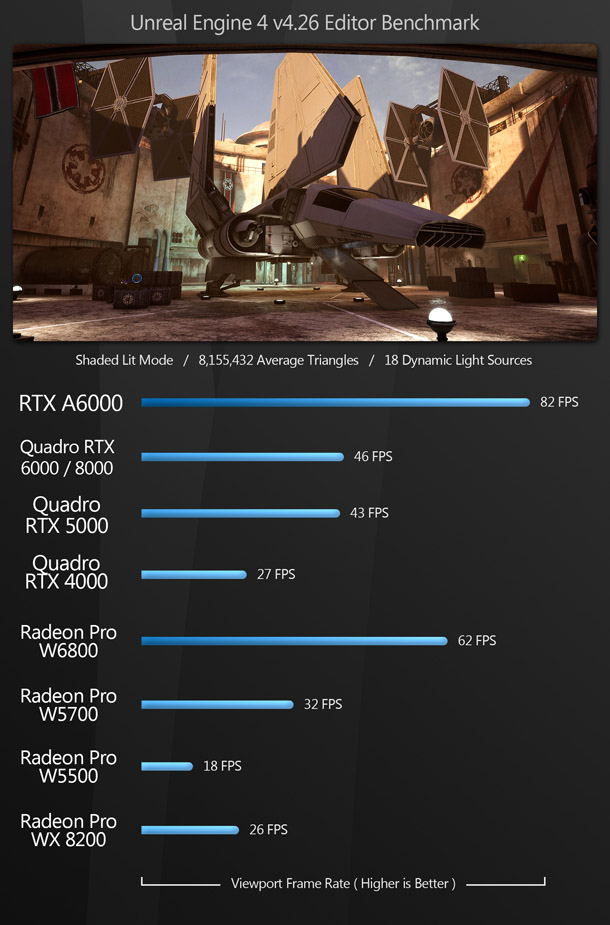

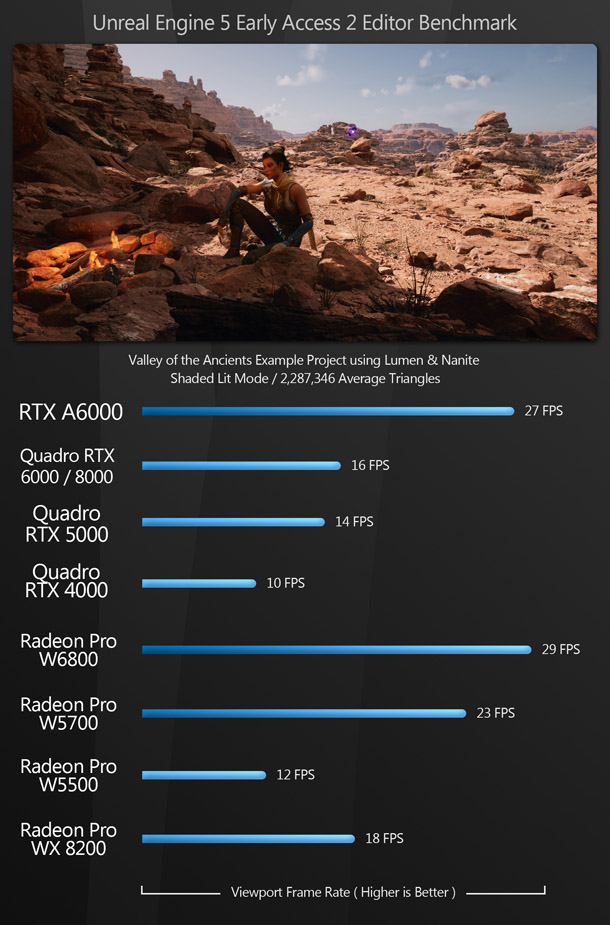

The Nvidia RTX A6000 takes first place in all of the viewport benchmarks, with one exception: the Unreal Engine 5 Valley of the Ancients demo scene, where the Radeon Pro W6800 takes a small lead.

In the other benchmarks, the W6800 follows a similar pattern to the older Radeon Pro cards. 3ds Max, Fusion 360, SolidWorks and Unreal Engine are more AMD-friendly, and here the W6800 comes much closer to the performance of the RTX A6000; the other apps are more Nvidia-friendly, with the A6000 taking a commanding lead.

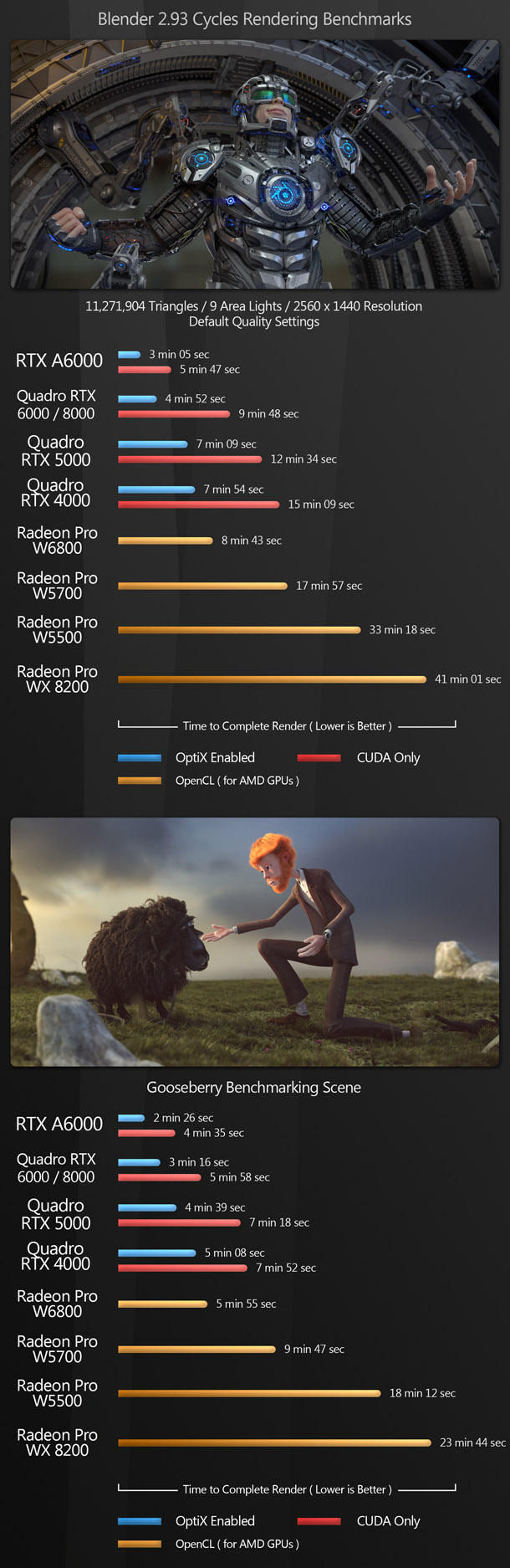

GPU rendering

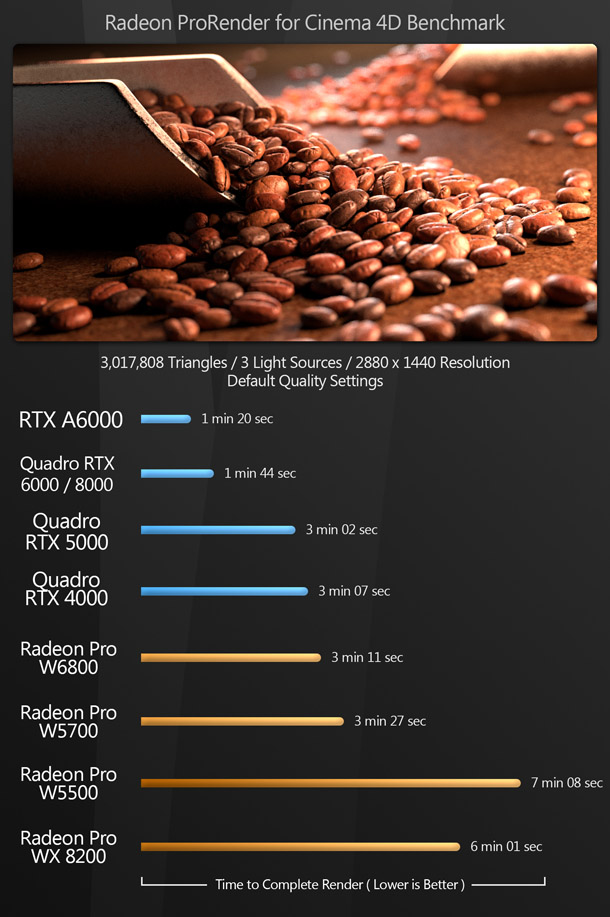

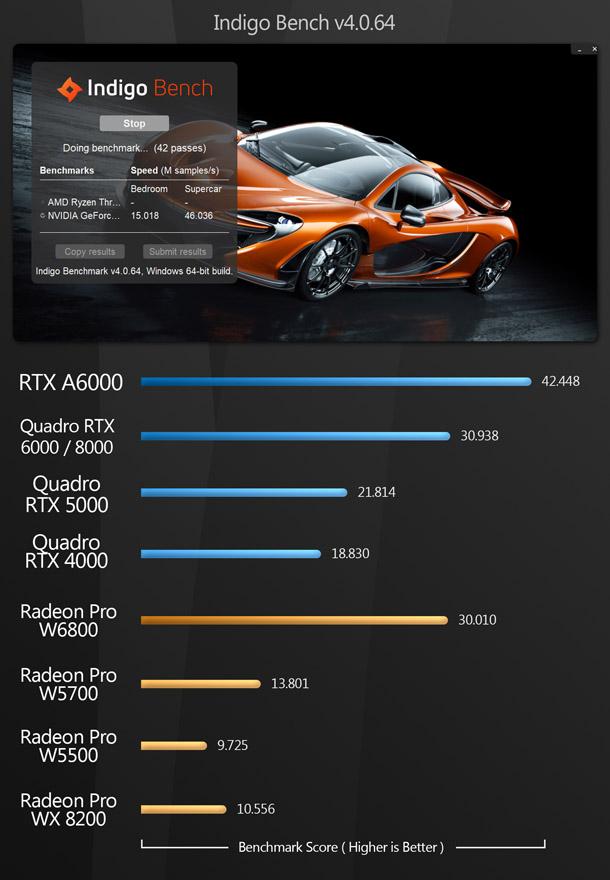

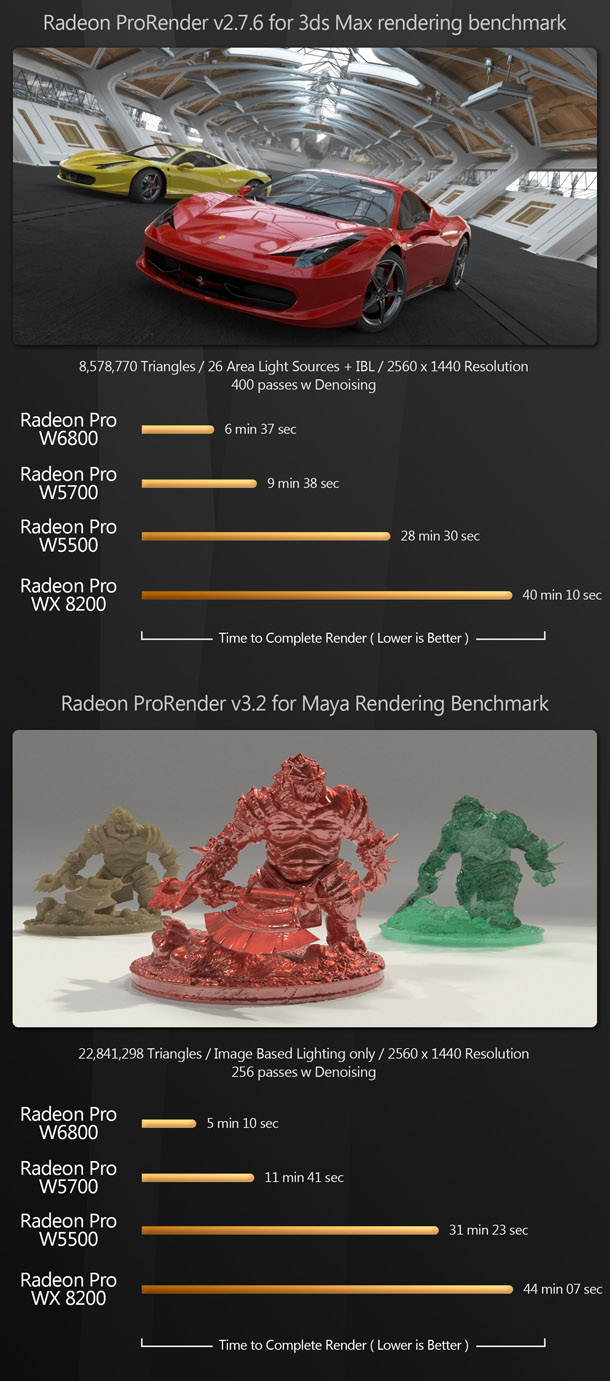

The next group of benchmarks features a range of GPU rendering applications. Since most offline GPU renderers currently only support Nvidia’s CUDA and OptiX APIs, many of these tests could only be run on the Nvidia cards. The exceptions are applications that support the OpenCL open standard, which could be run on both AMD and Nvidia cards, and Radeon ProRender, AMD’s own GPU renderer, which I tested only on AMD cards.

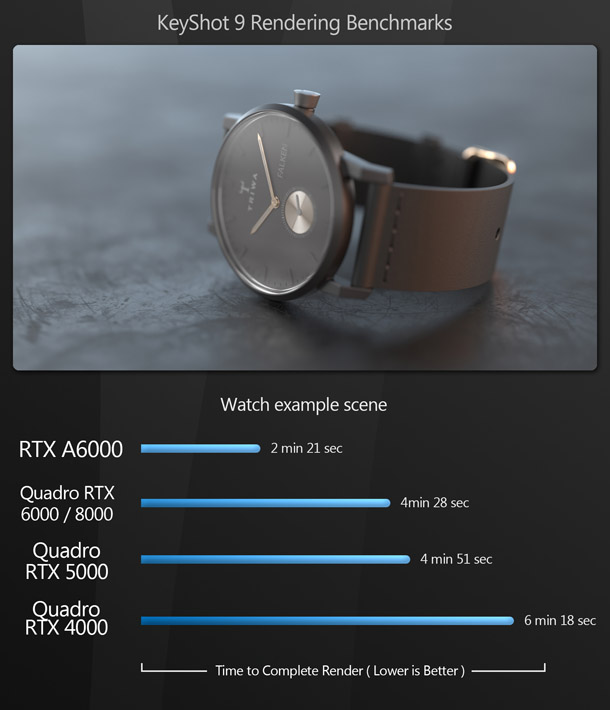

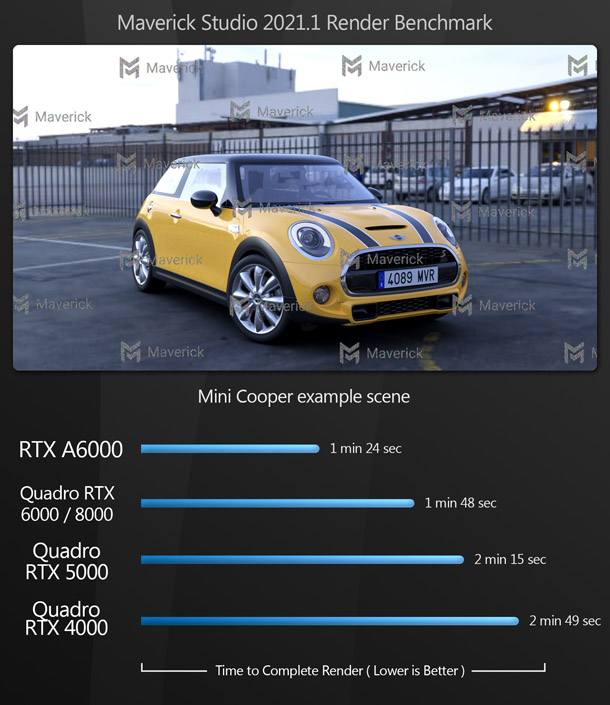

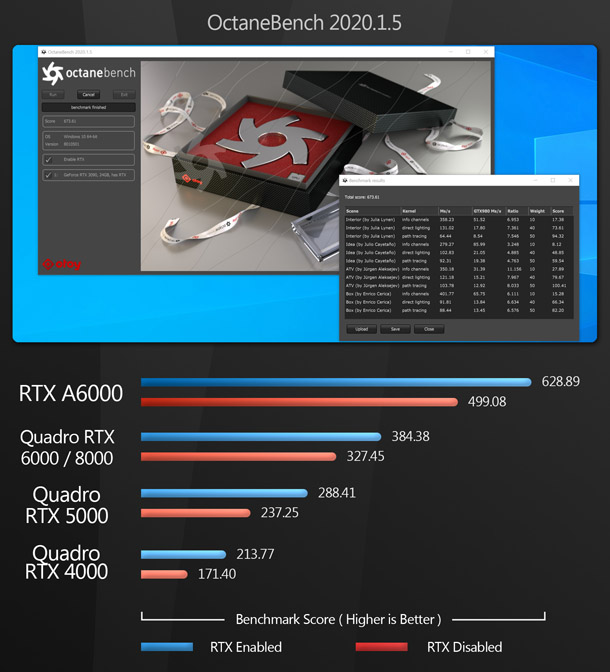

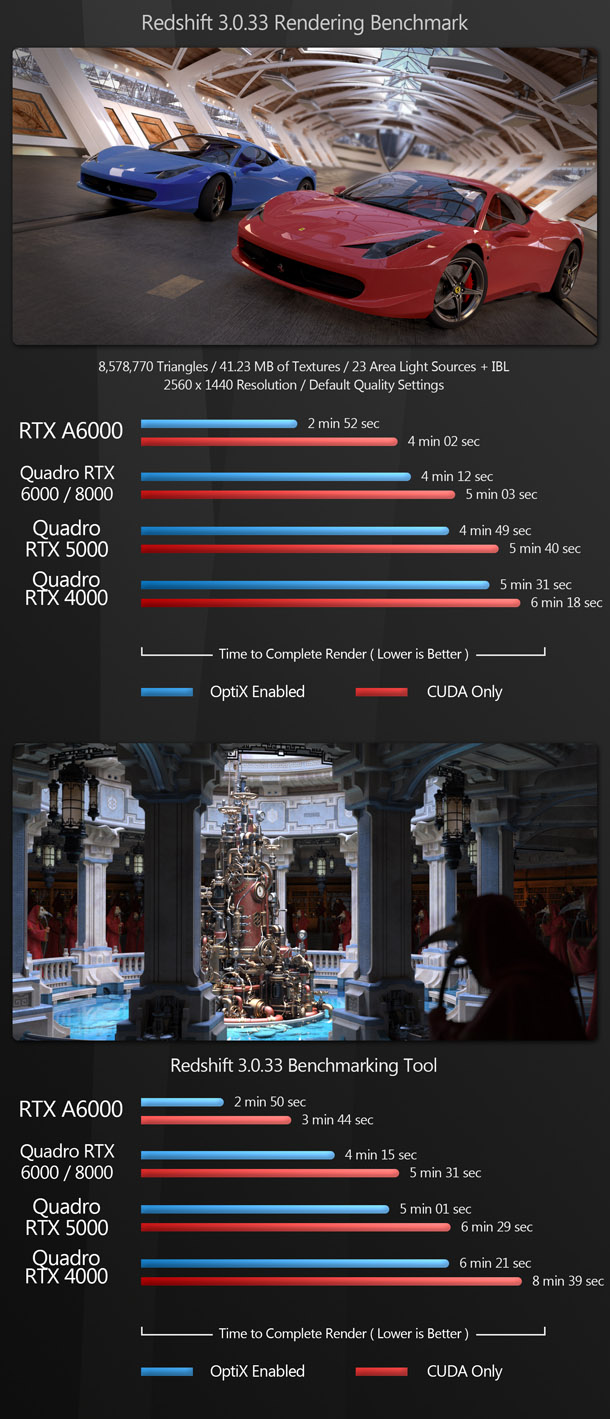

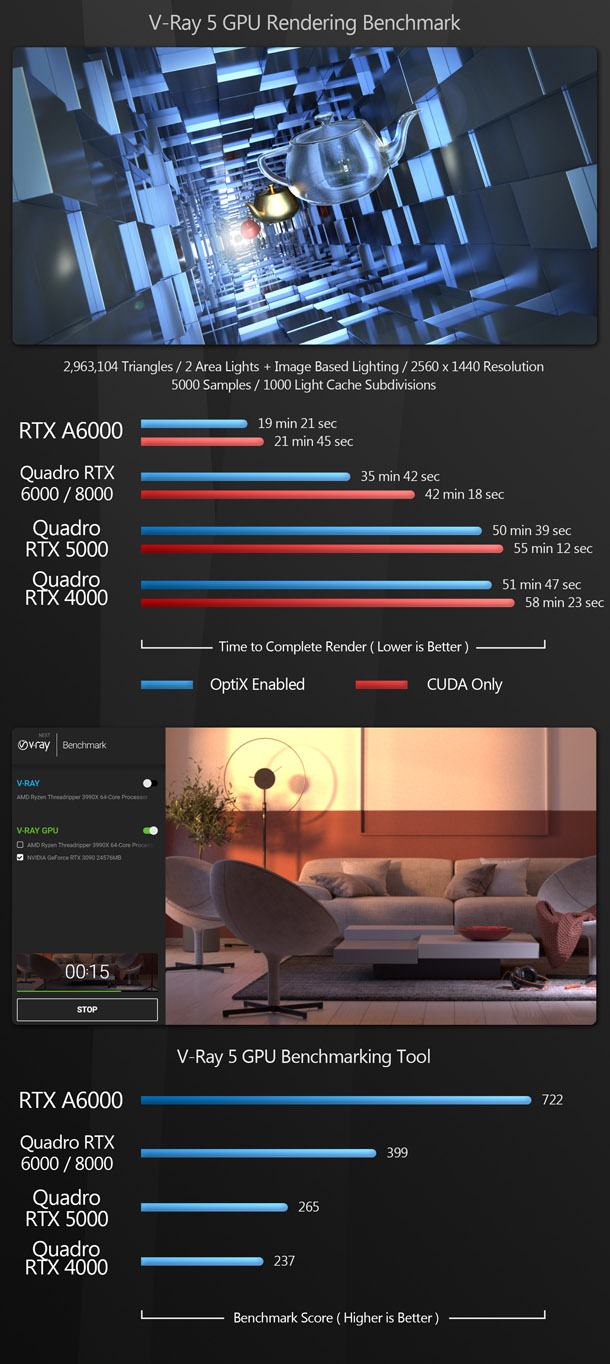

The results can be broken down into three categories. First, the Nvidia-only renderers: KeyShot, Maverick Studio, OctaneRender, Redshift and V-Ray. Here, the RTX A6000 takes the top spot by a huge margin. Its fully unlocked GA102 GPU and 48GB of RAM give it a massive lead over the previous-gen Turing GPUs.

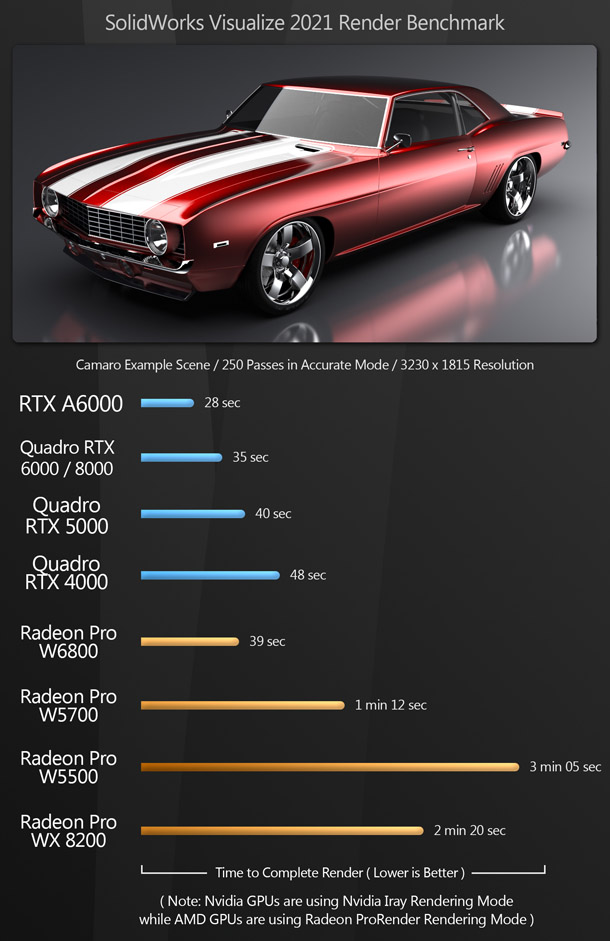

Next, we have the all-inclusive render group where the Nvidia GPUs using CUDA/OptiX square off with the AMD GPUs using OpenCL. This group comprises Blender (using the Cycles renderer), Cinema 4D (using Radeon ProRender), Indigo Renderer (using Indigo Bench) and SolidWorks Visualize. In this category, the Nvidia RTX A6000 takes the top spot among all of the tested GPUs, but the relative performance of the Radeon Pro W6800 varies. In Blender and Cinema 4D, it falls far behind the RTX A6000, but it comes much closer in SolidWorks Visualize and Indigo Bench.

Lastly, in the AMD-only category, the Radeon Pro W6800 takes a massive lead over the previous-gen AMD GPUs in the Radeon ProRender benchmark.

Other benchmarks

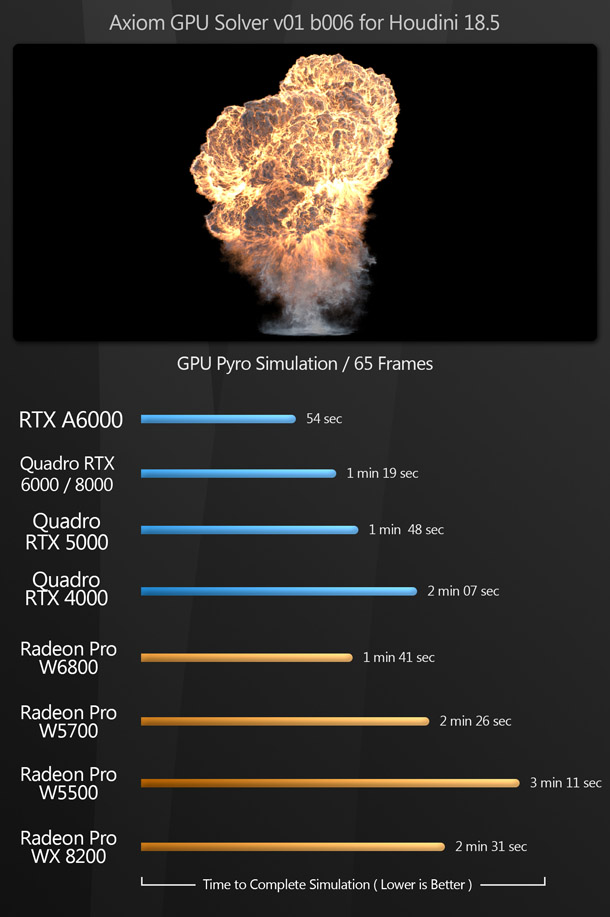

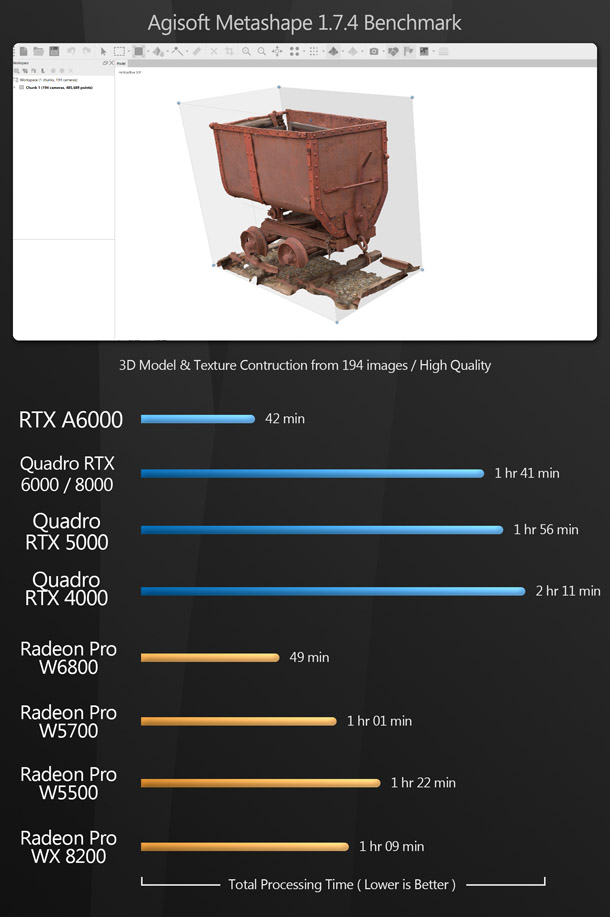

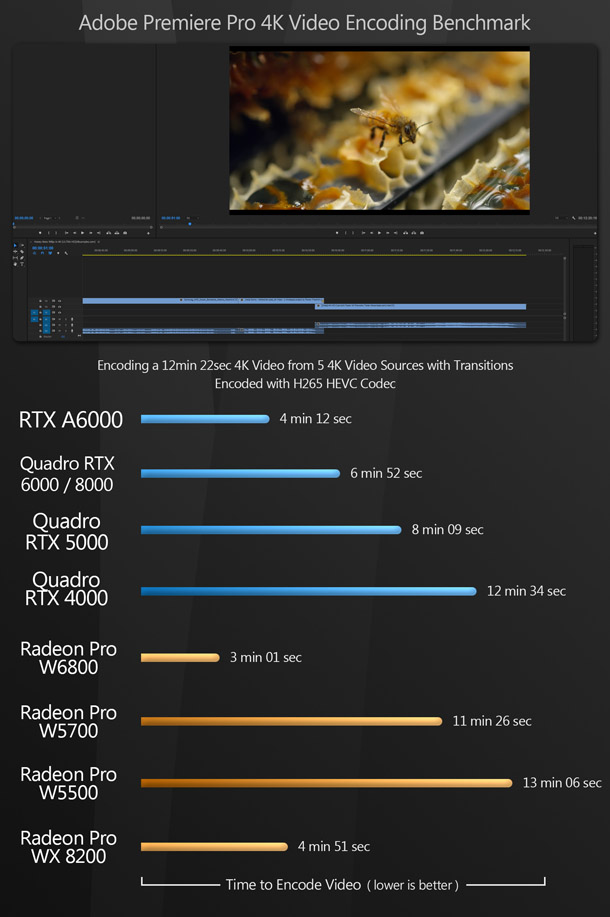

The next set of benchmarks is designed to test the use of the GPU for more specialist tasks. First off, we have a pyrotechnic simulation in Houdini 18.5 using the GPU-based third-party solver Axiom. Next, we have photogrammetry application Metashape, which uses the GPU for image processing, and lastly, Premiere Pro, which uses the GPU for video encoding.

(A note on Premiere Pro: in my previous reviews, I had hardware encoding enabled in Premiere, but some of the preferences were not set up properly, so GPU acceleration was not being used to its full extent. That has now been corrected, so this and future reviews should reflect the contribution of the GPU more accurately.)

The additional benchmarks present a mixed bag of results. With Axiom, the RTX A6000 takes the top spot, with the Radeon Pro W6800 falling a fair way back, coming in behind some of the previous-generation Nvidia Turing GPUs. In Metashape, the RTX A6000 takes the top spot again, but the Radeon Pro W6800 runs it close. In Premiere Pro, things flip, and the Radeon Pro W6800 takes the lead over the RTX A6000.

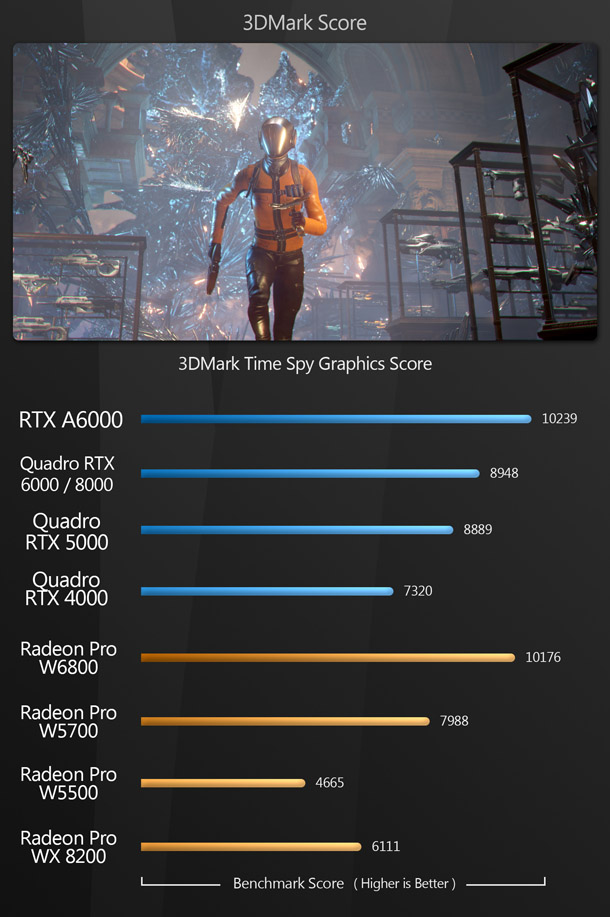

Synthetic benchmarks

Finally, we have the synthetic benchmark 3DMark. Synthetic benchmarks don’t accurately predict how a GPU will perform in production, but they do provide a way to compare the cards on test with older models, since scores for a wide range of GPUs are available online.

The results here are as expected: the RTX A6000 takes the top spot, followed by the Radeon Pro W6800, and then all the previous-generation Quadro and Radeon Pro GPUs.

Other considerations

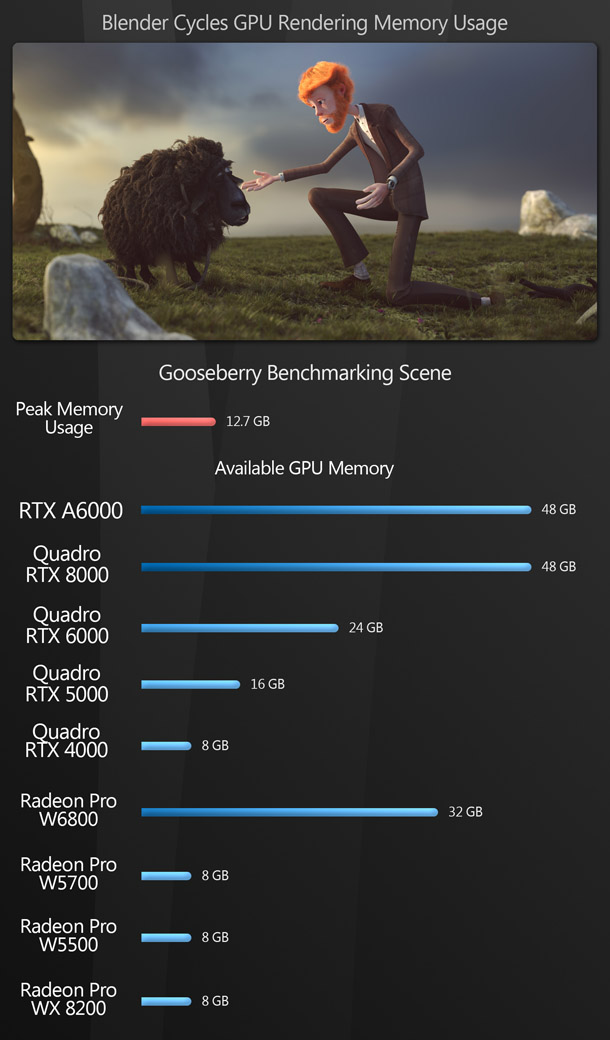

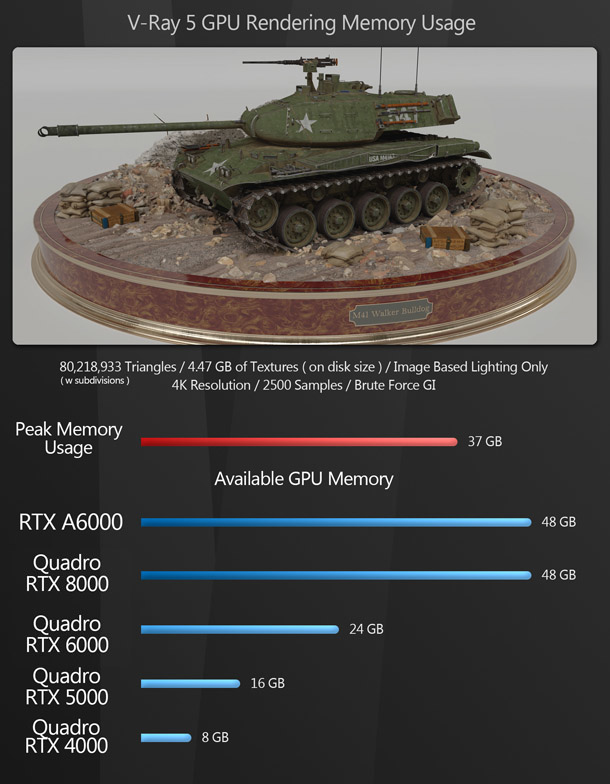

GPU memory

Although I have talked about the importance of available GPU memory for DCC tasks in previous reviews, I think it’s worth going over again in detail here.

There are several features that differentiate consumer GPUs – Nvidia’s GeForce and AMD’s Radeon RX product ranges – from their professional counterparts, but the biggest by far is memory capacity. This is especially true of the two new GPUs in this test. The RTX A6000 has a massive 48GB of on-board VRAM: double that of the nearest consumer card, the GeForce RTX 3090, and four or more times higher than mainstream GeForce GPUs. The same is true of the Radeon Pro W6800: its 32GB of VRAM is double that of the nearest Radeon RX consumer card.

Many DCC tasks, particularly GPU rendering, use more of a GPU’s VRAM than gaming, with the possible exception of 8K gaming, which is barely in its infancy. Although many GPU renderers can work ‘out of core’ by paging data out to system RAM once the VRAM fills up, in most cases, this incurs a pretty significant performance penalty. Some software simply crashes if a task requires more VRAM than is available.

So how much GPU memory do you need for common tasks? Let’s look at a few examples, beginning with offline rendering.

In both these scenes, the memory used exceeds the capacity of most consumer GPUs. When I ran the V-Ray 5 tank scene on a 24GB GeForce RTX 3090, the need to page out to system RAM only incurred a small speed penalty, but it did seriously affect stability. If I made any edits to the scene, then attempted to render it during the same session, the render would crash every time. The only way to complete it reliably was to close 3ds Max, reload the scene, then render it without making any changes.

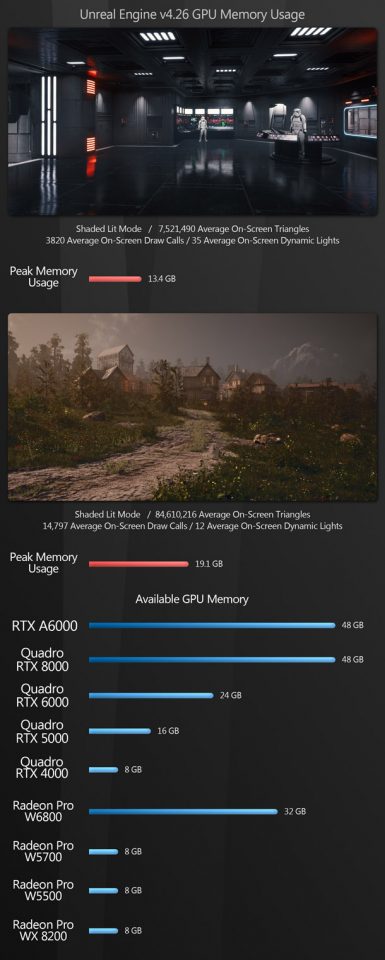

Another task where having a lot of memory can be very important is real-time rendering. With game development getting more and more complex, and with many other industries turning to tools like Unreal Engine and Unity, larger projects can quickly exceed the memory capacity of consumer GPUs. Here are a couple of examples of geometry- and texture-heavy scenes in UE4.

In both cases, loading the entire level in the editor requires more VRAM than is available on any current GeForce GPU, aside from the top-of-the-range GeForce RTX 3090. Of AMD’s gaming cards, the Radeon RX 6800, 6800 XT and 6900 XT would all be able to handle the Dark Forces scene, but the medieval open world scene would be beyond them. However, both projects fit easily within available VRAM on both the Nvidia RTX A6000 and AMD Radeon Pro W6800.
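The reasoning here is a simple capacity check, which can be sketched as follows. The VRAM capacities are the ones quoted in this review; the scene requirements and the optional headroom value are hypothetical figures for illustration, not measurements from the test scenes.

```python
# VRAM capacities in GB, as quoted in this review.
GPU_VRAM_GB = {
    "Nvidia RTX A6000": 48,
    "AMD Radeon Pro W6800": 32,
    "Nvidia GeForce RTX 3090": 24,
    "AMD Radeon RX 6900 XT": 16,
}


def gpus_that_fit(required_gb, headroom_gb=0.0):
    """Return the GPUs whose VRAM covers the scene plus optional headroom.

    headroom_gb is a hypothetical allowance for the OS, the display
    and other applications sharing the card.
    """
    needed = required_gb + headroom_gb
    return [name for name, capacity in GPU_VRAM_GB.items() if capacity >= needed]


# Hypothetical scene requirements, for illustration only.
print(gpus_that_fit(14))                   # fits on every card listed
print(gpus_that_fit(20))                   # rules out the 16GB card
print(gpus_that_fit(30, headroom_gb=1.5))  # only the professional cards remain
```

In practice, the required figure is best read from the application or a GPU monitoring tool with the full level loaded, since it varies with texture streaming settings and editor overhead.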

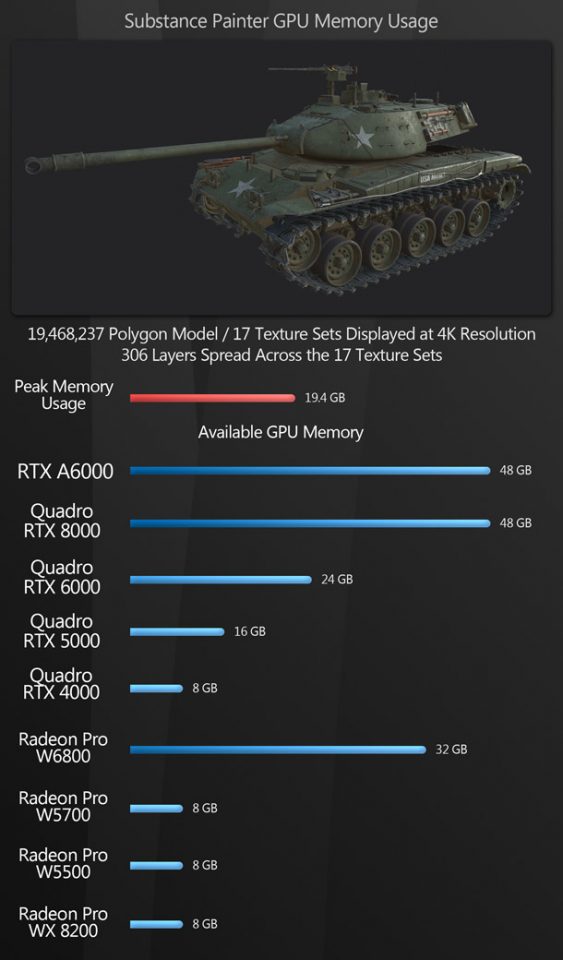

Lastly, I want to examine memory usage with another popular DCC application, Substance Painter. Painter is primarily used for games and real-time development, so many users don’t think of it as a particularly memory-hungry application, when in reality, it can be very memory-intensive, particularly if you work with multiple texture sets on a model, and use complex layers and smart materials.

Again, this test scene exceeds the memory capacity of any 8GB or 16GB GPU, which includes all of the consumer cards currently available, aside from the GeForce RTX 3090. It is also worth noting that Painter suffers from extreme slow-down once GPU memory is full.

Drivers and other software

In addition to their higher memory capacity, professional GPUs come with a suite of software tools meant to aid productivity. I wouldn’t classify them as a major selling point for workstation cards, since there are third-party applications that do the same things, but they’re a nice addition, and since they are written by AMD and Nvidia themselves, they work well with the hardware they are designed for.

The toolset is quite similar for both Nvidia and AMD, with both offering driver profiles, a quick-launch menu for most off-the-shelf DCC applications, and desktop video capture at 4K resolution. I tested the video capture utilities briefly, and they work quite well. Video quality and file sizes are similar for both, and the in-app overlay features are neat. I could see them being useful if you do a lot of remote collaboration, as the broadcast and sharing features are a little better integrated into the desktop than in third-party applications like Microsoft Teams, Google Meet or Slack.

AMD also goes a little further by providing built-in performance and temperature monitoring, fan speed controls, and a feature called Image Boost. This essentially renders your desktop larger than the native resolution for your display, assuming that it runs at less than 4K, then scales the image down to that native resolution. This is neat in theory, but personally, I don’t see much use for it: most DCC professionals today work on at least 1440p displays, which is a high enough resolution for most tasks, and using Image Boost negates the performance benefits of having a display resolution lower than 4K.
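To put a number on that performance cost, here is a rough sketch of the pixel arithmetic. It assumes, purely for illustration, that a 1440p desktop is rendered at 4K before being scaled down; the internal resolution Image Boost actually uses is not something I have confirmed.

```python
def pixel_count(width, height):
    """Total number of pixels in a frame."""
    return width * height


def render_cost_ratio(render_res, display_res):
    """How many times more pixels are rendered than are finally displayed."""
    return pixel_count(*render_res) / pixel_count(*display_res)


# Assumption: a 2,560 x 1,440 display with the desktop rendered at 3,840 x 2,160.
display = (2560, 1440)
boosted = (3840, 2160)

ratio = render_cost_ratio(boosted, display)
print(f"Pixels rendered per displayed pixel: {ratio:.2f}x")
```

Under that assumption, the GPU is shading as many pixels as it would on a native 4K display, which is why the lower-resolution monitor no longer brings any performance benefit.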

One last software feature of the Radeon Pro W6800 is AMD’s Viewport Boost. This dynamically reduces the resolution of the viewport when objects within it are moving quickly, helping to increase frame rates. It is currently supported in 3ds Max, Revit, Twinmotion and Unreal Engine 4. (According to AMD, it only works with packaged Unreal Engine projects, but in my tests I found that it did seem to have an impact when working inside the Unreal Editor itself.)

In my 3ds Max and Unreal Engine tests, I had Viewport Boost set to Always On, since there was no perceivable decrease in visual quality while using it, and in those benchmarks, the viewport performance of the Radeon Pro W6800 came much closer to that of the RTX A6000. With Unreal Engine, Viewport Boost was vastly superior to Unreal Engine 4’s native resolution scaling, and on a par with Unreal Engine 5’s Temporal Super Resolution. So it seems to work well, at least on the two applications I tested it with. Hopefully, we will see the list of supported software grow in the near future.
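For readers unfamiliar with dynamic resolution scaling, the general idea can be sketched as below. This is emphatically not AMD's algorithm, which is not public as far as I know: the motion metric, thresholds and linear ramp are all hypothetical choices made only to illustrate the technique.

```python
def viewport_scale(motion_px_per_frame, threshold=20.0, max_motion=200.0, min_scale=0.5):
    """Pick a resolution scale factor from how fast the view is moving.

    Hypothetical policy: full resolution below `threshold`, a linear ramp
    down to `min_scale` at `max_motion`, and `min_scale` beyond that.
    """
    if motion_px_per_frame <= threshold:
        return 1.0
    if motion_px_per_frame >= max_motion:
        return min_scale
    t = (motion_px_per_frame - threshold) / (max_motion - threshold)
    return 1.0 - t * (1.0 - min_scale)


def scaled_resolution(width, height, scale):
    """Apply the scale factor to a viewport size, never dropping below 1px."""
    return max(1, round(width * scale)), max(1, round(height * scale))


# A 4K viewport while the camera is still, orbiting, and moving very fast.
for motion in (0.0, 110.0, 500.0):
    scale = viewport_scale(motion)
    print(motion, scale, scaled_resolution(3840, 2160, scale))
```

The appeal of the approach is that resolution is only reduced while the image is in motion, when the loss of detail is hardest to see, and returns to native as soon as the view settles.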

Power consumption and cooling

I talked about cooling briefly at the beginning of this review, but I want to dive into the subject a little deeper. As I mentioned before, both the Nvidia RTX A6000 and AMD Radeon Pro W6800 ditch the multi-axial fan design of their consumer counterparts in favour of a single blower fan. Although this may work better in multi-GPU set-ups, in single-GPU systems, axial designs seem to do a much better job.

My best comparison is between Nvidia’s professional RTX A6000 card and its consumer GeForce RTX 3090. Both have almost identical GA102 GPUs, and while the A6000 has twice the memory capacity, the 3090 has more power-hungry GDDR6X memory, so in theory, they should generate similar amounts of heat.

However, under heavy load, at stock fan speeds, the A6000 can hit temperatures of 84-85°C, whereas the 3090 tops out at around 71°C. At 65% fan speed, the 3090 falls to 63-65°C, and the A6000 to 74°C, but the A6000 is quite a bit louder.

The situation is similar for AMD’s Radeon Pro W6800, where running the fan at stock speeds seems to be barely adequate, with temperatures reaching 82°C under heavy load. Turning the fan up to 2.5x stock speed reduces temperatures to around 65°C, but as with the RTX A6000, fan noise becomes an issue. You can have your professional GPUs cool, or you can have them quiet, but unfortunately not both at once.

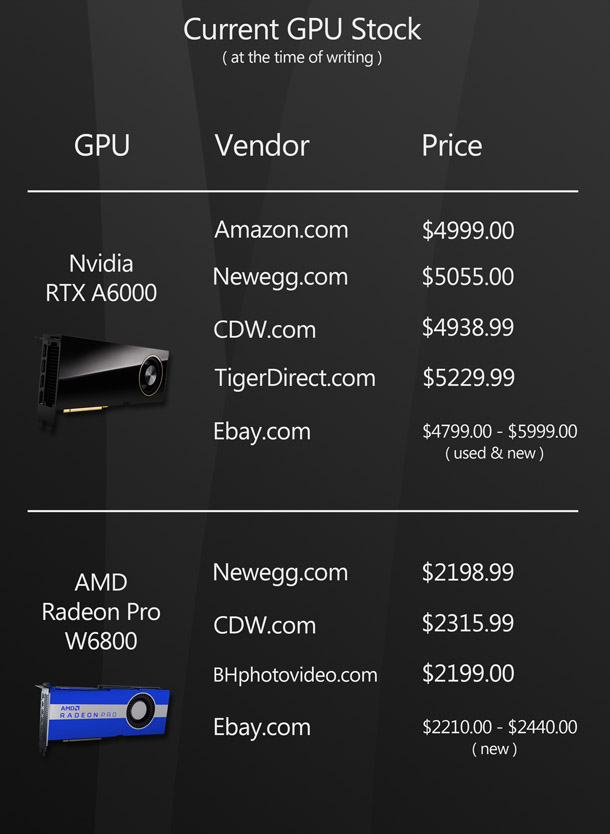

Prices correct as of September 2021.

Price

Lastly, let’s talk about price. I try not to place too much stress on this when it comes to professional GPUs: artists who are used to consumer hardware tend to fall out of their chairs when they see the prices of workstation cards, but in production, the benefits of professional cards do often justify the cost.

The Nvidia RTX A6000 has an MSRP of $4,649: a pretty good figure, considering that the previous-generation Quadro RTX 6000 and RTX 8000 had launch prices of $6,299 and $9,999. (A year later, Nvidia dropped the prices to $4,000 and $5,500.) However, when I was writing this review, I was unable to find any for sale at the MSRP, as the chart above illustrates. At least the percentage mark-up isn’t as high as it is with gaming cards, and the RTX A6000 did actually seem to be in stock with several retailers.

The AMD Radeon Pro W6800 has an MSRP of $2,249: a big jump over the previous-generation W5700, which had a launch price of $799, albeit with only a quarter of the memory. If you’re looking to buy a W6800, you’re in a slightly better position than with the RTX A6000 (and a much better position than anyone hoping to buy a gaming GPU). At the time of writing, it is available at multiple retailers: at some for slightly less than the MSRP.
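One way to put these launch prices in context is to look at cost per gigabyte of VRAM. The short sketch below uses only the MSRPs and memory capacities quoted in this review; the metric itself is just my own illustration, and it ignores everything else that differs between the cards.

```python
# (launch price or MSRP in US dollars, VRAM in GB), as quoted in this review.
CARDS = {
    "Nvidia RTX A6000": (4649, 48),
    "Nvidia Quadro RTX 8000": (9999, 48),
    "Nvidia Quadro RTX 6000": (6299, 24),
    "AMD Radeon Pro W6800": (2249, 32),
    "AMD Radeon Pro W5700": (799, 8),
}


def price_per_gb(price_usd, vram_gb):
    """Launch price divided by memory capacity, in dollars per GB."""
    return price_usd / vram_gb


for name, (price, vram) in CARDS.items():
    print(f"{name}: ${price_per_gb(price, vram):.2f} per GB")
```

On this measure, the W6800 is cheaper per gigabyte than the W5700 it replaces, despite its much higher sticker price, and the A6000 costs less than half as much per gigabyte as either Quadro RTX card did at launch.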

Verdict

With all of the data presented, it’s time to sum up. First, let’s talk about the Nvidia RTX A6000.

As with the GeForce RTX 3090 that I reviewed earlier this year, the RTX A6000’s Ampere GA102 chip provides a huge performance improvement over Nvidia’s previous-generation Turing GPUs. It excelled in all of the tests I put it through, including viewport performance and general GPU compute tasks, and its 48GB of memory makes it especially well suited to GPU rendering and simulation.

I really only have two complaints. First, that the RTX A6000 uses GDDR6 memory instead of the higher-bandwidth GDDR6X. As I mentioned earlier, this doesn’t seem to have been an intentional decision on Nvidia’s part – large enough GDDR6X modules simply weren’t available when the card was designed – and in my tests, it doesn’t seem to make much difference for most DCC tasks.

So why even mention it? Remember that I said ‘most’ tasks. When comparing the RTX A6000 informally to the similarly specced GeForce RTX 3090, which does have GDDR6X memory, the lower memory bandwidth seemed to make a noticeable difference to performance in real-time applications like Unreal Engine 4 and 5, Unity, Lumberyard and Open 3D Engine.

My second complaint is that the RTX A6000, like other professional cards, still clings to the old single blower fan design, which is becoming less and less adequate as GPUs increase in speed. Professional GPUs need to evolve as gaming cards have, and instead rely on better case/rack designs to vent hot air.

However, despite these pretty minor gripes, the RTX A6000 is a monster of a GPU, providing a massive upgrade in performance over previous-generation cards, while maintaining a similar price point.

Next, let’s talk about the AMD Radeon Pro W6800. Like the RTX A6000, it is a significant upgrade over the highest-performing card from the previous generation, the Radeon Pro W5700. Although its frame buffer is not quite as large as the RTX A6000’s, at 32GB, it’s still pretty massive. It offers solid performance in all of my viewport tests, and with compute tasks that support OpenCL. It also comes with quite a robust software package, with a few more useful features than the Nvidia equivalent.

But what about GPU rendering? This leads me to my complaints about the Radeon Pro W6800. Nvidia dominates the offline GPU rendering market with its robust CUDA and OptiX APIs, while AMD continues to rely on OpenCL. Most popular GPU renderers don’t support OpenCL, and those that do also support CUDA or OptiX, so Nvidia cards tend to massively outperform their AMD counterparts.

While the new OpenCL 3.0 spec has now been finalised, I’m not aware of any major DCC applications that have adopted it. What AMD really needs to do is to develop a GPU compute API that is as powerful as CUDA and OptiX, then push hard to get software developers to adopt it. Dare to dream.

My next gripe isn’t really a problem with the W6800: more a ‘what could have been’. The Radeon Pro W6800 is AMD’s current top-of-the-line professional GPU. Yet despite this, it uses the same RDNA 2 Navi 21 chip as the consumer Radeon RX 6800, when AMD has two variants – used in the Radeon RX 6800 XT and 6900 XT – with more cores, including more ray tracing cores.

I think this is a serious missed opportunity on AMD’s part. Using a higher-specced chip would have pushed the performance of the Radeon Pro W6800 much closer to that of the Nvidia RTX A6000. A card with the Navi 21 GPU from the Radeon RX 6900 XT, 32GB of RAM and AMD’s Viewport Boost technology would have been quite a proposition.

The take-home message

So which one should you buy? It comes down to your workload, your project complexity and your budget. The Nvidia RTX A6000 is a monster of a GPU, but for a lot of workloads, it’s overkill. The 48GB frame buffer is simply unnecessary for all but the most complex modelling, texturing and scene assembly tasks. For example, a 60-million-polygon 3ds Max scene with a lot of 8K textures only requires 9GB of VRAM, while a 54-million-polygon Maya scene with 4K textures uses a little over 8GB, although there are situations – particularly in visual effects and feature animation – where more memory will be required.

Rendering is where the RTX A6000 really shines. It has a massively powerful GA102 GPU, with dedicated ray tracing cores, and its 48GB of VRAM enables it to process extremely complex scenes without having to page data out to system RAM. Although most movies and broadcast series still make use of huge farms of CPU-based render machines for final output, GPU rendering can be extremely beneficial for iterative previews, so TDs, lighters and shader artists could all benefit greatly from the power of the RTX A6000. In addition, more and more architectural and product visualisation projects are now being rendered on the GPU.

The Radeon Pro W6800, while powerful, is in an odd position. It’s a good GPU from a hardware perspective, but the lack of a good API for GPU rendering makes it hard to recommend as a general-purpose card, although there are specific tasks, and specific applications, with which it excels.

If you need solid performance with 3ds Max, are doing a lot of photogrammetry processing with Metashape, or are using your GPU primarily for video editing and encoding in Premiere Pro, the Radeon Pro W6800 may be a good fit. I have also heard that AMD GPUs provide good viewport performance in many CAD applications, and while that isn’t something I’ve tested extensively, the W6800 did well in the SolidWorks test. But if you’re looking for a general-purpose GPU that performs well across all DCC tasks, I would have to recommend the Nvidia RTX A-series cards, with the A6000 being top of the food chain.

There is one final caveat, and it applies equally to Nvidia and AMD. I don’t normally talk much about consumer GPUs in reviews of professional cards, but this time, I feel I must. The one area of DCC work where I would not recommend either of the new professional GPUs is real-time development.

Most of my recent professional work involves Unreal Engine, and here I would recommend the current top-of-the-range gaming cards – the AMD Radeon RX 6900 XT and the Nvidia GeForce RTX 3090 – over their professional counterparts. The 6900 XT has a more powerful GPU than the W6800, and the RTX 3090 has faster memory than the RTX A6000, giving both cards higher frame rates in Unreal Engine 4 and 5, while their memory capacity (16GB for the 6900 XT, 24GB for the RTX 3090) is adequate for all but the most demanding real-time projects. However, I would recommend the professional GPUs over any gaming GPU with less than 12GB of RAM, since that can become a bottleneck on larger scenes.

Lastly, I just wanted to say thanks for sharing your time with us. My goal is to try to address hardware questions as they pertain specifically to digital art and content creation, and I hope that this review has been helpful and informative.

Links

Read more about the Radeon Pro W6000 Series GPUs on AMD’s website

Read more about the RTX A Series and Quadro RTX GPUs on Nvidia’s website

About the reviewer

Jason Lewis is Senior Environment Artist at Survios and CG Channel’s regular reviewer. You can see more of his work in his ArtStation gallery. Contact him at jason [at] cgchannel [dot] com

Acknowledgements

I would like to give special thanks to the following people for their assistance in bringing you this review:

Sean Kilbride of Nvidia

Kasia Johnston of Nvidia

Emily Pallack of Edelman

Cole Hagedorn of Edelman

Stephen G Wells

Adam Hernandez

Thad Clevenger